Build applications written in NVIDIA® CUDA™ code for OpenCL™ 1.2 devices.

- Compile using `cocl`

- link using `-lcocl -lOpenCL`

- at runtime, loads `libOpenCL.so`

- write a CUDA sourcecode file, or find an existing one

- here's a simple example: cuda_sample.cu

- Run `cocl` to compile it:

$ cocl cuda_sample.cu

...

... (bunch of compily stuff) ...

...

./cuda_sample.cu compiled into ./cuda_sample

Run:

$ ./cuda_sample

Using Intel , OpenCL platform: Intel Gen OCL Driver

Using OpenCL device: Intel(R) HD Graphics 5500 BroadWell U-Processor GT2

hostFloats[2] 123

hostFloats[2] 222

hostFloats[2] 444

If you want, you can compile in two steps:

cocl -c cuda_sample.cu

g++ -o cuda_sample cuda_sample.o -lcocl -lOpenCL

Result is the same:

$ ./cuda_sample

Using Intel , OpenCL platform: Intel Gen OCL Driver

Using OpenCL device: Intel(R) HD Graphics 5500 BroadWell U-Processor GT2

hostFloats[2] 123

hostFloats[2] 222

hostFloats[2] 444

| Option | Description |
|---|---|
| `-I` | provide an include directory, eg `-I /usr/local/eigen` |
| `-o` | output filepath, eg `-o foo.o` |
| `-c` | compile to `.o` file; don't link |

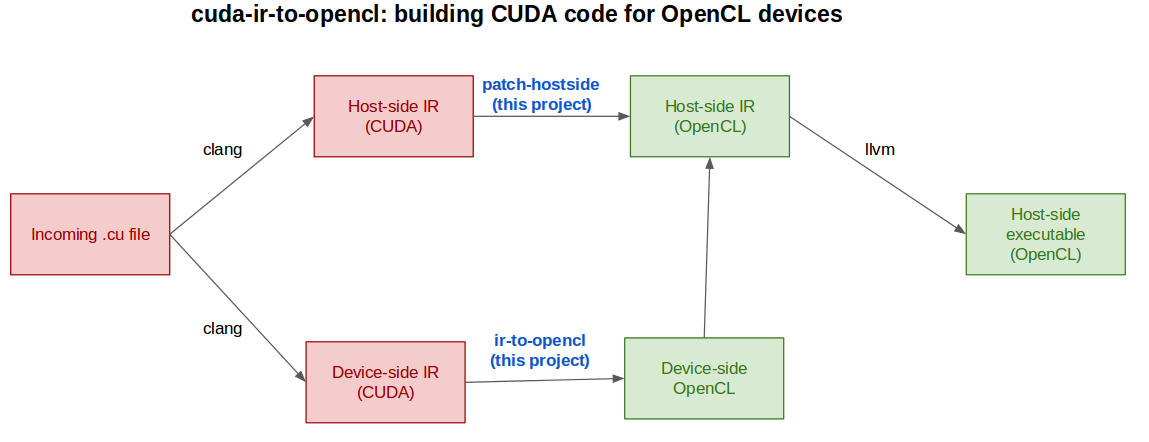

Behind the scenes, there are a few parts:

- Device-side, `cocl` converts the CUDA kernels into OpenCL kernels

- Host-side, `cocl`:

  - converts the CUDA kernel launch code into OpenCL kernel launch code, and

  - bakes in the OpenCL code
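
One small piece of the device-side conversion is rewriting CUDA's thread-index built-ins as OpenCL work-item functions. The Oct 20 news entry confirms that `threadIdx.x` corresponds to `get_local_id`; the other correspondences below are the standard CUDA/OpenCL equivalences, not something taken from the `cocl` source. This is a minimal host-side C++ model of the index arithmetic (x dimension only, no global offset). The names `CudaIndex`, `getLocalId`, `getGroupId` and `getGlobalId` are hypothetical and exist only for this illustration.

```cpp
#include <cassert>
#include <cstddef>

// Position of one thread in CUDA terms (x dimension only).
struct CudaIndex {
    std::size_t blockIdx;   // CUDA blockIdx.x
    std::size_t blockDim;   // CUDA blockDim.x (threads per block)
    std::size_t threadIdx;  // CUDA threadIdx.x
};

// Models OpenCL get_local_id(0): index within the work-group,
// which is what CUDA's threadIdx.x means.
std::size_t getLocalId(const CudaIndex &i) {
    assert(i.threadIdx < i.blockDim);  // a thread index is always inside its block
    return i.threadIdx;
}

// Models OpenCL get_group_id(0): the counterpart of CUDA's blockIdx.x.
std::size_t getGroupId(const CudaIndex &i) {
    return i.blockIdx;
}

// Models OpenCL get_global_id(0) with no global offset:
// the counterpart of blockIdx.x * blockDim.x + threadIdx.x.
std::size_t getGlobalId(const CudaIndex &i) {
    return getGroupId(i) * i.blockDim + getLocalId(i);
}
```

In block 0 the local and global ids coincide, so confusing the two goes unnoticed there. In block 2 with 32 threads per block, thread 5 has local id 5 but global id 69, so a kernel that branches on `threadIdx.x` would behave differently if it were translated to use the global id.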

- compiler for host-side code, including memory allocation, copy, streams, kernel launches

- compiler for device-side code, handling templated C++ code, converting it into bog-standard OpenCL 1.2 code

- BLAS (using Cedric Nugteren's CLBlast)

- Ubuntu 16.04

- clang/llvm 3.8 (installed in 'Procedure' below)

- OpenCL-enabled GPU, and appropriate OpenCL drivers installed for the GPU

Other operating systems, and other clang/llvm versions, might work too, but are untested. Your mileage may vary :-)

sudo apt-get install git cmake llvm-3.8-dev clang-3.8-dev libc6-dev-i386 make gcc g++

git clone --recursive https://github.com/hughperkins/cuda-on-cl

cd cuda-on-cl

make -j 4

sudo make install

Simply run:

make run-tests

You can run a test by name, eg:

make run-test-cocl-offsetkernelargs

Result:

################################

# running:

################################

LD_LIBRARY_PATH=build: build/test-cocl-offsetkernelargs

Using Intel , OpenCL platform: Intel Gen OCL Driver

Using OpenCL device: Intel(R) HD Graphics 5500 BroadWell U-Processor GT2

126.456

- tests are at test/cocl
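
The `offsetkernelargs` test shown above exercises kernels that are called with an offset added to a device pointer. In CUDA a device pointer is an ordinary address, so `gpuFloats + 64` is just pointer arithmetic. An OpenCL 1.2 buffer is an opaque `cl_mem` handle, so one common way to support this is to hand out fake device addresses on the host and resolve each address back to a (buffer, byte offset) pair at launch time. The sketch below models only that bookkeeping in plain C++. It is an assumption about how such a layer could work, not a description of the `cocl` implementation, and `VirtualDeviceMemory`, `BufferRef`, `allocate` and `resolve` are hypothetical names.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <map>

// What an OpenCL launch would need: which buffer, and how far into it.
struct BufferRef {
    int bufferId;             // stands in for a cl_mem handle
    std::size_t offsetBytes;  // offset to pass alongside the buffer
};

// Hands out fake "device addresses" and maps them back to buffers.
class VirtualDeviceMemory {
public:
    // Reserve `bytes` of address space and return its base address.
    std::uintptr_t allocate(std::size_t bytes) {
        std::uintptr_t base = nextAddress_;
        buffers_[base] = Allocation{nextId_++, bytes};
        nextAddress_ += bytes;
        return base;
    }

    // Resolve any address inside an allocation to (buffer, offset).
    BufferRef resolve(std::uintptr_t address) const {
        auto it = buffers_.upper_bound(address);  // first base above address
        assert(it != buffers_.begin());           // address is below every base
        --it;                                     // allocation that may contain it
        std::size_t offset = address - it->first;
        assert(offset < it->second.bytes);        // address must lie inside it
        return BufferRef{it->second.id, offset};
    }

private:
    struct Allocation {
        int id;
        std::size_t bytes;
    };
    std::map<std::uintptr_t, Allocation> buffers_;
    std::uintptr_t nextAddress_ = 4096;  // arbitrary non-zero start
    int nextId_ = 0;
};
```

With this model, an address 64 floats into the first allocation resolves to buffer 0 with a byte offset of `64 * sizeof(float)`, which is the pair a host-side launcher would pass on to the kernel.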

- Eigen-CL: Minimally-tweaked fork of Eigen, which can be compiled/run using cuda-on-cl, on an OpenCL device, https://bitbucket.org/hughperkins/eigen/commits/branch/eigen-cl

- Tensorflow-CL: Fork of Tensorflow, that can be built and run on an OpenCL-1.2 enabled GPU, using cuda-on-cl, https://github.com/hughperkins/tensorflow-cl

- Oct 26:

  - fixed a bug where BLAS results were empty on HD5500, using beignet 1.2

  - added `__shfl_down` shim

  - moved Eigen tests into a new Eigen fork, https://bitbucket.org/hughperkins/eigen/commits/branch/eigen-cl

- Oct 25:

  - BLAS wrapper handles memory offsets correctly now

- Oct 24:

  - fixed `pow`, `min`, `max` (beta)

- Oct 23:

  - fixed `float4`s. This is a critical bug-fix, without which Eigen componentwise works less well in Tensorflow :-P

  - added BLAS, using Cedric Nugteren's CLBlast

- Oct 22:

  - arrays of structs can be passed to kernels again, as long as they contain no pointers

    - (structs containing pointers can be passed only by-value)

  - possible to call kernels with offsets added now, as in eg test/cocl/offsetkernelargs.cu

- Oct 20:

  - fix bug where `threadIdx.x` was being incorrectly written as `get_global_id` instead of `get_local_id`...

    - magically, the `test_cuda_elementwise` kernel works much better now :-)

- Oct 18:

  - installs to `/usr/local` now

  - `libcocl.a` contains `libEasyCL.a` now, no need for `libEasyCL.so` at runtime

  - fixed bug with linking multiple compiled `.cu` files causing error about 'multiple definitions of __opencl_source'

- Oct 16:

  - added streams, including kernel launch on non-default stream

  - removed pinned memory: `cuMemHostAlloc` now just calls `malloc`, see design.md for analysis and thoughts on this. Let me know if you have any ideas (eg via an issue).

  - added ability to copy to/from device memory, with an offset added

- Oct 15:

  - fixed critical bug where `return;` wasn't being written out. That didn't matter when it was at the end of a kernel, but mattered more when it was the only exit condition for a kernel :-P

  - added event handling

  - added pinned memory handling

  - added a bunch of api call implementations for getting information about the driver (mostly stubbed out for now...)

- Oct 10:

  - test/eigen/test_cuda_elementwise_small.cu builds and runs ok now

- Older news