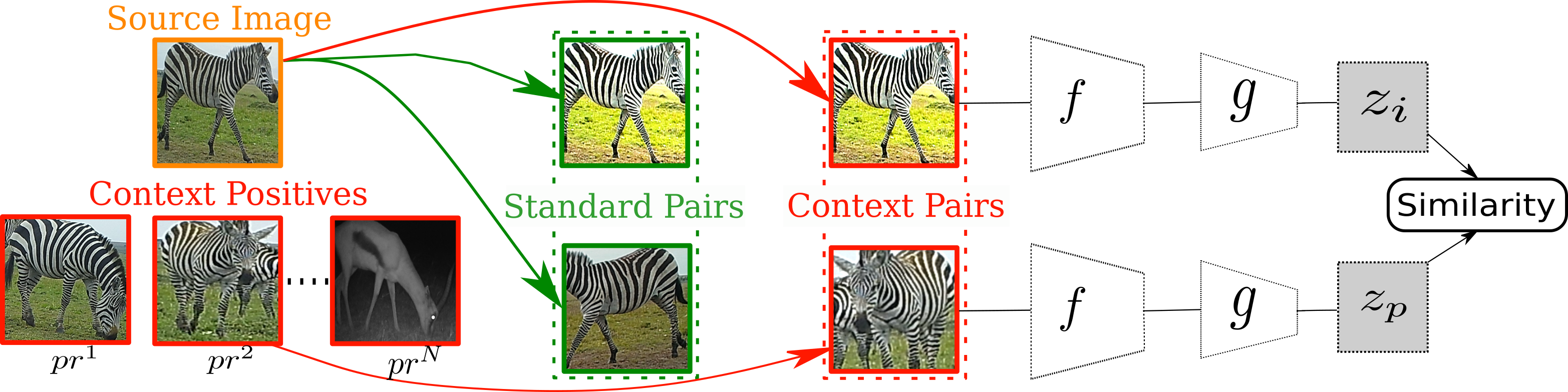

This repository contains the code for reproducing the results of our ICCV 2021 paper. A summary video can be found here. The following figure illustrates our context positive approach within an SSL framework.

The organization of the repository is the following:

- `requirements.txt` lists the libraries needed to run the code.
- `main.py` is the main process that orchestrates model training and evaluation.
- `models.py` contains model utility functions.
- `datasets.py` contains data utility functions, i.e. Dataset classes.
- `losses.py` has a set of supported loss functions.
- `evaluate.py` contains the code for evaluating models.
- `utils.py` contains useful utility functions, such as feature extraction, linear evaluation etc.
- `data_processing/preprocess_images.py` is used to filter images and extract the regions that contain objects.
- `data_processing/preprocess_context.py` generates a file with meta-information for each image.

A large repository of camera trap data can be found at lila.science, including the Caltech Camera Traps (CCT20), Island Conservation Camera Traps (ICCT) and Snapshot Serengeti datasets, which were used for the main experiments in our paper.

- Download the images and the corresponding annotation file that includes the bounding boxes into a local path (/path/to/downloaded_data/). Note: If annotations are not available for a camera trap dataset, regions can be extracted with the MegaDetector developed by Microsoft AI for Earth, an approach we tried in the supplemental material of the paper.

- Install libraries and dependencies based on `requirements.txt`.

- Filter and generate the training images by extracting the regions that correspond to the bounding box objects (i.e. animals) and store them under cam_data/.

- Generate a dataframe containing the metadata of the cropped images above and save it under cam_data/, e.g. cam_data/cct20_context_file.csv for the CCT20 dataset.

- The code for the above data processing steps lies under the `data_processing/` directory, in files `preprocess_images.py` and `preprocess_context.py` respectively.

- The process is orchestrated by the `main.py` Python file, whose parameters define the dataset, the self-supervised loss and the positive mining technique to be used. Other arguments cover various training or model parameters.

- SSL can be replaced by fully supervised or transfer learning to get a baseline.
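To make the role of these parameters concrete, the following is a minimal sketch of how the flags used in this README could be declared with `argparse`. It is not the actual parser in `main.py`: the function names `build_parser` and `is_ssl` are hypothetical, and the defaults are assumptions chosen to match the example commands.

```python
import argparse

# Values taken from this README; the defaults below are assumptions.
SSL_LOSSES = ["simclr", "triplet", "simsiam"]
BASELINES = ["rand_init", "imagenet", "supervised"]
POS_TYPES = ["augment_self", "seq_positive", "context_sample", "oracle"]


def build_parser():
    """Hypothetical parser mirroring the flags shown in this README."""
    parser = argparse.ArgumentParser(description="SSL training and evaluation (sketch)")
    parser.add_argument("--dataset", default="cct20")
    parser.add_argument("--train_loss", default="simclr", choices=SSL_LOSSES + BASELINES)
    parser.add_argument("--pos_type", default="augment_self", choices=POS_TYPES)
    parser.add_argument("--backbone", default="resnet18")
    parser.add_argument("--im_res", type=int, default=112)
    parser.add_argument("--exp_name", default="")
    return parser


def is_ssl(train_loss):
    """True for self-supervised pretraining, False for the baselines."""
    return train_loss in SSL_LOSSES


# Parse an explicit argument list instead of sys.argv to keep the sketch self-contained.
example = build_parser().parse_args(["--train_loss", "simclr", "--pos_type", "seq_positive"])
print(example.dataset, example.train_loss, example.pos_type, is_ssl(example.train_loss))
```

Restricting `--train_loss` and `--pos_type` with `choices` means a misspelled value fails at parse time rather than partway through training.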

Below is an example of commands that execute the above steps. The example is for the CCT20 dataset and uses SimCLR as the base self-supervised learning approach.

Extract camera trap object regions from images (these can be either available from the given data or acquired from MegaDetector).

python data_processing/preprocess_images.py --dataset cct20
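The region extraction step can be pictured roughly as follows. This is a sketch, not the code in `preprocess_images.py`: it assumes COCO-style annotations where `bbox` is `[x, y, width, height]` in pixels, and the helper names and the `min_side` threshold are hypothetical.

```python
def bbox_to_crop_box(bbox, img_width, img_height, min_side=1):
    """Convert a COCO-style [x, y, width, height] box to (left, upper, right, lower).

    The box is clamped to the image bounds. Returns None when the clamped
    box is smaller than min_side pixels on either side.
    """
    x, y, w, h = bbox
    left = max(0, int(round(x)))
    upper = max(0, int(round(y)))
    right = min(img_width, int(round(x + w)))
    lower = min(img_height, int(round(y + h)))
    if right - left < min_side or lower - upper < min_side:
        return None
    return (left, upper, right, lower)


def crop_boxes_for_annotations(images, annotations, min_side=32):
    """Collect (image_id, category_id, crop_box) for every usable annotation."""
    sizes = {im["id"]: (im["width"], im["height"]) for im in images}
    crops = []
    for ann in annotations:
        if "bbox" not in ann:
            continue  # e.g. empty frames carry no bounding box
        width, height = sizes[ann["image_id"]]
        box = bbox_to_crop_box(ann["bbox"], width, height, min_side)
        if box is not None:
            crops.append((ann["image_id"], ann["category_id"], box))
    return crops


images = [{"id": "img0", "width": 640, "height": 480}]
annotations = [{"image_id": "img0", "category_id": 3, "bbox": [10.2, 20.7, 100, 50]}]
print(crop_boxes_for_annotations(images, annotations))
```

The resulting 4-tuple follows the (left, upper, right, lower) convention, so it can be passed directly to Pillow's `Image.crop` if that library is used to write the cropped regions.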

Save a metadata file for the contextual information of each image

python data_processing/preprocess_context.py --dataset cct20 --annotation_file CaltechCameraTrapsECCV18.json
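As an illustration of what such a metadata file might hold, the sketch below flattens the image entries of a COCO Camera Traps style annotation dictionary into CSV rows. The column set, the helper names, and the `date_captured` format (`YYYY-MM-DD HH:MM:SS`) are assumptions; the file actually produced by `preprocess_context.py` may differ.

```python
import csv
import io
from datetime import datetime

# Hypothetical column set for the context file.
CONTEXT_FIELDS = ["image_id", "file_name", "location", "seq_id", "frame_num", "hour"]


def build_context_rows(coco):
    """Build one context row per image entry of the annotation dictionary."""
    rows = []
    for im in coco["images"]:
        captured = im.get("date_captured")
        hour = ""
        if captured:
            # Assumed timestamp format; keep only the time of day as a decimal hour.
            dt = datetime.strptime(captured, "%Y-%m-%d %H:%M:%S")
            hour = dt.hour + dt.minute / 60.0
        rows.append({
            "image_id": im["id"],
            "file_name": im["file_name"],
            "location": im.get("location", ""),
            "seq_id": im.get("seq_id", ""),
            "frame_num": im.get("frame_num", ""),
            "hour": hour,
        })
    return rows


def write_context_csv(rows, handle):
    """Write the rows to an open text handle as CSV with a header line."""
    writer = csv.DictWriter(handle, fieldnames=CONTEXT_FIELDS)
    writer.writeheader()
    writer.writerows(rows)


coco = {"images": [{"id": "img0", "file_name": "img0.jpg", "location": "38",
                    "seq_id": "s1", "frame_num": 1,
                    "date_captured": "2011-05-13 23:30:00"}]}
buffer = io.StringIO()
write_context_csv(build_context_rows(coco), buffer)
print(buffer.getvalue())
```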

Learn representations with a variety of SSL training settings and evaluate their quality on a downstream task (i.e. species classification).

The following scenarios cover standard SimCLR, SimCLR with sequence positives and SimCLR with context-informed positives:

python main.py --train_loss simclr --pos_type augment_self --backbone resnet18 --im_res 112 --dataset cct20 --exp_name "simclr standard"

python main.py --train_loss simclr --pos_type seq_positive --backbone resnet18 --im_res 112 --dataset cct20 --exp_name "simclr seq positive"

python main.py --train_loss simclr --pos_type context_sample --backbone resnet18 --im_res 112 --dataset cct20 --exp_name "simclr context distance"

In the `main.py` commands above, the important parameters include `train_loss`, which can be `simclr`, `triplet` or `simsiam` (for SSL pretraining), or `rand_init`, `imagenet` or `supervised` (for supervised or transfer-learning baselines). In addition, the `pos_type` parameter selects how positives are chosen for SSL and can be `augment_self` (standard augmentation), `seq_positive` (sequence positives), `context_sample` (context positives) or `oracle` (oracle positives).
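The difference between the four `pos_type` options can be sketched as a single selection function. This is an illustration only: the record fields, the `pick_positive` and `context_distance` helpers, and in particular the distance used for `context_sample` (location mismatch plus circular time-of-day difference) are assumptions, not the measure used in the paper or in this repository.

```python
import random


def context_distance(a, b):
    """Illustrative distance: location mismatch plus circular hour gap (assumption)."""
    gap = abs(a["hour"] - b["hour"]) % 24
    gap = min(gap, 24 - gap)
    return (0.0 if a["location"] == b["location"] else 1.0) + gap / 12.0


def pick_positive(anchor, records, pos_type, rng):
    """Return the index of the positive paired with records[anchor]."""
    a = records[anchor]
    others = [i for i in range(len(records)) if i != anchor]
    if pos_type == "augment_self":
        return anchor  # the positive is another augmentation of the same image
    if pos_type == "context_sample":
        if not others:
            return anchor
        # Here: the closest other image in context space.
        return min(others, key=lambda i: context_distance(a, records[i]))
    if pos_type == "seq_positive":
        candidates = [i for i in others if records[i]["seq_id"] == a["seq_id"]]
    elif pos_type == "oracle":
        candidates = [i for i in others if records[i]["label"] == a["label"]]
    else:
        raise ValueError("unknown pos_type: " + pos_type)
    # Fall back to the anchor itself when no candidate exists.
    return rng.choice(candidates) if candidates else anchor


records = [
    {"seq_id": "s1", "location": "A", "hour": 23.5, "label": "deer"},
    {"seq_id": "s1", "location": "A", "hour": 23.6, "label": "deer"},
    {"seq_id": "s2", "location": "B", "hour": 0.0, "label": "fox"},
    {"seq_id": "s3", "location": "A", "hour": 12.0, "label": "deer"},
]
rng = random.Random(0)
for pos_type in ["augment_self", "seq_positive", "context_sample", "oracle"]:
    print(pos_type, pick_positive(0, records, pos_type, rng))
```

Only `oracle` reads the label, which is why it serves as an upper-bound reference rather than a usable self-supervised setting.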

If you find our work useful in your research please consider citing our paper:

@inproceedings{PantazisICCV2021,

title={Focus on the Positives: Self-Supervised Learning for Biodiversity Monitoring},

author={Pantazis, Omiros and

Brostow, Gabriel and

Jones, Kate and

Mac Aodha, Oisin},

booktitle={Proceedings of the IEEE/CVF International Conference on Computer Vision},

year={2021}

}