For biological datasets, data processing needs to be fast, scalable, and low-cost. This project benchmarks Polars and DuckDB on the BELKA dataset in two data formats (CSV and Parquet). The results show that DuckDB outperforms Polars in both speed and memory usage. This matters most in settings where big data tools such as Apache Spark are too expensive and complicated to use. The goal is to find practical ways to handle large biological datasets without the overhead that comes with conventional Big Data systems.

Target Audience: Data engineers, bioinformaticians, database architects interested in efficient data processing solutions, especially those working with biological and scientific datasets where Big Data infrastructure may be excessive.

Reference:

    # Set up the Kaggle API
    # https://github.com/Kaggle/kaggle-api/blob/main/docs/README.md
    # Save your credentials to $KAGGLE_CONFIG_DIR/kaggle.json with the content:
    # {"username":"your_name","key":"your_key"}

    # Start the download
    kaggle competitions download -c leash-BELKA

    # Uncompress the file
    unzip leash-BELKA.zip -d leash-BELKA

    # Install libraries
    conda create -n polar_duckdb_benchmark python=3.10
    conda activate polar_duckdb_benchmark
    pip install -r requirements.txt

    # For DeepChem (python=3.7)
    # conda install -c conda-forge deepchem
    # pip install --pre deepchem
    pip install deepchem==2.5.0.dev
    pip install --pre "deepchem[torch]"
    pip install --pre "deepchem[tensorflow]"
    pip install --pre "deepchem[jax]"
    conda install cuda --channel nvidia/label/cuda-11.8.0 -y
    conda install pytorch torchvision pytorch-cuda=11.8 -c pytorch -c nvidia -y

The notebooks aim to benchmark the performance of Polars and DuckDB on the BELKA dataset across two different data formats: CSV and Parquet. The key areas of comparison are:

- Time taken for operations ranging from basic ones, such as counting records, to complex queries involving filtering, grouping, and sorting.

- Memory consumption during these operations.

Data formats:

- CSV (comma-separated values)

- Parquet (an efficient columnar format)

Libraries:

- Polars: A high-performance DataFrame library implemented in Rust.

- DuckDB: An in-process SQL database management system focused on OLAP workloads.

- Matplotlib: Used to visualize the results.

Load and Inspect Data:

- The dataset is loaded in both CSV and Parquet formats to analyze the differences in data processing time and memory usage.

Benchmark Operations:

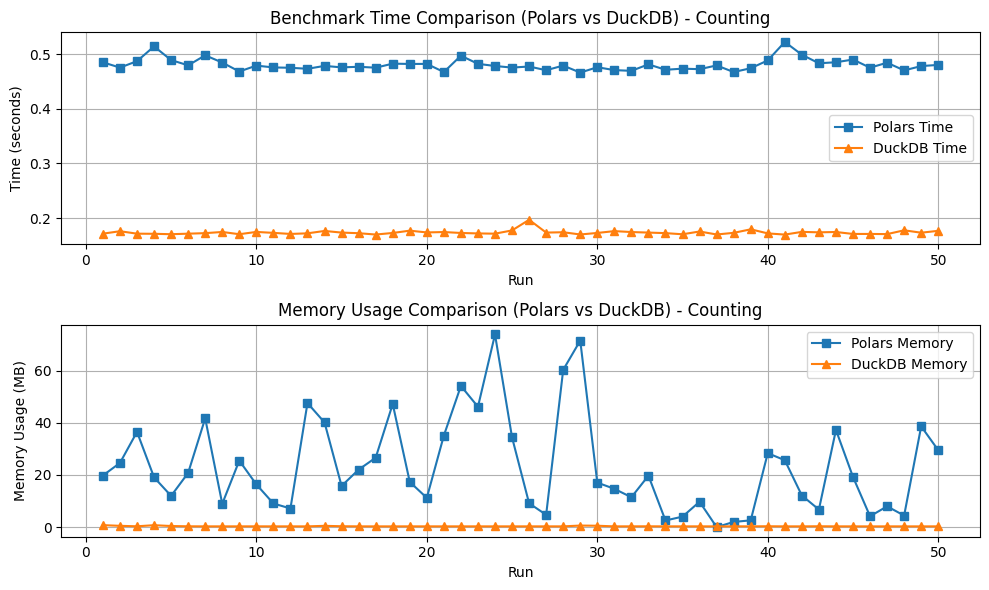

- Counting Records: A basic row count is run on both the CSV and Parquet files using Polars and DuckDB.

- Complex queries: Filtering, grouping, and sorting are run on the large dataset using Polars and DuckDB.

Time and Memory Usage Comparison:

- The time taken for each operation and the memory consumed are recorded.
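One possible way to record time and memory for an operation is sketched below using only the standard library. The `measure` helper and `Measurement` type are hypothetical names introduced for this example, and the approach is an assumption rather than the notebooks' method. Note that `tracemalloc` only tracks allocations made through Python's allocator; Polars and DuckDB allocate most of their memory natively, so a real benchmark would likely need a process-level measure such as resident set size.

```python
import time
import tracemalloc
from typing import Any, Callable, NamedTuple


class Measurement(NamedTuple):
    """Hypothetical record of one benchmarked operation."""

    label: str
    seconds: float
    peak_bytes: int
    result: Any


def measure(label: str, fn: Callable[[], Any]) -> Measurement:
    """Run fn once, recording wall-clock time and peak Python-level memory."""
    tracemalloc.start()
    start = time.perf_counter()
    try:
        result = fn()
        seconds = time.perf_counter() - start
        _, peak = tracemalloc.get_traced_memory()
    finally:
        tracemalloc.stop()
    return Measurement(label, seconds, peak, result)


# Usage: wrap any operation (for example, a Polars or DuckDB query) in a callable.
m = measure("sum of squares", lambda: sum([i * i for i in range(100_000)]))
print(f"{m.label}: {m.seconds:.4f} s, peak {m.peak_bytes / 1e6:.2f} MB")
```

Running each operation several times and reporting the median would reduce noise from file-system caching, which can otherwise favor whichever engine runs second.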

Plot Results:

- The results are visualized to compare the performance of Polars and DuckDB in terms of time efficiency and memory usage.
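A grouped bar chart is one simple way to draw this comparison with Matplotlib. The sketch below is only an illustration of the plotting step: the numbers are dummy placeholders chosen to be identical for both engines, not measurements from this benchmark, and the output file name is arbitrary.

```python
import os
import tempfile

import matplotlib

matplotlib.use("Agg")  # headless backend so the script runs without a display
import matplotlib.pyplot as plt

# DUMMY placeholder values, NOT benchmark results.
# Replace them with the timings recorded for each engine and format.
formats = ["CSV", "Parquet"]
seconds = {
    "Polars": [2.0, 1.0],
    "DuckDB": [2.0, 1.0],
}

fig, ax = plt.subplots(figsize=(6, 4))
width = 0.35
x = range(len(formats))

# One bar series per engine, shifted sideways so the bars sit next to each other.
for offset, (engine, values) in enumerate(seconds.items()):
    positions = [i + offset * width for i in x]
    ax.bar(positions, values, width=width, label=engine)

ax.set_xticks([i + width / 2 for i in x])
ax.set_xticklabels(formats)
ax.set_ylabel("Time (s)")
ax.set_title("Counting records (dummy values)")
ax.legend()

out_path = os.path.join(tempfile.mkdtemp(), "benchmark_time.png")
fig.savefig(out_path, dpi=150, bbox_inches="tight")
print(f"Saved chart to {out_path}")
```

The same layout works for memory usage by swapping the values and the y-axis label.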

Results:

- DuckDB consistently outperforms Polars in execution time for both CSV and Parquet file formats.

- DuckDB also shows better memory management, consuming less memory than Polars when processing large datasets.

This analysis suggests that DuckDB is a more efficient choice than Polars for both basic and complex data operations on medium-to-large biological datasets, particularly when working with Parquet files. The findings are relevant to bioinformatics practitioners who want to avoid the complexity and cost of big data frameworks such as Apache Spark.