Image Super-Resolution Using Deep Feedforward Neural Networks In Spectral Domain

Abstract

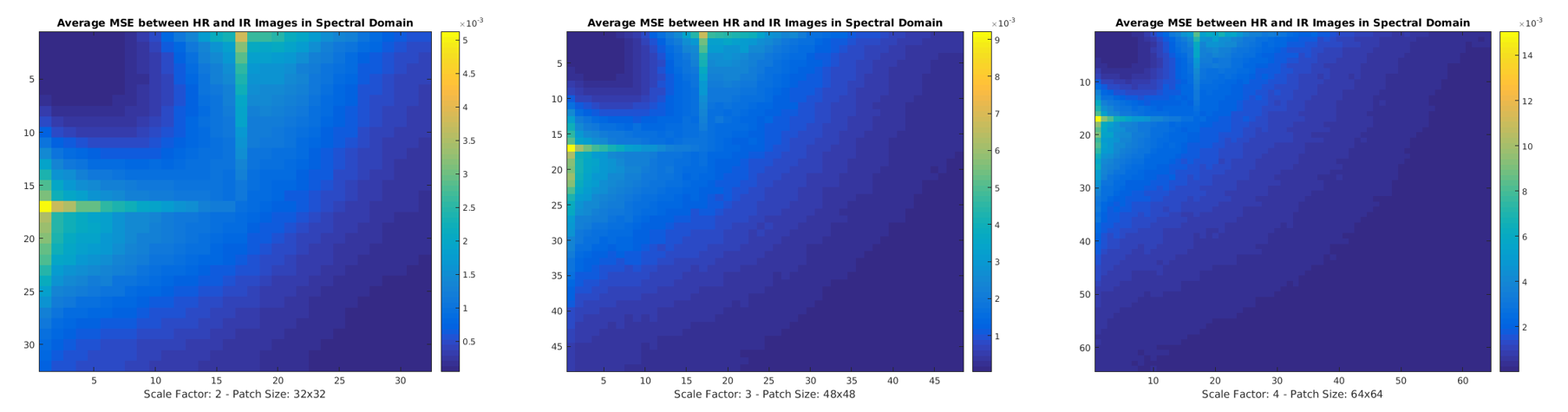

Recent advances in deep learning have dramatically changed the mainstream approaches in computer vision research, from hard-coded features combined with classifiers to end-to-end trained deep convolutional neural networks (CNNs), which give state-of-the-art results in most areas of computer vision. Single-image super-resolution is one of the areas that has been considerably influenced by these advances. Most current state-of-the-art super-resolution methods learn a nonlinear mapping from low-resolution images to high-resolution images in the spatial domain, using consecutive convolutional layers in their network architectures. However, these state-of-the-art results are obtained by training a separate neural network for each scale factor. We propose a novel single-image super-resolution system that operates in the spectral domain with a limited number of learnable parameters, in order to eliminate the need to train a separate neural network for each scale factor. The discrete cosine transform (DCT), a variant of the discrete Fourier transform (DFT), is used as the spectral transform that converts images from the spatial domain to the frequency domain. In addition, an artifact reduction module is added as a post-processing step to remove the ringing artifacts caused by the spectral transformations. Although the peak signal-to-noise ratio (PSNR) of our super-resolution system is lower than that of current state-of-the-art methods, the spectral domain allows us to develop a single model with a single dataset for any scale factor and to obtain relatively better structural similarity index (SSIM) results.
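The abstract gives no implementation details, so the following is only a minimal sketch of the two building blocks it names: a 2-D DCT that moves an image between the spatial and frequency domains, and the PSNR metric. It assumes an orthonormal DCT-II, an 8-bit peak value of 255, and images stored as nested lists; all function names are hypothetical, and the network and artifact reduction module are not modeled here.

```python
import math


def dct_1d(x):
    """Orthonormal DCT-II of a 1-D sequence (assumed normalization)."""
    n = len(x)
    out = []
    for k in range(n):
        s = sum(x[i] * math.cos(math.pi * (2 * i + 1) * k / (2 * n))
                for i in range(n))
        scale = math.sqrt(1.0 / n) if k == 0 else math.sqrt(2.0 / n)
        out.append(scale * s)
    return out


def idct_1d(c):
    """Inverse of dct_1d (orthonormal DCT-III)."""
    n = len(c)
    out = []
    for i in range(n):
        s = c[0] * math.sqrt(1.0 / n)
        s += sum(math.sqrt(2.0 / n) * c[k]
                 * math.cos(math.pi * (2 * i + 1) * k / (2 * n))
                 for k in range(1, n))
        out.append(s)
    return out


def _transpose(m):
    return [list(col) for col in zip(*m)]


def _apply_separable(m, f):
    """Apply a 1-D transform along the rows, then along the columns."""
    rows = [f(r) for r in m]
    cols = [f(c) for c in _transpose(rows)]
    return _transpose(cols)


def dct_2d(image):
    """Spatial domain -> frequency domain."""
    return _apply_separable(image, dct_1d)


def idct_2d(coeffs):
    """Frequency domain -> spatial domain."""
    return _apply_separable(coeffs, idct_1d)


def psnr(reference, test, peak=255.0):
    """Peak signal-to-noise ratio in dB between two equally sized images."""
    count = len(reference) * len(reference[0])
    mse = sum((a - b) ** 2
              for r, t in zip(reference, test)
              for a, b in zip(r, t)) / count
    return float("inf") if mse == 0 else 10.0 * math.log10(peak ** 2 / mse)


# Toy 4x4 "image": transform it, then reconstruct it from its coefficients.
image = [[10, 20, 30, 40],
         [20, 30, 40, 50],
         [30, 40, 50, 60],
         [40, 50, 60, 70]]
coeffs = dct_2d(image)
restored = idct_2d(coeffs)
print("DC coefficient:", coeffs[0][0])
print("round-trip PSNR (dB):", psnr(image, restored))
```

Because the transform is orthonormal, the round trip reproduces the input up to floating-point error. A spectral-domain model would presumably operate on `coeffs` before the inverse transform is applied.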
