Yuxuan Zhang1,*, Tianheng Cheng1,*, Lei Liu2, Heng Liu2, Longjin Ran2, Xiaoxin Chen2, Wenyu Liu1, Xinggang Wang1,📧

1 Huazhong University of Science and Technology, 2 vivo AI Lab

(* equal contribution, 📧 corresponding author)

We have extended EVF-SAM to the more powerful SAM-2. Besides improving image prediction, the new model also performs well on video prediction (powered by SAM-2). Although it is trained only on images from referring expression segmentation (RES) datasets, EVF-SAM shows zero-shot text-prompted video segmentation capability. Try our code!

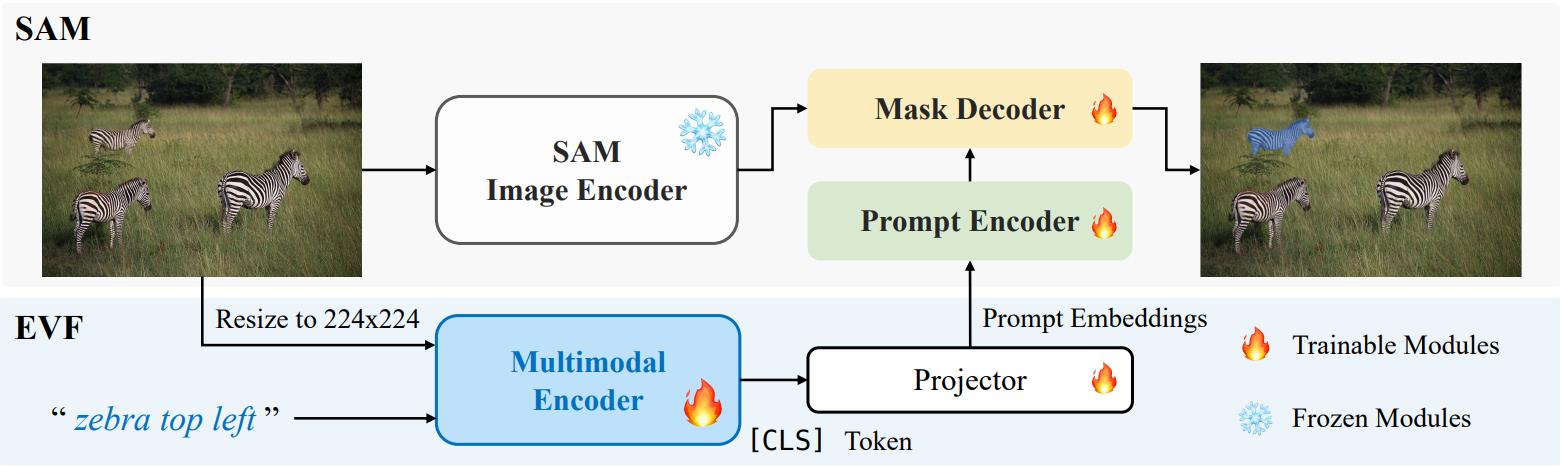

- EVF-SAM extends SAM's capabilities with text-prompted segmentation, achieving high accuracy in Referring Expression Segmentation.

- EVF-SAM is designed for efficient computation, enabling inference within a few seconds per image on a T4 GPU.

- Release code

- Release weights

- Release demo 👉 🤗 evf-sam

- Release code and weights based on SAM-2

- Update demo supporting SAM-2👉 🤗 evf-sam2

- Clone this repository

- Install PyTorch for your CUDA version. Note that torch>=2.0.0 is required to use SAM-2, and torch>=2.2 is required to enable flash-attention. (We use torch==2.0.1 with CUDA 11.7 and it works fine.)

- pip install -r requirements.txt

- To use the video prediction function, run:

cd model/segment_anything_2

python setup.py build_ext --inplace
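The version requirements in the installation steps can be checked before downloading any weights. The following is a minimal sketch, not part of the repository: `parse_version` and `supported_features` are hypothetical helper names, and the thresholds are simply the ones stated above (torch>=2.0.0 for SAM-2, torch>=2.2 for flash-attention).

```python
import re


def parse_version(version: str) -> tuple:
    """Turn a string such as '2.0.1+cu117' into (2, 0, 1).

    The local build suffix after '+' is ignored, and only the leading
    digits of each component are used.
    """
    core = version.split("+")[0]
    parts = []
    for piece in core.split(".")[:3]:
        match = re.match(r"\d+", piece)
        parts.append(int(match.group()) if match else 0)
    while len(parts) < 3:
        parts.append(0)
    return tuple(parts)


def supported_features(torch_version: str) -> dict:
    """Map a torch version string to the features listed in the install notes."""
    v = parse_version(torch_version)
    return {
        "sam2": v >= (2, 0, 0),             # torch>=2.0.0 is required for SAM-2
        "flash_attention": v >= (2, 2, 0),  # torch>=2.2 is required for flash-attention
    }
```

In a real environment you would pass `torch.__version__` to `supported_features`.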

| Name | SAM | BEIT-3 | Params | Prompt Encoder & Mask Decoder | Reference Score |
| --- | --- | --- | --- | --- | --- |
| EVF-SAM2 | SAM-2-L | BEIT-3-L | 898M | freeze | 83.6 |
| EVF-SAM | SAM-H | BEIT-3-L | 1.32B | train | 83.7 |
| EVF-Effi-SAM-L | EfficientSAM-S | BEIT-3-L | 700M | train | 83.5 |
| EVF-Effi-SAM-B | EfficientSAM-T | BEIT-3-B | 232M | train | 80.0 |
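As a rough guide to choosing a checkpoint, the parameter counts in the table translate into a lower bound on the memory needed just to hold the weights. The sketch below is plain arithmetic under stated assumptions: the keys in `BYTES_PER_PARAM` are labels chosen for this example (not CLI values), every parameter is assumed to be stored at the given width, and activations, buffers and framework overhead are ignored, so real usage will be higher.

```python
# Bytes used to store one parameter at each numeric width.
BYTES_PER_PARAM = {"fp32": 4.0, "fp16": 2.0, "8bit": 1.0, "4bit": 0.5}

# Parameter counts copied from the model table.
PARAMS = {
    "EVF-SAM2": 898e6,
    "EVF-SAM": 1.32e9,
    "EVF-Effi-SAM-L": 700e6,
    "EVF-Effi-SAM-B": 232e6,
}


def weight_gb(name: str, precision: str = "fp16") -> float:
    """Estimate weight storage in decimal gigabytes (1 GB = 1e9 bytes)."""
    return PARAMS[name] * BYTES_PER_PARAM[precision] / 1e9


if __name__ == "__main__":
    for model in PARAMS:
        print(f"{model}: about {weight_gb(model):.2f} GB of weights in fp16")
```

For instance, 898M parameters at 2 bytes each come to roughly 1.8 GB for EVF-SAM2 in fp16, and 1.32B parameters come to roughly 2.6 GB for EVF-SAM.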

python inference.py \
--version <path to evf-sam> \
--precision='fp16' \
--vis_save_path "<path to your output directory>" \
--model_type <"ori" or "effi" or "sam2", depending on your loaded ckpt> \
--image_path <path to your input image> \
--prompt <customized text prompt>

The --load_in_8bit and --load_in_4bit flags are optional.

For example:

python inference.py \
--version YxZhang/evf-sam2 \
--precision='fp16' \
--vis_save_path "vis" \
--model_type sam2 \
--image_path "assets/zebra.jpg" \
--prompt "zebra top left"
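When running many prompts or images, it can be convenient to assemble the command programmatically. The sketch below only builds the argument list from the flags shown above. `build_inference_cmd` is a hypothetical helper, not part of the repository, and the accepted `model_type` values are the three named in the usage text.

```python
import shlex

# The three values named in the usage text for --model_type.
VALID_MODEL_TYPES = {"ori", "effi", "sam2"}


def build_inference_cmd(version, image_path, prompt, model_type,
                        vis_save_path="vis", precision="fp16",
                        script="inference.py"):
    """Return the inference command as an argv list (nothing is executed)."""
    if model_type not in VALID_MODEL_TYPES:
        raise ValueError(f"model_type must be one of {sorted(VALID_MODEL_TYPES)}")
    return [
        "python", script,
        "--version", version,
        f"--precision={precision}",
        "--vis_save_path", vis_save_path,
        "--model_type", model_type,
        "--image_path", image_path,
        "--prompt", prompt,
    ]


if __name__ == "__main__":
    cmd = build_inference_cmd("YxZhang/evf-sam2", "assets/zebra.jpg",
                              "zebra top left", "sam2")
    # shlex.join quotes arguments that contain spaces, such as the prompt.
    print(shlex.join(cmd))
```

The returned list can be passed to `subprocess.run(cmd, check=True)`, which avoids shell quoting problems with prompts that contain spaces.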

First, slice the video into frames:

ffmpeg -i <your_video>.mp4 -q:v 2 -start_number 0 <frame_dir>/'%05d.jpg'

Then run:

python inference_video.py \
--version <path to evf-sam2> \
--precision='fp16' \
--vis_save_path "vis/" \
--image_path <frame_dir> \
--prompt <customized text prompt> \
--model_type sam2

You can use frame2video.py to concatenate the predicted frames into a video.
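The ffmpeg pattern above writes frames as zero-padded integers starting at 0 (`00000.jpg`, `00001.jpg`, ...). Before running video inference it can help to confirm that no frame is missing. The sketch below is a hypothetical check, not part of the repository, and it assumes the video script expects a gap-free, integer-named JPEG sequence, which is worth verifying against the code.

```python
from pathlib import Path


def missing_frame_indices(frame_dir):
    """Return the frame indices absent from a 0-based, integer-named JPEG sequence."""
    indices = sorted(
        int(p.stem) for p in Path(frame_dir).glob("*.jpg") if p.stem.isdigit()
    )
    if not indices:
        return []
    present = set(indices)
    # Every index from 0 up to the largest one found should be present.
    return [i for i in range(indices[-1] + 1) if i not in present]
```

An empty list means the sequence is contiguous from frame 0 to the last frame found.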

Image demo:

python demo.py <path to evf-sam>

Video demo:

python demo_video.py <path to evf-sam2>

Referring segmentation datasets: refCOCO, refCOCO+, refCOCOg, refCLEF (saiapr_tc-12) and COCO2014train

├── dataset
│   ├── refer_seg
│   │   ├── images
│   │   │   ├── saiapr_tc-12
│   │   │   └── mscoco
│   │   │       └── images
│   │   │           └── train2014
│   │   ├── refclef
│   │   ├── refcoco
│   │   ├── refcoco+
│   │   └── refcocog
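A quick way to catch a misplaced folder before launching evaluation is to compare the data root against the layout above. This is a minimal sketch with a hypothetical helper name. It assumes the data root is the `dataset` folder itself and that `train2014` is nested under `mscoco/images`, as the tree suggests.

```python
from pathlib import Path

# Directories from the layout above, relative to the data root.
EXPECTED_DIRS = [
    "refer_seg/images/saiapr_tc-12",
    "refer_seg/images/mscoco/images/train2014",
    "refer_seg/refclef",
    "refer_seg/refcoco",
    "refer_seg/refcoco+",
    "refer_seg/refcocog",
]


def missing_dataset_dirs(dataset_root):
    """Return the expected sub-directories that do not exist under dataset_root."""
    root = Path(dataset_root)
    return [rel for rel in EXPECTED_DIRS if not (root / rel).is_dir()]
```

An empty result means every expected directory was found. It does not check that the annotation files or images inside them are complete.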

torchrun --standalone --nproc_per_node <num_gpus> eval.py \
--version <path to evf-sam> \
--dataset_dir <path to your data root> \
--val_dataset "refcoco|unc|val" \
--model_type <"ori" or "effi" or "sam2", depending on your loaded ckpt>
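The `--val_dataset` value packs three fields into one pipe-separated string. The sketch below validates such a string before it is passed on. The field names `dataset`, `split_by` and `split` are an assumed reading of `"refcoco|unc|val"` and are not confirmed by this README, and `parse_val_dataset` is a hypothetical helper.

```python
def parse_val_dataset(spec: str) -> dict:
    """Split a 'dataset|split_by|split' string into named fields.

    The field names are assumptions made for illustration only.
    """
    parts = spec.split("|")
    if len(parts) != 3 or not all(parts):
        raise ValueError(f"expected three non-empty '|'-separated fields, got {spec!r}")
    dataset, split_by, split = parts
    return {"dataset": dataset, "split_by": split_by, "split": split}


if __name__ == "__main__":
    print(parse_val_dataset("refcoco|unc|val"))
```

Remember to quote the value on the command line, as in the command above, since an unquoted `|` is interpreted by the shell as a pipe.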

We borrow some code from LISA, unilm, SAM, EfficientSAM, and SAM-2.

@article{zhang2024evfsamearlyvisionlanguagefusion,
  title={EVF-SAM: Early Vision-Language Fusion for Text-Prompted Segment Anything Model},
  author={Yuxuan Zhang and Tianheng Cheng and Rui Hu and Lei Liu and Heng Liu and Longjin Ran and Xiaoxin Chen and Wenyu Liu and Xinggang Wang},
  year={2024},
  eprint={2406.20076},
  archivePrefix={arXiv},
  primaryClass={cs.CV},
  url={https://arxiv.org/abs/2406.20076},
}