Adaptive Style Transfer in TensorFlow and TensorLayer

Before "Arbitrary Style Transfer in Real-time with Adaptive Instance Normalization", there were two main approaches to style transfer. The first takes one content image and one style image, randomly initializes a noise image, and iteratively updates that image to produce the output. The drawback of this approach is speed: it usually takes around 3 minutes to generate one image. Later, researchers proposed training one model per style. The network takes one image as input and outputs the stylized image in a single forward pass. This approach is far faster than the previous one and achieves real-time style transfer.

However, one model per style is still not good enough for production. If a mobile app wants to support 100 styles offline, storing 100 models on the phone is impractical. Adaptive style transfer instead supports arbitrary styles in one single model, so there is no need to train a new model for each new style. Simply input one content image and whichever style image you want!
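What lets a single model handle any style is the AdaIN layer from the paper: AdaIN(x, y) = σ(y) · (x − μ(x)) / σ(x) + μ(y). It normalizes each channel of the content features x to zero mean and unit standard deviation over the spatial positions, then rescales and shifts it with the per-channel standard deviation σ(y) and mean μ(y) of the style features y. The NumPy sketch below illustrates that operation only. It is not this repository's TensorLayer code, and the function name `adain`, the NHWC layout, the `eps` value and the random stand-in feature maps are assumptions for illustration. In the paper, the features come from a fixed VGG-19 encoder and the AdaIN output is turned back into an image by a trained decoder.

```python
import numpy as np


def adain(content_feat, style_feat, eps=1e-5):
    """Adaptive Instance Normalization on NHWC feature maps (illustrative sketch).

    Each channel of `content_feat` is normalized over its spatial dimensions,
    then rescaled and shifted with the per-channel std and mean of `style_feat`.
    """
    # Per-sample, per-channel statistics over height and width (axes 1 and 2).
    c_mean = content_feat.mean(axis=(1, 2), keepdims=True)
    c_std = np.sqrt(content_feat.var(axis=(1, 2), keepdims=True) + eps)
    s_mean = style_feat.mean(axis=(1, 2), keepdims=True)
    s_std = np.sqrt(style_feat.var(axis=(1, 2), keepdims=True) + eps)

    # Strip the content statistics, then impose the style statistics.
    normalized = (content_feat - c_mean) / c_std
    return normalized * s_std + s_mean


# Random stand-ins for encoder features; the style map may have a different
# spatial size because only its per-channel statistics are used.
rng = np.random.default_rng(0)
content = rng.normal(size=(1, 32, 32, 512))
style = rng.normal(loc=2.0, scale=3.0, size=(1, 24, 24, 512))
out = adain(content, style)
```

Because the style enters only through the per-channel numbers `s_mean` and `s_std`, switching to a new style image changes those statistics without touching any trained weights, which is why one model can serve arbitrary styles.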

Usage

- Install TensorFlow and the master branch of TensorLayer:

pip install git+https://github.com/tensorlayer/tensorlayer.git

- You can use the train.py script to train your own model. To do so, download the MSCOCO and WikiArt datasets and put their images under the 'dataset/COCO_train_2014' and 'dataset/wiki_all_images' folders, respectively.

- Alternatively, you can use the test.py script to run my pretrained models. They can be downloaded from here and should be put into the 'pretrained_models' folder for testing.
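Before running train.py or test.py, it can help to confirm that the folders described above are in place. The helper below is a hypothetical sketch, not part of this repository: the function names, the set of accepted image extensions and the assumption that paths are relative to the repository root are all illustrative choices.

```python
from pathlib import Path

# Folder names come from the usage notes; resolving them against the
# repository root is an assumption of this sketch.
TRAIN_DIRS = ("dataset/COCO_train_2014", "dataset/wiki_all_images")
TEST_DIRS = ("pretrained_models",)
IMAGE_SUFFIXES = {".jpg", ".jpeg", ".png"}  # assumed image formats


def count_images(folder):
    """Count files in `folder` whose extension looks like an image."""
    return sum(
        1 for p in folder.iterdir()
        if p.is_file() and p.suffix.lower() in IMAGE_SUFFIXES
    )


def check_layout(root=".", train=True):
    """Return a list of problems; an empty list means the layout looks right."""
    root = Path(root)
    problems = []
    for rel in (TRAIN_DIRS if train else TEST_DIRS):
        folder = root / rel
        if not folder.is_dir():
            problems.append(f"missing folder: {rel}")
        elif train and count_images(folder) == 0:
            problems.append(f"no images found in: {rel}")
        elif not train and not any(folder.iterdir()):
            problems.append(f"folder is empty: {rel}")
    return problems
```

For example, calling `check_layout()` from the repository root returns an empty list when both training folders exist and contain images, while `check_layout(train=False)` checks only the 'pretrained_models' folder.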

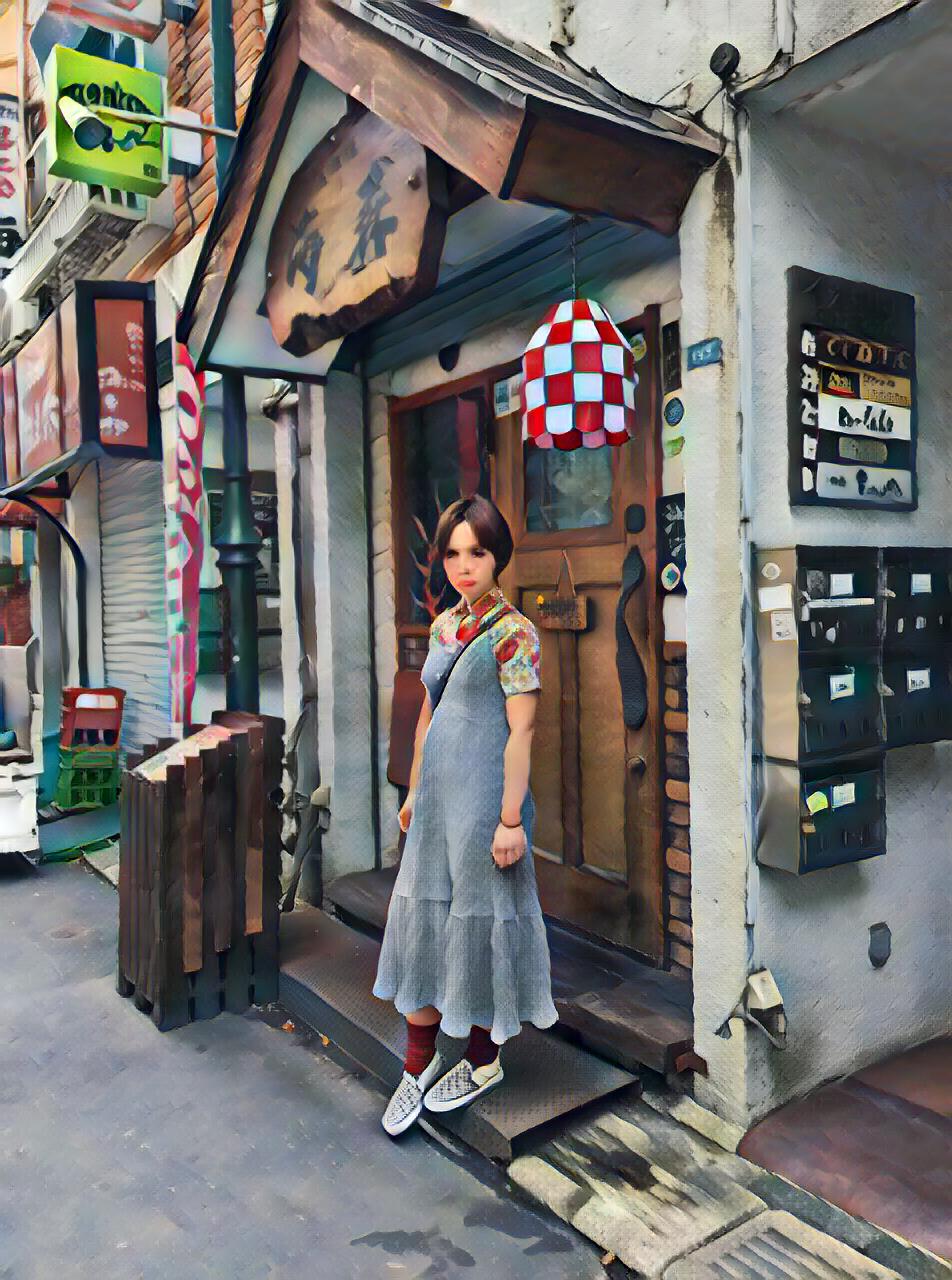

Results

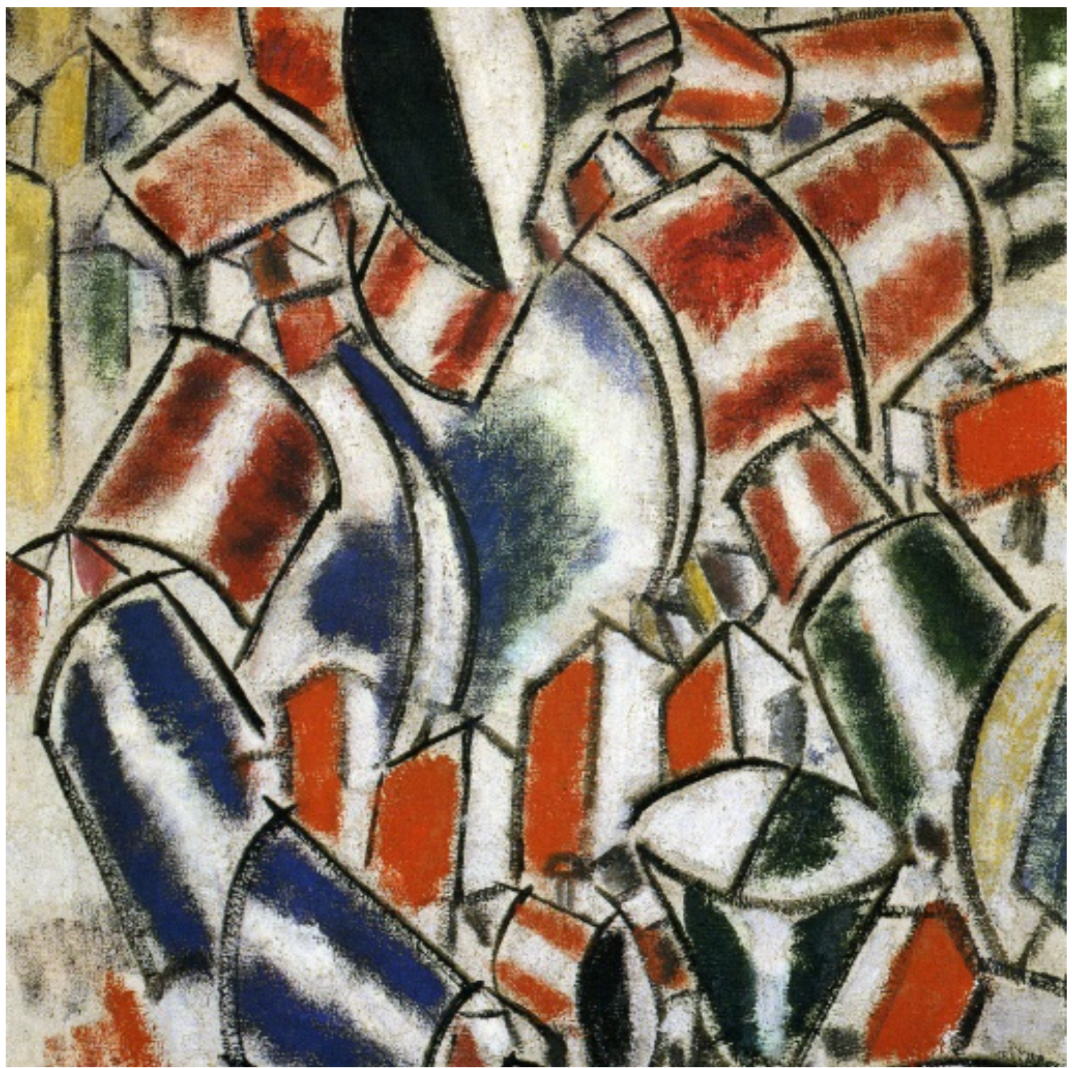

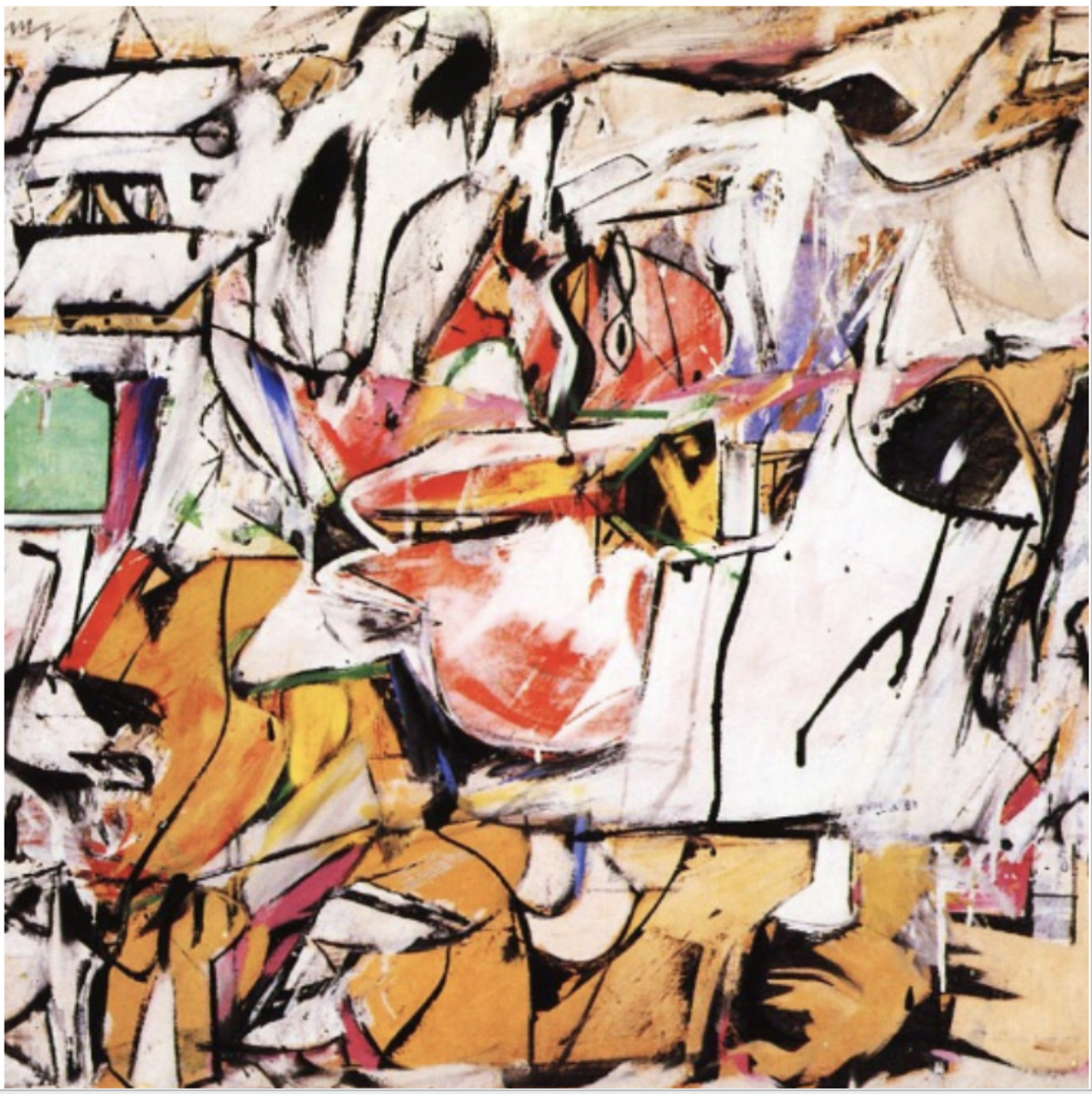

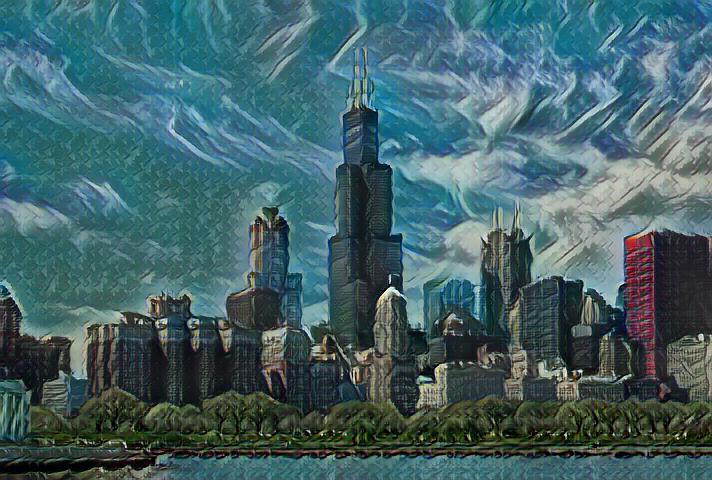

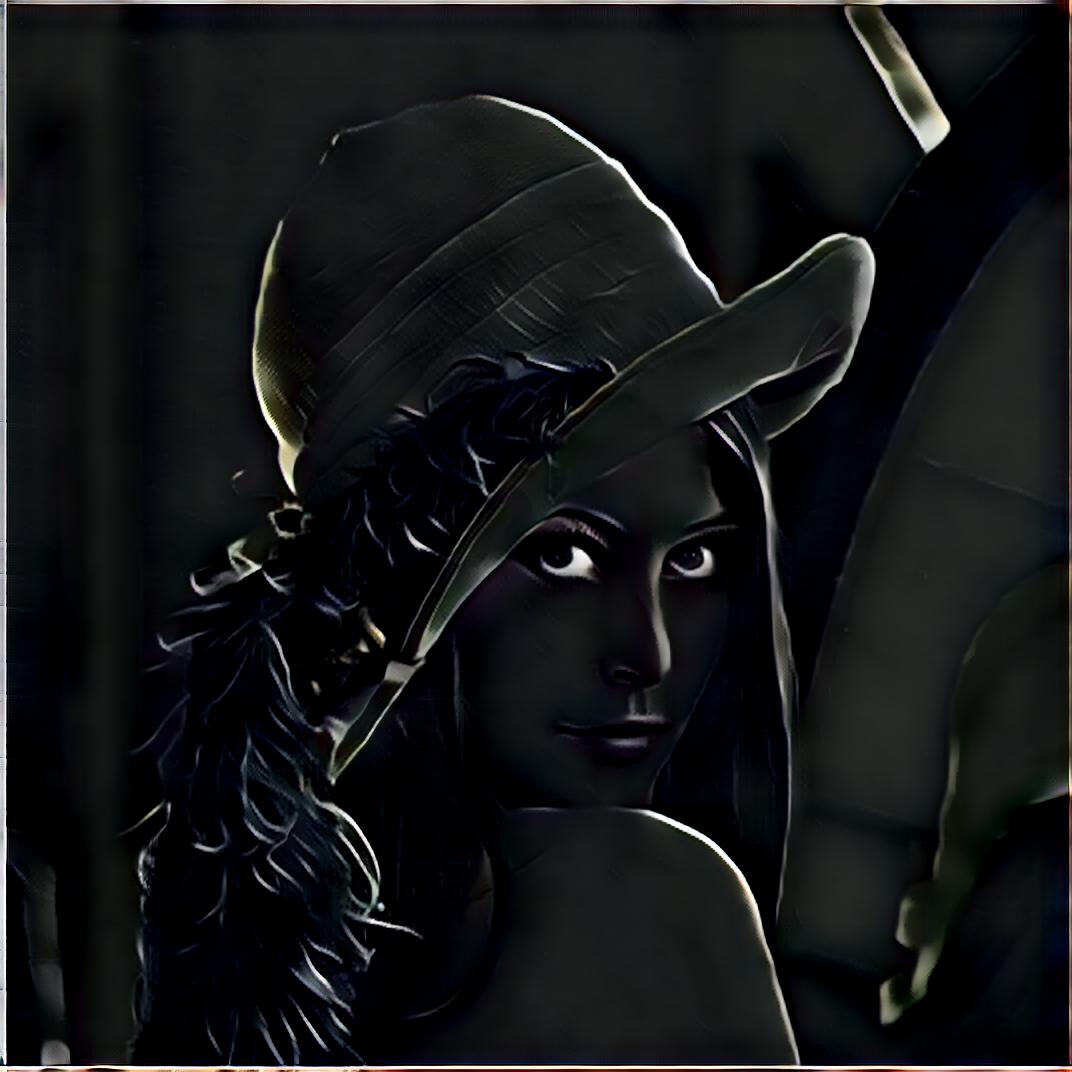

Here are some result images (Left to Right: Content , Style , Result):

Enjoy !

Discussion

License

- This project is for academic use only.