Please see the accompanying SmartNoise Documentation, SmartNoise SDK repository and SmartNoise Core repository for this system.

Differential privacy is the gold standard definition of privacy protection. The SmartNoise project, in collaboration with OpenDP, aims to connect theoretical solutions from the academic community with the practical lessons learned from real-world deployments, to make differential privacy broadly accessible to future deployments. Specifically, we provide several basic building blocks that can be used by people involved with sensitive data, with implementations based on vetted and mature differential privacy research. In this Samples repository we provide example code and notebooks to:

- demonstrate the use of the system platform,

- teach the properties of differential privacy,

- highlight some of the nuances of the system implementation.

This repository includes several sets of sample Python notebooks that demonstrate SmartNoise functionality:

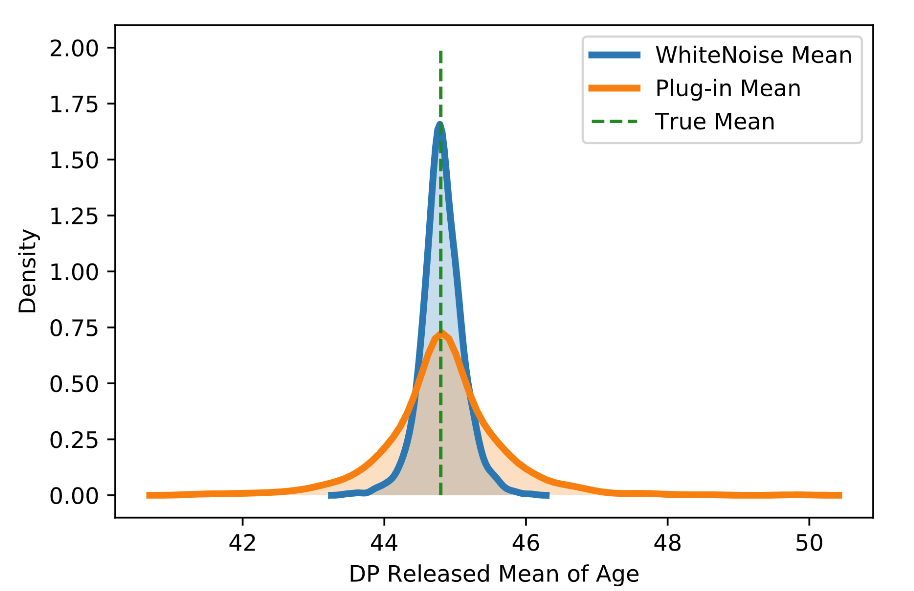

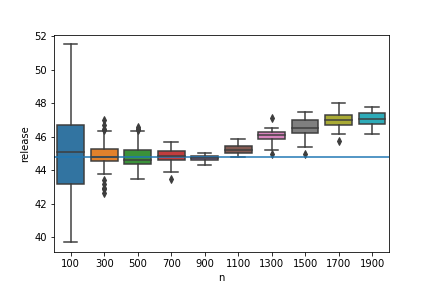

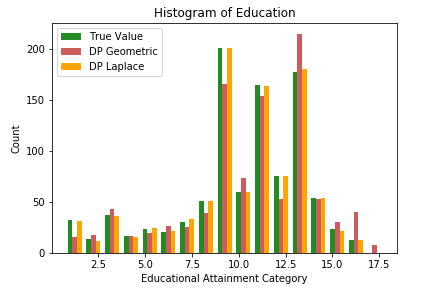

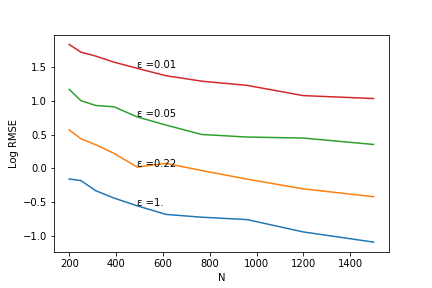

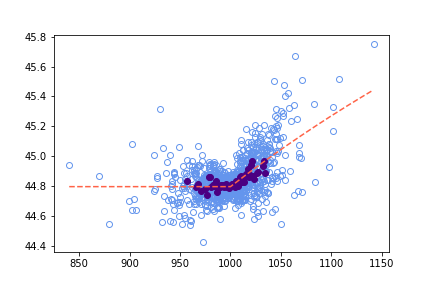

- Sample Analysis Notebooks - In addition to a brief tutorial, there are examples of histograms, differentially private covariance, how dataset size and privacy-loss parameter selection impact utility, and working with unknown dataset sizes.

- Attack Notebooks - Walk-throughs of how SmartNoise mitigates basic attacks as well as a database reconstruction attack.

- SQL Data Access - Code examples and notebooks show how to issue SQL queries against CSV files, database engines, and Spark clusters.

- SmartNoise Whitepaper Demo Notebooks - Based on the whitepaper "Microsoft SmartNoise Differential Privacy Machine Learning Case Studies", these notebooks demonstrate how to perform supervised machine learning with differential privacy, how to create a synthetic dataset with high utility for machine learning, how to create DP releases with histograms, and how to protect against a re-identification attack.
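To give a feel for what the Sample Analysis Notebooks explore, here is a minimal sketch of how dataset size and the privacy-loss parameter (epsilon) affect the utility of a differentially private mean. It uses only the Python standard library and a hand-written Laplace mechanism; it does not use the SmartNoise API. The function names (`laplace_noise`, `dp_mean`, `mean_abs_error`), the synthetic age-like data, and the bounds 18 and 90 are all assumptions made for illustration.

```python
import random
import statistics


def laplace_noise(scale: float, rng: random.Random) -> float:
    """Sample Laplace(0, scale) as the difference of two exponential draws."""
    return rng.expovariate(1.0 / scale) - rng.expovariate(1.0 / scale)


def dp_mean(values, lower, upper, epsilon, rng):
    """Illustrative Laplace-mechanism mean for a dataset of known size.

    Values are clamped to [lower, upper], so replacing one record changes
    the mean by at most (upper - lower) / n. That bound is the sensitivity.
    """
    clamped = [min(max(v, lower), upper) for v in values]
    sensitivity = (upper - lower) / len(clamped)
    return statistics.fmean(clamped) + laplace_noise(sensitivity / epsilon, rng)


def mean_abs_error(n, epsilon, trials=2000, seed=0):
    """Average absolute error of dp_mean over repeated releases (simulation only)."""
    rng = random.Random(seed)
    data = [rng.uniform(18, 90) for _ in range(n)]  # hypothetical synthetic data
    true_mean = statistics.fmean(data)
    errors = [
        abs(dp_mean(data, 18, 90, epsilon, rng) - true_mean)
        for _ in range(trials)
    ]
    return statistics.fmean(errors)


if __name__ == "__main__":
    for n in (100, 10_000):
        for epsilon in (0.1, 1.0):
            err = mean_abs_error(n, epsilon)
            print(f"n={n:>6}  epsilon={epsilon:<4}  mean |error| = {err:.4f}")
```

In this sketch the expected absolute error equals the noise scale, `(upper - lower) / (n * epsilon)`, so error shrinks as either the dataset size or epsilon grows. The notebooks examine the same trade-off using the SmartNoise libraries themselves.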

Core Library Reference: The Core Library implements the runtime validator and execution engine. Documentation is available for:

- Python - https://opendp.github.io/smartnoise-core-python/

- Rust - https://opendp.github.io/smartnoise-core/doc/smartnoise_validator/docs/components/

- You are very welcome to join us on GitHub Discussions!

- Please use GitHub Issues for bug reports and feature requests.

- For other requests, including security issues, please contact us at smartnoise@opendp.org.

Please let us know if you encounter a bug by creating an issue.

We appreciate all contributions. Pull requests with bug fixes are welcome without prior discussion.

If you plan to contribute new features, utility functions or extensions to the samples repository, please first open an issue and discuss the feature with us.

- A PR sent without prior discussion may be rejected, because we may be taking the examples in a different direction than you are aware of.

- After cloning the repository and setting up a virtual environment, install the requirements.

```shell
git clone https://github.com/opendifferentialprivacy/smartnoise-samples.git && cd smartnoise-samples
python3 -m venv venv
. venv/bin/activate
pip install -r requirements.txt

# if running locally
pip install jupyterlab

# launch locally
jupyter-lab
```