
- [29/11/2024] We have now added a demo page on ModelScope. Many thanks to @wangxingjun778!

- [24/10/2024] OpenR now supports MCTS reasoning (#24)! 🌲

- [15/10/2024] Our report is on arXiv!

- [12/10/2024] OpenR has been released! 🚀

| Feature | Contents |
|---|---|
| ✅ Process-supervision Data Generation | - OmegaPRM: Improve Mathematical Reasoning in Language Models by Automated Process Supervision |
| ✅ Online Policy Training | - RL Training: APPO, GRPO, TPPO |
| ✅ Generative and Discriminative PRM Training | - PRM Training: Supervised Training for PRMs<br>- Generative RM Training: Direct GenRM |
| ✅ Multiple Search Strategies | - Greedy Search<br>- Best-of-N<br>- Beam Search<br>- MCTS<br>- rStar: Mutual Reasoning Makes Smaller LLMs Stronger Problem-Solvers<br>- Critic-MCTS: Under Review |
| ✅ Test-time Computation and Scaling Law | TBA, see benchmark |

| Feature | TODO (High Priority, We Value Your Contribution!) |
|---|---|
| 👨‍💻 Data | - Re-implement Journey Learning |
| 👨‍💻 RL Training | - Distributed Training<br>- Reinforcement Fine-Tuning (RFT) #80 |
| 👨‍💻 PRM | - Larger-scale training<br>- GenRM-CoT implementation<br>- Soft-label training #57 |
| 👨‍💻 Reasoning | - Optimize code structure #53<br>- More tasks on reasoning (AIME, etc.) #53<br>- Multi-modal reasoning #82<br>- Reasoning in code generation #68<br>- Dots #75<br>- Consistency check<br>- Benchmarking |

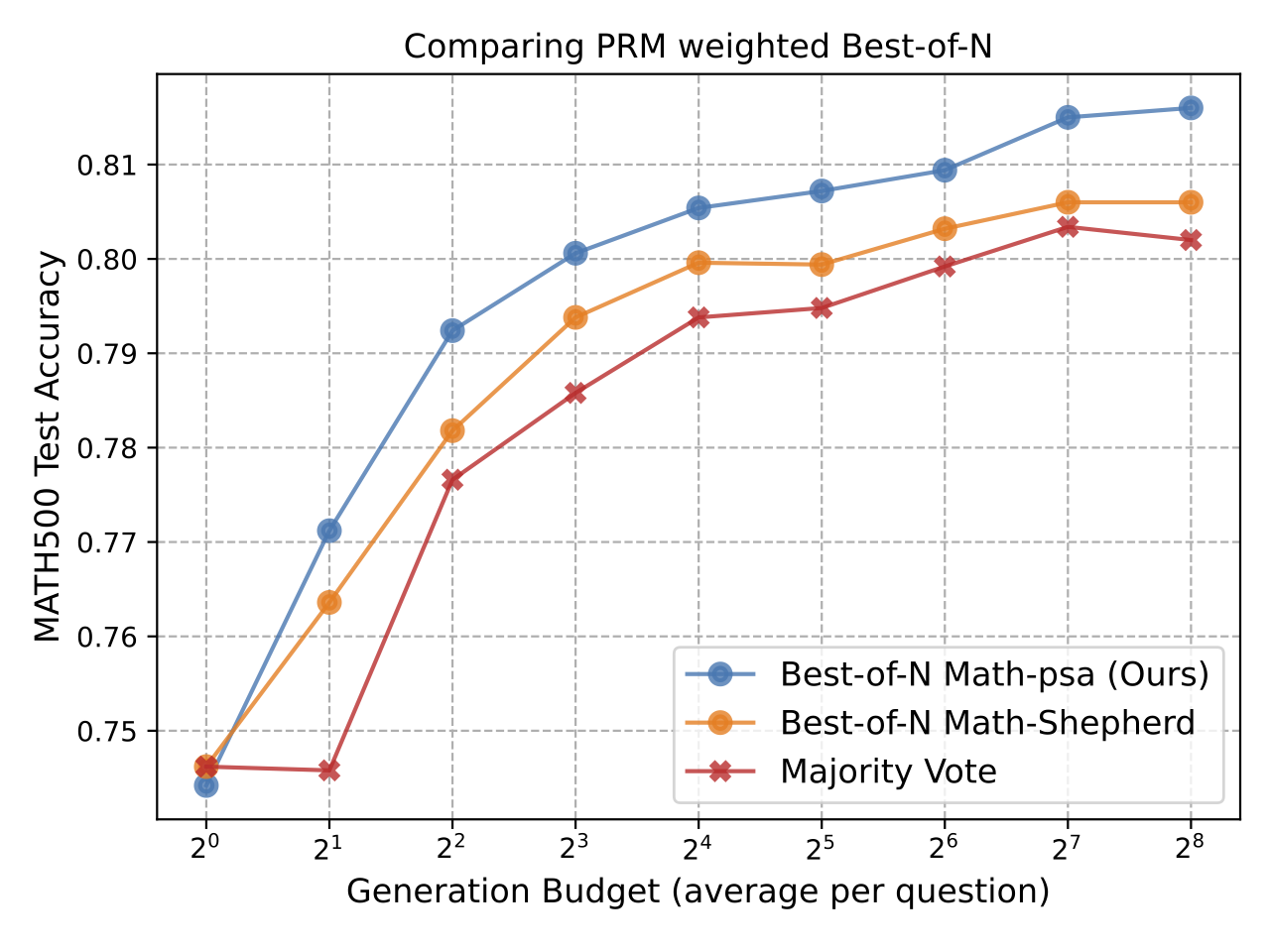

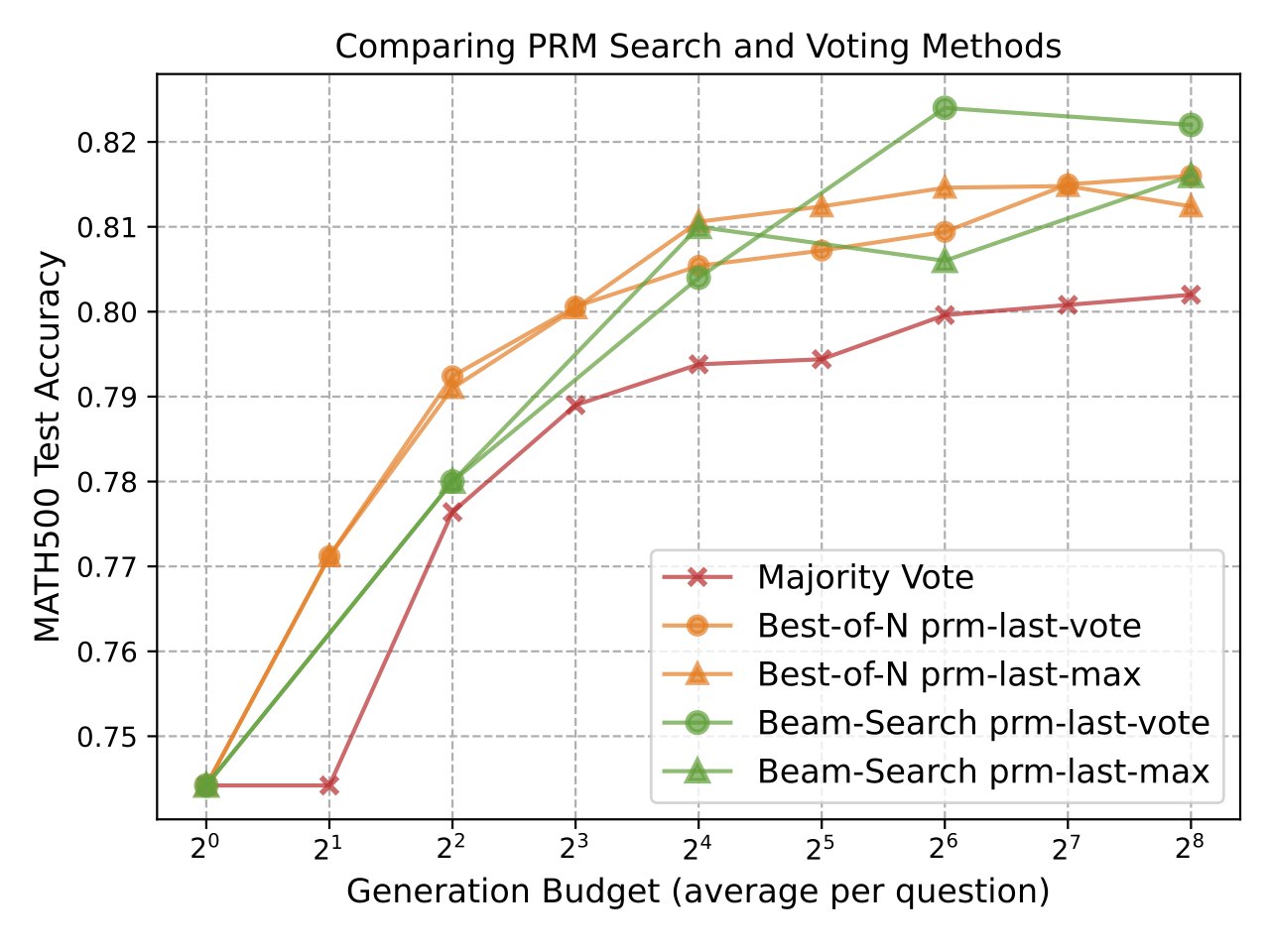

See Benchmark!

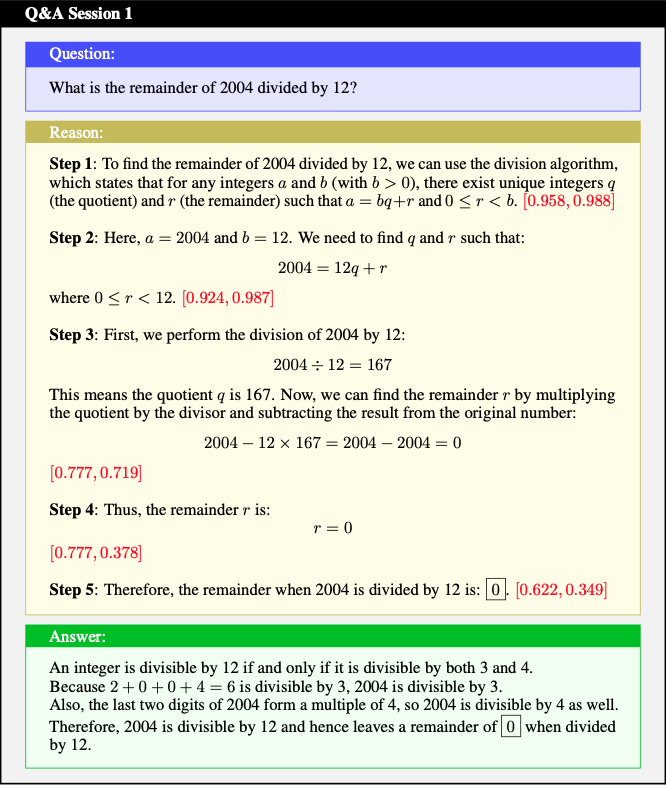

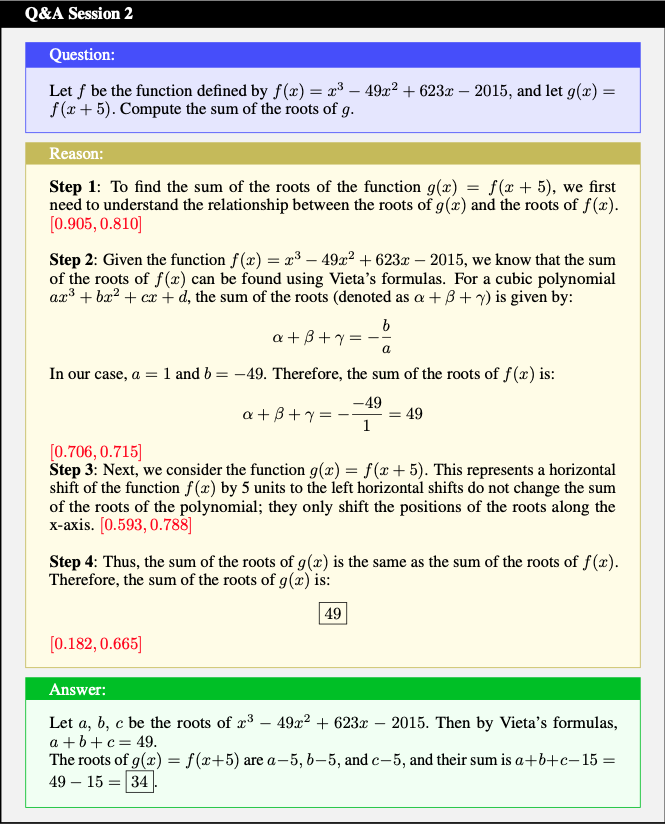

MATH-APS (Our Dataset)

MATH-psa (Our Process Reward Model)

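```bash
conda create -n open_reasoner python=3.10
conda activate open_reasoner
pip install -r requirements.txt
pip3 install "fschat[model_worker,webui]"
pip install -U pydantic
# Editable install of the latex2sympy copy bundled under envs/MATH
cd envs/MATH/latex2sympy
pip install -e .
# Return to the previous directory
cd -
```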

Before running the project, please ensure that all required base models are downloaded. The models used in this project include:

- Qwen2.5-Math-1.5B-Instruct
- Qwen2.5-Math-7B-Instruct
- peiyi9979/mistral-7b-sft
- peiyi9979/math-shepherd-mistral-7b-prm

To download these models, please refer to the Hugging Face tutorial for step-by-step guidance on downloading models from the Hub.

Please make sure that all models are saved in their directories according to the project setup before proceeding.
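If you prefer to script the download, here is a minimal sketch using `snapshot_download` from the `huggingface_hub` package. It assumes each model should end up in `$MODEL_BASE/<name as listed above>` and that the two Qwen models are hosted under the `Qwen` organisation on the Hub; `BASE_MODELS`, `local_dir_for` and `download_all` are illustrative helpers, not part of OpenR.

```python
from pathlib import Path

# Illustrative mapping: name as listed in this README -> Hugging Face repo ID.
# Assumption: the two Qwen models are published under the "Qwen" organisation.
BASE_MODELS = {
    "Qwen2.5-Math-1.5B-Instruct": "Qwen/Qwen2.5-Math-1.5B-Instruct",
    "Qwen2.5-Math-7B-Instruct": "Qwen/Qwen2.5-Math-7B-Instruct",
    "peiyi9979/mistral-7b-sft": "peiyi9979/mistral-7b-sft",
    "peiyi9979/math-shepherd-mistral-7b-prm": "peiyi9979/math-shepherd-mistral-7b-prm",
}


def local_dir_for(name: str, model_base: str) -> Path:
    """Assumed layout: each model lives in $MODEL_BASE/<name as listed>."""
    return Path(model_base) / name


def download_all(model_base: str) -> dict:
    """Download every base model and return {name: local directory}."""
    # Imported lazily so local_dir_for works without huggingface_hub installed.
    from huggingface_hub import snapshot_download

    downloaded = {}
    for name, repo_id in BASE_MODELS.items():
        target = local_dir_for(name, model_base)
        target.mkdir(parents=True, exist_ok=True)
        # local_dir places the files in `target` instead of only the HF cache.
        snapshot_download(repo_id=repo_id, local_dir=str(target))
        downloaded[name] = target
    return downloaded
```

For example, `download_all("/data/models")` would fetch all four models, after which `$MODEL_BASE` can point to `/data/models`.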

Before running inference, please modify the following variables in the scripts under reason/llm_service/ to set the appropriate base models for your usage:

- `$MODEL_BASE`: Set this to the directory where your models are stored.
- `$POLICY_MODEL_NAME`: Set this to the name of the policy model you wish to use.
- `$VALUE_MODEL_NAME`: Set this to the name of the value model you wish to use.
- `$NUM_LM_WORKER`: Set this to the number of language model (LM) workers to start.
- `$NUM_RM_WORKER`: Set this to the number of reward model (RM) workers to start.

Once these are set, the service scripts launch the model workers, and the evaluation scripts run inference with the different search techniques.
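A quick sanity check before launching the services can save a failed start. The helper below is hypothetical (not part of OpenR): it mirrors the five variables above, assumes each model lives at `$MODEL_BASE/<model name>`, and reports obvious mistakes.

```python
from pathlib import Path


def check_service_config(model_base, policy_model_name, value_model_name,
                         num_lm_worker, num_rm_worker):
    """Return a list of problems with the service settings (empty list = looks OK).

    Hypothetical helper, not part of OpenR. Assumes each model lives at
    $MODEL_BASE/<model name>, matching the variable descriptions above.
    """
    problems = []
    base = Path(model_base)
    if not base.is_dir():
        problems.append(f"MODEL_BASE is not a directory: {base}")
    # Both the policy model and the value (reward) model must exist under MODEL_BASE.
    for var, name in (("POLICY_MODEL_NAME", policy_model_name),
                      ("VALUE_MODEL_NAME", value_model_name)):
        if not (base / name).is_dir():
            problems.append(f"{var} not found: {base / name}")
    # Each service needs at least one worker process.
    for var, count in (("NUM_LM_WORKER", num_lm_worker),
                       ("NUM_RM_WORKER", num_rm_worker)):
        if not isinstance(count, int) or count < 1:
            problems.append(f"{var} must be a positive integer, got {count!r}")
    return problems
```

For example, `check_service_config("/data/models", "peiyi9979/mistral-7b-sft", "peiyi9979/math-shepherd-mistral-7b-prm", 1, 1)` returns an empty list only if both model directories exist.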

For example, to start the LM and RM services for the Math Shepherd model, run the following command:

```bash
sh reason/llm_service/create_service_math_shepherd.sh
```

To kill the server processes, we recommend using the following command:

```bash
tmux kill-session -t {Your Session Name}  # default is `FastChat`
```

Make sure the arguments (`--LM`, `--RM`) in the evaluation scripts align with the variables ($POLICY_MODEL_NAME, $VALUE_MODEL_NAME) used to launch the workers!

```bash
export PYTHONPATH=$(pwd)
sh scripts/eval/cot_greedy.sh
# Method: cot. Average result: ({'majority_vote': 0.734, 'total_completion_tokens': 559.13},)
```

```bash
sh scripts/eval/cot_rerank.sh
# Method: best_of_n. Average result: ({'majority_vote': 0.782,
#   'prm_min_max': 0.772,
#   'prm_min_vote': 0.792,
#   'prm_last_max': 0.776,
#   'prm_last_vote': 0.792,
#   'total_completion_tokens': 4431.268},)
```
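The `prm_*` keys in the best-of-N result appear to combine two choices: how one solution's step-level PRM scores are reduced to a single number (`min` over the steps, or the `last` step) and how the N solutions are combined (`max`: keep the best-scoring solution; `vote`: sum scores per distinct answer). The sketch below implements that reading. It is our interpretation of the metric names, not OpenR's evaluation code, and the `(answer, step_scores)` input format is an assumption.

```python
from collections import Counter, defaultdict

# Step-score reducers: how one solution's per-step PRM scores become one number.
REDUCERS = {"min": min, "last": lambda scores: scores[-1]}


def aggregate(candidates, mode):
    """Pick a final answer from N sampled solutions.

    candidates: list of (final_answer, step_scores) pairs, where step_scores are
        the PRM scores of that solution's reasoning steps (assumed input format).
    mode: "majority_vote", or "prm_<min|last>_<max|vote>".
    """
    if mode == "majority_vote":
        # Most frequent final answer; ties go to the answer seen first.
        return Counter(ans for ans, _ in candidates).most_common(1)[0][0]

    _, reducer, combiner = mode.split("_")  # "prm_min_vote" -> "prm", "min", "vote"
    scored = [(ans, REDUCERS[reducer](steps)) for ans, steps in candidates]
    if combiner == "max":
        # Answer of the single highest-scoring solution.
        return max(scored, key=lambda pair: pair[1])[0]
    if combiner == "vote":
        # Reward-weighted vote: sum solution scores per distinct answer.
        totals = defaultdict(float)
        for ans, score in scored:
            totals[ans] += score
        return max(totals, key=totals.get)
    raise ValueError(f"unknown mode: {mode}")
```

The modes can disagree: with three solutions answering `"a"` (last-step score 0.1 each, twice) and `"b"` (0.9), `majority_vote` picks `"a"` while `prm_last_vote` picks `"b"`.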

```bash
sh scripts/eval/beam_search.sh
# Method: beam_search. Average result: ({'majority_vote': 0.74, 'total_completion_tokens': 2350.492},)
```
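Beam search applies the reward model at every reasoning step rather than only to finished answers. Below is a minimal step-level sketch under stated assumptions: `propose_steps`, `score` and `is_done` are hypothetical callables standing in for the LM and RM services, and the pruning rule (keep the `beam_width` highest-scoring partial solutions) is the textbook variant, not necessarily what `scripts/eval/beam_search.sh` runs.

```python
import heapq


def prm_beam_search(question, propose_steps, score, beam_width=2, max_depth=3,
                    is_done=lambda steps: False):
    """Step-level beam search guided by a reward model (illustrative sketch).

    propose_steps(question, steps) -> candidate next steps (policy LM stand-in)
    score(question, steps)         -> float, reward-model score of a partial solution
    is_done(steps)                 -> True once a solution is complete
    All three callables are hypothetical stand-ins for the LM and RM services.
    """
    beams = [[]]  # each beam is a list of reasoning steps; start from an empty one
    for _ in range(max_depth):
        candidates = []
        for steps in beams:
            if is_done(steps):
                candidates.append(steps)  # finished beams compete unchanged
                continue
            for step in propose_steps(question, steps):
                candidates.append(steps + [step])
        if not candidates:
            break  # the policy proposed nothing; keep the current beams
        # Prune: keep only the beam_width highest-scoring partial solutions.
        beams = heapq.nlargest(beam_width, candidates,
                               key=lambda s: score(question, s))
        if all(is_done(s) for s in beams):
            break
    return max(beams, key=lambda s: score(question, s))
```

With a toy policy that always proposes the steps 1, 2 and 3 and a scorer that sums the steps, `prm_beam_search("toy", lambda q, s: [1, 2, 3], lambda q, s: sum(s))` returns `[3, 3, 3]`. Scoring partial solutions is what distinguishes this from best-of-N, which only ranks complete samples.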

```bash
sh scripts/eval/vanila_mcts.sh
```
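`vanila_mcts.sh` runs Monte Carlo Tree Search over reasoning steps. The sketch below shows the four classic UCT phases (selection, expansion, evaluation, backpropagation) in plain Python. It is illustrative only: `propose_steps`, `value` and `is_done` are hypothetical stand-ins for the policy and reward models, leaves are scored with the value function instead of full rollouts, and OpenR's implementation may differ in all of these choices.

```python
import math


class Node:
    def __init__(self, steps, parent=None):
        self.steps = steps  # partial solution: list of reasoning steps
        self.parent = parent
        self.children = []
        self.visits = 0
        self.value_sum = 0.0

    def uct(self, c):
        # Unvisited children are tried first.
        if self.visits == 0:
            return float("inf")
        exploit = self.value_sum / self.visits
        explore = c * math.sqrt(math.log(self.parent.visits) / self.visits)
        return exploit + explore


def mcts(propose_steps, value, is_done, n_simulations=50, c=1.4):
    """Vanilla UCT search over reasoning steps (illustrative sketch).

    propose_steps(steps) -> candidate next steps (policy LM stand-in)
    value(steps)         -> float in [0, 1] (value/reward model stand-in)
    is_done(steps)       -> True for a complete solution
    """
    root = Node([])
    for _ in range(n_simulations):
        node = root
        # 1. Selection: descend by UCT while the node has been expanded.
        while node.children:
            node = max(node.children, key=lambda ch: ch.uct(c))
        # 2. Expansion: add one child per proposed step, then evaluate the first.
        if not is_done(node.steps):
            node.children = [Node(node.steps + [s], node) for s in propose_steps(node.steps)]
            if node.children:
                node = node.children[0]
        # 3. Evaluation: score the leaf with the value function (no rollout).
        reward = value(node.steps)
        # 4. Backpropagation: update statistics up to the root.
        while node is not None:
            node.visits += 1
            node.value_sum += reward
            node = node.parent
    # Read off a solution by following the most-visited children.
    node = root
    while node.children:
        node = max(node.children, key=lambda ch: ch.visits)
    return node.steps
```

On a toy problem where each step is 0 or 1, solutions are three steps long and only all-ones prefixes score 1.0, `mcts(lambda s: [0, 1], lambda s: 1.0 if all(x == 1 for x in s) else 0.0, lambda s: len(s) == 3, n_simulations=200, c=1.0)` returns `[1, 1, 1]`. The constant `c` trades off exploiting high-value branches against exploring rarely visited ones.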

Before training, set `$dataset_path`, `$model_name_or_path` and `$prm_name_or_path` in `train/mat/scripts/train_llm.sh`, then run:

```bash
cd train/mat/scripts
bash train_llm.sh
```

To train a process reward model (PRM), run the following from the repository root:

```bash
cd prm/code

# single GPU
python finetune_qwen_single_gpu.py --model_path $YOUR_MODEL_PATH \
                                   --train_data_path $TRAIN_DATA_PATH \
                                   --test_data_path $TEST_DATA_PATH

# multi-GPU
torchrun --nproc_per_node=2 finetune_qwen.py --model_path $YOUR_MODEL_PATH \
                                             --data_path $YOUR_DATA_FOLDER_PATH \
                                             --datasets both
```

Every contribution is valuable to the community.

Thank you for your interest in OpenR! 🥰 We are deeply committed to the open-source community, and we welcome contributions from everyone. Your efforts, whether big or small, help us grow and improve. Contributions aren’t limited to code: answering questions, helping others, enhancing our documentation, and sharing the project are equally impactful.

Feel free to check out the contribution guidance!

- Add More Comprehensive Evaluations on RL Training and Search Strategies
- Scaling the Prover-Verifier Model Size
- Support Self-improvement Training

The OpenR community is maintained by:

- Openreasoner Team (openreasoner@gmail.com)

OpenR is released under the MIT License.

If you find our resources helpful, please cite our work:

```bibtex
@misc{wang2024tutorial,
  author = {Jun Wang},
  title = {A Tutorial on LLM Reasoning: Relevant Methods Behind ChatGPT o1},
  year = {2024},
  url = {https://github.com/openreasoner/openr/blob/main/reports/tutorial.pdf},
  note = {Available on GitHub}
}

@article{wang2024openr,
  title={OpenR: An Open Source Framework for Advanced Reasoning with Large Language Models},
  author={Wang, Jun and Fang, Meng and Wan, Ziyu and Wen, Muning and Zhu, Jiachen and Liu, Anjie and Gong, Ziqin and Song, Yan and Chen, Lei and Ni, Lionel M and others},
  journal={arXiv preprint arXiv:2410.09671},
  year={2024}
}
```

