Wentao Bao, Lele Chen, Libing Zeng, Zhong Li, Yi Xu, Junsong Yuan, Yu Kong

This is an official PyTorch implementation of USST, published at ICCV 2023. We release the dataset annotations (H2O-PT and EgoPAT3D-DT), the PyTorch code (training, inference, and demo), and the pretrained model weights.

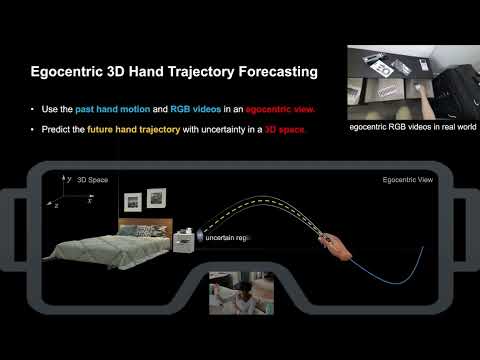

Egocentric 3D Hand Trajectory Forecasting (Ego3D-HTF) aims to predict the future 3D hand trajectory (shown in red) given the past observation of an egocentric RGB video and the historical trajectory (shown in blue). Compared with predicting the trajectory in 2D space, predicting it in global 3D space is more valuable in practice for understanding human intention in AR/VR applications.

Brief Intro. [YouTube]

- Create a conda virtual environment with python 3.7:

      conda create -n usst python=3.7
      conda activate usst

- Install the latest PyTorch:

      pip install torch torchvision --extra-index-url https://download.pytorch.org/whl/cu113

- Install other python packages:

      pip install -r requirements.txt

- [Optional] Install `ffmpeg` if you need to visualize results by GIF animation:

      conda install ffmpeg

- The EgoPAT3D-DT dataset can be downloaded here: OneDrive. It was collected by re-annotating the raw RGB-D recordings from the EgoPAT3D Dataset.

- After downloading, place the `tar.gz` file under `data/EgoPAT3D/` and extract it: `tar zxvf EgoPAT3D-postproc.tar.gz`. The dataset folder should be structured as follows.

      data/EgoPAT3D/EgoPAT3D-postproc
      |-- odometry           # visual odometry data, e.g., "1/*/*.npy"
      |-- trajectory_repair  # trajectory data, e.g., "1/*/*.pkl"
      |-- video_clips_hand   # video clips, e.g., "1/*/*.mp4"
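Before launching a long training run, it can help to confirm that the archive was extracted into the layout shown above. The following is a minimal sketch, not part of the released code: the helper name `missing_subdirs` is hypothetical, and it only checks that the three listed sub-folders exist, not their contents.

```python
from pathlib import Path

# Sub-folders taken from the layout listed above; the helper itself is hypothetical.
EXPECTED_SUBDIRS = ("odometry", "trajectory_repair", "video_clips_hand")


def missing_subdirs(root, expected=EXPECTED_SUBDIRS):
    """Return the expected sub-folders that do not exist under `root`."""
    root = Path(root)
    return [name for name in expected if not (root / name).is_dir()]


if __name__ == "__main__":
    missing = missing_subdirs("data/EgoPAT3D/EgoPAT3D-postproc")
    if missing:
        print("Missing sub-folders:", ", ".join(missing))
    else:
        print("Dataset layout looks complete.")
```

The same helper can presumably be reused for the H2O-PT layout by passing `data/H2O/Ego3DTraj` as the root and `("splits", "traj", "video")` as `expected`.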

- The H2O-PT dataset can be downloaded here: OneDrive. It was collected by re-annotating the H2O Dataset.

- After downloading, place the `tar.gz` file under `data/H2O/` and extract it: `tar zxvf Ego3DTraj.tar.gz`. The dataset folder should be structured as follows.

      data/H2O/Ego3DTraj
      |-- splits  # training splits ("train.txt", "val.txt", "test.txt")
      |-- traj    # trajectory data from pose (PT), e.g., "*.pkl", ...
      |-- video   # video clips, e.g., "*.mp4", ...

a. Train the proposed ViT-based USST model on the EgoPAT3D-DT dataset using GPU_ID=0 and 8 workers:

    cd exp
    nohup bash train.sh 0 8 usst_vit_3d >train_egopat3d.log 2>&1 &

b. Train the proposed ViT-based USST model on the H2O-PT dataset using GPU_ID=0 and 8 workers:

    cd exp
    nohup bash trainval_h2o.sh 0 8 h2o/usst_vit_3d train >train_h2o.log 2>&1 &

c. This repo contains TensorboardX support to monitor the training status:

    # open a new terminal
    cd output/EgoPAT3D/usst_vit_3d
    tensorboard --logdir=./logs
    # open the browser with the prompted localhost url.

d. Check out other model variants in the `config/` folder, including the ResNet-18 backbones (`usst_res18_xxx.yml`), 3D/2D trajectory targets (`usst_xxx_3d/2d.yml`), and the 3D target in the local camera reference (`usst_xxx_local3d`).
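The naming pattern of the config variants can be sketched as a small helper that composes a config path from a backbone and a trajectory target. This is only an illustration of the pattern described above: the helper `config_path` is hypothetical, the `.yml` suffix for the `local3d` variant is an assumption, and not every backbone/target combination is guaranteed to exist in the `config/` folder.

```python
from pathlib import Path

# Backbone and target tokens as they appear in the config names above.
BACKBONES = ("vit", "res18")
TARGETS = ("3d", "2d", "local3d")


def config_path(backbone, target, config_dir="config"):
    """Compose a path such as config/usst_vit_3d.yml (hypothetical helper)."""
    if backbone not in BACKBONES:
        raise ValueError(f"unknown backbone: {backbone!r}")
    if target not in TARGETS:
        raise ValueError(f"unknown target: {target!r}")
    return Path(config_dir) / f"usst_{backbone}_{target}.yml"


if __name__ == "__main__":
    # List candidate config files and whether each one is present on disk.
    for backbone in BACKBONES:
        for target in TARGETS:
            path = config_path(backbone, target)
            status = "found" if path.is_file() else "not found"
            print(f"{path.as_posix()}: {status}")
```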

a. Test and evaluate a trained model, e.g., usst_vit_3d, on EgoPAT3D-DT testing set:

    cd exp
    bash test.sh 0 8 usst_vit_3d

b. Test and evaluate a trained model, e.g., usst_res18_3d, on H2O-PT testing set:

    cd exp
    bash trainval_h2o.sh 0 8 usst_res18_3d eval

Evaluation results will be cached in `output/[EgoPAT3D|H2O]/usst_vit_3d` and reported on the terminal.

c. To evaluate the 2D trajectory forecasting performance of a pretrained 3D target model, set `TEST.eval_space: norm2d` in the config file `usst_xxx_3d.yml`, then run `test.sh` (or `trainval_h2o.sh`) again.
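The setting `TEST.eval_space` reads as a dotted path: the key `eval_space` inside the `TEST` section of the config. As a rough illustration of that mapping, the sketch below applies a dotted-key override to a plain nested dictionary. How the repo actually loads and overrides its YAML configs is not shown here; the helper name and the placeholder starting value are assumptions.

```python
import copy


def set_by_dotted_key(cfg, dotted_key, value):
    """Return a copy of the nested dict `cfg` with `dotted_key` set to `value`."""
    new_cfg = copy.deepcopy(cfg)
    node = new_cfg
    *parents, leaf = dotted_key.split(".")
    for name in parents:
        # Walk down one section at a time, creating it if it is missing.
        node = node.setdefault(name, {})
    node[leaf] = value
    return new_cfg


# Stand-in for a parsed usst_xxx_3d.yml; the starting value is a placeholder.
cfg = {"TEST": {"eval_space": "<original value>"}}
cfg_2d = set_by_dotted_key(cfg, "TEST.eval_space", "norm2d")
print(cfg_2d["TEST"]["eval_space"])  # norm2d
```

Returning a copy keeps the original 3D evaluation config untouched, so both settings can be compared side by side.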

d. To test without training, download our pretrained model from here: OneDrive. After downloading the zip file, place it under the `output/` folder, e.g., `output/EgoPAT3D/usst_res18_3d.zip`, and extract it: `cd output/EgoPAT3D && unzip usst_res18_3d.zip`. Then run `test.sh` (or `trainval_h2o.sh`).
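On systems without the `unzip` command, the extraction step can be done with Python's standard `zipfile` module. This is a minimal sketch under the assumption that the archive should be extracted into the folder it sits in, mirroring `cd output/EgoPAT3D && unzip usst_res18_3d.zip`; the helper name `extract_checkpoint` is hypothetical.

```python
import zipfile
from pathlib import Path


def extract_checkpoint(zip_path):
    """Extract `zip_path` into its own parent folder and list its members."""
    zip_path = Path(zip_path)
    if not zip_path.is_file():
        raise FileNotFoundError(f"checkpoint archive not found: {zip_path}")
    with zipfile.ZipFile(zip_path) as archive:
        # Same effect as running `unzip` from inside the archive's folder.
        archive.extractall(zip_path.parent)
        return sorted(archive.namelist())
```

For example, `extract_checkpoint("output/EgoPAT3D/usst_res18_3d.zip")` would unpack the archive under `output/EgoPAT3D/` and return the names of the extracted members.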

e. [Optional] Show the demos of the testing examples in our paper:

    python demo_paper.py --config config/usst_vit_3d.yml --tag usst_vit_3d

If you find the code useful in your research, please cite:

    @inproceedings{BaoUSST_ICCV23,
      author = "Wentao Bao and Lele Chen and Libing Zeng and Zhong Li and Yi Xu and Junsong Yuan and Yu Kong",
      title = "Uncertainty-aware State Space Transformer for Egocentric 3D Hand Trajectory Forecasting",
      booktitle = "International Conference on Computer Vision (ICCV)",
      year = "2023"
    }

Please also cite the EgoPAT3D paper if you use our EgoPAT3D-DT annotations:

    @InProceedings{Li_2022_CVPR,
      title = {Egocentric Prediction of Action Target in 3D},
      author = {Li, Yiming and Cao, Ziang and Liang, Andrew and Liang, Benjamin and Chen, Luoyao and Zhao, Hang and Feng, Chen},
      booktitle = {Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR)},
      month = {June},
      year = {2022}
    }

and the H2O paper if you use our H2O-PT annotations:

    @InProceedings{Kwon_2021_ICCV,
      author = {Kwon, Taein and Tekin, Bugra and St\"uhmer, Jan and Bogo, Federica and Pollefeys, Marc},
      title = {H2O: Two Hands Manipulating Objects for First Person Interaction Recognition},
      booktitle = {Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV)},
      month = {October},
      year = {2021},
      pages = {10138-10148}
    }

- Code and checkpoints are licensed under the Apache 2.0 License.

- The EgoPAT3D-DT dataset follows the license of EgoPAT3D, which is the MIT License.

- The H2O-PT dataset is licensed under the ETH Zurich H2O Dataset Terms of Use.

We sincerely thank the owners of the following source code repos, which are referenced by our released code: EgoPAT3D, hoi_forecast, pyk4a, RAFT, and NewCRFs.