- Create the k3d cluster

┌─[orbite]@[orbite-desktop]:~

└──> $ k3d cluster create

- List the cluster

┌─[orbite]@[orbite-desktop]:~/Iniciativa-Devops/kube-news

└──> $ k3d cluster list

NAME SERVERS AGENTS LOADBALANCER

k3s-default 1/1 0/0 true

┌─[orbite]@[orbite-desktop]:~/Iniciativa-Devops/kube-news

└──> $ docker container ls

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

6256de9fec2c rancher/k3d-proxy:5.2.2 "/bin/sh -c nginx-pr…" 2 minutes ago Up 2 minutes 80/tcp, 0.0.0.0:38907->6443/tcp k3d-k3s-default-serverlb

ec40d1598e57 rancher/k3s:v1.21.7-k3s1 "/bin/k3s server --t…" 3 minutes ago Up 2 minutes k3d-k3s-default-server-0

┌─[orbite]@[orbite-desktop]:~/Iniciativa-Devops/kube-news

└──> $ kubectl get nodes

NAME STATUS ROLES AGE VERSION

k3d-k3s-default-server-0 Ready control-plane,master 3m16s v1.21.7+k3s1

- Delete the cluster

┌─[orbite]@[orbite-desktop]:~/Iniciativa-Devops/kube-news

└──> $ k3d cluster delete

INFO[0000] Deleting cluster 'k3s-default'

INFO[0001] Deleting cluster network 'k3d-k3s-default'

INFO[0001] Deleting image volume 'k3d-k3s-default-images'

INFO[0001] Removing cluster details from default kubeconfig...

INFO[0001] Removing standalone kubeconfig file (if there is one)...

INFO[0001] Successfully deleted cluster k3s-default!

- Create the cluster without a load balancer

┌─[orbite]@[orbite-desktop]:~/Iniciativa-Devops/kube-news

└──> $ k3d cluster create --no-lb

INFO[0000] Prep: Network

INFO[0000] Created network 'k3d-k3s-default'

INFO[0000] Created volume 'k3d-k3s-default-images'

INFO[0000] Starting new tools node...

INFO[0000] Starting Node 'k3d-k3s-default-tools'

INFO[0001] Creating node 'k3d-k3s-default-server-0'

INFO[0001] Using the k3d-tools node to gather environment information

INFO[0001] HostIP: using network gateway 172.20.0.1 address

INFO[0001] Starting cluster 'k3s-default'

INFO[0001] Starting servers...

INFO[0001] Starting Node 'k3d-k3s-default-server-0'

INFO[0008] All agents already running.

INFO[0008] All helpers already running.

INFO[0008] Injecting '172.20.0.1 host.k3d.internal' into /etc/hosts of all nodes...

INFO[0008] Injecting records for host.k3d.internal and for 1 network members into CoreDNS configmap...

INFO[0010] Cluster 'k3s-default' created successfully!

INFO[0010] You can now use it like this:

kubectl cluster-info

┌─[orbite]@[orbite-desktop]:~/Iniciativa-Devops/kube-news

└──> $ kubectl get nodes

NAME STATUS ROLES AGE VERSION

k3d-k3s-default-server-0 Ready control-plane,master 2m58s v1.21.7+k3s1

┌─[orbite]@[orbite-desktop]:~/Iniciativa-Devops/kube-news

└──> $ docker container ls

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

79a0c43eff01 rancher/k3s:v1.21.7-k3s1 "/bin/k3s server --t…" 3 minutes ago Up 3 minutes 0.0.0.0:35657->6443/tcp k3d-k3s-default-server-0

┌─[orbite]@[orbite-desktop]:~/Iniciativa-Devops/kube-news

└──> $ k3d cluster list

NAME SERVERS AGENTS LOADBALANCER

k3s-default 1/1 0/0 false

- Create a cluster with 3 servers and 3 agents

┌─[orbite]@[orbite-desktop]:~/Iniciativa-Devops/kube-news

└──> $ k3d cluster create meucluster --servers 3 --agents 3

┌─[orbite]@[orbite-desktop]:~/Iniciativa-Devops/kube-news

└──> $ kubectl get nodes

NAME STATUS ROLES AGE VERSION

k3d-meucluster-agent-0 Ready <none> 47s v1.21.7+k3s1

k3d-meucluster-agent-1 Ready <none> 46s v1.21.7+k3s1

k3d-meucluster-agent-2 Ready <none> 46s v1.21.7+k3s1

k3d-meucluster-server-0 Ready control-plane,etcd,master 84s v1.21.7+k3s1

k3d-meucluster-server-1 Ready control-plane,etcd,master 67s v1.21.7+k3s1

k3d-meucluster-server-2 Ready control-plane,etcd,master 55s v1.21.7+k3s1

┌─[orbite]@[orbite-desktop]:~/Iniciativa-Devops/kube-news

└──> $ docker container ls

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

e57f4c3a6262 rancher/k3d-proxy:5.2.2 "/bin/sh -c nginx-pr…" 2 minutes ago Up About a minute 80/tcp, 0.0.0.0:35805->6443/tcp k3d-meucluster-serverlb

f54f8f6a77d3 rancher/k3s:v1.21.7-k3s1 "/bin/k3s agent" 2 minutes ago Up About a minute k3d-meucluster-agent-2

cfde6489444a rancher/k3s:v1.21.7-k3s1 "/bin/k3s agent" 2 minutes ago Up About a minute k3d-meucluster-agent-1

b480f47efbb0 rancher/k3s:v1.21.7-k3s1 "/bin/k3s agent" 2 minutes ago Up About a minute k3d-meucluster-agent-0

40a4f34a1df0 rancher/k3s:v1.21.7-k3s1 "/bin/k3s server --t…" 2 minutes ago Up About a minute k3d-meucluster-server-2

c8c3e962a870 rancher/k3s:v1.21.7-k3s1 "/bin/k3s server --t…" 2 minutes ago Up 2 minutes k3d-meucluster-server-1

2ad2d32f5d5f rancher/k3s:v1.21.7-k3s1 "/bin/k3s server --c…" 2 minutes ago Up 2 minutes k3d-meucluster

┌─[orbite]@[orbite-desktop]:~/Iniciativa-Devops/kube-news

└──> $ k3d cluster list

NAME SERVERS AGENTS LOADBALANCER

meucluster 3/3 3/3 true
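These notes create `meucluster` more than once, and `k3d cluster create` is expected to fail when a cluster with that name already exists. A minimal sketch of a guard follows. The helper names `cluster_in_list` and `ensure_cluster` are hypothetical, and the parsing assumes the `k3d cluster list` column layout shown above.

```shell
#!/bin/sh
# Succeeds (exit 0) when a cluster named $1 is present in `k3d cluster list`
# output supplied on stdin. The header row is skipped.
cluster_in_list() {
  awk -v name="$1" 'NR > 1 && $1 == name { found = 1 } END { exit !found }'
}

# Create the cluster only if it is not already there.
# Arguments: name, server count, agent count.
ensure_cluster() {
  if k3d cluster list | cluster_in_list "$1"; then
    echo "cluster '$1' already exists"
  else
    k3d cluster create "$1" --servers "$2" --agents "$3"
  fi
}
```

Usage: `ensure_cluster meucluster 3 3`. To start from scratch, run `k3d cluster delete meucluster` first.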

- Kubectl API resources

┌─[orbite]@[orbite-desktop]:~/Iniciativa-Devops/kube-news

└──> $ kubectl api-resources

NAME SHORTNAMES APIVERSION NAMESPACED KIND

bindings v1 true Binding

componentstatuses cs v1 false ComponentStatus

configmaps cm v1 true ConfigMap

endpoints ep v1 true Endpoints

events ev v1 true Event

limitranges limits v1 true LimitRange

namespaces ns v1 false Namespace

nodes no v1 false Node

persistentvolumeclaims pvc v1 true PersistentVolumeClaim

persistentvolumes pv v1 false PersistentVolume

pods po v1 true Pod

podtemplates v1 true PodTemplate

replicationcontrollers rc v1 true ReplicationController

resourcequotas quota v1 true ResourceQuota

secrets v1 true Secret

serviceaccounts sa v1 true ServiceAccount

services svc v1 true Service

mutatingwebhookconfigurations admissionregistration.k8s.io/v1 false MutatingWebhookConfiguration

validatingwebhookconfigurations admissionregistration.k8s.io/v1 false ValidatingWebhookConfiguration

customresourcedefinitions crd,crds apiextensions.k8s.io/v1 false CustomResourceDefinition

apiservices apiregistration.k8s.io/v1 false APIService

controllerrevisions apps/v1 true ControllerRevision

daemonsets ds apps/v1 true DaemonSet

deployments deploy apps/v1 true Deployment

replicasets rs apps/v1 true ReplicaSet

statefulsets sts apps/v1 true StatefulSet

tokenreviews authentication.k8s.io/v1 false TokenReview

localsubjectaccessreviews authorization.k8s.io/v1 true LocalSubjectAccessReview

selfsubjectaccessreviews authorization.k8s.io/v1 false SelfSubjectAccessReview

selfsubjectrulesreviews authorization.k8s.io/v1 false SelfSubjectRulesReview

subjectaccessreviews authorization.k8s.io/v1 false SubjectAccessReview

horizontalpodautoscalers hpa autoscaling/v1 true HorizontalPodAutoscaler

cronjobs cj batch/v1 true CronJob

jobs batch/v1 true Job

certificatesigningrequests csr certificates.k8s.io/v1 false CertificateSigningRequest

leases coordination.k8s.io/v1 true Lease

endpointslices discovery.k8s.io/v1 true EndpointSlice

events ev events.k8s.io/v1 true Event

ingresses ing extensions/v1beta1 true Ingress

flowschemas flowcontrol.apiserver.k8s.io/v1beta1 false FlowSchema

prioritylevelconfigurations flowcontrol.apiserver.k8s.io/v1beta1 false PriorityLevelConfiguration

helmchartconfigs helm.cattle.io/v1 true HelmChartConfig

helmcharts helm.cattle.io/v1 true HelmChart

addons k3s.cattle.io/v1 true Addon

nodes metrics.k8s.io/v1beta1 false NodeMetrics

pods metrics.k8s.io/v1beta1 true PodMetrics

ingressclasses networking.k8s.io/v1 false IngressClass

ingresses ing networking.k8s.io/v1 true Ingress

networkpolicies netpol networking.k8s.io/v1 true NetworkPolicy

runtimeclasses node.k8s.io/v1 false RuntimeClass

poddisruptionbudgets pdb policy/v1 true PodDisruptionBudget

podsecuritypolicies psp policy/v1beta1 false PodSecurityPolicy

clusterrolebindings rbac.authorization.k8s.io/v1 false ClusterRoleBinding

clusterroles rbac.authorization.k8s.io/v1 false ClusterRole

rolebindings rbac.authorization.k8s.io/v1 true RoleBinding

roles rbac.authorization.k8s.io/v1 true Role

priorityclasses pc scheduling.k8s.io/v1 false PriorityClass

csidrivers storage.k8s.io/v1 false CSIDriver

csinodes storage.k8s.io/v1 false CSINode

csistoragecapacities storage.k8s.io/v1beta1 true CSIStorageCapacity

storageclasses sc storage.k8s.io/v1 false StorageClass

volumeattachments storage.k8s.io/v1 false VolumeAttachment

ingressroutes traefik.containo.us/v1alpha1 true IngressRoute

ingressroutetcps traefik.containo.us/v1alpha1 true IngressRouteTCP

ingressrouteudps traefik.containo.us/v1alpha1 true IngressRouteUDP

middlewares traefik.containo.us/v1alpha1 true Middleware

serverstransports traefik.containo.us/v1alpha1 true ServersTransport

tlsoptions traefik.containo.us/v1alpha1 true TLSOption

tlsstores traefik.containo.us/v1alpha1 true TLSStore

traefikservices traefik.containo.us/v1alpha1 true TraefikService

┌─[orbite]@[orbite-desktop]:~/Iniciativa-Devops/kube-news

└──> $ kubectl api-resources | grep pod

pods po v1 true Pod

podtemplates v1 true PodTemplate

horizontalpodautoscalers hpa autoscaling/v1 true HorizontalPodAutoscaler

pods metrics.k8s.io/v1beta1 true PodMetrics

poddisruptionbudgets pdb policy/v1 true PodDisruptionBudget

podsecuritypolicies psp policy/v1beta1 false PodSecurityPolicy
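Piping through `grep` works, but the SHORTNAMES column is optional, so column positions shift from row to row. A small sketch that reads the NAMESPACED column from the right instead is shown below. The helper name `is_namespaced` is hypothetical.

```shell
#!/bin/sh
# Print the NAMESPACED column ("true" or "false") for resource $1, reading
# `kubectl api-resources` output on stdin. SHORTNAMES may be empty, so the
# column is addressed from the right: KIND is last, NAMESPACED is next to last.
# Only the first matching row is used (some names, such as pods, appear twice).
is_namespaced() {
  awk -v res="$1" '$1 == res { print $(NF - 1); exit }'
}
```

Usage: `kubectl api-resources | is_namespaced pods`. kubectl can also filter on its own with `kubectl api-resources --namespaced=true`, and `kubectl explain pod` describes the fields of a kind.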

- Recreate my cluster with port 30000 published; only the default ClusterIP service exists so far

┌─[orbite]@[orbite-desktop]:~/Iniciativa-Devops/kube-news

└──> $ k3d cluster create meucluster --servers 3 --agents 3 -p "30000:30000@loadbalancer"

┌─[orbite]@[orbite-desktop]:~/Iniciativa-Devops/kube-news

└──> $ kubectl get services

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

kubernetes ClusterIP 10.43.0.1 <none> 443/TCP 63s

- Create the Dockerfile and .dockerignore, then build the image

┌─[orbite]@[orbite-desktop]:~/Iniciativa-Devops/kube-news/src

└──> $ docker build -t orbite82/kube-new:v1 -f Dockerfile .

┌─[orbite]@[orbite-desktop]:~/Iniciativa-Devops/kube-news/src

└──> $ docker image ls

REPOSITORY TAG IMAGE ID CREATED SIZE

orbite82/kube-new v1 6dfa208425a9 40 seconds ago 936MB

- Docker push

┌─[orbite]@[orbite-desktop]:~/Iniciativa-Devops/kube-news/src

└──> $ docker push orbite82/kube-new:v1

- Push the latest tag

┌─[orbite]@[orbite-desktop]:~/Iniciativa-Devops/kube-news/src

└──> $ docker tag orbite82/kube-new:v1 orbite82/kube-new:latest

┌─[orbite]@[orbite-desktop]:~/Iniciativa-Devops/kube-news/src

└──> $ docker push orbite82/kube-new:latest
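The build, tag and push steps above repeat for every version, so they can be wrapped in one function. This is only a sketch: `image_ref` and `release_image` are hypothetical helper names, and the commands inside are the same `docker build`, `docker tag` and `docker push` calls used in these notes.

```shell
#!/bin/sh
# Compose "repository:tag".
image_ref() {
  printf '%s:%s' "$1" "$2"
}

# Build once, then publish the same image under the version tag and "latest".
# Arguments: repository, version tag, build context directory.
release_image() {
  versioned=$(image_ref "$1" "$2")
  latest=$(image_ref "$1" latest)
  docker build -t "$versioned" "$3" &&
    docker tag "$versioned" "$latest" &&
    docker push "$versioned" &&
    docker push "$latest"
}
```

Usage, from the `src` directory: `release_image orbite82/kube-new v2 .`. Pinning the version tag in the Deployment (rather than `latest`) keeps rollbacks meaningful, because `latest` moves with every push.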

- Create the k8s folder

┌─[orbite]@[orbite-desktop]:~/Iniciativa-Devops/kube-news

└──> $ mkdir k8s

┌─[orbite]@[orbite-desktop]:~/Iniciativa-Devops/kube-news

└──> $ cd k8s/

- Apply the deployment

┌─[orbite]@[orbite-desktop]:~/Iniciativa-Devops/kube-news/k8s

└──> $ kubectl apply -f deployment.yaml

deployment.apps/postgre created

service/postgre created

┌─[orbite]@[orbite-desktop]:~/Iniciativa-Devops/kube-news/k8s

└──> $ kubectl get pods

NAME READY STATUS RESTARTS AGE

postgre-786bc7b694-hrh4k 1/1 Running 0 43s

┌─[orbite]@[orbite-desktop]:~/Iniciativa-Devops/kube-news/k8s

└──> $ kubectl get deploy

NAME READY UP-TO-DATE AVAILABLE AGE

postgre 1/1 1 1 10m

┌─[orbite]@[orbite-desktop]:~/Iniciativa-Devops/kube-news/k8s

└──> $ kubectl get all

NAME READY STATUS RESTARTS AGE

pod/postgre-786bc7b694-hrh4k 1/1 Running 0 2m57s

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

service/kubernetes ClusterIP 10.43.0.1 <none> 443/TCP 47m

service/postgre ClusterIP 10.43.105.88 <none> 5432/TCP 10m

NAME READY UP-TO-DATE AVAILABLE AGE

deployment.apps/postgre 1/1 1 1 10m

NAME DESIRED CURRENT READY AGE

replicaset.apps/postgre-786bc7b694 1 1 1 2m57s

replicaset.apps/postgre-8d956584f 0 0 0 10m

- Port Forward

┌─[orbite]@[orbite-desktop]:~/Iniciativa-Devops/kube-news/k8s

└──> $ kubectl port-forward service/postgre 5432:5432

Forwarding from 127.0.0.1:5432 -> 5432

Forwarding from [::1]:5432 -> 5432
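While the port-forward is running, the database can also be checked from a second terminal. The sketch below assumes `psql` is installed locally and uses the credentials from the manifest in these notes. The helpers `urlencode_min` and `pg_uri` are hypothetical. The password contains `#`, which has to be percent-encoded inside a connection URI.

```shell
#!/bin/sh
# Percent-encode the few characters that commonly break a connection URI.
# This is deliberately minimal and not a complete URL encoder.
urlencode_min() {
  printf '%s' "$1" |
    sed -e 's/%/%25/g' -e 's/#/%23/g' -e 's/@/%40/g' -e 's/:/%3A/g' -e 's|/|%2F|g'
}

# Build a PostgreSQL connection URI.
# Arguments: user, password, host, port, database.
pg_uri() {
  printf 'postgresql://%s:%s@%s:%s/%s' "$1" "$(urlencode_min "$2")" "$3" "$4" "$5"
}
```

Usage: `psql "$(pg_uri kubenews 'Kube#123' 127.0.0.1 5432 kubenews)" -c '\dt'` lists the tables through the forwarded port.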

# Postgres Deployment
apiVersion: apps/v1
kind: Deployment
metadata:
  name: postgre
spec:
  selector:
    matchLabels:
      app: postgre
  template:
    metadata:
      labels:
        app: postgre
    spec:
      containers:
        - name: postgre
          image: postgres:14.3
          ports:
            - containerPort: 5432
          env:
            - name: POSTGRES_PASSWORD
              value: "Kube#123"
            - name: POSTGRES_USER
              value: "kubenews"
            - name: POSTGRES_DB
              value: "kubenews"
---

┌─[orbite]@[orbite-desktop]:~/Iniciativa-Devops/kube-news/k8s

└──> $ kubectl apply -f deployment.yaml

deployment.apps/postgre unchanged

service/postgre unchanged

deployment.apps/kubenews unchanged

service/kube-news created

# Postgres Deployment
apiVersion: apps/v1
kind: Deployment
metadata:
  name: postgre
spec:
  selector:
    matchLabels:
      app: postgre
  template:
    metadata:
      labels:
        app: postgre
    spec:
      containers:
        - name: postgre
          image: postgres:14.3
          ports:
            - containerPort: 5432
          env:
            - name: POSTGRES_PASSWORD
              value: "Kube#123"
            - name: POSTGRES_USER
              value: "kubenews"
            - name: POSTGRES_DB
              value: "kubenews"
---
apiVersion: v1
kind: Service
metadata:
  name: postgre
spec:
  selector:
    app: postgre
  ports:
    - port: 5432
      targetPort: 5432
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: kubenews
spec:
  selector:
    matchLabels:
      app: kubenews
  template:
    metadata:
      labels:
        app: kubenews
    spec:
      containers:
        - name: kubenews
          image: orbite82/kube-new:v1
          env:
            - name: DB_DATABASE
              value: "kubenews"
            - name: DB_USERNAME
              value: "kubenews"
            - name: DB_PASSWORD
              value: "Kube#123"
            - name: DB_HOST
              value: "postgre"
---
apiVersion: v1
kind: Service
metadata:
  name: kube-news
spec:
  selector:
    app: kubenews
  ports:
    - port: 80
      targetPort: 8080
      nodePort: 30000
  type: NodePort

┌─[orbite]@[orbite-desktop]:~/Iniciativa-Devops/kube-news/k8s

└──> $ kubectl get all

NAME READY STATUS RESTARTS AGE

pod/kubenews-7f58f7f674-hgf4g 1/1 Running 0 3m24s

pod/postgre-786bc7b694-hrh4k 1/1 Running 0 22m

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

service/kube-news NodePort 10.43.92.127 <none> 80:30000/TCP 2m52s

service/kubernetes ClusterIP 10.43.0.1 <none> 443/TCP 66m

service/postgre ClusterIP 10.43.105.88 <none> 5432/TCP 30m

NAME READY UP-TO-DATE AVAILABLE AGE

deployment.apps/kubenews 1/1 1 1 3m24s

deployment.apps/postgre 1/1 1 1 30m

NAME DESIRED CURRENT READY AGE

replicaset.apps/kubenews-7f58f7f674 1 1 1 3m24s

replicaset.apps/postgre-786bc7b694 1 1 1 22m

replicaset.apps/postgre-8d956584f 0 0 0 30m
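The `kube-news` Service publishes nodePort 30000, and the cluster was created with `-p "30000:30000@loadbalancer"`, so the application should answer on the host at port 30000 once the pod is ready. A minimal polling sketch follows, assuming `curl` is installed. The function name `wait_for_http` is hypothetical.

```shell
#!/bin/sh
# Poll URL $1 up to $2 times, sleeping $3 seconds after each failed attempt.
# Succeeds as soon as curl receives a non-error HTTP response.
wait_for_http() {
  attempt=1
  while [ "$attempt" -le "$2" ]; do
    if curl -fsS -o /dev/null --max-time 5 "$1" 2>/dev/null; then
      return 0
    fi
    attempt=$((attempt + 1))
    sleep "$3"
  done
  return 1
}
```

Usage: `wait_for_http http://localhost:30000 30 2 && echo "kube-news is up"`.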

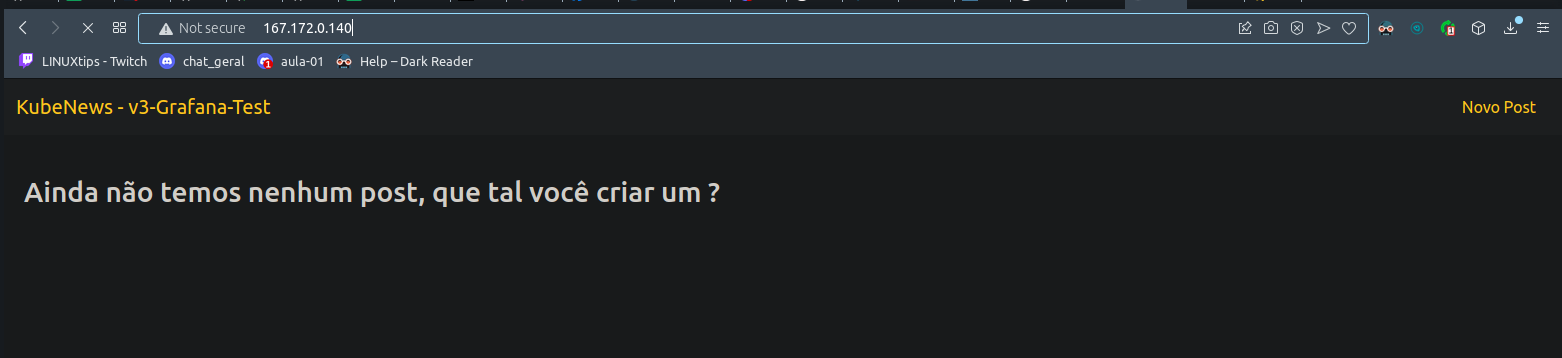

- Test http://localhost:30000

- Test the connection in DBeaver again

┌─[orbite]@[orbite-desktop]:~/Iniciativa-Devops/kube-news/k8s

└──> $ kubectl port-forward service/postgre 5432:5432

Forwarding from 127.0.0.1:5432 -> 5432

Forwarding from [::1]:5432 -> 5432

In DBeaver: Databases -> kubenews -> Schemas -> public -> Tables -> Posts -> right-click -> View Data

Result:

1 Teste Resumo Teste 2022-06-07 Esse é um teste 2022-06-07 19:49:07.884 -0300 2022-06-07 19:49:07.884 -0300

- Scale to 20 replicas

# Postgres Deployment
apiVersion: apps/v1
kind: Deployment
metadata:
  name: postgre
spec:
  selector:
    matchLabels:
      app: postgre
  template:
    metadata:
      labels:
        app: postgre
    spec:
      containers:
        - name: postgre
          image: postgres:14.3
          ports:
            - containerPort: 5432
          env:
            - name: POSTGRES_PASSWORD
              value: "Kube#123"
            - name: POSTGRES_USER
              value: "kubenews"
            - name: POSTGRES_DB
              value: "kubenews"
---
apiVersion: v1
kind: Service
metadata:
  name: postgre
spec:
  selector:
    app: postgre
  ports:
    - port: 5432
      targetPort: 5432
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: kubenews
spec:
  replicas: 20
  selector:
    matchLabels:
      app: kubenews
  template:
    metadata:
      labels:
        app: kubenews
    spec:
      containers:
        - name: kubenews
          image: orbite82/kube-new:v1
          env:
            - name: DB_DATABASE
              value: "kubenews"
            - name: DB_USERNAME
              value: "kubenews"
            - name: DB_PASSWORD
              value: "Kube#123"
            - name: DB_HOST
              value: "postgre"
---
apiVersion: v1
kind: Service
metadata:
  name: kube-news
spec:
  selector:
    app: kubenews
  ports:
    - port: 80
      targetPort: 8080
      nodePort: 30000
  type: NodePort

┌─[orbite]@[orbite-desktop]:~/Iniciativa-Devops/kube-news/k8s

└──> $ kubectl apply -f deployment.yaml

deployment.apps/postgre unchanged

service/postgre unchanged

deployment.apps/kubenews configured

service/kube-news unchanged
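After changing `replicas`, it helps to confirm how many pods are actually running. The sketch below counts them from `kubectl get pods` output. The helper name `count_running` is hypothetical, and it assumes the default column layout (NAME, READY, STATUS, ...).

```shell
#!/bin/sh
# Count pods whose name starts with "$1-" and whose STATUS column is Running,
# reading `kubectl get pods` output on stdin.
count_running() {
  awk -v prefix="$1-" 'index($1, prefix) == 1 && $3 == "Running" { n++ } END { print n + 0 }'
}
```

Usage: `kubectl get pods | count_running kubenews`. As alternatives, `kubectl rollout status deployment/kubenews` blocks until the rollout finishes, and `kubectl scale deployment kubenews --replicas=20` changes the count without editing the manifest (the next `kubectl apply` of the file sets it back to the value in the file).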

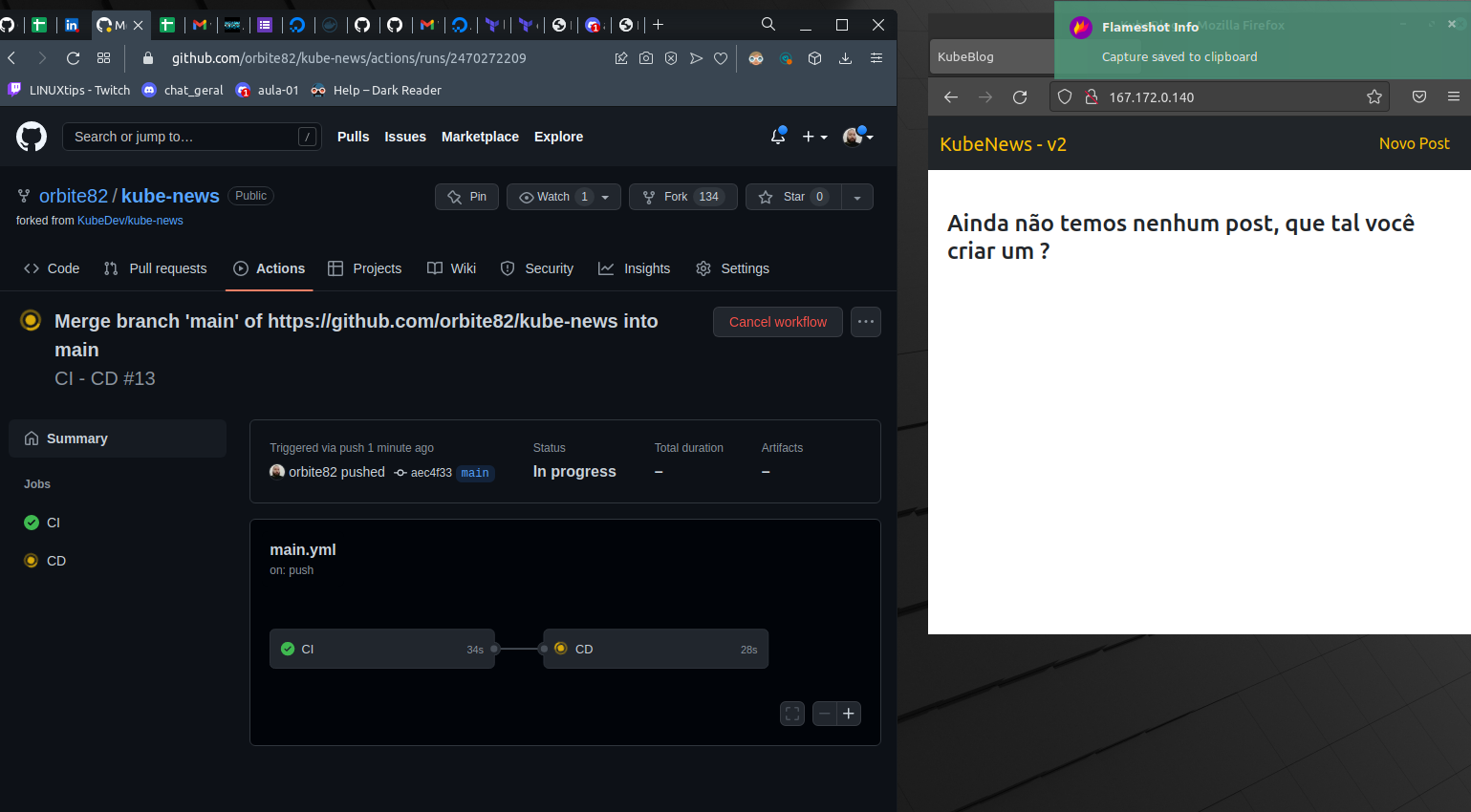

- Change the navbar title

views -> partial -> nav-bar.ejs

From: KubeNews To: KubeNews - v2

┌─[orbite]@[orbite-desktop]:~/Iniciativa-Devops/kube-news/k8s

└──> $ cd ..

┌─[orbite]@[orbite-desktop]:~/Iniciativa-Devops/kube-news

└──> $ cd src/

┌─[orbite]@[orbite-desktop]:~/Iniciativa-Devops/kube-news/src

└──> $ docker build -t orbite82/kube-new:v2 .

┌─[orbite]@[orbite-desktop]:~/Iniciativa-Devops/kube-news/src

└──> $ docker push orbite82/kube-new:v2

┌─[orbite]@[orbite-desktop]:~/Iniciativa-Devops/kube-news/src

└──> $ docker tag orbite82/kube-new:v2 orbite82/kube-new:latest

┌─[orbite]@[orbite-desktop]:~/Iniciativa-Devops/kube-news/src

└──> $ docker push orbite82/kube-new:latest

- Change the image to v2 in the deployment

# Postgres Deployment
apiVersion: apps/v1
kind: Deployment
metadata:
  name: postgre
spec:
  selector:
    matchLabels:
      app: postgre
  template:
    metadata:
      labels:
        app: postgre
    spec:
      containers:
        - name: postgre
          image: postgres:14.3
          ports:
            - containerPort: 5432
          env:
            - name: POSTGRES_PASSWORD
              value: "Kube#123"
            - name: POSTGRES_USER
              value: "kubenews"
            - name: POSTGRES_DB
              value: "kubenews"
---
apiVersion: v1
kind: Service
metadata:
  name: postgre
spec:
  selector:
    app: postgre
  ports:
    - port: 5432
      targetPort: 5432
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: kubenews
spec:
  replicas: 20
  selector:
    matchLabels:
      app: kubenews
  template:
    metadata:
      labels:
        app: kubenews
    spec:
      containers:
        - name: kubenews
          image: orbite82/kube-new:v2
          env:
            - name: DB_DATABASE
              value: "kubenews"
            - name: DB_USERNAME
              value: "kubenews"
            - name: DB_PASSWORD
              value: "Kube#123"
            - name: DB_HOST
              value: "postgre"
---
apiVersion: v1
kind: Service
metadata:
  name: kube-news
spec:
  selector:
    app: kubenews
  ports:
    - port: 80
      targetPort: 8080
      nodePort: 30000
  type: NodePort

- Run

┌─[orbite]@[orbite-desktop]:~/Iniciativa-Devops/kube-news/src

└──> $ watch 'kubectl get pods'

Every 2,0s: kubectl get pods orbite-desktop: Tue Jun 7 20:11:23 2022

NAME READY STATUS RESTARTS AGE

kubenews-55554d8b4f-22jrq 1/1 Running 0 15s

kubenews-55554d8b4f-4zpkz 1/1 Running 0 27s

kubenews-55554d8b4f-5qczt 1/1 Running 0 27s

kubenews-55554d8b4f-8drqf 1/1 Running 0 27s

kubenews-55554d8b4f-9blmn 1/1 Running 0 15s

kubenews-55554d8b4f-cc5vj 1/1 Running 0 20s

kubenews-55554d8b4f-cl47p 1/1 Running 0 13s

kubenews-55554d8b4f-glkr5 1/1 Running 0 27s

kubenews-55554d8b4f-jmk2j 1/1 Running 0 13s

kubenews-55554d8b4f-k274g 1/1 Running 0 27s

kubenews-55554d8b4f-kpjzg 1/1 Running 0 27s

kubenews-55554d8b4f-lmqbz 1/1 Running 0 27s

kubenews-55554d8b4f-md4gc 1/1 Running 0 13s

kubenews-55554d8b4f-mm4kv 1/1 Running 0 26s

kubenews-55554d8b4f-plps2 1/1 Running 0 27s

kubenews-55554d8b4f-q9kd8 1/1 Running 0 14s

kubenews-55554d8b4f-qr4tg 1/1 Running 0 27s

kubenews-55554d8b4f-rggj9 1/1 Running 0 13s

kubenews-55554d8b4f-vqxxh 1/1 Running 0 13s

kubenews-55554d8b4f-zn8qr 1/1 Running 0 21s

kubenews-7f58f7f674-2qhfl 1/1 Terminating 0 11m

kubenews-7f58f7f674-4mrzc 1/1 Terminating 0 11m

kubenews-7f58f7f674-5ks6m 1/1 Terminating 0 11m

kubenews-7f58f7f674-5x2b5 1/1 Terminating 0 11m

kubenews-7f58f7f674-8kfsc 1/1 Terminating 0 11m

kubenews-7f58f7f674-8l45q 1/1 Terminating 0 11m

kubenews-7f58f7f674-8nh2n 1/1 Terminating 0 11m

kubenews-7f58f7f674-hgf4g 1/1 Terminating 0 28m

kubenews-7f58f7f674-m5tpr 1/1 Running 0 11m

kubenews-7f58f7f674-mv7db 1/1 Terminating 0 11m

kubenews-7f58f7f674-n525k 1/1 Terminating 0 11m

kubenews-7f58f7f674-nh9tf 1/1 Terminating 0 11m

┌─[orbite]@[orbite-desktop]:~/Iniciativa-Devops/kube-news/k8s

└──> $ kubectl apply -f deployment.yaml

deployment.apps/postgre unchanged

service/postgre unchanged

deployment.apps/kubenews configured

service/kube-news unchanged

- At this point the page showed KubeNews - v2

- Roll back with rollout undo

┌─[orbite]@[orbite-desktop]:~/Iniciativa-Devops/kube-news/k8s

└──> $ kubectl rollout undo deployment kubenews

deployment.apps/kubenews rolled back

┌─[orbite]@[orbite-desktop]:~/Iniciativa-Devops/kube-news/k8s

└──> $ kubectl get pods

NAME READY STATUS RESTARTS AGE

kubenews-55554d8b4f-22jrq 1/1 Terminating 0 5m49s

kubenews-55554d8b4f-4zpkz 1/1 Terminating 0 6m1s

kubenews-55554d8b4f-5qczt 1/1 Terminating 0 6m1s

kubenews-55554d8b4f-8drqf 1/1 Terminating 0 6m1s

kubenews-55554d8b4f-9blmn 1/1 Terminating 0 5m49s

kubenews-55554d8b4f-cc5vj 1/1 Terminating 0 5m54s

kubenews-55554d8b4f-cl47p 1/1 Terminating 0 5m47s

kubenews-55554d8b4f-glkr5 1/1 Terminating 0 6m1s

kubenews-55554d8b4f-jmk2j 1/1 Terminating 0 5m47s

kubenews-55554d8b4f-k274g 1/1 Terminating 0 6m1s

kubenews-55554d8b4f-kpjzg 1/1 Terminating 0 6m1s

kubenews-55554d8b4f-lmqbz 1/1 Terminating 0 6m1s

kubenews-55554d8b4f-md4gc 1/1 Terminating 0 5m47s

kubenews-55554d8b4f-mm4kv 1/1 Terminating 0 6m

kubenews-55554d8b4f-plps2 1/1 Terminating 0 6m1s

kubenews-55554d8b4f-q9kd8 1/1 Terminating 0 5m48s

kubenews-55554d8b4f-qr4tg 1/1 Terminating 0 6m1s

kubenews-55554d8b4f-rggj9 1/1 Terminating 0 5m47s

kubenews-55554d8b4f-vqxxh 1/1 Terminating 0 5m47s

kubenews-55554d8b4f-zn8qr 1/1 Terminating 0 5m55s

kubenews-7f58f7f674-6dxlb 1/1 Running 0 21s

kubenews-7f58f7f674-6jv4l 1/1 Running 0 18s

kubenews-7f58f7f674-6shpx 1/1 Running 0 9s

kubenews-7f58f7f674-7djtn 1/1 Running 0 21s

kubenews-7f58f7f674-7g7nf 1/1 Running 0 21s

kubenews-7f58f7f674-7h84w 1/1 Running 0 14s

kubenews-7f58f7f674-8kzpq 1/1 Running 0 13s

kubenews-7f58f7f674-bvgz4 1/1 Running 0 20s

kubenews-7f58f7f674-c44vq 1/1 Running 0 20s

kubenews-7f58f7f674-ckgh4 1/1 Running 0 21s

kubenews-7f58f7f674-fsfcx 1/1 Running 0 16s

kubenews-7f58f7f674-g8vwk 1/1 Running 0 20s

kubenews-7f58f7f674-jrb47 1/1 Running 0 20s

kubenews-7f58f7f674-kbc2c 1/1 Running 0 21s

kubenews-7f58f7f674-lwkx6 1/1 Running 0 15s

kubenews-7f58f7f674-mbvtr 1/1 Running 0 20s

kubenews-7f58f7f674-pzmd7 1/1 Running 0 7s

kubenews-7f58f7f674-qlg6f 1/1 Running 0 15s

kubenews-7f58f7f674-rwzk9 1/1 Running 0 17s

kubenews-7f58f7f674-xtpkq 1/1 Running 0 9s

postgre-786bc7b694-hrh4k 1/1 Running 0 53m
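In the listings above, the middle part of each pod name (`55554d8b4f` for v2, `7f58f7f674` for v1) identifies the ReplicaSet, which is how the two versions can be told apart during a rollout or rollback. A small sketch follows. `pod_template_hash` and `rollback_to` are hypothetical helper names, while `kubectl rollout undo --to-revision` and `kubectl rollout status` are standard kubectl subcommands.

```shell
#!/bin/sh
# Extract the ReplicaSet hash from a Deployment-managed pod name of the
# form <deployment>-<hash>-<suffix>.
pod_template_hash() {
  printf '%s\n' "$1" | awk -F- '{ print $(NF - 1) }'
}

# Roll a deployment back to a specific revision and wait for it to settle.
# Arguments: deployment name, revision number.
rollback_to() {
  kubectl rollout undo "deployment/$1" --to-revision="$2" &&
    kubectl rollout status "deployment/$1"
}
```

Usage: `kubectl rollout history deployment/kubenews` lists the available revisions, and `rollback_to kubenews 1` returns to a chosen one. Without `--to-revision`, `kubectl rollout undo` goes back to the previous revision, as in the transcript above.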

- Test the rollback again

- Docker Hub link

- Running 20 pods on DigitalOcean

┌─[orbite]@[orbite-desktop]:~/Iniciativa-Devops/kube-news

└──> $ kubectl get pods

NAME READY STATUS RESTARTS AGE

kubenews-7bd5d6654d-6n8zg 1/1 Running 0 2m49s

kubenews-7bd5d6654d-7thlp 1/1 Running 0 2m31s

kubenews-7bd5d6654d-8x45g 1/1 Running 0 2m50s

kubenews-7bd5d6654d-9js5w 1/1 Running 0 2m50s

kubenews-7bd5d6654d-bcdtc 1/1 Running 0 2m50s

kubenews-7bd5d6654d-fvj85 1/1 Running 0 2m38s

kubenews-7bd5d6654d-h29bg 1/1 Running 0 2m40s

kubenews-7bd5d6654d-j9flf 1/1 Running 0 2m50s

kubenews-7bd5d6654d-k7kmb 1/1 Running 0 2m49s

kubenews-7bd5d6654d-lwx8t 1/1 Running 0 2m27s

kubenews-7bd5d6654d-psx8h 1/1 Running 0 2m49s

kubenews-7bd5d6654d-rzjp9 1/1 Running 0 2m35s

kubenews-7bd5d6654d-sb6dz 1/1 Running 0 2m35s

kubenews-7bd5d6654d-t5v57 1/1 Running 0 2m49s

kubenews-7bd5d6654d-tnszr 1/1 Running 0 2m33s

kubenews-7bd5d6654d-w7n9r 1/1 Running 0 2m37s

kubenews-7bd5d6654d-wx9h5 1/1 Running 0 2m50s

kubenews-7bd5d6654d-xkxvw 1/1 Running 0 2m49s

kubenews-7bd5d6654d-z2sqr 1/1 Running 0 2m38s

kubenews-7bd5d6654d-z5wg6 1/1 Running 0 2m39s

postgre-786bc7b694-5wbzc 1/1 Running 0 13m

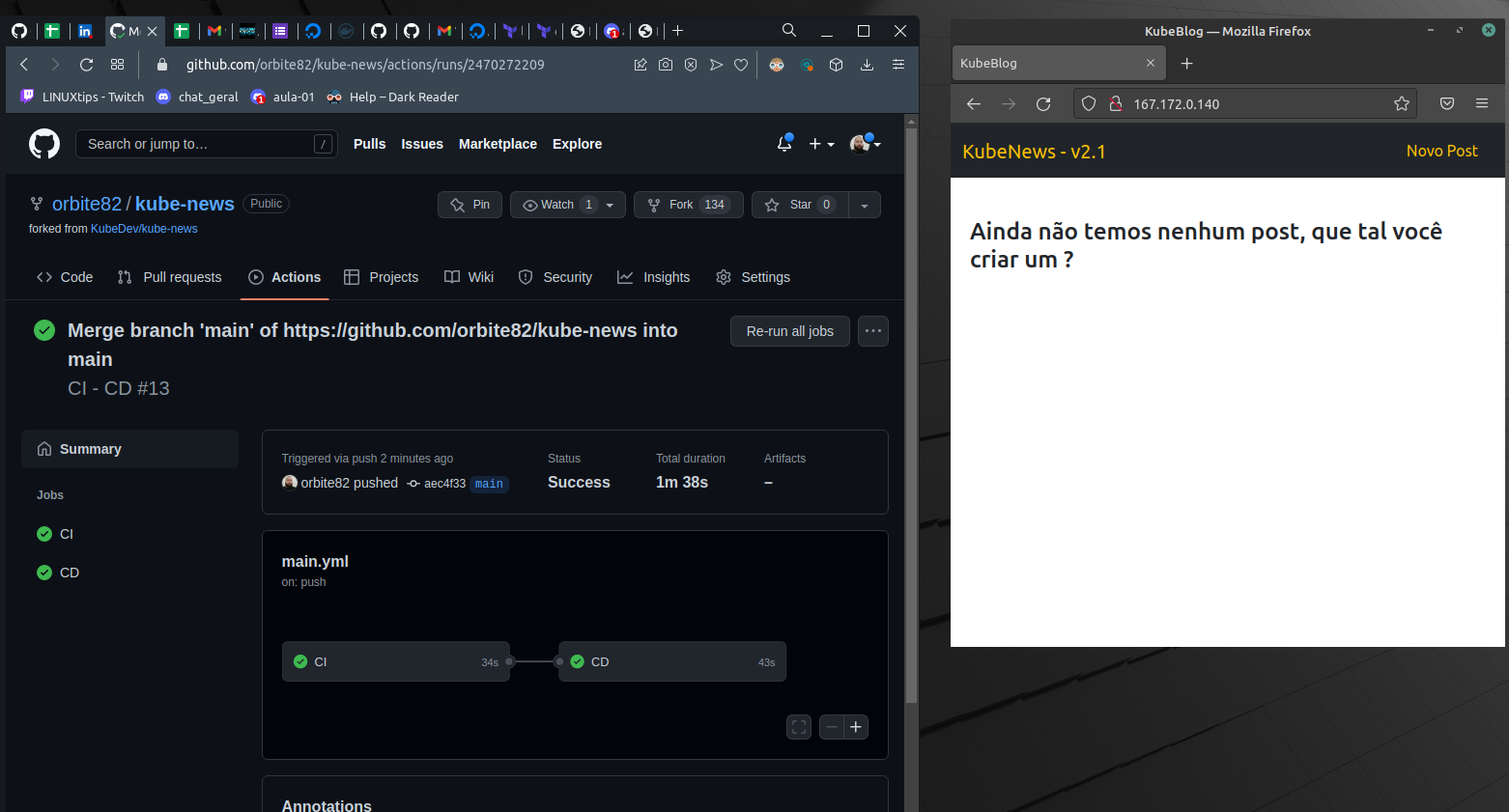

- Change the version to 2.1

src -> views -> partial -> nav-bar.ejs:

<a class="navbar-brand text-warning" href="/">KubeNews - v2.1</a>

- From v2:

- To v2.1:

- Install Prometheus with Helm

┌─[orbite]@[orbite-desktop]:~/Iniciativa-Devops/kube-news

└──> $ helm repo add prometheus-community https://prometheus-community.github.io/helm-charts

"prometheus-community" has been added to your repositories

┌─[orbite]@[orbite-desktop]:~/Iniciativa-Devops/kube-news

└──> $ helm repo update

Hang tight while we grab the latest from your chart repositories...

...Successfully got an update from the "prometheus-community" chart repository

Update Complete. ⎈Happy Helming!⎈

┌─[orbite]@[orbite-desktop]:~/Iniciativa-Devops/kube-news

└──> $ helm show values prometheus-community/prometheus

┌─[orbite]@[orbite-desktop]:~/Iniciativa-Devops/kube-news

└──> $ mkdir prometheus

└──> $ cd prometheus/

┌─[orbite]@[orbite-desktop]:~/Iniciativa-Devops/kube-news/prometheus

└──> $ helm show values prometheus-community/prometheus > values-prometheus.yaml

- Install or upgrade Prometheus via Helm

┌─[orbite]@[orbite-desktop]:~/Iniciativa-Devops/kube-news/prometheus

└──> $ helm upgrade --install prometheus prometheus-community/prometheus --set alertmanager.enabled=false,server.persistentVolume.enabled=false,server.service.type=LoadBalancer,server.global.scrape_interval=10s,pushgateway.enabled=false

Release "prometheus" does not exist. Installing it now.

NAME: prometheus

LAST DEPLOYED: Fri Jun 10 15:38:37 2022

NAMESPACE: default

STATUS: deployed

REVISION: 1

TEST SUITE: None

NOTES:

The Prometheus server can be accessed via port 80 on the following DNS name from within your cluster:

prometheus-server.default.svc.cluster.local

Get the Prometheus server URL by running these commands in the same shell:

NOTE: It may take a few minutes for the LoadBalancer IP to be available.

You can watch the status of by running 'kubectl get svc --namespace default -w prometheus-server'

export SERVICE_IP=$(kubectl get svc --namespace default prometheus-server -o jsonpath='{.status.loadBalancer.ingress[0].ip}')

echo http://$SERVICE_IP:80

#################################################################################

###### WARNING: Persistence is disabled!!! You will lose your data when #####

###### the Server pod is terminated. #####

#################################################################################

#################################################################################

###### WARNING: Pod Security Policy has been moved to a global property. #####

###### use .Values.podSecurityPolicy.enabled with pod-based #####

###### annotations #####

###### (e.g. .Values.nodeExporter.podSecurityPolicy.annotations) #####

#################################################################################

For more information on running Prometheus, visit:

https://prometheus.io/
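The long `--set` list in the install command can also be kept in a small values file, which is easier to review and version. The sketch below mirrors the keys passed on the command line above, one to one. The function name `write_prometheus_values` and the file name `values-override.yaml` are hypothetical, and newer chart versions may rename some keys, so compare against `helm show values` output first.

```shell
#!/bin/sh
# Write a values override file equivalent to the --set flags used above.
# Argument: path of the file to create.
write_prometheus_values() {
  cat > "$1" <<'EOF'
alertmanager:
  enabled: false
pushgateway:
  enabled: false
server:
  persistentVolume:
    enabled: false
  service:
    type: LoadBalancer
  global:
    scrape_interval: 10s
EOF
}
```

Usage: `write_prometheus_values values-override.yaml`, then `helm upgrade --install prometheus prometheus-community/prometheus -f values-override.yaml`.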

┌─[orbite]@[orbite-desktop]:~/Iniciativa-Devops/kube-news/prometheus

└──> $ kubectl get pods

NAME READY STATUS RESTARTS AGE

kubenews-5685bb94b6-2ld4v 1/1 Running 0 24h

kubenews-5685bb94b6-4hw4r 1/1 Running 0 24h

kubenews-5685bb94b6-4rfvc 1/1 Running 0 24h

kubenews-5685bb94b6-4rhq8 1/1 Running 0 24h

kubenews-5685bb94b6-66sbh 1/1 Running 0 24h

kubenews-5685bb94b6-6qcb6 1/1 Running 0 24h

kubenews-5685bb94b6-798x8 1/1 Running 0 24h

kubenews-5685bb94b6-8lqhd 1/1 Running 0 24h

kubenews-5685bb94b6-b4lhh 1/1 Running 0 24h

kubenews-5685bb94b6-cmtdq 1/1 Running 0 24h

kubenews-5685bb94b6-fdlcb 1/1 Running 0 24h

kubenews-5685bb94b6-fhhqf 1/1 Running 0 24h

kubenews-5685bb94b6-hbk8x 1/1 Running 0 24h

kubenews-5685bb94b6-hkkvm 1/1 Running 0 24h

kubenews-5685bb94b6-hzf28 1/1 Running 0 24h

kubenews-5685bb94b6-k94bx 1/1 Running 0 24h

kubenews-5685bb94b6-lzds6 1/1 Running 0 24h

kubenews-5685bb94b6-mkvdl 1/1 Running 0 24h

kubenews-5685bb94b6-rmwcm 1/1 Running 0 24h

kubenews-5685bb94b6-wmbw5 1/1 Running 0 24h

postgre-786bc7b694-5wbzc 1/1 Running 0 24h

prometheus-kube-state-metrics-5fd8648d78-qcdxf 1/1 Running 0 73s

prometheus-node-exporter-64nhq 1/1 Running 0 73s

prometheus-node-exporter-nkr2l 1/1 Running 0 73s

prometheus-server-6988774754-jzkcl 2/2 Running 0 73s

┌─[orbite]@[orbite-desktop]:~/Iniciativa-Devops/kube-news/prometheus

└──> $ kubectl get svc

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

kube-news LoadBalancer 10.245.171.128 167.172.0.140 80:30000/TCP 24h

kubernetes ClusterIP 10.245.0.1 <none> 443/TCP 29h

postgre ClusterIP 10.245.25.246 <none> 5432/TCP 24h

prometheus-kube-state-metrics ClusterIP 10.245.120.194 <none> 8080/TCP 4m18s

prometheus-node-exporter ClusterIP 10.245.77.91 <none> 9100/TCP 4m18s

prometheus-server LoadBalancer 10.245.68.176 161.35.254.149 80:32755/TCP 4m18s

- Prometheus link: 161.35.254.149

- Scale from 20 pods down to 1

┌─[orbite]@[orbite-desktop]:~/Iniciativa-Devops/kube-news/prometheus

└──> $ kubectl apply -f ~/Iniciativa-Devops/kube-news/k8s/deployment.yaml

deployment.apps/postgre unchanged

service/postgre unchanged

deployment.apps/kubenews configured

service/kube-news unchanged

┌─[orbite]@[orbite-desktop]:~/Iniciativa-Devops/kube-news/prometheus

└──> $ kubectl get pods

NAME READY STATUS RESTARTS AGE

kubenews-55554d8b4f-vl9kq 1/1 Running 0 31s

kubenews-5685bb94b6-2ld4v 1/1 Terminating 0 24h

kubenews-5685bb94b6-4hw4r 1/1 Terminating 0 24h

kubenews-5685bb94b6-4rfvc 1/1 Terminating 0 24h

kubenews-5685bb94b6-4rhq8 1/1 Terminating 0 24h

kubenews-5685bb94b6-6qcb6 1/1 Terminating 0 24h

kubenews-5685bb94b6-798x8 1/1 Terminating 0 24h

kubenews-5685bb94b6-8lqhd 1/1 Terminating 0 24h

kubenews-5685bb94b6-b4lhh 1/1 Terminating 0 24h

kubenews-5685bb94b6-cmtdq 1/1 Terminating 0 24h

kubenews-5685bb94b6-fdlcb 1/1 Terminating 0 24h

kubenews-5685bb94b6-fhhqf 1/1 Terminating 0 24h

kubenews-5685bb94b6-hbk8x 1/1 Terminating 0 24h

kubenews-5685bb94b6-hkkvm 1/1 Terminating 0 24h

kubenews-5685bb94b6-hzf28 1/1 Terminating 0 24h

kubenews-5685bb94b6-k94bx 0/1 Terminating 0 24h

kubenews-5685bb94b6-lzds6 1/1 Terminating 0 24h

kubenews-5685bb94b6-mkvdl 1/1 Terminating 0 24h

kubenews-5685bb94b6-wmbw5 0/1 Terminating 0 24h

postgre-786bc7b694-5wbzc 1/1 Running 0 25h

prometheus-kube-state-metrics-5fd8648d78-qcdxf 1/1 Running 0 8m43s

prometheus-node-exporter-64nhq 1/1 Running 0 8m43s

prometheus-node-exporter-nkr2l 1/1 Running 0 8m43s

prometheus-server-6988774754-jzkcl 2/2 Running 0 8m43s

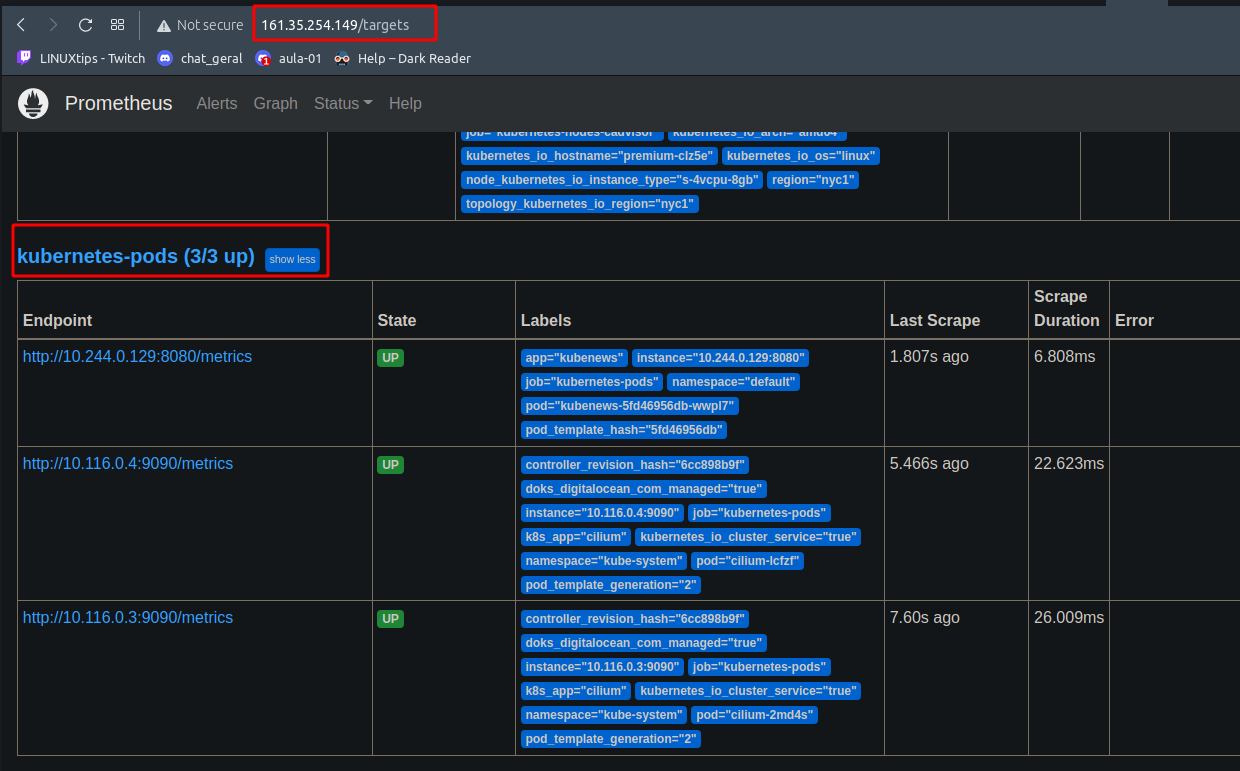

- Application metrics: 167.172.0.140/metrics

- Update the deployment to image tag 18 (the latest one) and add the Prometheus annotations

# Postgres Deployment
apiVersion: apps/v1
kind: Deployment
metadata:
  name: postgre
spec:
  selector:
    matchLabels:
      app: postgre
  template:
    metadata:
      labels:
        app: postgre
    spec:
      containers:
        - name: postgre
          image: postgres:14.3
          ports:
            - containerPort: 5432
          env:
            - name: POSTGRES_PASSWORD
              value: "Kube#123"
            - name: POSTGRES_USER
              value: "kubenews"
            - name: POSTGRES_DB
              value: "kubenews"
---
apiVersion: v1
kind: Service
metadata:
  name: postgre
spec:
  selector:
    app: postgre
  ports:
    - port: 5432
      targetPort: 5432
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: kubenews
spec:
  replicas: 1
  selector:
    matchLabels:
      app: kubenews
  template:
    metadata:
      labels:
        app: kubenews
      annotations:
        prometheus.io/scrape: "true"
        prometheus.io/port: "8080"
        prometheus.io/path: "/metrics"
    spec:
      containers:
        - name: kubenews
          image: orbite82/kube-new:18
          env:
            - name: DB_DATABASE
              value: "kubenews"
            - name: DB_USERNAME
              value: "kubenews"
            - name: DB_PASSWORD
              value: "Kube#123"
            - name: DB_HOST
              value: "postgre"
---
apiVersion: v1
kind: Service
metadata:
  name: kube-news
spec:
  selector:
    app: kubenews
  ports:
    - port: 80
      targetPort: 8080
      nodePort: 30000
  type: LoadBalancer

┌─[orbite]@[orbite-desktop]:~/Iniciativa-Devops/kube-news

└──> $ kubectl apply -f ~/Iniciativa-Devops/kube-news/k8s/deployment.yaml

deployment.apps/postgre unchanged

service/postgre unchanged

deployment.apps/kubenews configured

service/kube-news unchanged

┌─[orbite]@[orbite-desktop]:~/Iniciativa-Devops/kube-news

└──> $ kubectl get pods

NAME READY STATUS RESTARTS AGE

kubenews-5fd46956db-wwpl7 1/1 Running 0 37s

postgre-786bc7b694-5wbzc 1/1 Running 0 25h

prometheus-kube-state-metrics-5fd8648d78-qcdxf 1/1 Running 0 31m

prometheus-node-exporter-64nhq 1/1 Running 0 31m

prometheus-node-exporter-nkr2l 1/1 Running 0 31m

prometheus-server-6988774754-jzkcl 2/2 Running 0 31m

- 1 Replica

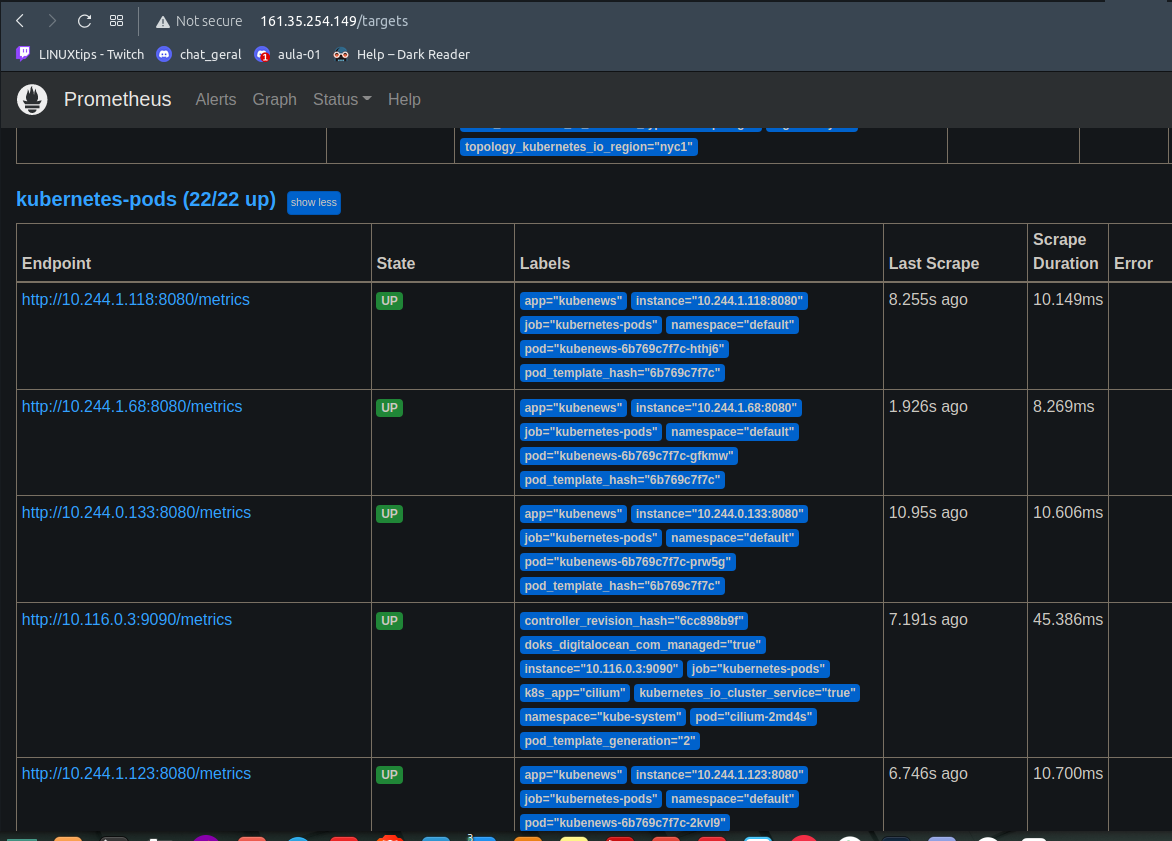

kubernetes-pods (3/3 up)
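The `prometheus.io/*` annotations are what let the chart's `kubernetes-pods` scrape job discover the application pod. To see what the application exposes, the `/metrics` output can be reduced to a list of metric names. This is a rough sketch: `metric_names` is a hypothetical helper, and it assumes the standard Prometheus text exposition format.

```shell
#!/bin/sh
# List the distinct metric names found in Prometheus text-format input on
# stdin. Comment lines (# HELP, # TYPE) and blank lines are skipped, and
# everything from the first "{" or space onward is cut off.
metric_names() {
  grep -v -e '^#' -e '^$' | sed -e 's/[{ ].*$//' | sort -u
}
```

Usage: `curl -s http://167.172.0.140/metrics | metric_names`. The annotations on the running pod can be inspected with `kubectl get pods -l app=kubenews -o jsonpath='{.items[0].metadata.annotations}'`.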

- Switch back to v2 and restore the versioning

# Postgres Deployment
apiVersion: apps/v1
kind: Deployment
metadata:
  name: postgre
spec:
  selector:
    matchLabels:
      app: postgre
  template:
    metadata:
      labels:
        app: postgre
    spec:
      containers:
        - name: postgre
          image: postgres:14.3
          ports:
            - containerPort: 5432
          env:
            - name: POSTGRES_PASSWORD
              value: "Kube#123"
            - name: POSTGRES_USER
              value: "kubenews"
            - name: POSTGRES_DB
              value: "kubenews"
---
apiVersion: v1
kind: Service
metadata:
  name: postgre
spec:
  selector:
    app: postgre
  ports:
    - port: 5432
      targetPort: 5432
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: kubenews
spec:
  replicas: 20
  selector:
    matchLabels:
      app: kubenews
  template:
    metadata:
      labels:
        app: kubenews
      annotations:
        prometheus.io/scrape: "true"
        prometheus.io/port: "8080"
        prometheus.io/path: "/metrics"
    spec:
      containers:
        - name: kubenews
          image: orbite82/kube-new:v2
          env:
            - name: DB_DATABASE
              value: "kubenews"
            - name: DB_USERNAME
              value: "kubenews"
            - name: DB_PASSWORD
              value: "Kube#123"
            - name: DB_HOST
              value: "postgre"
---
apiVersion: v1
kind: Service
metadata:
  name: kube-news
spec:
  selector:
    app: kubenews
  ports:
    - port: 80
      targetPort: 8080
      nodePort: 30000
  type: LoadBalancer

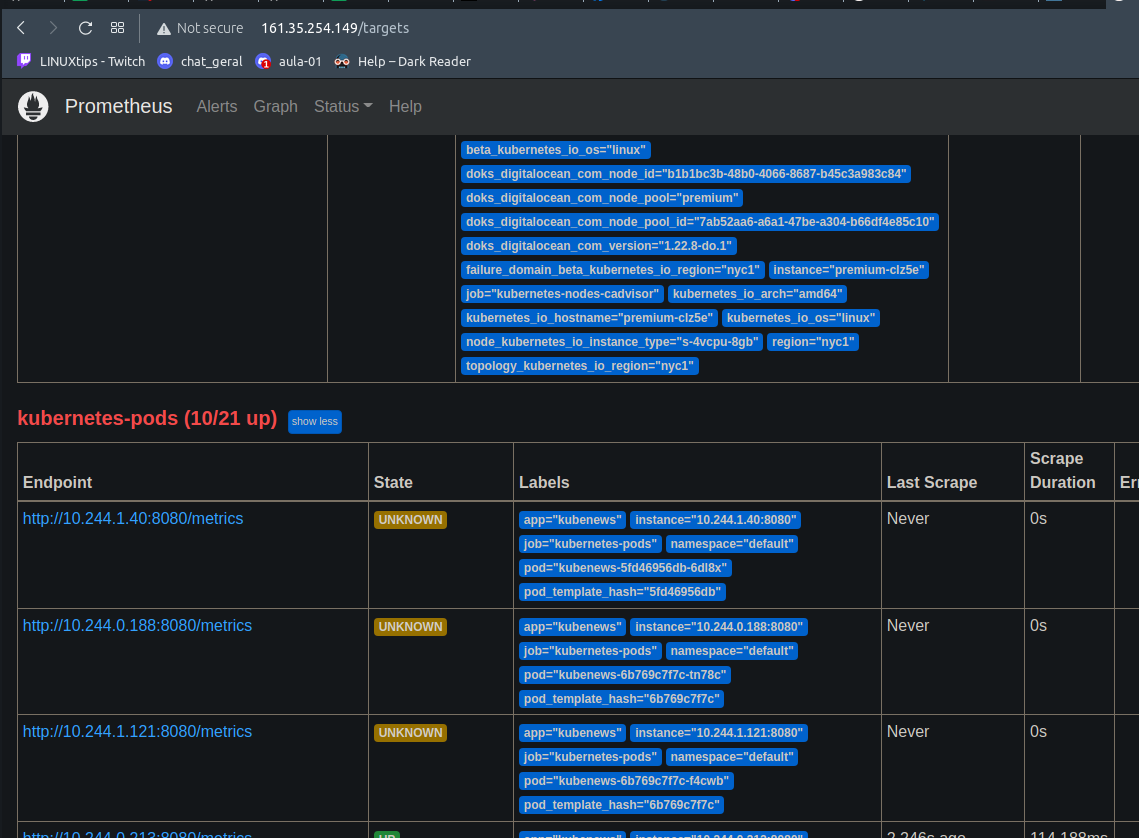

- Scaling up to 20 replicas

- Stable

- Scaling back down to 1 pod

┌─[orbite]@[orbite-desktop]:~/Iniciativa-Devops/kube-news

└──> $ kubectl apply -f ~/Iniciativa-Devops/kube-news/k8s/deployment.yaml

deployment.apps/postgre unchanged

service/postgre unchanged

deployment.apps/kubenews configured

service/kube-news unchanged
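In the steps above, the replica count is changed by editing `replicas` in `deployment.yaml` and re-applying the file. As a rough alternative for quick experiments, the same change can be made directly with `kubectl scale`, followed by `kubectl rollout status` to wait until the pods are ready. The function name `scale_kubenews` and the 120-second timeout are assumptions for illustration, not part of the original setup.

```shell
# Sketch: scale the kubenews Deployment and wait for it to settle.
# Note: the next `kubectl apply` of deployment.yaml resets the replica
# count to whatever value the file declares.
scale_kubenews() {
  replicas="$1"
  kubectl scale deployment/kubenews --replicas="$replicas" &&
    kubectl rollout status deployment/kubenews --timeout=120s  # timeout is an assumption
}
```

For example, `scale_kubenews 20` would bring up the 20 replicas and `scale_kubenews 1` would return to a single pod.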

- Installing Grafana with Helm

┌─[orbite]@[orbite-desktop]:~/Iniciativa-Devops/kube-news

└──> $ helm repo add grafana https://grafana.github.io/helm-charts

"grafana" has been added to your repositories

┌─[orbite]@[orbite-desktop]:~/Iniciativa-Devops/kube-news

└──> $ helm repo update

Hang tight while we grab the latest from your chart repositories...

...Successfully got an update from the "prometheus-community" chart repository

...Successfully got an update from the "grafana" chart repository

Update Complete. ⎈Happy Helming!⎈

┌─[orbite]@[orbite-desktop]:~/Iniciativa-Devops/kube-news

└──> $ helm upgrade --install grafana grafana/grafana --set service.type=LoadBalancer

Release "grafana" does not exist. Installing it now.

W0610 17:48:26.581975 28607 warnings.go:70] policy/v1beta1 PodSecurityPolicy is deprecated in v1.21+, unavailable in v1.25+

W0610 17:48:26.747093 28607 warnings.go:70] policy/v1beta1 PodSecurityPolicy is deprecated in v1.21+, unavailable in v1.25+

W0610 17:48:29.916647 28607 warnings.go:70] policy/v1beta1 PodSecurityPolicy is deprecated in v1.21+, unavailable in v1.25+

W0610 17:48:29.919125 28607 warnings.go:70] policy/v1beta1 PodSecurityPolicy is deprecated in v1.21+, unavailable in v1.25+

NAME: grafana

LAST DEPLOYED: Fri Jun 10 17:48:24 2022

NAMESPACE: default

STATUS: deployed

REVISION: 1

NOTES:

1. Get your 'admin' user password by running:

kubectl get secret --namespace default grafana -o jsonpath="{.data.admin-password}" | base64 --decode ; echo

2. The Grafana server can be accessed via port 80 on the following DNS name from within your cluster:

grafana.default.svc.cluster.local

Get the Grafana URL to visit by running these commands in the same shell:

NOTE: It may take a few minutes for the LoadBalancer IP to be available.

You can watch the status of by running 'kubectl get svc --namespace default -w grafana'

export SERVICE_IP=$(kubectl get svc --namespace default grafana -o jsonpath='{.status.loadBalancer.ingress[0].ip}')

http://$SERVICE_IP:80

3. Login with the password from step 1 and the username: admin

#################################################################################

###### WARNING: Persistence is disabled!!! You will lose your data when #####

###### the Grafana pod is terminated. #####

#################################################################################

- Careful: this is the admin password: xR1Ft6s3qnmj***************m

┌─[orbite]@[orbite-desktop]:~/Iniciativa-Devops/kube-news

└──> $ kubectl get secret --namespace default grafana -o jsonpath="{.data.admin-password}" | base64 --decode ; echo

xR1Ft6s3qnmj****************m*
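Because the command above prints the admin password to the terminal, it is easy to paste it into notes by accident. A minimal sketch, assuming the same `grafana` Secret in the `default` namespace created by the chart, wraps the lookup in a function so the value can be kept in a shell variable instead. The function name `grafana_admin_password` is hypothetical.

```shell
# Sketch: read the Grafana admin password from the chart's Secret
# without echoing it to the terminal.
grafana_admin_password() {
  kubectl get secret --namespace default grafana \
    -o jsonpath="{.data.admin-password}" | base64 --decode
}
```

Usage could look like `GRAFANA_PASS="$(grafana_admin_password)"`, after which the variable is passed to whatever needs it.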

┌─[orbite]@[orbite-desktop]:~/Iniciativa-Devops/kube-news

└──> $ kubectl get all

NAME READY STATUS RESTARTS AGE

pod/grafana-c7c79dfc7-cskxn 1/1 Running 0 2m11s

pod/kubenews-55d44749cf-kp86p 1/1 Running 0 82m

pod/postgre-786bc7b694-5wbzc 1/1 Running 0 27h

pod/prometheus-kube-state-metrics-5fd8648d78-qcdxf 1/1 Running 0 132m

pod/prometheus-node-exporter-64nhq 1/1 Running 0 132m

pod/prometheus-node-exporter-nkr2l 1/1 Running 0 132m

pod/prometheus-server-6988774754-jzkcl 2/2 Running 0 132m

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

service/grafana LoadBalancer 10.245.200.22 <pending> 80:32211/TCP 2m11s

service/kube-news LoadBalancer 10.245.171.128 167.172.0.140 80:30000/TCP 27h

service/kubernetes ClusterIP 10.245.0.1 <none> 443/TCP 31h

service/postgre ClusterIP 10.245.25.246 <none> 5432/TCP 27h

service/prometheus-kube-state-metrics ClusterIP 10.245.120.194 <none> 8080/TCP 132m

service/prometheus-node-exporter ClusterIP 10.245.77.91 <none> 9100/TCP 132m

service/prometheus-server LoadBalancer 10.245.68.176 161.35.254.149 80:32755/TCP 132m

NAME DESIRED CURRENT READY UP-TO-DATE AVAILABLE NODE SELECTOR AGE

daemonset.apps/prometheus-node-exporter 2 2 2 2 2 <none> 132m

NAME READY UP-TO-DATE AVAILABLE AGE

deployment.apps/grafana 1/1 1 1 2m12s

deployment.apps/kubenews 1/1 1 1 27h

deployment.apps/postgre 1/1 1 1 27h

deployment.apps/prometheus-kube-state-metrics 1/1 1 1 132m

deployment.apps/prometheus-server 1/1 1 1 132m

NAME DESIRED CURRENT READY AGE

replicaset.apps/grafana-c7c79dfc7 1 1 1 2m12s

replicaset.apps/kubenews-55554d8b4f 0 0 0 123m

replicaset.apps/kubenews-55d44749cf 1 1 1 82m

replicaset.apps/kubenews-5685bb94b6 0 0 0 26h

replicaset.apps/kubenews-5fd46956db 0 0 0 101m

replicaset.apps/kubenews-644b94d7db 0 0 0 26h

replicaset.apps/kubenews-65b64c76f9 0 0 0 26h

replicaset.apps/kubenews-6b769c7f7c 0 0 0 89m

replicaset.apps/kubenews-766fdc877c 0 0 0 26h

replicaset.apps/kubenews-78c6759564 0 0 0 26h

replicaset.apps/kubenews-7998b68689 0 0 0 26h

replicaset.apps/kubenews-7bd5d6654d 0 0 0 26h

replicaset.apps/postgre-786bc7b694 1 1 1 27h

replicaset.apps/prometheus-kube-state-metrics-5fd8648d78 1 1 1 132m

replicaset.apps/prometheus-server-6988774754 1 1 1 132m
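The `kubenews` ReplicaSets listed with 0 desired pods are earlier revisions of the Deployment, kept by Kubernetes so a rollout can be reverted. A small sketch of how those revisions could be inspected and, if needed, restored with `kubectl rollout` is shown below. The function name `rollback_kubenews` and its optional revision argument are assumptions for illustration.

```shell
# Sketch: list the kubenews rollout history and optionally roll back
# to a given revision number.
rollback_kubenews() {
  revision="${1:-}"
  # Show the recorded revisions of the Deployment.
  kubectl rollout history deployment/kubenews
  # Only roll back when a revision number was passed.
  if [ -n "$revision" ]; then
    kubectl rollout undo deployment/kubenews --to-revision="$revision"
  fi
}
```

Called without arguments it only prints the history. Keep in mind that re-applying `deployment.yaml` afterwards would roll the Deployment forward again to the image declared in the file.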

┌─[orbite]@[orbite-desktop]:~/Iniciativa-Devops/kube-news

└──> $ kubectl get svc

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

grafana LoadBalancer 10.245.200.22 138.197.224.130 80:32211/TCP 4m45s

kube-news LoadBalancer 10.245.171.128 167.172.0.140 80:30000/TCP 27h

kubernetes ClusterIP 10.245.0.1 <none> 443/TCP 31h

postgre ClusterIP 10.245.25.246 <none> 5432/TCP 27h

prometheus-kube-state-metrics ClusterIP 10.245.120.194 <none> 8080/TCP 134m

prometheus-node-exporter ClusterIP 10.245.77.91 <none> 9100/TCP 134m

prometheus-server LoadBalancer 10.245.68.176 161.35.254.149 80:32755/TCP 134m
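The `grafana` Service first showed `<pending>` as its EXTERNAL-IP and only received an address a couple of minutes later. Instead of re-running `kubectl get svc` by hand, a polling loop could wait for the address. The sketch below reuses the jsonpath expression from the chart NOTES; the function name `wait_for_lb_ip` and the default retry count and delay are assumptions.

```shell
# Sketch: poll a LoadBalancer Service until it has an external IP,
# then print that IP.
wait_for_lb_ip() {
  svc="$1"
  tries="${2:-30}"   # assumed default: 30 attempts
  delay="${3:-10}"   # assumed default: 10 seconds between attempts
  i=0
  while [ "$i" -lt "$tries" ]; do
    ip="$(kubectl get svc --namespace default "$svc" \
      -o jsonpath='{.status.loadBalancer.ingress[0].ip}')"
    if [ -n "$ip" ]; then
      echo "$ip"
      return 0
    fi
    i=$((i + 1))
    sleep "$delay"
  done
  echo "timed out waiting for an external IP on $svc" >&2
  return 1
}
```

For example, `SERVICE_IP="$(wait_for_lb_ip grafana)"` would block until the address is assigned, after which Grafana is reachable at `http://$SERVICE_IP:80`.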

- Grafana address

login: admin password: xR1Ft6s3qnmj***************m

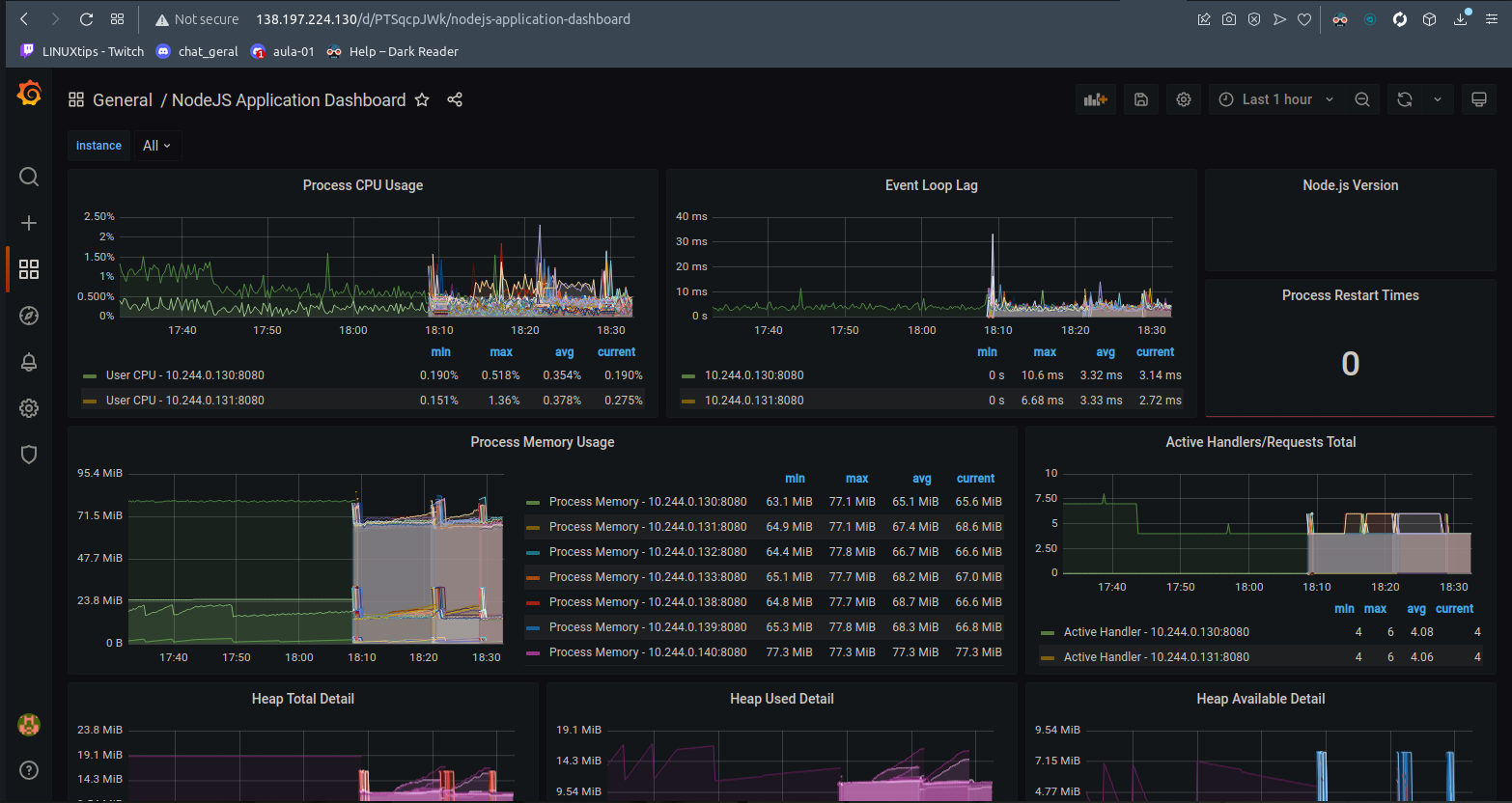

- Testing the Grafana dashboard with 20 replicas

┌─[orbite]@[orbite-desktop]:~/Iniciativa-Devops/kube-news

└──> $ kubectl apply -f ~/Iniciativa-Devops/kube-news/k8s/deployment.yaml

deployment.apps/postgre unchanged

service/postgre unchanged

deployment.apps/kubenews configured

service/kube-news unchanged

- Testing App + Grafana + Prometheus + DigitalOcean K8s + CI/CD with GitHub Actions

- Final dashboard