If you find this repository helpful, please consider giving us a star⭐!

This repository contains a simple, unofficial implementation of Animate Anyone. It is built upon magic-animate and AnimateDiff.

The first training phase has passed basic testing; the second phase is currently being trained and tested.

Training may be slow due to GPU shortage.😢

The weights should be released within a few days.😄

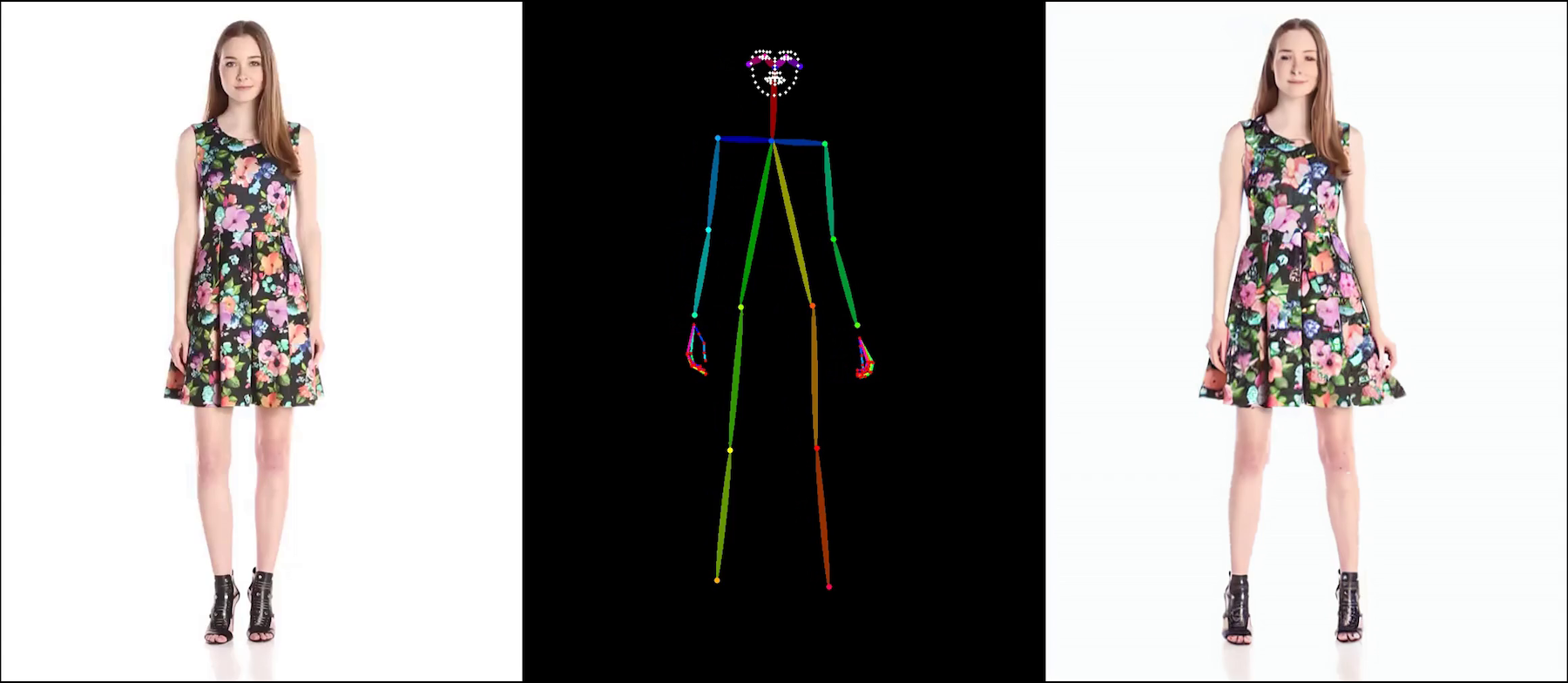

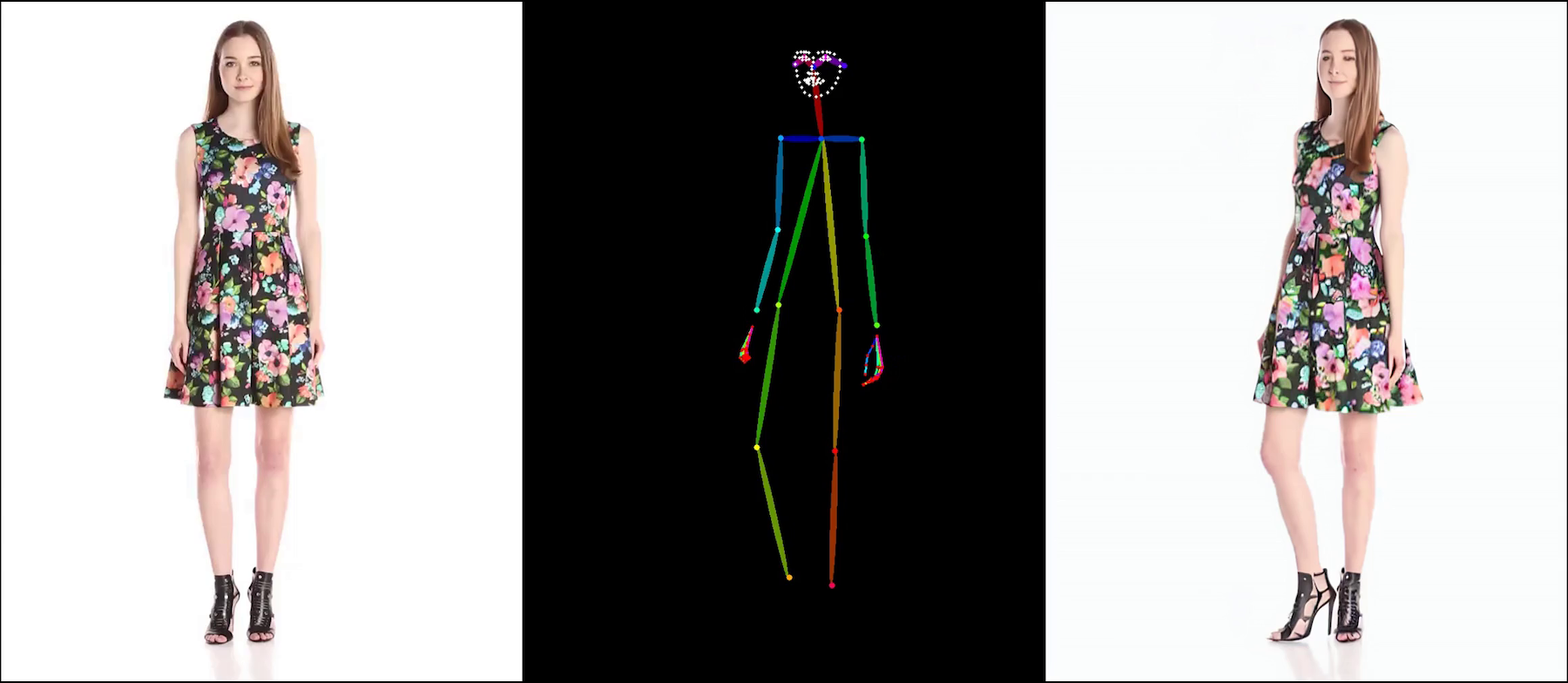

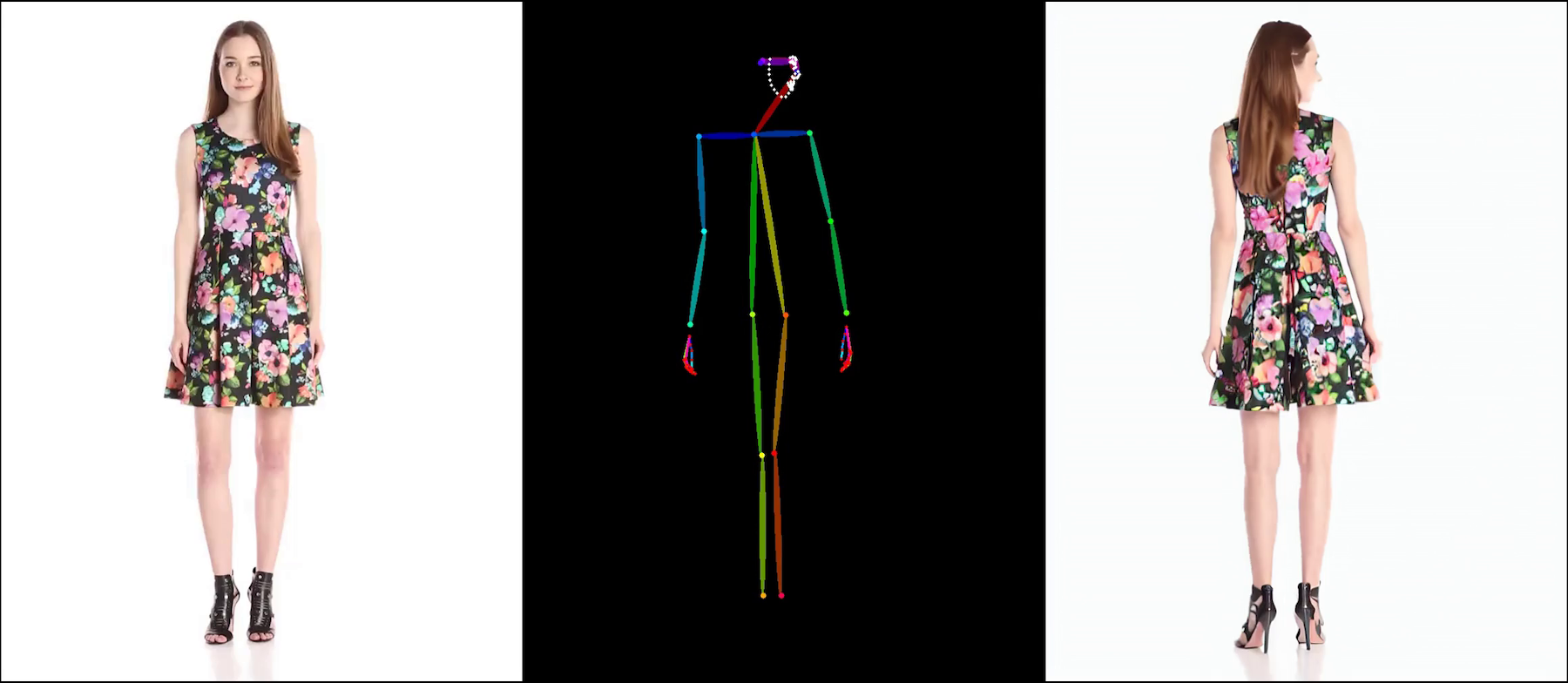

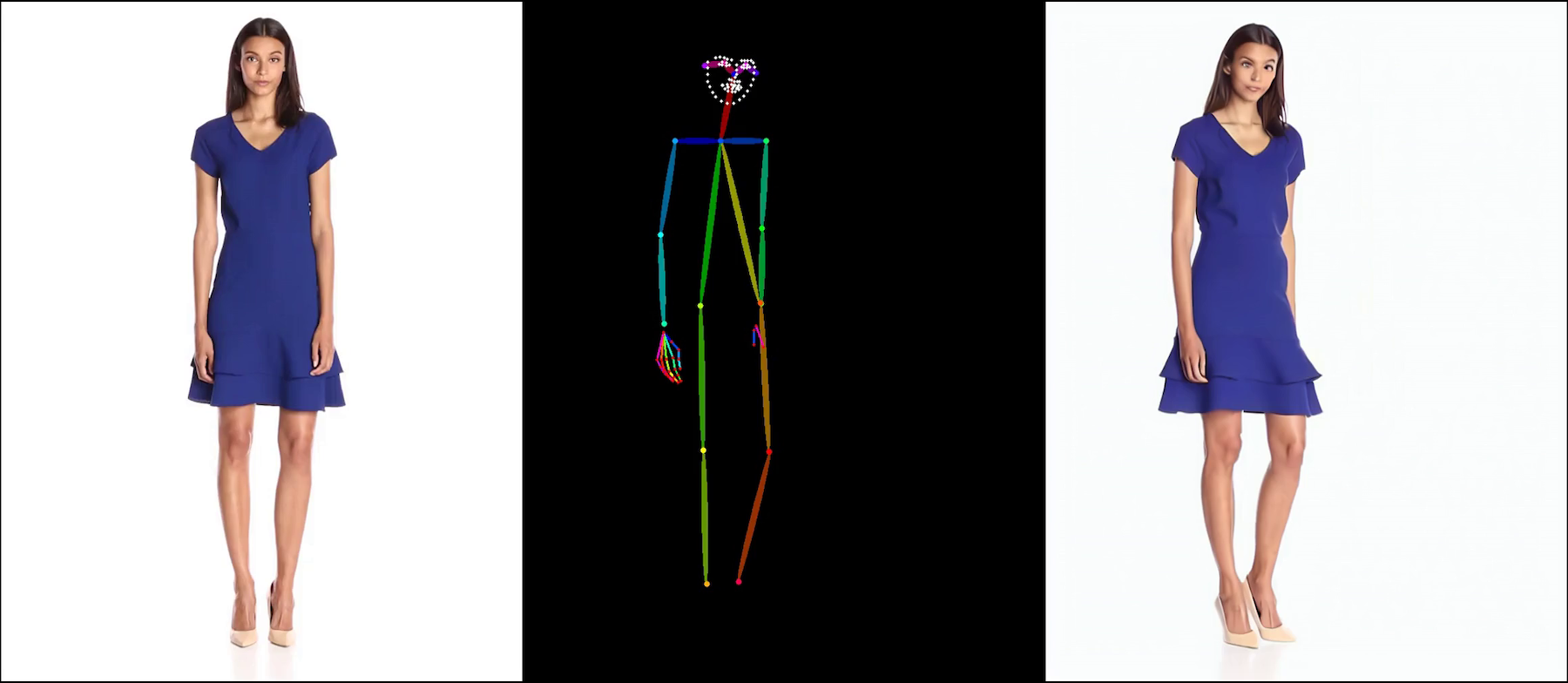

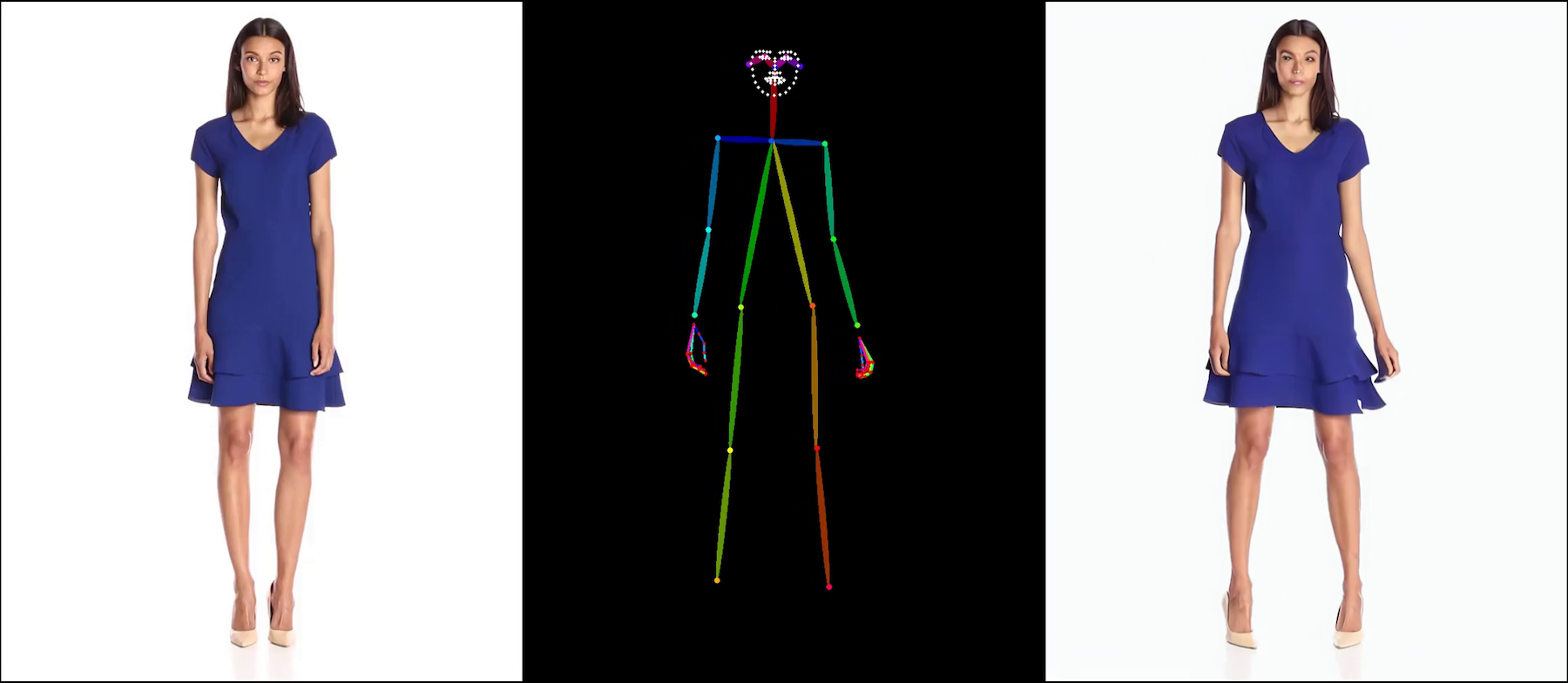

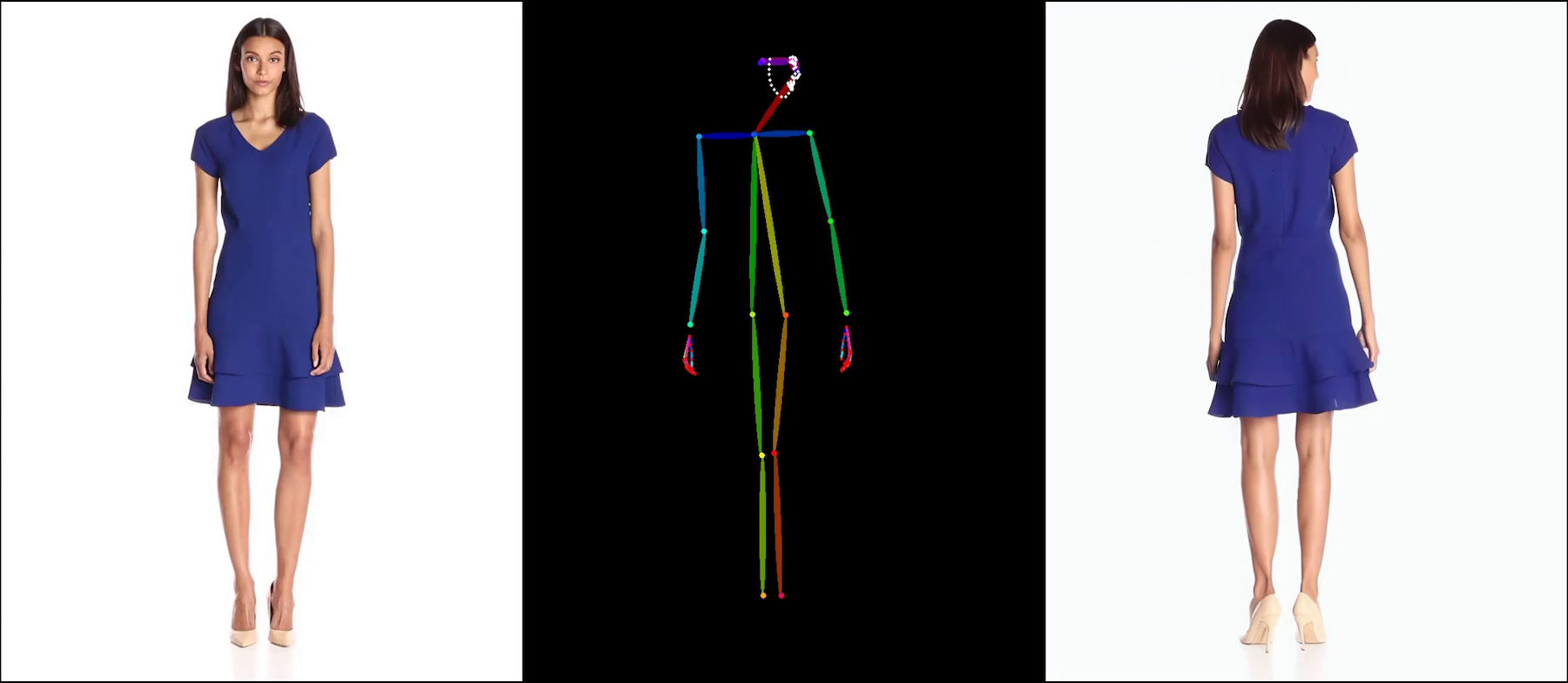

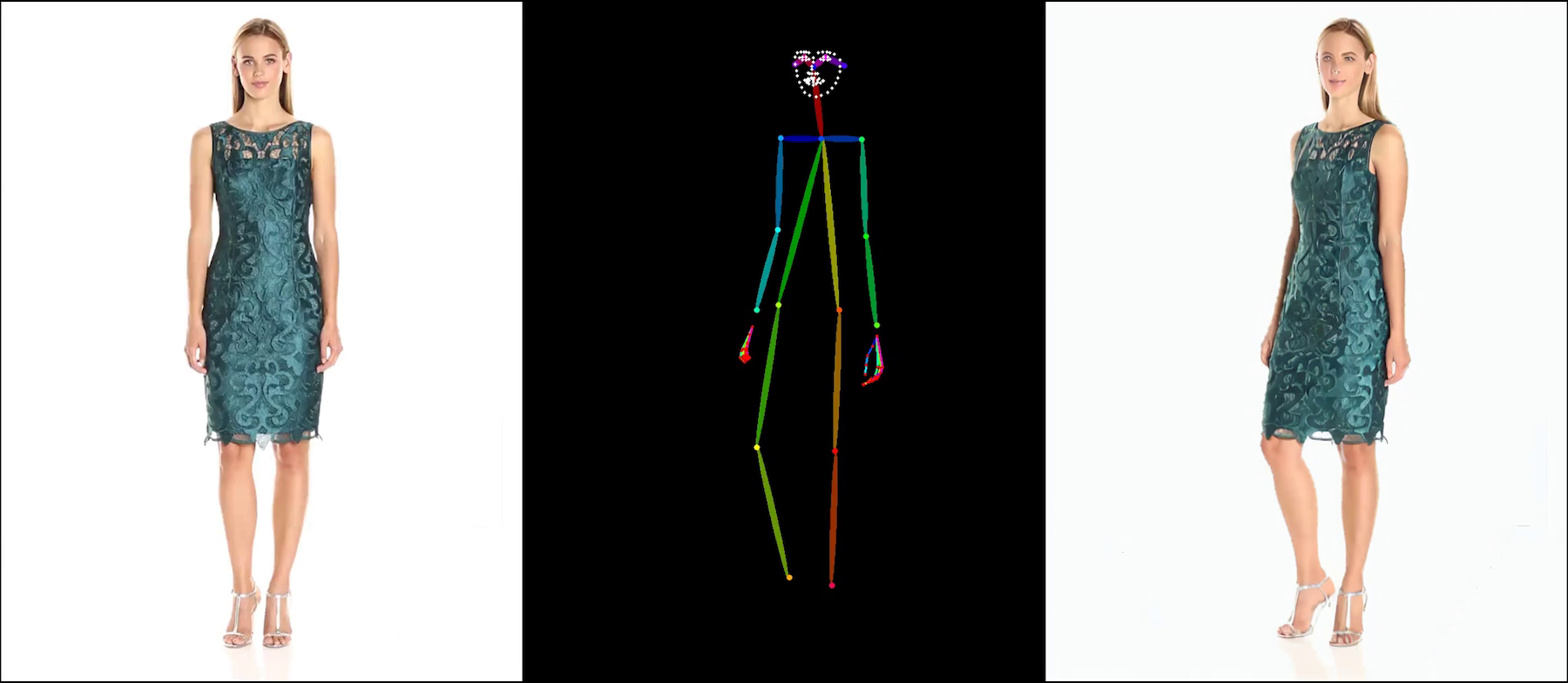

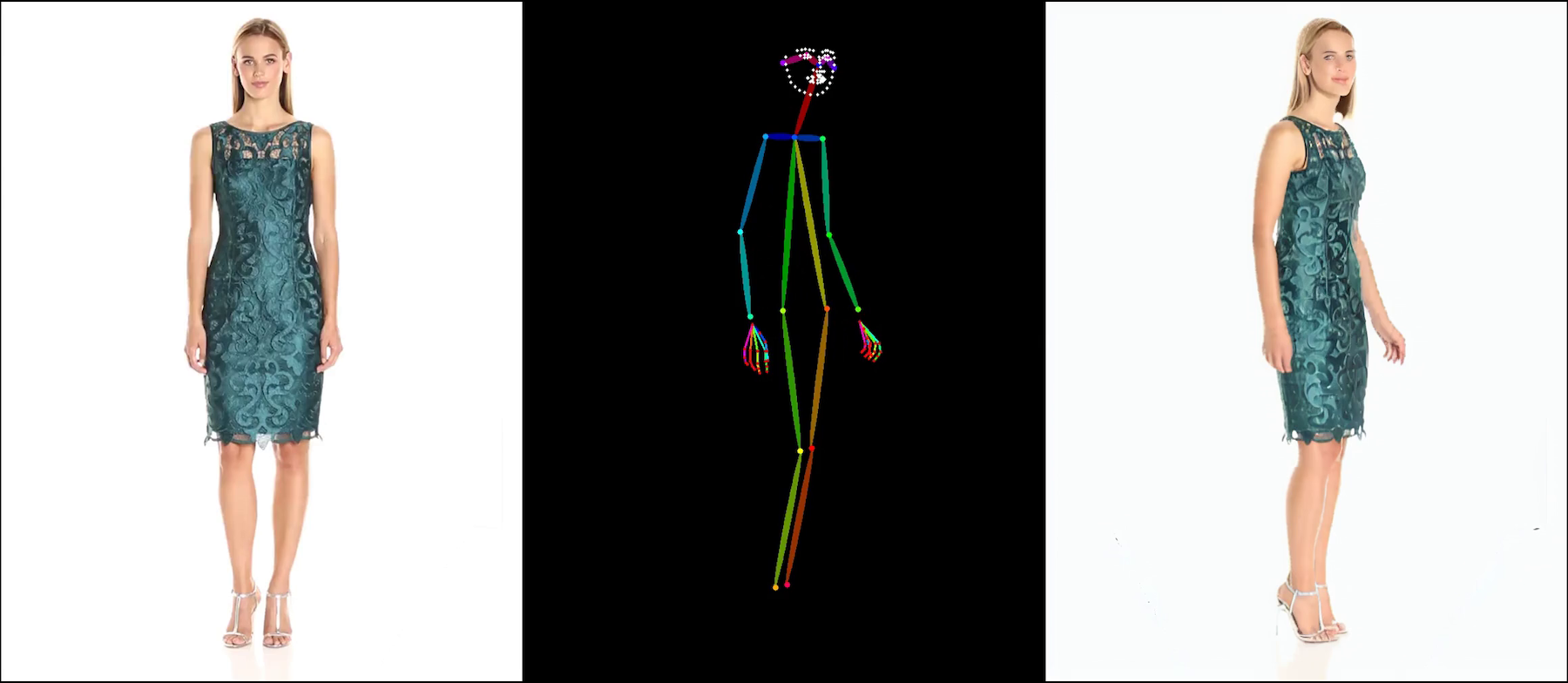

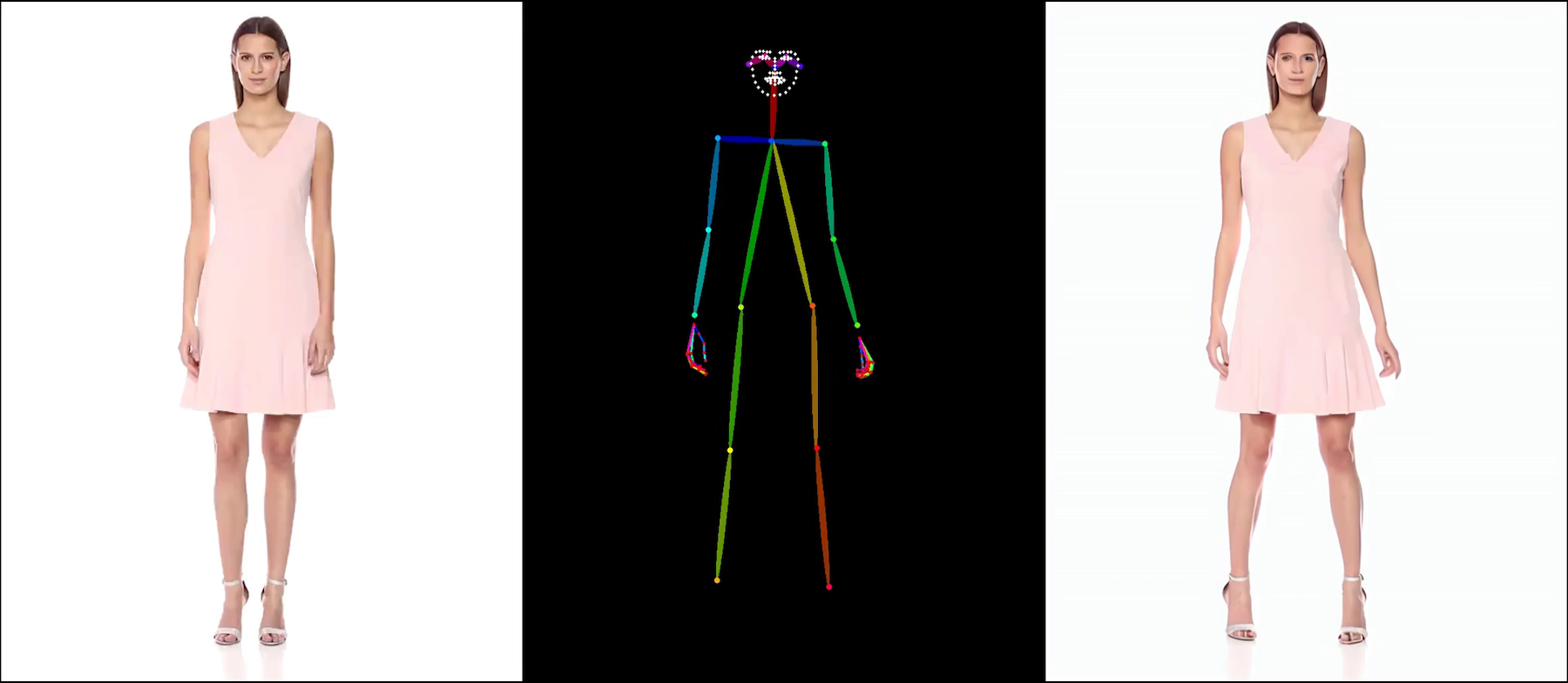

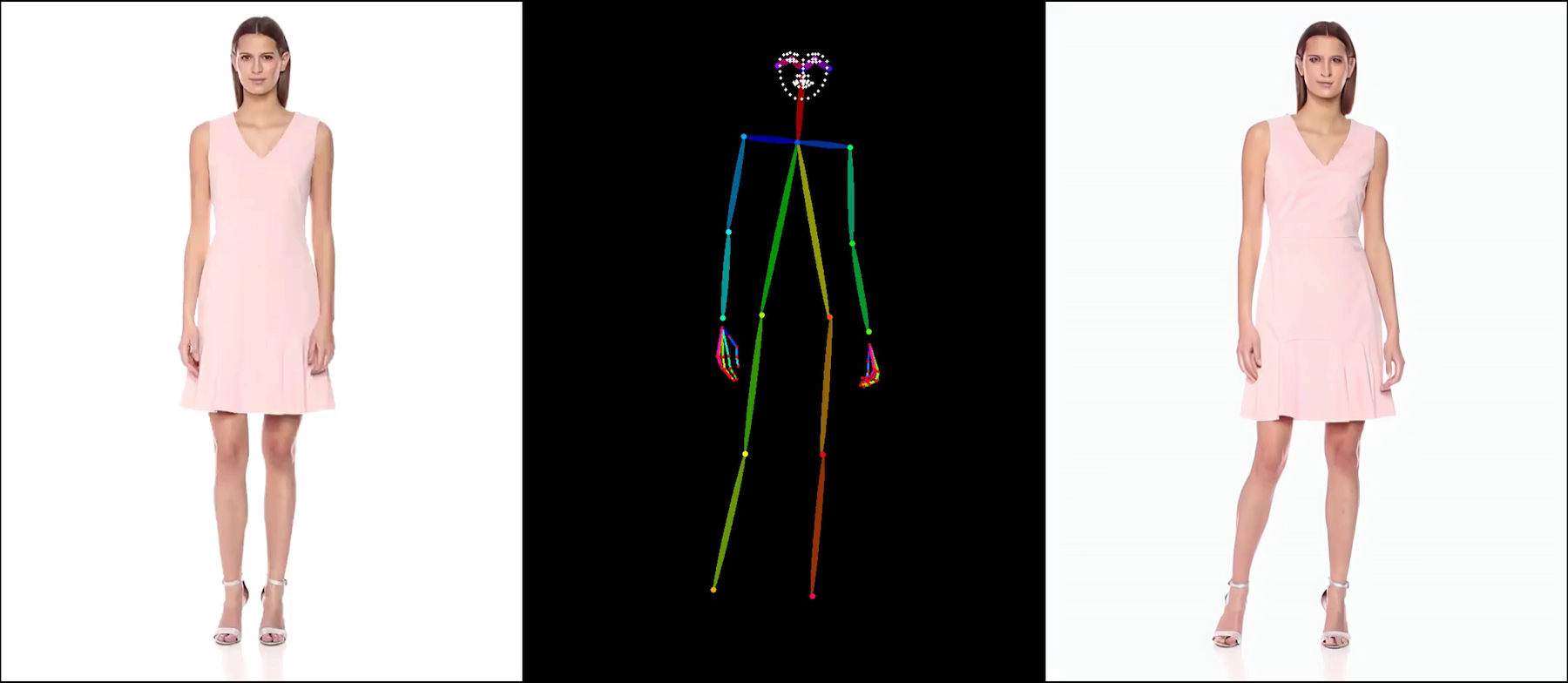

The current version still produces some artifacts on faces. Also, this model was trained on the UBC dataset rather than a large-scale dataset.

This project is under continuous part-time development, so there may be bugs in the code. Corrections are welcome! I will optimize the code after the pre-trained model is released.

In the current version, we recommend training on 8 or 16 A100 or H100 (80G) GPUs at 512 or 768 resolution. Low resolutions (256, 384) do not give good results! (The VAE reconstructs poorly at low resolution.)
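As a rough illustration of the resolution note above, the sketch below computes how many spatial positions the latent holds at each resolution. It assumes a Stable Diffusion style VAE with 8x spatial downsampling and 4 latent channels; these values are assumptions, not read from this repository's configs, and `latent_shape` is a hypothetical helper.

```python
from typing import Tuple

# Assumption: Stable Diffusion style VAE (8x downsampling, 4 latent channels).
# Check the VAE config actually used for training before relying on this.
VAE_DOWNSAMPLE = 8
LATENT_CHANNELS = 4


def latent_shape(resolution: int) -> Tuple[int, int, int]:
    """Return (channels, height, width) of the latent for a square image."""
    if resolution % VAE_DOWNSAMPLE != 0:
        raise ValueError(f"resolution must be a multiple of {VAE_DOWNSAMPLE}")
    side = resolution // VAE_DOWNSAMPLE
    return (LATENT_CHANNELS, side, side)


if __name__ == "__main__":
    for res in (256, 384, 512, 768):
        c, h, w = latent_shape(res)
        print(f"{res}x{res} image -> {c}x{h}x{w} latent ({h * w} positions)")
```

Under these assumptions a 256 image has a quarter of the latent positions of a 512 image, so small regions such as faces are encoded with far less detail.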

- Release Training Code.

- Release Inference Code.

- Release Unofficial Pre-trained Weights. (Note: trained on public datasets instead of large-scale private datasets, for academic research only.🤗)

- Release Gradio Demo.

- DeepSpeed + Accelerator Training.

Same as magic-animate.

or you can:

`bash fast_env.sh`

Training, stage 1:

`torchrun --nnodes=2 --nproc_per_node=8 train.py --config configs/training/train_stage_1.yaml`

Training, stage 2:

`torchrun --nnodes=2 --nproc_per_node=8 train.py --config configs/training/train_stage_2.yaml`

Inference, stage 1:

`python3 -m pipelines.animation_stage_1 --config configs/prompts/animation_stage_1.yaml`

Inference, stage 2:

`python3 -m pipelines.animation_stage_2 --config configs/prompts/animation_stage_2.yaml`

Special thanks to the original authors of the Animate Anyone project and the contributors to the magic-animate and AnimateDiff repositories for their open research and foundational work that inspired this unofficial implementation.

My responses may be slow. Please don't ask me nonsense questions.