- [2024.01.05] ⏭️ Check out my new OWOD paper, where I attempt to integrate foundation models into the OWOD objective!

- [2023.06.18] 🤝 Presenting at CVPR - come check out our poster, and discuss the future of OWOD.

- [2023.02.27] 🚀 PROB was accepted to CVPR 2023!

- [2022.12.02] First published on arXiv.

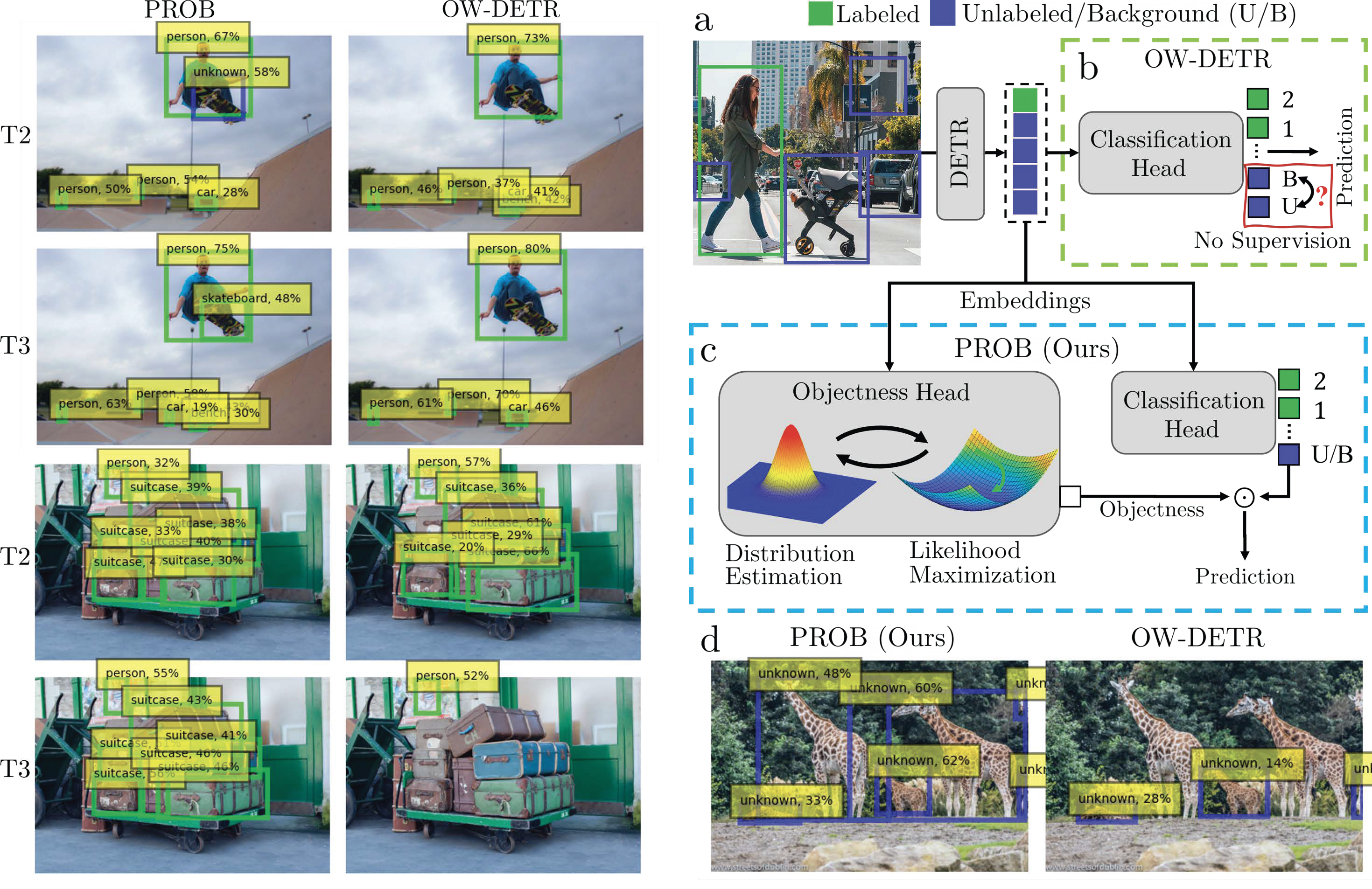

- Open World Object Detection (OWOD): A new computer vision task that extends traditional object detection to include both seen and unknown objects, aligning more with real-world scenarios.

- Challenges with Standard OD: Traditional methods incorrectly classify unknown objects as background, so they fail in OWOD settings.

- Novel Probabilistic Framework: Introduces a method for estimating objectness in embedded feature space, enhancing the identification of unknown objects.

- PROB: A Transformer-Based Detector: A new model that adapts existing OD models for OWOD, significantly improving unknown object detection.

- Superior Performance: PROB outperforms existing OWOD methods, doubling the recall for unknown objects and increasing known object detection mAP by 10%.
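To make the unknown-object recall metric concrete, here is a minimal sketch of how recall over unknown objects could be computed at an IoU threshold of 0.5. The function names are hypothetical, and the sketch assumes a ground-truth box counts as found when any predicted unknown box overlaps it enough (no one-to-one matching). It is an illustration, not the repository's evaluation code.

```python
def iou(a, b):
    """Intersection over union of two boxes given as (xmin, ymin, xmax, ymax)."""
    inter_w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = inter_w * inter_h
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def unknown_recall(gt_unknown, pred_unknown, threshold=0.5):
    """Fraction of unknown ground-truth boxes covered by some unknown prediction."""
    if not gt_unknown:
        return 0.0
    found = sum(
        1 for gt in gt_unknown
        if any(iou(gt, pred) >= threshold for pred in pred_unknown)
    )
    return found / len(gt_unknown)


# Two unknown objects in the image, only the first one is detected.
recall = unknown_recall(
    gt_unknown=[(0, 0, 10, 10), (20, 20, 30, 30)],
    pred_unknown=[(0, 0, 10, 9)],
)
```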

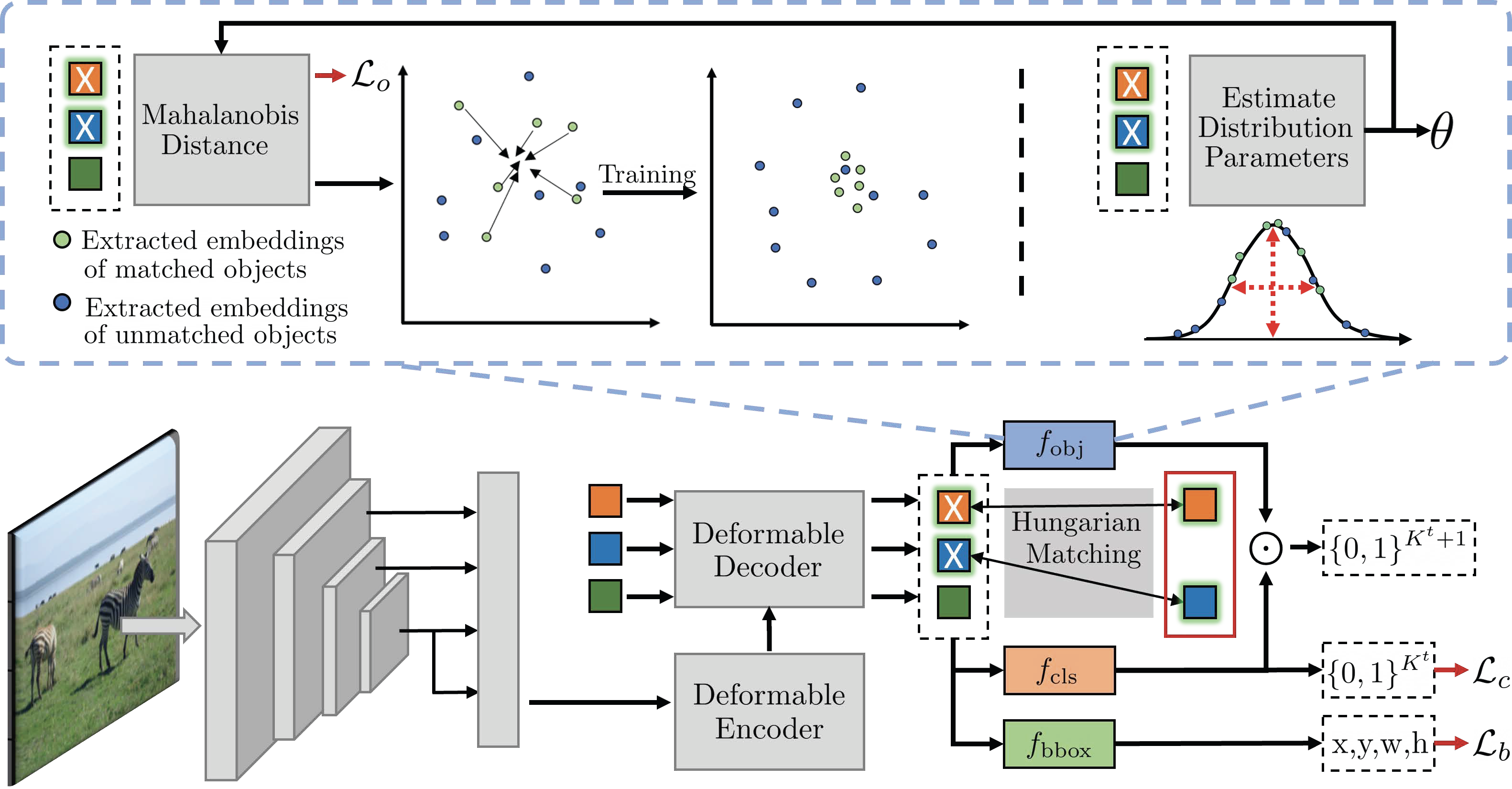

PROB adapts the Deformable DETR model by adding the proposed 'probabilistic objectness' head. In training, we alternate between distribution estimation (top right) and objectness likelihood maximization of matched ground-truth objects (top left). For inference, the objectness probability multiplies the classification probabilities. For more, see the manuscript.
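The inference rule described above (objectness probability multiplying the classification probabilities) can be sketched in a few lines. This is a plain-Python illustration with a hypothetical function name and made-up numbers; the actual model operates on tensors, and how its probabilities are produced is not shown here.

```python
def combine_scores(objectness, class_probs):
    """Scale each query's class probabilities by that query's objectness."""
    if len(objectness) != len(class_probs):
        raise ValueError("one objectness value is needed per query")
    return [
        [obj * prob for prob in probs]
        for obj, probs in zip(objectness, class_probs)
    ]


# Two queries, two classes: the second query looks like background
# (low objectness), so all of its class scores are suppressed.
scores = combine_scores(
    objectness=[0.9, 0.1],
    class_probs=[[0.7, 0.2], [0.6, 0.3]],
)
```

A query with a confident class prediction but low objectness therefore ends up with a low final score.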

| Method | Task 1 U-Recall | Task 1 mAP | Task 2 U-Recall | Task 2 mAP | Task 3 U-Recall | Task 3 mAP | Task 4 mAP |
|---|---|---|---|---|---|---|---|
| OW-DETR | 7.5 | 59.2 | 6.2 | 42.9 | 5.7 | 30.8 | 27.8 |
| PROB | 19.4 | 59.5 | 17.4 | 44.0 | 19.6 | 36.0 | 31.5 |
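As a quick worked comparison, the snippet below computes the per-task U-Recall ratio and the absolute mAP difference between PROB and OW-DETR, using only the numbers from the table above. The dictionary layout and variable names are illustrative.

```python
# Values copied from the results table (Tasks 1-3 for U-Recall, Tasks 1-4 for mAP).
results = {
    "OW-DETR": {"u_recall": [7.5, 6.2, 5.7], "map": [59.2, 42.9, 30.8, 27.8]},
    "PROB": {"u_recall": [19.4, 17.4, 19.6], "map": [59.5, 44.0, 36.0, 31.5]},
}

# How many times higher PROB's unknown recall is, per task.
u_recall_ratio = [
    prob / base
    for prob, base in zip(results["PROB"]["u_recall"], results["OW-DETR"]["u_recall"])
]

# Absolute mAP difference in points, per task.
map_gain = [
    prob - base
    for prob, base in zip(results["PROB"]["map"], results["OW-DETR"]["map"])
]

for task, ratio in enumerate(u_recall_ratio, start=1):
    print(f"Task {task}: U-Recall ratio {ratio:.2f}")
for task, gain in enumerate(map_gain, start=1):
    print(f"Task {task}: mAP difference {gain:+.1f}")
```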

We have trained and tested our models on Ubuntu 16.04, CUDA 11.1/11.3, GCC 5.4.0, and Python 3.10.4.

    conda create --name prob python==3.10.4
    conda activate prob
    pip install -r requirements.txt
    pip install torch==1.12.0+cu113 torchvision==0.13.0+cu113 torchaudio==0.12.0 --extra-index-url https://download.pytorch.org/whl/cu113

Download the self-supervised backbone from here and add it to the `models` folder.
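Before compiling the CUDA operators, it can help to confirm that the pinned package versions were actually installed. The following is a small optional sketch using only the standard library; the helper names are hypothetical and the expected versions are taken from the `pip install` command above.

```python
from importlib import metadata

# Versions pinned in the install command (local tags such as "+cu113" are ignored).
EXPECTED = {"torch": "1.12.0", "torchvision": "0.13.0", "torchaudio": "0.12.0"}


def installed_version(package):
    """Return the installed version string, or None if the package is absent."""
    try:
        return metadata.version(package)
    except metadata.PackageNotFoundError:
        return None


def check_versions(expected=EXPECTED):
    """Map each package name to True when its base version matches the pin."""
    report = {}
    for package, wanted in expected.items():
        found = installed_version(package)
        report[package] = found is not None and found.split("+")[0] == wanted
    return report


if __name__ == "__main__":
    for package, ok in check_versions().items():
        print(f"{package}: {'ok' if ok else 'missing or different version'}")
```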

    cd ./models/ops
    sh ./make.sh
    # unit test (should see all checking is True)
    python test.py

The file structure:

    PROB/
    └── data/
        └── OWOD/
            ├── JPEGImages
            ├── Annotations
            └── ImageSets
                ├── OWDETR
                ├── TOWOD
                └── VOC2007

The splits are present inside the `data/OWOD/ImageSets/` folder.
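A small check like the one below can confirm that the layout described above is in place before training. It is a sketch with hypothetical names; the list of expected directories is taken directly from the file structure shown above.

```python
from pathlib import Path

# Sub-directories expected under data/OWOD, per the file structure above.
EXPECTED_DIRS = [
    "JPEGImages",
    "Annotations",
    "ImageSets/OWDETR",
    "ImageSets/TOWOD",
    "ImageSets/VOC2007",
]


def missing_dirs(owod_root):
    """Return the expected sub-directories that do not exist under owod_root."""
    root = Path(owod_root)
    return [name for name in EXPECTED_DIRS if not (root / name).is_dir()]
```

For example, `missing_dirs("data/OWOD")` returns an empty list when every directory is present.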

- Download the COCO images and annotations from coco dataset into the `data/` directory.

- Unzip the `train2017` and `val2017` folders. The current directory structure should look like:

      PROB/
      └── data/
          └── coco/
              ├── annotations/
              ├── train2017/
              └── val2017/

- Move all images from `train2017/` and `val2017/` to the `JPEGImages` folder.

- Use the code `coco2voc.py` for converting json annotations to xml files.

- Download the PASCAL VOC 2007 & 2012 images and annotations from pascal dataset into the `data/` directory.

- Untar the trainval 2007 and 2012 and test 2007 folders.

- Move all the images to the `JPEGImages` folder and the annotations to the `Annotations` folder.
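The image-moving steps above can be scripted. The sketch below assumes the images use a `.jpg` extension and that existing files in the target folder should never be overwritten; the function name and its defaults are hypothetical.

```python
import shutil
from pathlib import Path


def move_images(source_root, jpeg_dir, splits=("train2017", "val2017")):
    """Move every .jpg from source_root/<split>/ into jpeg_dir; return the count."""
    jpeg_dir = Path(jpeg_dir)
    jpeg_dir.mkdir(parents=True, exist_ok=True)
    moved = 0
    for split in splits:
        for image in sorted((Path(source_root) / split).glob("*.jpg")):
            target = jpeg_dir / image.name
            if target.exists():
                continue  # keep the existing file rather than overwrite it
            shutil.move(str(image), str(target))
            moved += 1
    return moved
```

With the layout described earlier, a call could look like `move_images("data/coco", "data/OWOD/JPEGImages")`.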

Currently, we follow the VOC format for data loading and evaluation.
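For readers unfamiliar with the two annotation formats, the sketch below shows the core of a COCO-to-VOC box conversion: COCO stores boxes as `[x, y, width, height]`, while VOC XML stores `xmin`, `ymin`, `xmax`, `ymax`. This is not the repository's `coco2voc.py`; the function names are hypothetical, and rounding to integers without any pixel offset is an assumption.

```python
import xml.etree.ElementTree as ET


def coco_box_to_voc(bbox):
    """Convert a COCO [x, y, width, height] box to (xmin, ymin, xmax, ymax)."""
    x, y, width, height = bbox
    return (
        int(round(x)),
        int(round(y)),
        int(round(x + width)),
        int(round(y + height)),
    )


def voc_object(name, bbox):
    """Build a VOC-style <object> element for one annotated instance."""
    obj = ET.Element("object")
    ET.SubElement(obj, "name").text = name
    box = ET.SubElement(obj, "bndbox")
    for tag, value in zip(("xmin", "ymin", "xmax", "ymax"), coco_box_to_voc(bbox)):
        ET.SubElement(box, tag).text = str(value)
    return obj


xml_text = ET.tostring(voc_object("dog", [10.0, 20.0, 30.0, 40.0]), encoding="unicode")
```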

To train PROB on a single node with 4 GPUs, run

    bash ./run.sh

**Note:** you may need to give permissions to the .sh files under the `configs` and `tools` directories by running `chmod +x *.sh` in each directory.

By editing the `run.sh` file, you can choose which of the configurations defined in `configs/` to run:

- EVAL_M_OWOD_BENCHMARK.sh - evaluation of tasks 1-4 on the MOWOD Benchmark.

- EVAL_S_OWOD_BENCHMARK.sh - evaluation of tasks 1-4 on the SOWOD Benchmark.

- M_OWOD_BENCHMARK.sh - training for tasks 1-4 on the MOWOD Benchmark.

- M_OWOD_BENCHMARK_RANDOM_IL.sh - training for tasks 1-4 on the MOWOD Benchmark with random exemplar selection.

- S_OWOD_BENCHMARK.sh - training for tasks 1-4 on the SOWOD Benchmark.
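If the configuration scripts listed above are not executable, the permission fix from the note can also be applied programmatically. This is an optional sketch equivalent in spirit to running `chmod +x *.sh` in each directory; the function name is hypothetical and the default directory names follow the note.

```python
import stat
from pathlib import Path


def make_scripts_executable(directories=("configs", "tools")):
    """Add execute permission to every .sh file directly inside each directory."""
    changed = []
    for directory in directories:
        for script in sorted(Path(directory).glob("*.sh")):
            mode = script.stat().st_mode
            # Set the execute bit for user, group, and others.
            script.chmod(mode | stat.S_IXUSR | stat.S_IXGRP | stat.S_IXOTH)
            changed.append(script)
    return changed
```

Calling `make_scripts_executable()` from the repository root returns the list of scripts it touched.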

To train PROB on a Slurm cluster having 2 nodes with 8 GPUs each (not tested), run

    bash run_slurm.sh

**Note:** you may need to give permissions to the .sh files under the `configs` and `tools` directories by running `chmod +x *.sh` in each directory.

| System | Hyperparameters | Notes | Verified By |
|---|---|---|---|
| 2, 4, 8, 16 A100 (40G) | - | - | orrzohar |
| 2 A100 (80G) | lr_drop = 30 | Lower lr_drop required to sustain U-Recall. | #47 |
| 4 Titan RTX (24G) | lr_drop = 40, batch_size = 2 | class_error drops more slowly during training. | #26 |
| 4 3090 (24G) | lr_drop = 35, batch_size = 2 lr = 1e-4, lr_drop=35, batch_size = 3 | Performance drops to K_AP50 = 58.338, U_R50 = 19.443. | #48 |
| 1 2080Ti (11G) | lr = 2e-5, lr_backbone = 4e-6, batch size = 1, obj_temp = 1.3 | Performance drops to K_AP50 = 57.9826, U_R50 = 19.2624. | #50 |
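To illustrate what changing `lr_drop` does, the sketch below models a step schedule in which the learning rate is multiplied by 0.1 every `lr_drop` epochs. This assumes `lr_drop` keeps the meaning it has in Deformable DETR (a step-decay period); the decay factor of 0.1 and the function name are assumptions, not values confirmed by this README.

```python
def lr_at_epoch(base_lr, lr_drop, epoch, gamma=0.1):
    """Learning rate under step decay: multiply by gamma every lr_drop epochs."""
    return base_lr * gamma ** (epoch // lr_drop)


# With lr = 1e-4 and lr_drop = 35 (one of the rows above), the rate stays at its
# base value through epoch 34 and is reduced from epoch 35 onwards.
before_drop = lr_at_epoch(1e-4, lr_drop=35, epoch=34)
after_drop = lr_at_epoch(1e-4, lr_drop=35, epoch=35)
```

Under this reading, a smaller `lr_drop` simply moves the decay to an earlier epoch.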

To reproduce any of the aforementioned results, please download our weights and place them in the `exps` directory. Run the `run_eval.sh` file to utilize multiple GPUs.

**Note:** you may need to give permissions to the .sh files under the `configs` and `tools` directories by running `chmod +x *.sh` in each directory.

    PROB/
    └── exps/
        ├── MOWODB/
        |   └── PROB/ (t1.ph - t4.ph)
        └── SOWODB/
            └── PROB/ (t1.ph - t4.ph)
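Before launching evaluation, the checkpoint layout above can be verified with a short script. The sketch assumes that "(t1.ph - t4.ph)" denotes four files named `t1.ph` through `t4.ph` inside each `PROB/` folder; the function names are hypothetical.

```python
from pathlib import Path


def expected_checkpoints(exps_root="exps", benchmarks=("MOWODB", "SOWODB"), tasks=range(1, 5)):
    """List the checkpoint paths implied by the directory layout above."""
    return [
        Path(exps_root) / benchmark / "PROB" / f"t{task}.ph"
        for benchmark in benchmarks
        for task in tasks
    ]


def missing_checkpoints(exps_root="exps"):
    """Return the expected checkpoint paths that are not present on disk."""
    return [path for path in expected_checkpoints(exps_root) if not path.is_file()]
```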

Note: Please check the Deformable DETR repository for more training and evaluation details.

If you use PROB, please consider citing:

    @InProceedings{Zohar_2023_CVPR,
        author    = {Zohar, Orr and Wang, Kuan-Chieh and Yeung, Serena},
        title     = {PROB: Probabilistic Objectness for Open World Object Detection},
        booktitle = {Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR)},
        month     = {June},
        year      = {2023},
        pages     = {11444-11453}
    }

Should you have any questions, please contact 📧 orrzohar@stanford.edu

PROB builds on the code bases of previous works such as OW-DETR, Deformable DETR, Detreg, and OWOD. If you found PROB useful, please consider citing these works as well.