Code for our paper at The Web Conference 2024, GraphTranslator: Aligning Graph Model to Large Language Model for Open-ended Tasks.

Authors: Mengmei Zhang, Mingwei Sun, Peng Wang, Shen Fan, Yanhu Mo, Xiaoxiao Xu, Hong Liu, Cheng Yang, Chuan Shi

-

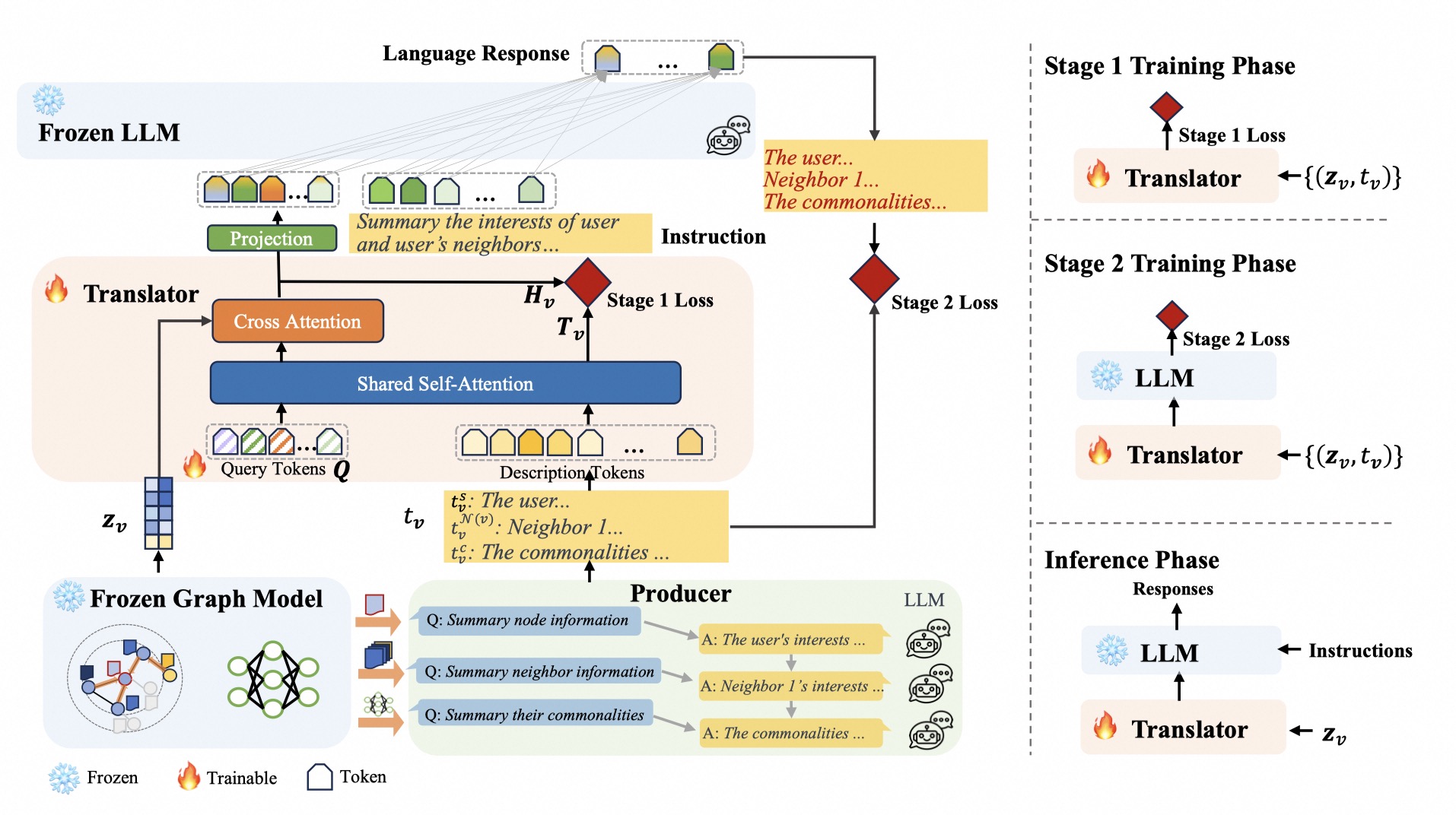

Pre-training Graph Model Phase. In the pre-training phase, we employ link prediction as the self-supervised task for pre-training the graph model.

- Producer Phase. In the Producer phase, we employ an LLM to summarize node and neighbor information.

- Translator Training Phase.

  Stage 1: Training the Translator for GraphModel-Text alignment.

  Stage 2: Training the Translator for GraphModel-LLM alignment.

- Translator Generate Phase. Generate the predictions with the pre-trained Translator model.
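As a rough illustration of the link-prediction objective named in the pre-training phase, the sketch below scores a node pair by the dot product of the two node embeddings and applies binary cross-entropy, with observed edges as positives and sampled non-edges as negatives. This is not the repository's training code: the toy embeddings, the helper names (`sample_negative_edges`, `link_prediction_loss`) and the dot-product decoder are assumptions made for illustration only.

```python
import math
import random


def sigmoid(x: float) -> float:
    """Numerically stable logistic function."""
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    z = math.exp(x)
    return z / (1.0 + z)


def dot(u, v) -> float:
    """Dot product of two equal-length vectors."""
    return sum(a * b for a, b in zip(u, v))


def sample_negative_edges(num_nodes, positive_edges, num_samples, seed=0):
    """Sample node pairs that are not observed edges (hypothetical helper)."""
    rng = random.Random(seed)
    observed = {frozenset(e) for e in positive_edges}
    negatives = []
    while len(negatives) < num_samples:
        u, v = rng.randrange(num_nodes), rng.randrange(num_nodes)
        if u != v and frozenset((u, v)) not in observed:
            negatives.append((u, v))
    return negatives


def link_prediction_loss(embeddings, positive_edges, negative_edges, eps=1e-12):
    """Mean binary cross-entropy over positive (label 1) and negative (label 0) pairs."""
    total = 0.0
    for u, v in positive_edges:
        total += -math.log(sigmoid(dot(embeddings[u], embeddings[v])) + eps)
    for u, v in negative_edges:
        total += -math.log(1.0 - sigmoid(dot(embeddings[u], embeddings[v])) + eps)
    return total / (len(positive_edges) + len(negative_edges))


# Toy example: 4 nodes with 2-d embeddings; nodes 0-1 and 2-3 are linked.
embeddings = [[2.0, 0.0], [2.0, 0.1], [0.0, 2.0], [0.1, 2.0]]
positive_edges = [(0, 1), (2, 3)]
negative_edges = sample_negative_edges(4, positive_edges, num_samples=2)
loss = link_prediction_loss(embeddings, positive_edges, negative_edges)
print(f"link prediction loss: {loss:.4f}")
```

In an actual pre-training run the embeddings would come from the graph model and the loss would be minimized by gradient descent; the sketch only evaluates the objective on fixed vectors.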

We ran our experiments with the following settings.

- CPU: Intel(R) Xeon(R) Platinum 8163 CPU @ 2.50GHz

- GPU: Tesla V100-SXM2-32GB

- OS: Linux (Ubuntu 18.04.6 LTS)

- Python==3.9, CUDA==11.4, Pytorch==1.12.1

The file ./requirements.txt lists all Python libraries that GraphTranslator depends on. You can install them with:

conda create -n graphtranslator python=3.9

conda activate graphtranslator

git clone https://github.com/alibaba/GraphTranslator.git

cd GraphTranslator/

pip install -r requirements.txt
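After installation, a quick check along the following lines can confirm that the active environment matches the versions listed in the settings. It is a minimal sketch using only the standard library; the function names are illustrative, and `torch` is assumed to be the distribution name under which PyTorch is installed.

```python
import sys
from importlib import metadata

# Versions taken from the settings listed above.
EXPECTED_PYTHON = (3, 9)
EXPECTED_PACKAGES = {"torch": "1.12.1"}


def installed_version(dist_name: str):
    """Return the installed version of a distribution, or None if it is absent."""
    try:
        return metadata.version(dist_name)
    except metadata.PackageNotFoundError:
        return None


def check_environment():
    """Collect human-readable warnings; an empty list means everything matches."""
    warnings = []
    if sys.version_info[:2] != EXPECTED_PYTHON:
        found = ".".join(str(part) for part in sys.version_info[:2])
        warnings.append(f"Python {found} found, expected 3.9")
    for name, expected in EXPECTED_PACKAGES.items():
        found = installed_version(name)
        if found is None:
            warnings.append(f"{name} is not installed")
        elif not found.startswith(expected):
            # Prefix match so that local build tags such as "+cu113" still pass.
            warnings.append(f"{name} {found} found, expected {expected}")
    return warnings


for message in check_environment():
    print("WARNING:", message)
```

A mismatch is reported as a warning only, since other version combinations may also work.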

Download the datasets and model checkpoints used in this project with the Hugging Face API (recommended).

pip install -U huggingface_hub

pip install huggingface-cli

ArXiv Dataset

Download the files bert_node_embeddings.pt, graphsage_node_embeddings.pt and titleabs.tsv from the link and place them in ./data/arxiv.

huggingface-cli download --resume-download --local-dir-use-symlinks False --repo-type dataset Hualouz/GraphTranslator-arxiv --local-dir ./data/arxiv
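The same download can also be driven from Python, followed by a check that the three expected files arrived. The sketch below assumes the `huggingface_hub` package installed above and uses its `snapshot_download` function. The helper names `download_arxiv_dataset` and `missing_files` are illustrative and not part of the repository.

```python
from pathlib import Path

# File names listed in the ArXiv Dataset step above.
ARXIV_FILES = ("bert_node_embeddings.pt", "graphsage_node_embeddings.pt", "titleabs.tsv")


def download_arxiv_dataset(local_dir="./data/arxiv"):
    """Fetch the dataset repository with huggingface_hub (needs network access)."""
    from huggingface_hub import snapshot_download  # imported lazily

    return snapshot_download(
        repo_id="Hualouz/GraphTranslator-arxiv",
        repo_type="dataset",
        local_dir=local_dir,
    )


def missing_files(data_dir="./data/arxiv", expected=ARXIV_FILES):
    """Return the expected file names that are not present in data_dir."""
    root = Path(data_dir)
    return [name for name in expected if not (root / name).is_file()]


if __name__ == "__main__":
    missing = missing_files()
    if missing:
        print("Missing files:", ", ".join(missing))
    else:
        print("All ArXiv dataset files are in place.")
```

Call `download_arxiv_dataset()` from the repository root first if the files have not been fetched yet; the check itself does not touch the network.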

Translator Model

Download bert-base-uncased.zip from the link and unzip it in ./Translator/models.

huggingface-cli download --resume-download Hualouz/Qformer --local-dir ./Translator/models --local-dir-use-symlinks False

cd Translator/models/

unzip bert-base-uncased.zip

ChatGLM2-6B Model

Download the ChatGLM2-6B model from the link and place it under ./Translator/models.

huggingface-cli download --resume-download THUDM/chatglm2-6b --local-dir ./Translator/models/chatglm2-6b --local-dir-use-symlinks False

- Generate node summary text with LLM (ChatGLM2-6B).

cd ./Producer/inference

python producer.py
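To give a sense of what this step involves, the sketch below builds a summarization prompt from a paper's title and abstract and passes it to a locally downloaded ChatGLM2-6B through the `chat` method exposed by that model's remote code. The prompt wording, the function names and the model path (written relative to the repository root) are assumptions for illustration; producer.py may be organized differently.

```python
def build_summary_prompt(title: str, abstract: str) -> str:
    """Hypothetical prompt template; producer.py may use different wording."""
    return (
        "The following is the title and abstract of a paper.\n"
        f"Title: {title.strip()}\n"
        f"Abstract: {abstract.strip()}\n"
        "Please summarize the topic and content of this paper."
    )


def summarize_with_chatglm2(prompt: str, model_dir: str = "./Translator/models/chatglm2-6b") -> str:
    """Load ChatGLM2-6B from a local directory and return its reply (needs a GPU)."""
    from transformers import AutoModel, AutoTokenizer  # imported lazily

    tokenizer = AutoTokenizer.from_pretrained(model_dir, trust_remote_code=True)
    model = AutoModel.from_pretrained(model_dir, trust_remote_code=True).half().cuda().eval()
    response, _history = model.chat(tokenizer, prompt, history=[])
    return response


# Toy input, not taken from titleabs.tsv.
prompt = build_summary_prompt("GraphTranslator", "  We align a graph model with an LLM.  ")
print(prompt)
```

Only the prompt construction runs here; `summarize_with_chatglm2(prompt)` loads the full model on every call, so a real script would load it once and reuse it across nodes.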

Train the Translator model with the prepared ArXiv dataset.

- Stage 1 Training

Train the Translator for GraphModel-Text alignment. The training configurations are in the file ./Translator/train/pretrain_arxiv_stage1.yaml.

cd ./Translator/train

python train.py --cfg-path ./pretrain_arxiv_stage1.yaml

After stage 1, you will get a model checkpoint stored in ./Translator/model_output/pretrain_arxiv_stage1/checkpoint_0.pth.

- Stage 2 Training

Train the Translator for GraphModel-LLM alignment. The training configurations are in the file ./Translator/train/pretrain_arxiv_stage2.yaml.

cd ./Translator/train

python train.py --cfg-path ./pretrain_arxiv_stage2.yaml

After stage 2, you will get a model checkpoint stored in ./Translator/model_output/pretrain_arxiv_stage2/checkpoint_0.pth.
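The two stages can be chained from a small driver script. The sketch below is one possible wrapper, not something shipped with the repository: it reuses the commands and checkpoint paths given above, assumes it is launched from the repository root, and stops if a stage fails or does not leave its checkpoint behind.

```python
import subprocess
from pathlib import Path

TRAIN_DIR = Path("./Translator/train")
OUTPUT_DIR = Path("./Translator/model_output")


def stage_command(stage: int):
    """Command line for one training stage, as given in the steps above."""
    return ["python", "train.py", "--cfg-path", f"./pretrain_arxiv_stage{stage}.yaml"]


def stage_checkpoint(stage: int) -> Path:
    """Checkpoint path that the stage is expected to write."""
    return OUTPUT_DIR / f"pretrain_arxiv_stage{stage}" / "checkpoint_0.pth"


def run_all_stages():
    """Run stage 1 then stage 2, stopping if a stage fails or leaves no checkpoint."""
    for stage in (1, 2):
        subprocess.run(stage_command(stage), cwd=TRAIN_DIR, check=True)
        if not stage_checkpoint(stage).is_file():
            raise FileNotFoundError(f"expected checkpoint not found: {stage_checkpoint(stage)}")


# Show what would be executed; call run_all_stages() to actually train.
for stage in (1, 2):
    print(" ".join(stage_command(stage)), "->", stage_checkpoint(stage))
```

Using `check=True` makes a non-zero exit code from train.py raise immediately, so stage 2 never starts after a failed stage 1.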

After all training stages, you will have a model checkpoint that can translate GraphModel information into a form that the LLM can understand.

- Note: The training phase is not necessary if you only want to obtain inference results with our pre-trained model checkpoint. You can download our pre-trained checkpoint checkpoint_0.pth from the link and place it in the ./Translator/model_output/pretrain_arxiv_stage2 directory. Then skip the whole Training Phase and go to the Generate Phase.

- Generate predictions with the pre-trained Translator model. The generation configurations are in the file ./Translator/train/pretrain_arxiv_generate_stage2.yaml. Depending on inference speed, it may take a while to generate all the predictions and save them to a file.

cd ./Translator/train

python generate.py

The generated prediction results will be saved in ./data/arxiv/pred.txt.

Evaluate the accuracy of the generated predictions.

cd ./Evaluate

python eval.py
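For orientation, accuracy in its simplest form is the fraction of predictions that match the ground-truth label. The sketch below shows that computation on toy data. It assumes predictions and labels have already been parsed into parallel lists of category strings; how eval.py parses ./data/arxiv/pred.txt, and whether it reports this exact metric, is not specified here.

```python
def normalize(label: str) -> str:
    """Lower-case and trim so that trivial formatting differences do not count as errors."""
    return " ".join(label.lower().split())


def accuracy(predictions, labels) -> float:
    """Fraction of positions where the normalized prediction equals the normalized label."""
    if len(predictions) != len(labels):
        raise ValueError("predictions and labels must have the same length")
    if not labels:
        return 0.0
    correct = sum(normalize(p) == normalize(t) for p, t in zip(predictions, labels))
    return correct / len(labels)


# Toy data, not taken from ./data/arxiv/pred.txt.
predictions = ["cs.LG", "cs.CV ", "cs.CL"]
labels = ["cs.lg", "cs.CV", "cs.IR"]
print(f"accuracy: {accuracy(predictions, labels):.2f}")  # prints "accuracy: 0.67"
```

Normalizing case and whitespace before comparison is a design choice for free-form LLM output; a stricter evaluation would compare the raw strings.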

@article{zhang2024graphtranslator,

title={GraphTranslator: Aligning Graph Model to Large Language Model for Open-ended Tasks},

author={Zhang, Mengmei and Sun, Mingwei and Wang, Peng and Fan, Shen and Mo, Yanhu and Xu, Xiaoxiao and Liu, Hong and Yang, Cheng and Shi, Chuan},

journal={arXiv preprint arXiv:2402.07197},

year={2024}

}

Thanks to all the previous works that we used and that inspired us.