An open-source Kafka visualizer with Dead Letter Queue support for failed messages. Built for JavaScript developers!

- About the Project

- Getting Started

- Features

- Prometheus Server and Pre-configured Cluster

- Roadmap

- Contributors

- License

Kafe is an open-source application that helps independent developers monitor and manage their Apache Kafka clusters, with built-in debugging and failed-message support. With Kafe you can monitor key metrics for your cluster, brokers, and topics, and take action accordingly. Through the UI you can:

- Monitor key performance metrics for your cluster and brokers with real-time charts, and get insights into your cluster from info cards.

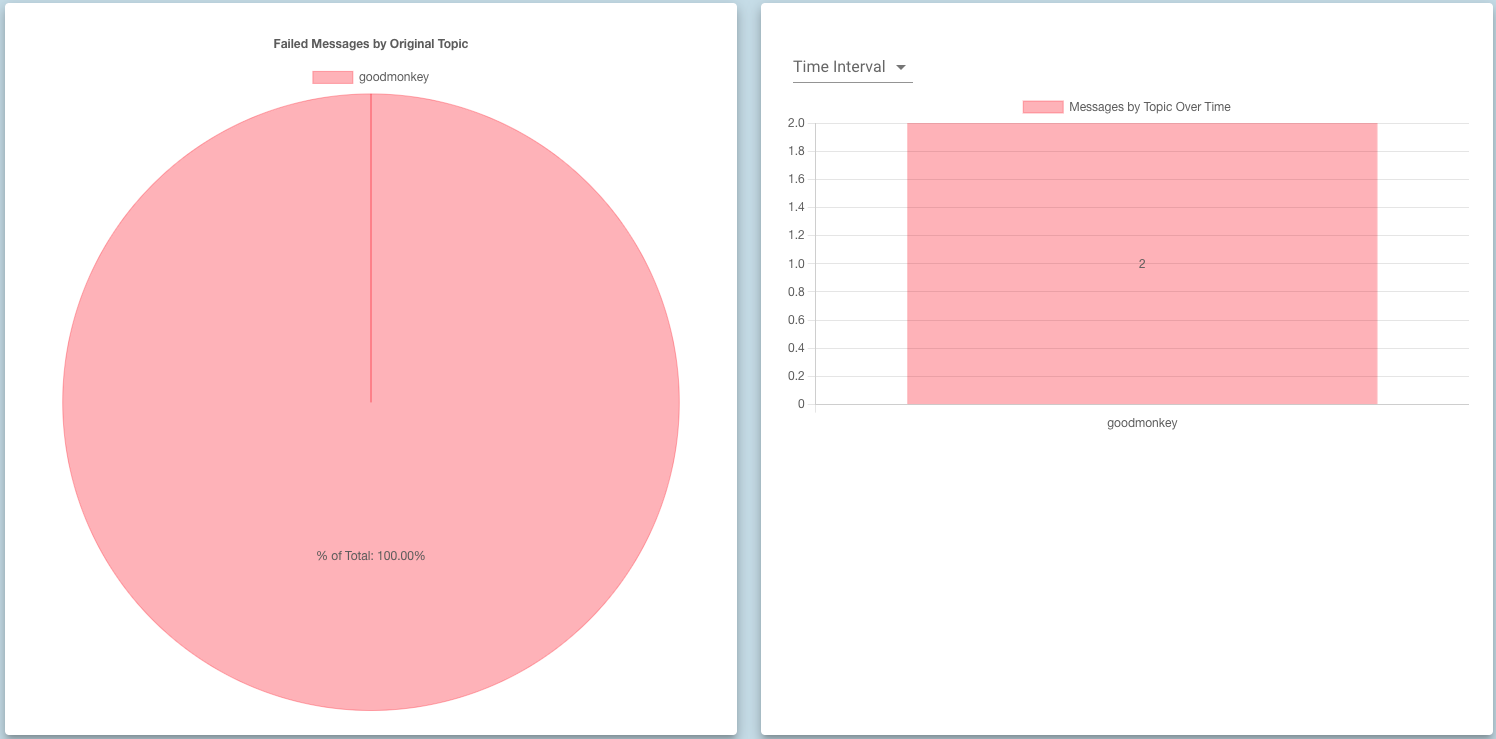

- Diagnose and debug your topics with a Dead Letter Queue table of failed messages and analytic charts.

- Create and delete topics within a cluster.

- Reassign partition replicas to help with load balancing and to resolve under-replication issues.

Do the following to get set up with Kafe:

- Have Node.js installed. Kafe is built to work on Node 14+.

- If you want to use our pre-configured Kafka cluster, have Docker Desktop and Docker Compose installed and follow the demo instructions.

- Download the JMX exporter and add it to your Kafka cluster configuration. You can find the configuration files and a copy of the JMX exporter jar file in the `configs/jmx_exp` folder in this repo. Use the earlier version (`jmx_prometheus_javaagent-0.16.1`).

  - If you're starting your Kafka cluster from the CLI, you can set up the JMX exporter with the following command:

    `export KAFKA_OPTS='-javaagent:{PATH_TO_JMX_EXPORTER}/jmx-exporter.jar={PORT}:{PATH_TO_JMX_EXPORTER_KAFKA.yml}/kafka.yml'`

  - Launch and start your brokers as you normally would.

- Be sure to have a Prometheus metrics server running with the proper targets exposed on your brokers. You can find an example of the Prometheus settings we use for our demo cluster in `configs/prometheus/prometheus.yml`.

  Refer to the docker-compose files on how to use Docker to launch a pre-configured cluster with proper exposure to Prometheus.
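The exporter and Prometheus steps above can be sketched in JavaScript. The helpers below are hypothetical (they are not part of Kafe): `kafkaOptsFor` assembles the `-javaagent:<jar>=<port>:<config>` argument that the `KAFKA_OPTS` export expects, and `findDownTargets` reads the JSON returned by Prometheus's HTTP query API for the built-in `up` metric and lists any scrape targets reporting `0`. `listDownTargets` assumes Prometheus is on its default port 9090 and that a global `fetch` is available (Node 18+, newer than the Node 14 minimum above).

```javascript
// Hypothetical helpers for the JMX exporter -> Prometheus pipeline (not part of Kafe).

// Builds the value for KAFKA_OPTS. The JMX exporter Java agent takes
// -javaagent:<path to jar>=<HTTP port to serve metrics on>:<path to exporter config>
function kafkaOptsFor({ jarPath, port, configPath }) {
  if (!Number.isInteger(port) || port < 1 || port > 65535) {
    throw new RangeError(`invalid exporter port: ${port}`);
  }
  return `-javaagent:${jarPath}=${port}:${configPath}`;
}

// Takes the parsed JSON of a Prometheus instant query for the built-in `up` metric
// and returns the `instance` label of every target whose last scrape failed.
function findDownTargets(body) {
  if (body.status !== 'success') {
    throw new Error(`Prometheus query failed: ${body.error || body.status}`);
  }
  return body.data.result
    // Each sample's value is [unixSeconds, "value"]; "0" means the scrape failed
    .filter((sample) => sample.value[1] === '0')
    .map((sample) => sample.metric.instance);
}

// Queries a running Prometheus server (default port 9090). Needs a global fetch (Node 18+).
async function listDownTargets(baseUrl = 'http://localhost:9090') {
  const url = new URL('/api/v1/query', baseUrl);
  url.searchParams.set('query', 'up');
  const res = await fetch(url);
  return findDownTargets(await res.json());
}
```

For example, with illustrative paths and port, `kafkaOptsFor({ jarPath: '/opt/jmx/jmx_prometheus_javaagent-0.16.1.jar', port: 7071, configPath: '/opt/jmx/kafka.yml' })` returns `-javaagent:/opt/jmx/jmx_prometheus_javaagent-0.16.1.jar=7071:/opt/jmx/kafka.yml`. An empty array from `listDownTargets()` means every configured target was scraped successfully.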

- Clone this repository:

  `git clone https://github.com/oslabs-beta/Kafe.git`

- Create a `.env` file using the template in the `.env.template` file to set the environment variables.

- In the Kafe root directory, install all dependencies:

  `npm install`

- Build your version of Kafe:

  `npm run build`

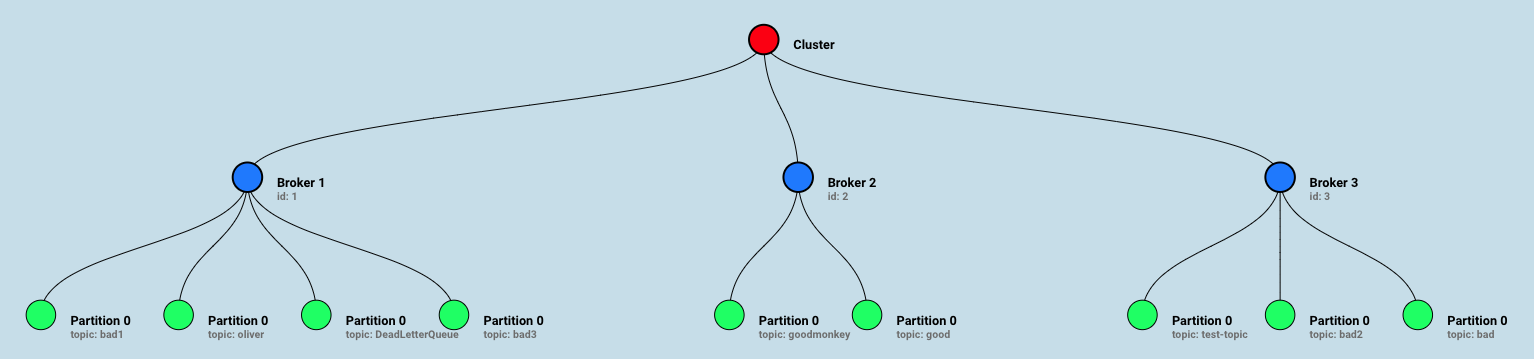

- When you first load Kafe, you can navigate to "home" to see a tree representing your Kafka cluster. It shows how all of your partitions are distributed and which brokers they belong to. Each node can be expanded or collapsed for easier viewing.
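The home tree groups partitions under brokers. A hypothetical sketch of that grouping (not Kafe's implementation), assuming the metadata shape returned by KafkaJS's `admin.fetchTopicMetadata()`: each partition is placed under its leader broker and flagged as under-replicated when its in-sync replica set (`isr`) is smaller than its replica list. The `buildClusterTree` name and the `{ name, children }` node shape are assumptions, chosen because many tree-rendering components accept that shape.

```javascript
// Hypothetical sketch: turn topic metadata into a cluster -> broker -> partition tree.
// Input shape assumed to match KafkaJS admin.fetchTopicMetadata():
//   { topics: [{ name, partitions: [{ partitionId, leader, replicas, isr }] }] }
function buildClusterTree(metadata, clusterName = 'cluster') {
  const byBroker = new Map(); // leader broker id -> array of partition nodes

  for (const topic of metadata.topics) {
    for (const p of topic.partitions) {
      if (!byBroker.has(p.leader)) byBroker.set(p.leader, []);
      byBroker.get(p.leader).push({
        name: `${topic.name}-${p.partitionId}`,
        attributes: {
          replicas: p.replicas.join(','),
          // Under-replicated: fewer in-sync replicas than assigned replicas
          underReplicated: p.isr.length < p.replicas.length,
        },
      });
    }
  }

  // Sort brokers by id so the tree renders in a stable order
  const brokerIds = [...byBroker.keys()].sort((a, b) => a - b);
  return {
    name: clusterName,
    children: brokerIds.map((id) => ({
      name: `broker ${id}`,
      children: byBroker.get(id),
    })),
  };
}
```

With a connected KafkaJS admin client, something like `buildClusterTree(await admin.fetchTopicMetadata({ topics: await admin.listTopics() }))` would feed it. A partition that currently has no leader reports `leader: -1` in Kafka metadata and would appear under `broker -1`, which is a useful visual hint in itself.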

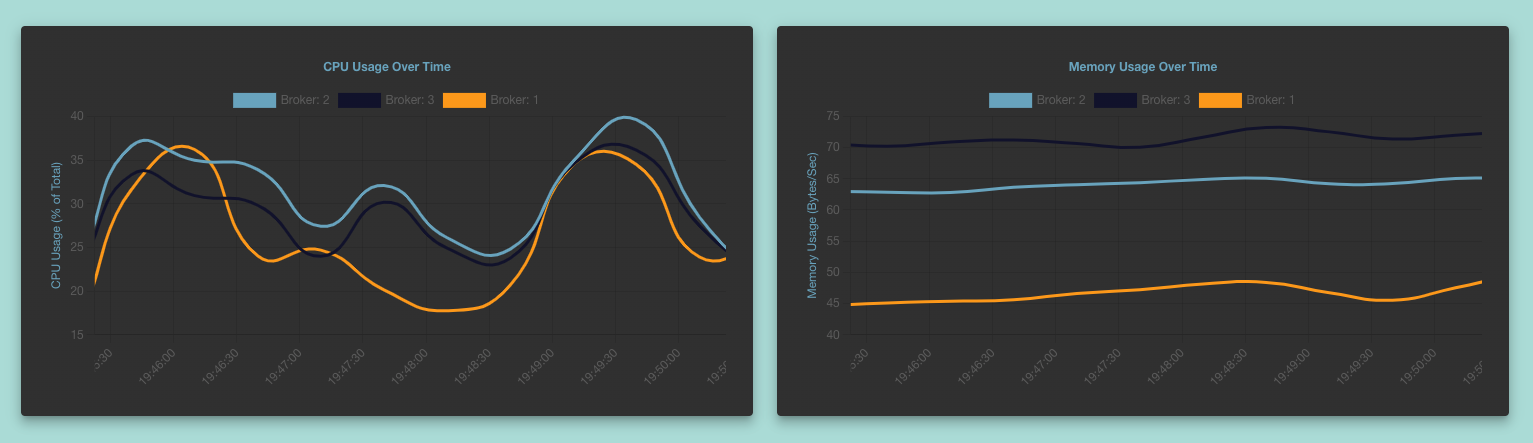

- Kafe provides real-time charts that track important metrics reflecting the health of your Kafka cluster. Metrics that Kafe tracks include, but are not limited to:

  - Overall CPU and memory usage of your cluster

  - Time it takes to produce messages

  - Time it takes for consumers to receive messages from the topics they subscribe to

- Kafe comes with an intuitive, easy-to-use GUI for managing your cluster. It renders a card for every active topic and lets you create new topics, delete existing topics, reassign the replicas of each topic partition, and clear all messages from a topic.
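Two pieces of logic sit behind the chart and topic-management views described above. They are sketched here as hypothetical helpers, not Kafe's code. `toChartSeries` converts a Prometheus `query_range` response, whose matrix samples are `[unixSeconds, "value"]` pairs, into `{ x, y }` points with millisecond timestamps ready for a time-series chart. `planReassignment` builds a round-robin replica layout; its output shape is assumed to match the argument of KafkaJS's `admin.alterPartitionReassignments()` (KafkaJS 2.x), so check it against the KafkaJS admin docs before relying on it.

```javascript
// Hypothetical dashboard helpers (not Kafe's implementation).

// Converts the parsed JSON of a Prometheus range query (GET /api/v1/query_range)
// into one chart series per time series, labelled by `labelKey`.
function toChartSeries(body, labelKey = 'instance') {
  if (body.status !== 'success' || body.data.resultType !== 'matrix') {
    throw new Error('expected a successful matrix result from Prometheus');
  }
  return body.data.result.map((series) => ({
    label: series.metric[labelKey] || 'unknown',
    // Prometheus timestamps are Unix seconds and values are strings
    points: series.values.map(([ts, value]) => ({ x: ts * 1000, y: Number(value) })),
  }));
}

// Round-robin replica placement: partition p starts at broker index p and wraps around,
// so replicas (including the first, preferred-leader replica) are spread across brokers.
// Output shape is assumed to match the argument of KafkaJS admin.alterPartitionReassignments().
function planReassignment(topic, partitionCount, brokerIds, replicationFactor) {
  if (replicationFactor < 1 || replicationFactor > brokerIds.length) {
    throw new RangeError('replication factor must be between 1 and the number of brokers');
  }
  const partitionAssignment = [];
  for (let partition = 0; partition < partitionCount; partition++) {
    const replicas = [];
    for (let r = 0; r < replicationFactor; r++) {
      replicas.push(brokerIds[(partition + r) % brokerIds.length]);
    }
    partitionAssignment.push({ partition, replicas });
  }
  return { topics: [{ topic, partitionAssignment }] };
}
```

For a three-partition topic on brokers 1, 2, and 3 with replication factor 2, `planReassignment('orders', 3, [1, 2, 3], 2)` places the replicas as `[1, 2]`, `[2, 3]`, and `[3, 1]`. Starting each partition at a different broker also rotates the first replica, which Kafka treats as the preferred leader, so leadership is spread out instead of piling onto one broker.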


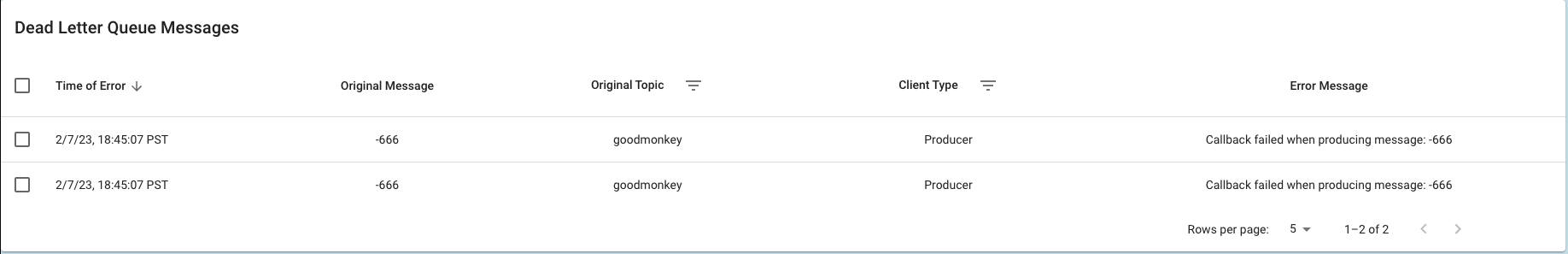

KafkaJS lacks native support for processing failed messages, so we created Kafe-DLQ, a lightweight npm package that handles failed messages within a Kafka cluster and forwards them to a custom 'DeadLetterQueue' topic. Kafe then creates a consumer that subscribes to 'DeadLetterQueue' and displays a dynamic table with information on every message that has failed since the cluster started. You can read more about Kafe-DLQ here. If you want to use Kafe's DLQ features, be sure to do the following:

- In your Kafka producer application, run:

  `npm install kafe-dlq`

- Declare your Kafka cluster configuration. This works exactly as it does with KafkaJS:

      const { KafeDLQClient } = require('kafe-dlq');
      const { Kafka } = require('kafkajs');

      // KAFKA_BROKERS is a comma-separated list, e.g. "localhost:9091,localhost:9092,localhost:9093"
      const kafka = new Kafka({
        clientId: 'dlq-companion',
        brokers: process.env.KAFKA_BROKERS.split(','),
      });

- Create a custom callback to handle specific failure edge cases, initialize a Kafe-DLQ client, and instantiate a producer. You can read more about the implementation in the Kafe-DLQ README.

      // Validation callback: in this example, only messages that parse to a positive integer pass,
      // so a message such as '-666' is treated as failed
      const callback = (message) => parseInt(message, 10) > 0;

      // Instantiate a DLQ client by passing in the KafkaJS client and the callback function
      const client = new KafeDLQClient(kafka, callback);
      const testProducer = client.producer();

- You are ready to start producing messages to your Kafka cluster as usual, except that any messages that fail are now forwarded to the 'DeadLetterQueue' topic, which Kafe-DLQ creates automatically! Kafe already has a consumer subscribed to this topic, but in a standalone application you would need to spin up the consumer yourself.

      testProducer.connect()
        // Every message sent to 'good' passes the callback
        .then(() => testProducer.send({
          topic: 'good',
          messages: [
            { key: '1', value: '1' },
            { key: '2', value: '2' },
            { key: '3', value: '3' },
          ],
        }))
        // The two '-666' messages fail the callback and are forwarded to 'DeadLetterQueue'
        .then(() => testProducer.send({
          topic: 'bad',
          messages: [
            { key: '1', value: '-666' },
            { key: '2', value: '-666' },
            { key: '3', value: '3' },
          ],
        }))
        .catch((err) => console.error(err));
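The Kafe-DLQ flow described above can be illustrated with two hypothetical functions. This is a sketch of the dead-letter-queue pattern, not Kafe-DLQ's implementation. `routeBatch` splits a `send()` payload with a validation callback and tags failed messages with an assumed `originalTopic` header before routing them to `'DeadLetterQueue'`; passing `message.value` to the callback is our reading of the example above, where `parseInt` is applied to the callback's argument. `startDlqConsumer` is the kind of standalone consumer the previous step mentions, written against the KafkaJS consumer API (`consumer()`, `connect()`, `subscribe()`, and `run()` with `eachMessage`); the `dlq-viewer` group id is a placeholder.

```javascript
// Illustration of the dead-letter-queue pattern (not Kafe-DLQ's actual implementation).
const DLQ_TOPIC = 'DeadLetterQueue';

// Splits one send() payload into a payload for the original topic and one for the DLQ.
// isValid receives each message's value (an assumption based on the example callback).
function routeBatch({ topic, messages }, isValid) {
  const good = [];
  const failed = [];
  for (const m of messages) {
    if (isValid(m.value)) {
      good.push(m);
    } else {
      // Record where the message was headed so a DLQ table can show it
      failed.push({ ...m, headers: { ...m.headers, originalTopic: topic } });
    }
  }
  const payloads = [];
  if (good.length) payloads.push({ topic, messages: good });
  if (failed.length) payloads.push({ topic: DLQ_TOPIC, messages: failed });
  return payloads;
}

// Minimal standalone DLQ consumer. `kafka` is a KafkaJS client;
// `onRow` receives one plain object per failed message.
async function startDlqConsumer(kafka, onRow, groupId = 'dlq-viewer') {
  const consumer = kafka.consumer({ groupId });
  await consumer.connect();
  await consumer.subscribe({ topic: DLQ_TOPIC, fromBeginning: true });
  await consumer.run({
    eachMessage: async ({ partition, message }) => {
      // KafkaJS delivers keys, values, and header values as Buffers
      const header = message.headers && message.headers.originalTopic;
      onRow({
        partition,
        offset: message.offset,
        key: message.key ? message.key.toString() : null,
        value: message.value ? message.value.toString() : null,
        originalTopic: header ? header.toString() : null,
        // message.timestamp is a string of epoch milliseconds
        timestamp: new Date(Number(message.timestamp)).toISOString(),
      });
    },
  });
  return consumer; // call consumer.disconnect() when finished
}
```

With the `kafka` client declared earlier, `startDlqConsumer(kafka, (row) => console.table([row]))` would print each failed message as it arrives.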

There is an example docker-compose file for spinning up a Prometheus server along with a Kafka cluster: `docker-kafka-prom.yml`.

If you just want to spin up a Prometheus server and a Kafka cluster:

- We already have a Prometheus config set up, so you don't need to worry about it!

- Be sure to set the `KAFKA_BROKERS` environment variable to the broker addresses (host:port) you plan to run your cluster on, separated by commas. The value in our `.env.template` file points to three brokers at `localhost:9091`, `localhost:9092`, and `localhost:9093`.

- Run the following command:

  `docker-compose -f docker-compose-kafka-prom.yml up -d`
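Because `docker-compose ... up -d` returns once the containers are started, not once the brokers are ready, a script may need to wait before connecting. A minimal sketch with hypothetical helper names: `parseBrokers` validates a `KAFKA_BROKERS` value such as the `.env.template` default, and `waitForBrokers` polls each `host:port` with a plain TCP connection from Node's built-in `net` module. An open port is only a rough signal; Docker's port publishing may accept connections before the broker inside the container is listening, so treat this as a first check rather than proof of readiness.

```javascript
const net = require('net');

// Hypothetical helpers for waiting on the docker-compose cluster (not part of Kafe).

// Parses a KAFKA_BROKERS value such as "localhost:9091,localhost:9092,localhost:9093".
// Bracketed IPv6 literals are not handled in this sketch.
function parseBrokers(value) {
  if (!value) throw new Error('KAFKA_BROKERS is not set');
  return value
    .split(',')
    .map((entry) => entry.trim())
    .filter((entry) => entry.length > 0)
    .map((entry) => {
      const idx = entry.lastIndexOf(':');
      const port = Number(entry.slice(idx + 1));
      if (idx <= 0 || !Number.isInteger(port) || port < 1 || port > 65535) {
        throw new Error(`invalid broker address: "${entry}"`);
      }
      return { host: entry.slice(0, idx), port };
    });
}

// Resolves true if a TCP connection to host:port opens within timeoutMs, false otherwise.
function isPortOpen(host, port, timeoutMs = 1000) {
  return new Promise((resolve) => {
    const socket = net.createConnection({ host, port });
    let timer = null;
    const finish = (open) => {
      clearTimeout(timer);
      socket.destroy();
      resolve(open);
    };
    timer = setTimeout(() => finish(false), timeoutMs);
    socket.once('connect', () => finish(true));
    socket.on('error', () => finish(false));
  });
}

// Polls every broker until all accept connections, or gives up after deadlineMs.
async function waitForBrokers(brokers, { deadlineMs = 60000, intervalMs = 2000 } = {}) {
  const start = Date.now();
  for (;;) {
    const results = await Promise.all(brokers.map((b) => isPortOpen(b.host, b.port)));
    if (results.every(Boolean)) return true;
    if (Date.now() - start >= deadlineMs) return false;
    await new Promise((resolve) => setTimeout(resolve, intervalMs));
  }
}
```

For example: `waitForBrokers(parseBrokers(process.env.KAFKA_BROKERS)).then((ready) => console.log(ready ? 'brokers reachable' : 'timed out'))`.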

Kafe launched in February 2023, and we want to see it grow and evolve through user feedback and continuous iteration. Here are some features we're working on bringing to Kafe in the near future:

- Additional filtering options for topics, including filtering data by time

- More flexibility for cluster manipulation, such as changing a topic's replication factor, and the option to automatically reprocess failed messages in a topic

- Additional authentication support for Kafka clusters

- Consumer metrics to monitor consumer performance and make improvements

- Additional frontend testing, such as sending messages to a topic and measuring the consumer's response time

If you don't see a feature that you're looking for listed above, find any bugs, or have any other suggestions, please feel free to open an issue and our team will work with you to get it implemented!

Also, if you create a custom implementation of Kafe, we'd love to see how you're using it!

- Oliver Zhang | GitHub | LinkedIn

- Yirou Chen | GitHub | LinkedIn

- Jacob Cole | GitHub | LinkedIn

- Caro Gomez | GitHub | LinkedIn

- Kpange Kaitibi | GitHub | LinkedIn

This product is licensed under the MIT License without restriction.