| description | cover | coverY |
|---|---|---|
| How to write compositional language model programs | | 0 |

You’ll learn how to:

- Amplify language models like GPT-3 through recursive question-answering and debate

- Reason about long texts by combining search and generation

- Run decompositions quickly by parallelizing language model calls

- Build human-in-the-loop agents

- Use verification of answers and reasoning steps to improve responses

- And more!

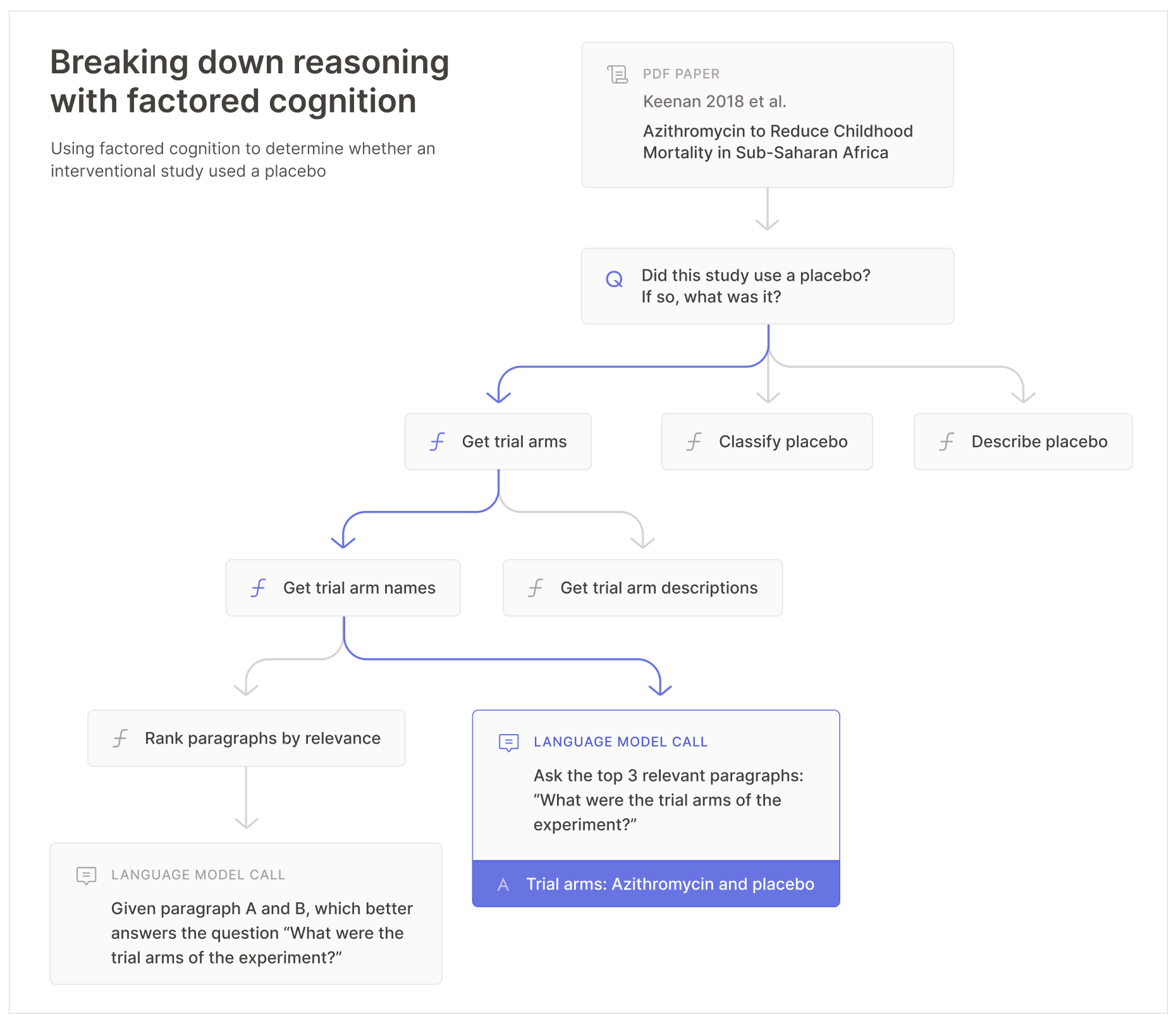

Example of a decomposition for reasoning about papers.

The book focuses on techniques that are likely to remain relevant as language models improve.
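To give a flavor of what a compositional language model program can look like, here is a minimal sketch of recursive question-answering with parallel subcalls. It is an illustration under stated assumptions, not code from the book: `fake_model`, `get_subquestions`, and `answer` are hypothetical names, and the model call is a stub that only echoes its prompt so the example runs without any API access.

```python
import asyncio


async def fake_model(prompt: str) -> str:
    """Hypothetical stand-in for a language model call; it only echoes the prompt."""
    await asyncio.sleep(0)  # yield control, as a real network call would
    return f"[answer to: {prompt}]"


async def get_subquestions(question: str) -> list[str]:
    """Hypothetical decomposition step; a real program might ask a model for these."""
    return [
        f"What background is needed for '{question}'?",
        f"What evidence bears on '{question}'?",
    ]


async def answer(question: str, depth: int = 1) -> str:
    """Answer a question by first answering its subquestions, up to `depth` levels."""
    if depth == 0:
        return await fake_model(question)
    subs = await get_subquestions(question)
    # Subquestions are independent of each other, so run them concurrently.
    sub_answers = await asyncio.gather(*(answer(q, depth - 1) for q in subs))
    context = "\n".join(f"Q: {q}\nA: {a}" for q, a in zip(subs, sub_answers))
    return await fake_model(f"{context}\nQ: {question}")


result = asyncio.run(answer("Does the intervention work?"))
print(result)
```

Swapping the stub for a real model call would leave the structure unchanged: the recursion provides the decomposition, and `asyncio.gather` lets sibling subquestions run at the same time.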

How to cite this book

Please cite this book as:

{% code overflow="wrap" %}

A. Stuhlmüller and J. Reppert and L. Stebbing (2022). Factored Cognition Primer. Retrieved December 6, 2022 from https://primer.ought.org.

{% endcode %}

BibTeX:

@misc{primer2022,
  author = {Stuhlmüller, Andreas and Reppert, Justin and Stebbing, Luke},
  title = {Factored Cognition Primer},
  year = {2022},
  howpublished = {\url{https://primer.ought.org}},
  urldate = {2022-12-06}
}