Export Prometheus metrics from arbitrary unstructured log data.

Grok is a tool to parse crappy unstructured log data into something structured and queryable. Grok is heavily used in Logstash to provide log data as input for Elasticsearch.

Grok ships with about 120 predefined patterns for syslog logs, Apache and other web server logs, MySQL logs, etc. It is easy to extend Grok with custom patterns.
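To make the idea of named, composable patterns concrete, here is a minimal Python sketch of how a `%{NAME:field}` expression can be expanded into a regular expression with named groups. It is not how Grok or grok_exporter is implemented (those use the Oniguruma library). The pattern definitions are simplified stand-ins, and `HOSTPORT` is a hypothetical custom pattern invented for this illustration.

```python
import re

# Simplified stand-ins for a few Grok patterns; the definitions that ship
# with Grok are more thorough than these approximations.
PATTERNS = {
    "WORD": r"\w+",
    "INT": r"[+-]?\d+",
    "IP": r"\d{1,3}(?:\.\d{1,3}){3}",
    # Hypothetical custom pattern, composed from the patterns above.
    "HOSTPORT": r"%{IP:host}:%{INT:port}",
}

# Matches %{NAME} and %{NAME:field} references.
GROK_REF = re.compile(r"%\{(\w+)(?::(\w+))?\}")


def expand(expression):
    """Recursively replace pattern references with regex source text."""
    def substitute(match):
        name, field = match.group(1), match.group(2)
        body = expand(PATTERNS[name])
        # A field name becomes a named group; otherwise just group the body.
        return f"(?P<{field}>{body})" if field else f"(?:{body})"
    return GROK_REF.sub(substitute, expression)


def grok(expression, line):
    """Return the named fields captured from line, or None if no match."""
    found = re.search(expand(expression), line)
    return found.groupdict() if found else None


fields = grok("%{WORD:action} from %{HOSTPORT}", "rejected from 10.0.0.7:25")
print(fields)
```

Adding a custom pattern in this sketch means adding one entry to `PATTERNS`, which mirrors why extending Grok is cheap: new patterns are built from existing ones by name.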

The grok_exporter aims at porting Grok from the ELK stack to Prometheus monitoring. The goal is to use Grok patterns for extracting Prometheus metrics from arbitrary log files.

Download grok_exporter-$ARCH.zip for your operating system from the releases page, extract the archive, cd grok_exporter-$ARCH, then run

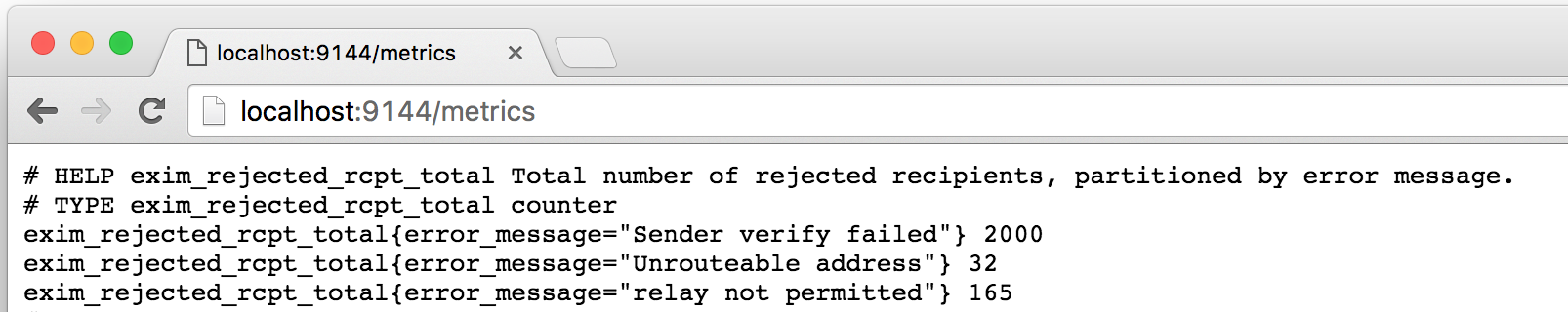

./grok_exporter -config ./example/config.ymlThe example log file exim-rejected-RCPT-examples.log contains log messages from the Exim mail server. The configuration in config.yml counts the total number of rejected recipients, partitioned by error message.

The exporter provides the metrics on http://localhost:9144/metrics:
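The endpoint serves metrics in the Prometheus text format, one sample per line. As a rough illustration, the sketch below parses such text into names, labels, and values. The sample text, including the metric name `example_rejected_total` and its label values, is made up for this example and is not real grok_exporter output. The parser is deliberately minimal: it skips lines carrying a timestamp and does not unescape label values.

```python
import re

# Hypothetical sample of a /metrics response; the metric name and label
# values are invented for illustration.
SAMPLE = '''\
# HELP example_rejected_total Hypothetical counter of rejected recipients.
# TYPE example_rejected_total counter
example_rejected_total{error_message="relay not permitted"} 3
example_rejected_total{error_message="unknown user"} 7
'''

# name, optional {labels}, then a single value at the end of the line.
SAMPLE_LINE = re.compile(
    r'^(?P<name>[a-zA-Z_:][a-zA-Z0-9_:]*)'
    r'(?:\{(?P<labels>.*)\})?'
    r'\s+(?P<value>\S+)$'
)
LABEL = re.compile(r'(\w+)="((?:[^"\\]|\\.)*)"')


def parse_metrics(text):
    """Yield (name, labels, value) per sample line, skipping comments."""
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#"):
            continue
        match = SAMPLE_LINE.match(line)
        if match is None:
            continue  # not handled by this sketch (e.g. timestamped lines)
        labels = dict(LABEL.findall(match.group("labels") or ""))
        yield match.group("name"), labels, float(match.group("value"))


samples = list(parse_metrics(SAMPLE))
for name, labels, value in samples:
    print(name, labels, value)
```

To try it against a running exporter, the text could be fetched with `urllib.request.urlopen("http://localhost:9144/metrics")` and passed to `parse_metrics` after decoding.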

Example configuration:

    global:
      config_version: 3
    input:
      type: file
      path: ./example/example.log
      readall: true
    imports:
    - type: grok_patterns
      dir: ./logstash-patterns-core/patterns
    metrics:
    - type: counter
      name: grok_example_lines_total
      help: Counter metric example with labels.
      match: '%{DATE} %{TIME} %{USER:user} %{NUMBER}'
      labels:
        user: '{{.user}}'
        logfile: '{{base .logfile}}'
    server:
      port: 9144

CONFIG.md describes the grok_exporter configuration file and shows how to define Grok patterns, Prometheus metrics, and labels. It also details how to configure file, stdin, and webhook inputs.
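The following Python sketch mimics what the counter in the example configuration does conceptually: every line matching the pattern increments a counter, partitioned by the `user` and `logfile` labels. It is not grok_exporter code. The regular expression is a simplified stand-in for `%{DATE} %{TIME} %{USER:user} %{NUMBER}`, the log lines are hypothetical, and `os.path.basename` is used on the assumption that the `base` template function reduces a path to its file name.

```python
import os
import re
from collections import Counter

# Simplified stand-in for '%{DATE} %{TIME} %{USER:user} %{NUMBER}'.
# The real Grok patterns accept more formats than this approximation.
MATCH = re.compile(
    r"\d{2}\.\d{2}\.\d{4} "          # DATE (only dd.mm.yyyy here)
    r"\d{2}:\d{2}:\d{2} "            # TIME
    r"(?P<user>[a-zA-Z0-9._-]+) "    # USER:user
    r"[+-]?\d+(?:\.\d+)?"            # NUMBER
)


def count_lines(lines, logfile):
    """Count matching lines per (user, logfile) label combination."""
    counts = Counter()
    for line in lines:
        found = MATCH.search(line)
        if found is None:
            continue  # lines that do not match are not counted
        labels = (found.group("user"), os.path.basename(logfile))
        counts[labels] += 1
    return counts


# Hypothetical log lines, written for this example.
LINES = [
    "30.07.2016 14:37:03 alice 1.5",
    "30.07.2016 14:37:33 alice 2.5",
    "30.07.2016 14:43:02 bob 2.5",
    "this line does not match",
]

counts = count_lines(LINES, "./example/example.log")
for (user, logfile), value in sorted(counts.items()):
    print(f'grok_example_lines_total{{logfile="{logfile}",user="{user}"}} {value}')
```

With these four lines, the sketch counts two lines for `alice` and one for `bob`; the non-matching line contributes nothing.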

In order to compile grok_exporter from source, you need

- Go installed and $GOPATH set.

- gcc installed for cgo. On Ubuntu, use apt-get install build-essential.

- Header files for the Oniguruma regular expression library, see below.
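Before building, the first two prerequisites can be checked with a few lines of Python. This is only a convenience sketch and not part of grok_exporter. The function name `missing_prerequisites` is hypothetical, and the check does not cover the Oniguruma header files, whose location depends on how the library was installed.

```python
import os
import shutil


def missing_prerequisites(which=shutil.which, env=os.environ):
    """Return a list of problems; an empty list means the checks passed."""
    problems = []
    # Go and gcc (needed for cgo) must be reachable through PATH.
    for tool in ("go", "gcc"):
        if which(tool) is None:
            problems.append(f"{tool} not found on PATH")
    # The build instructions expect GOPATH to be set.
    if not env.get("GOPATH"):
        problems.append("GOPATH is not set")
    return problems


if __name__ == "__main__":
    for problem in missing_prerequisites():
        print(problem)
```

The `which` and `env` parameters exist only so the function can be exercised without touching the real environment.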

Installing the Oniguruma library on OS X

    brew install oniguruma

Installing the Oniguruma library on Ubuntu Linux

    sudo apt-get install libonig-dev

Installing the Oniguruma library from source

    curl -sLO https://github.com/kkos/oniguruma/releases/download/v6.9.5_rev1/onig-6.9.5-rev1.tar.gz
    tar xfz onig-6.9.5-rev1.tar.gz
    cd onig-6.9.5
    ./configure
    make
    make install

Installing grok_exporter

    git clone https://github.com/fstab/grok_exporter
    cd grok_exporter
    git submodule update --init --recursive
    go install .

The resulting grok_exporter binary will be dynamically linked to the Oniguruma library, i.e. it needs the Oniguruma library to run. The releases are statically linked with Oniguruma, i.e. the releases don't require Oniguruma as a run-time dependency. The releases are built with hack/release.sh.

Note: Go 1.13 for Mac OS has a bug affecting the file input, see golang/go#35767. Go 1.13.5 is also affected. It is recommended to use Go 1.12 on Mac OS until the bug is fixed.

User documentation is included in the GitHub repository:

- CONFIG.md: Specification of the config file.

- BUILTIN.md: Definition of metrics provided out-of-the-box.

Developer notes are available on the GitHub Wiki pages.

External documentation:

- Extracting Prometheus Metrics from Application Logs - https://labs.consol.de/...

- Counting Errors with Prometheus - https://labs.consol.de/...

- [Video] Lightning talk on grok_exporter - https://www.youtube.com/...

- For feature requests, bug reports, etc.: Please open a GitHub issue.

- For bug fixes, contributions, etc.: Create a pull request.

- Questions? Contact me at fabian@fstab.de.

Google's mtail goes in a similar direction. It uses its own pattern definition language, so it will not work out-of-the-box with existing Grok patterns. However, mtail's RE2 regular expressions are probably more CPU efficient than Grok's Oniguruma patterns. mtail reads logfiles using the fsnotify library, which might be an obstacle on operating systems other than Linux.

Licensed under the Apache License, Version 2.0. You may obtain a copy of the License at http://www.apache.org/licenses/LICENSE-2.0.