🖋 Authors: Siru Ouyang, Zhuosheng Zhang, Bing Yan, Xuan Liu, Yejin Choi, Jiawei Han, Lianhui Qin

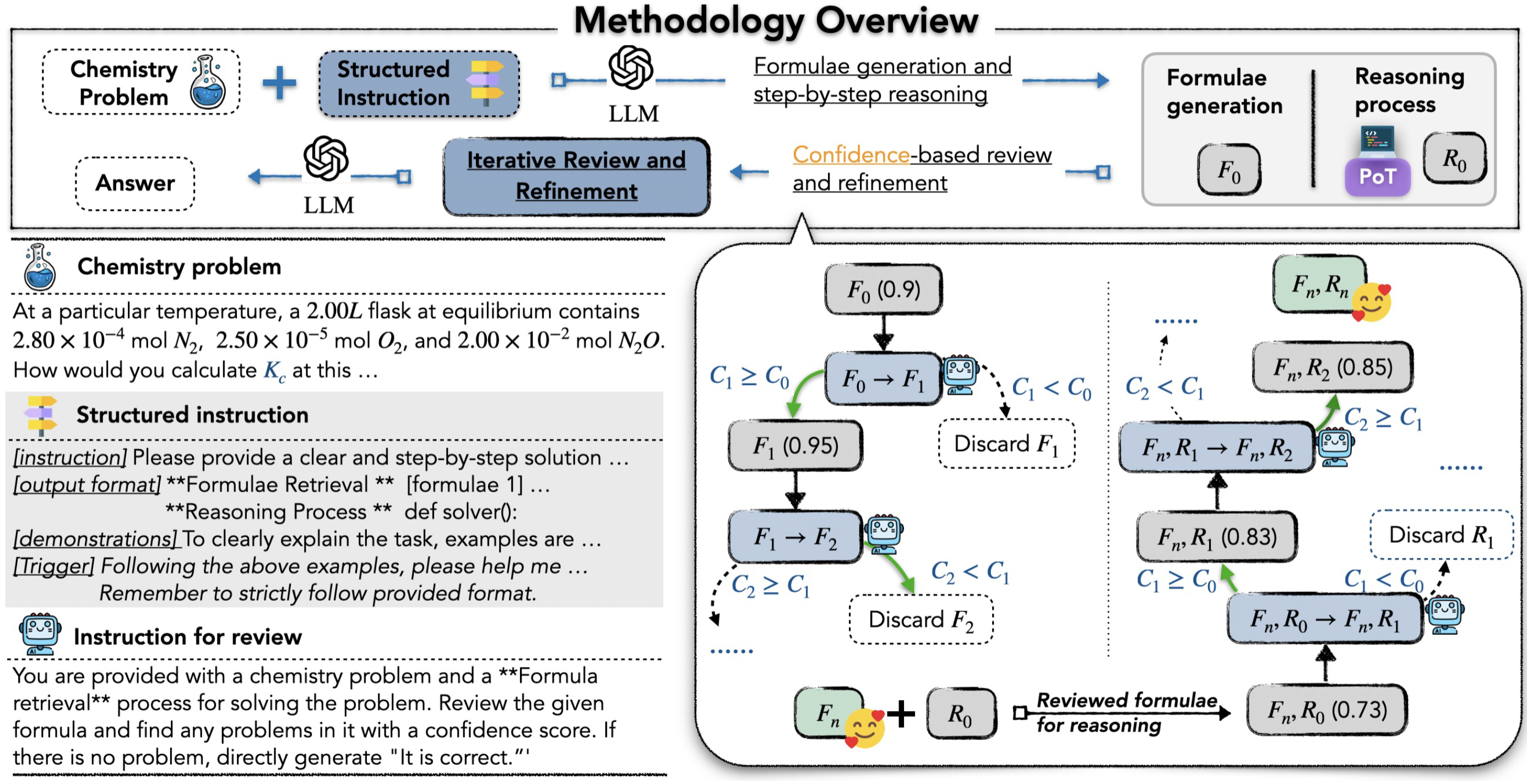

Large Language Models (LLMs) excel in diverse areas, yet struggle with complex scientific reasoning, especially in chemistry. The errors often stem not from a lack of domain knowledge within the LLMs, but from the absence of an effective reasoning structure that guides the LLMs to elicit the right knowledge, incorporate that knowledge in step-by-step reasoning, and iteratively refine the results.

We introduce StructChem, a simple yet effective prompting strategy that offers the desired guidance and substantially boosts the LLMs' chemical reasoning capability.

All the prompts and instructions used in our experiments are in the prompts folder.

instruction.txt gives the overall instruction for generation, which splits the generation process into two stages: formulae collection and step-by-step reasoning.

feedback_formulae.txt and feedback_reasoning.txt provide guidance for iterative review and refinement of the formulae collection and reasoning stages, respectively.

verified_instruction.txt produces the final answer by taking the verified formulae and reasoning process into consideration.
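The way these four prompt files fit together can be sketched roughly as follows. This is a minimal illustration, not the code in run.py: the `llm` callable, the helper names (`load_prompts`, `refine`, `structured_answer`), the way prompts and drafts are concatenated, and the "stop when the draft no longer changes" rule are all assumptions made for the example. For simplicity it also passes the whole draft through each review stage instead of splitting formulae from reasoning.

```python
from pathlib import Path
from typing import Callable, Dict

# File names as listed in this README; the dictionary keys are illustrative.
PROMPT_FILES = {
    "instruction": "instruction.txt",
    "feedback_formulae": "feedback_formulae.txt",
    "feedback_reasoning": "feedback_reasoning.txt",
    "verified_instruction": "verified_instruction.txt",
}


def load_prompts(folder: str = "prompts") -> Dict[str, str]:
    """Read the four prompt files from `folder` into a dictionary."""
    return {
        key: (Path(folder) / name).read_text(encoding="utf-8")
        for key, name in PROMPT_FILES.items()
    }


def refine(draft: str, feedback_prompt: str, problem: str,
           llm: Callable[[str], str], max_rounds: int) -> str:
    """Review `draft` repeatedly; stop early once it no longer changes.

    The stopping rule is an assumption for this sketch.
    """
    for _ in range(max_rounds):
        revised = llm(f"{feedback_prompt}\n\nProblem: {problem}\n\nDraft:\n{draft}")
        if revised.strip() == draft.strip():
            break
        draft = revised
    return draft


def structured_answer(problem: str, prompts: Dict[str, str],
                      llm: Callable[[str], str], max_rounds: int = 2) -> str:
    """Generate a draft, review formulae and reasoning, then conclude."""
    # Stage 1: initial generation (formulae collection plus reasoning).
    draft = llm(f"{prompts['instruction']}\n\nProblem: {problem}")
    # Stage 2: iterative review, first of the formulae, then of the reasoning.
    for key in ("feedback_formulae", "feedback_reasoning"):
        draft = refine(draft, prompts[key], problem, llm, max_rounds)
    # Stage 3: final answer conditioned on the reviewed draft.
    return llm(
        f"{prompts['verified_instruction']}\n\nProblem: {problem}"
        f"\n\nVerified draft:\n{draft}"
    )
```

Here `llm` stands for any function that maps a prompt string to a completion string, for example a thin wrapper around an API client; keeping it as a plain callable makes the control flow easy to test with a stub.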

To run StructChem on the SciBench datasets, fill in your OpenAI API key and run:

    python run.py

This will automatically run all four datasets.

For evaluation, configure the output files in get_accuracy.py, then run:

    python get_accuracy.py

The data generation process can be found in data_generation:

    python gpt_generation.py

The data are further post-processed for fine-tuning:

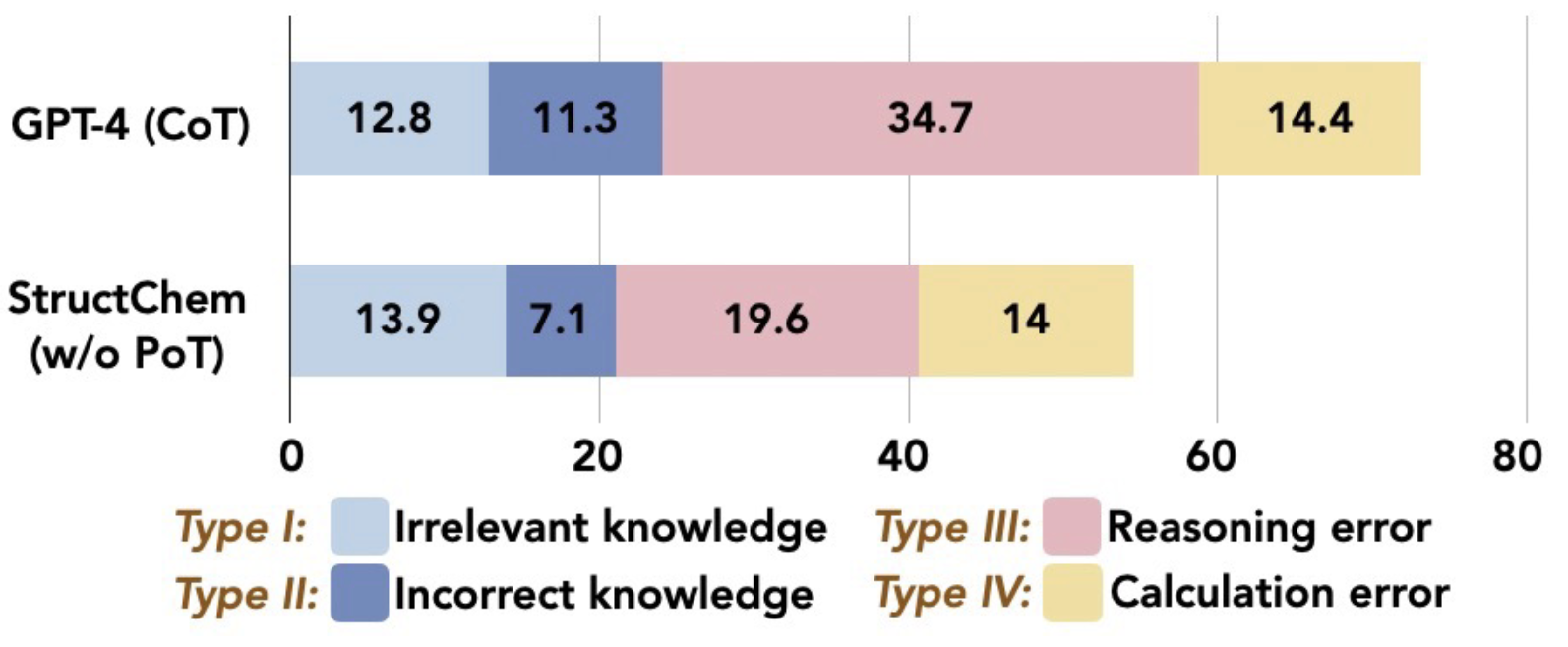

    python data_cleaning.py
    bash finetune.sh

We also conducted human annotation of all error cases generated by StructChem, sorting them into four error categories. These annotations offer additional insight into the performance of StructChem and shed light on potential future directions. For detailed annotation results of each sample, please visit human_annotation.

If you find this work useful, please kindly cite our paper:

@misc{ouyang2024structured,
      title={Structured Chemistry Reasoning with Large Language Models},
      author={Siru Ouyang and Zhuosheng Zhang and Bing Yan and Xuan Liu and Yejin Choi and Jiawei Han and Lianhui Qin},
      year={2024},
      eprint={2311.09656},
      archivePrefix={arXiv},
      primaryClass={cs.CL}
}