nanograd

My own version of micrograd, a small scalar-valued autograd engine.
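As a rough illustration of what a micrograd-style engine does, here is a minimal scalar autograd node. The names `Value`, `_prev`, `_backward`, and `backward` follow micrograd's conventions and are assumptions here; nanograd's actual classes and methods may differ.

```python
import math


class Value:
    """A scalar node in a computation graph (micrograd-style sketch, hypothetical API)."""

    def __init__(self, data, _children=()):
        self.data = data
        self.grad = 0.0
        self._backward = lambda: None  # pushes self.grad down to the children
        self._prev = set(_children)

    def __add__(self, other):
        other = other if isinstance(other, Value) else Value(other)
        out = Value(self.data + other.data, (self, other))

        def _backward():
            # d(a + b)/da = 1, d(a + b)/db = 1
            self.grad += out.grad
            other.grad += out.grad

        out._backward = _backward
        return out

    def __mul__(self, other):
        other = other if isinstance(other, Value) else Value(other)
        out = Value(self.data * other.data, (self, other))

        def _backward():
            # d(a * b)/da = b, d(a * b)/db = a
            self.grad += other.data * out.grad
            other.grad += self.data * out.grad

        out._backward = _backward
        return out

    def tanh(self):
        t = math.tanh(self.data)
        out = Value(t, (self,))

        def _backward():
            # d tanh(x)/dx = 1 - tanh(x)^2
            self.grad += (1 - t * t) * out.grad

        out._backward = _backward
        return out

    def backward(self):
        # Topologically sort the graph, then run each node's _backward in reverse
        # order so a node only runs after every node that uses it.
        topo, visited = [], set()

        def build(v):
            if v not in visited:
                visited.add(v)
                for child in v._prev:
                    build(child)
                topo.append(v)

        build(self)
        self.grad = 1.0
        for v in reversed(topo):
            v._backward()


# Usage: c = a*b + a, so dc/da = b + 1 and dc/db = a.
a, b = Value(2.0), Value(-3.0)
c = a * b + a
c.backward()
print(a.grad, b.grad)  # -2.0 2.0
```

Gradients are accumulated with `+=` so that a node used several times (such as `a` above) receives the sum of all its contributions.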

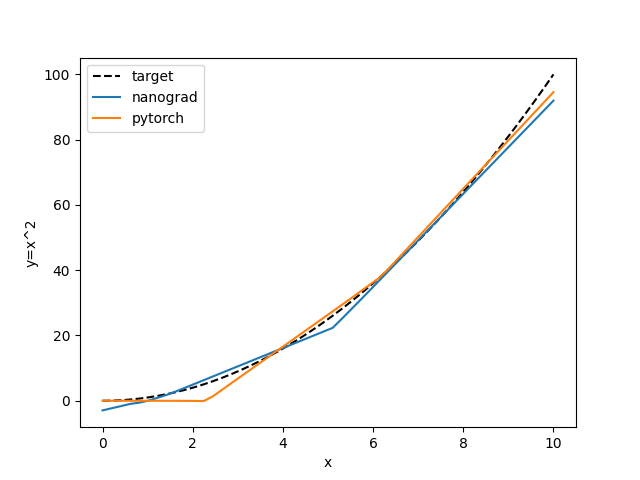

See `benchmark.py` for a comparison with a PyTorch model of the same size (layer sizes 1, 10, 10, 1), using the SGD optimizer and MSE as the loss function. In the example I try to approximate

| Loss | Predictions |
|---|---|
| | |
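A benchmark of this kind can be sketched with a self-contained scalar engine: a 1-10-10-1 MLP with tanh hidden layers and a linear output, trained with mean squared error and a plain full-batch gradient-descent update (the same update `torch.optim.SGD` performs with its default momentum of 0). Everything here is an assumption for illustration and not the contents of `benchmark.py`: the class names `Value`, `Neuron`, and `MLP`, the tanh activation, the learning rate, the 16 sample points, and the target `sin(x)`, which is hypothetical.

```python
import math
import random


class Value:
    # Minimal scalar autograd node (hypothetical; not nanograd's actual class).
    def __init__(self, data, _children=()):
        self.data, self.grad = data, 0.0
        self._backward, self._prev = (lambda: None), set(_children)

    def __add__(self, other):
        other = other if isinstance(other, Value) else Value(other)
        out = Value(self.data + other.data, (self, other))
        def _backward():
            self.grad += out.grad
            other.grad += out.grad
        out._backward = _backward
        return out

    def __mul__(self, other):
        other = other if isinstance(other, Value) else Value(other)
        out = Value(self.data * other.data, (self, other))
        def _backward():
            self.grad += other.data * out.grad
            other.grad += self.data * out.grad
        out._backward = _backward
        return out

    def tanh(self):
        t = math.tanh(self.data)
        out = Value(t, (self,))
        def _backward():
            self.grad += (1 - t * t) * out.grad
        out._backward = _backward
        return out

    def backward(self):
        # Reverse topological order: each node runs after every node that uses it.
        topo, seen = [], set()
        def build(v):
            if v not in seen:
                seen.add(v)
                for c in v._prev:
                    build(c)
                topo.append(v)
        build(self)
        self.grad = 1.0
        for v in reversed(topo):
            v._backward()


class Neuron:
    def __init__(self, n_in, linear=False):
        self.w = [Value(random.uniform(-1, 1)) for _ in range(n_in)]
        self.b, self.linear = Value(0.0), linear

    def __call__(self, x):
        act = sum((wi * xi for wi, xi in zip(self.w, x)), self.b)  # b + sum(w_i * x_i)
        return act if self.linear else act.tanh()


class MLP:
    def __init__(self, sizes):
        # tanh on hidden layers, linear output layer
        last = len(sizes) - 2
        self.layers = [[Neuron(n_in, linear=(i == last)) for _ in range(n_out)]
                       for i, (n_in, n_out) in enumerate(zip(sizes, sizes[1:]))]

    def __call__(self, x):
        for layer in self.layers:
            x = [neuron(x) for neuron in layer]
        return x[0]

    def parameters(self):
        return [p for layer in self.layers for nrn in layer for p in nrn.w + [nrn.b]]


def mse(model, xs, ys):
    total = Value(0.0)
    for x, y in zip(xs, ys):
        d = model([x]) + (-y)  # prediction - target
        total = total + d * d
    return total * (1.0 / len(xs))


random.seed(0)
xs = [-2 + 4 * i / 15 for i in range(16)]  # hypothetical inputs in [-2, 2]
ys = [math.sin(x) for x in xs]             # hypothetical target function
model = MLP([1, 10, 10, 1])
params = model.parameters()
lr = 0.02

first_loss = None
for step in range(100):
    loss = mse(model, xs, ys)
    for p in params:
        p.grad = 0.0            # clear gradients from the previous step
    loss.backward()
    for p in params:
        p.data -= lr * p.grad   # gradient-descent update
    if first_loss is None:
        first_loss = loss.data
print(f"{len(params)} parameters, loss {first_loss:.4f} -> {loss.data:.4f}")
```

Every weight, bias, and intermediate result in this sketch is its own Python object, which is what makes a scalar engine easy to read but slow to run.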

Nanograd training: 50.84 s, Torch training: 1.85 s.

Nanograd is considerably slower than PyTorch (about 27 times in this run) because its autograd operates at the scalar level rather than on tensors.
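To make the scalar-versus-tensor point concrete, the snippet counts graph nodes for the 1-10-10-1 network. It assumes a micrograd-style neuron that computes `b + w_1*x_1 + ... + w_n*x_n` one addition at a time and applies tanh on hidden layers only; nanograd's exact node count may differ.

```python
# Layer sizes from the benchmark: 1 -> 10 -> 10 -> 1.
sizes = [1, 10, 10, 1]
pairs = list(zip(sizes, sizes[1:]))

# Each layer has n_in * n_out weights plus n_out biases.
n_params = sum(n_in * n_out + n_out for n_in, n_out in pairs)

# Scalar engine (assumed micrograd-style): every multiply and every add is a separate
# graph node. Per sample and per layer that is n_in * n_out multiplies and
# n_in * n_out additions (starting from the bias), plus one tanh per hidden unit.
muls = sum(n_in * n_out for n_in, n_out in pairs)
adds = sum(n_in * n_out for n_in, n_out in pairs)
acts = sum(n_out for _, n_out in pairs[:-1])
nodes_per_sample = muls + adds + acts

# Tensor engine: one matmul and one bias add per layer, plus one activation per
# hidden layer, regardless of batch size.
tensor_ops_per_batch = 2 * len(pairs) + (len(pairs) - 1)

print(n_params, nodes_per_sample, tensor_ops_per_batch)  # 141 260 8
```

Under these assumptions a scalar engine builds about 260 Python-level nodes, each with its own backward closure, for every training sample, while a tensor library records a handful of vectorized operations per batch.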

Testing

`pytest nanograd`