Deployment automation for a two-tier web service.

The resulting service can be reached at http://www.castle.snagovsky.com

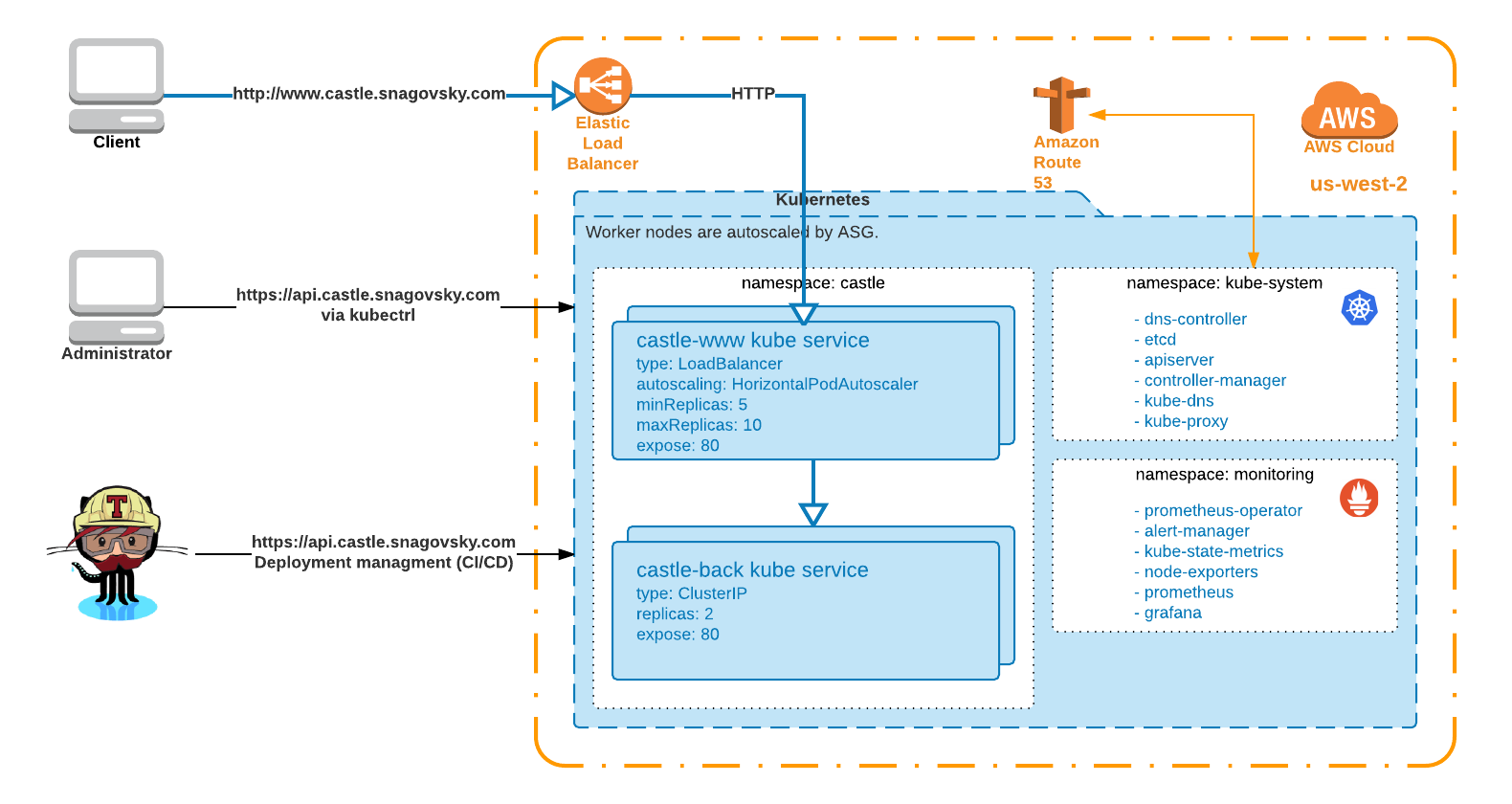

tl;dr: Cloud-native style. Deployment and the service lifecycle are managed via a CI/CD pipeline in GitHub/Travis CI, with rolling updates and validation by the existing tests. The service components are dockerized, and artifacts are stored on Docker Hub. The service is deployed to a Kubernetes cluster in AWS with Prometheus monitoring. All components are resilient to failure and set up for reactive autoscaling based on demand.

Reach out to me with any additional questions or to get more details on the project.

Contents:

To meet the set criteria, a Kubernetes cluster was set up in AWS (kops deployment) with autoscaling worker nodes and Prometheus. The applications have been dockerized.

- Route53-managed delegated subdomain for the project: castle.snagovsky.com

- Kubernetes cluster in AWS: kube API at https://api.castle.snagovsky.com, kube dashboard at https://kube.castle.snagovsky.com/ui

- Docker Hub repositories set up to store Docker image artifacts.

- GitHub and Travis CI projects set up.

Note: the cluster will be live for a week from Wed, Jun 21 2017. Feel free to ask for kube cluster credentials if you would like to get your hands on the setup.

The service design follows the microservice (contemporary SOA) design pattern. Each tier is a separate deployment, so each can have its own resilience and scalability model. The components interact over HTTP.

Each tier is fronted by a load balancer: an ELB plus kube-proxy for the www app, and kube-proxy alone for the backend app. Each load balancer spreads traffic across the replicas of its app, using simple health checks.

Consistency and repeatability of the service are achieved by describing it in Dockerfiles, Kubernetes manifests and CI/CD config files.

- The service endpoint's authoritative A record is managed by Route53 as an alias to the ELB used for first-tier app ingress.

- The service is deployed to a single Kubernetes cluster in the us-west-2 AWS region. The worker nodes are in a single AZ and are autoscaled with an ASG.

- The only supported protocol for the service is HTTP.

- Two endpoints are exposed: a) the service itself, http://www.castle.snagovsky.com; b) the Kubernetes API and dashboard, https://api.castle.snagovsky.com.

- The first tier (www|frontend) has limited throughput and is autoscaled on demand by a HorizontalPodAutoscaler with a minimum of 5 replicas, to allow at least 50 rps.

- The second tier isn't exposed publicly. It has high throughput and is deployed with 2 replicas for resiliency. With a maximum of 10 replicas on the first tier, the backend can't become a bottleneck: if one backend replica fails, the remaining replica can handle the maximum workload on its own while Kubernetes brings up a second healthy one.

- Basic metrics are collected by the Prometheus node-exporter and can be viewed via Grafana, deployed in the monitoring namespace, or via the default Prometheus UI. No alerting or custom metrics are set up. Prometheus and its components are not exposed publicly.

- Logs are written locally to non-persistent volumes. No aggregation or filtering/analytics is set up.

- Merges and pushes to the project git repository trigger a test job at Travis CI. The job runs basic tests and Docker builds: initially only `go fmt && go build` and `docker build`. NOTE: the resulting images are not uploaded to Docker Hub; this job only validates that the code compiles and the images build. See below for deployment job details.

- On merges (or pushes, if you dare) to the deploy branch (`master`), in addition to all tests from the job above, Docker images are uploaded to Docker Hub and the service is deployed with `kubectl` via a rolling update, without service interruption.

- Sensitive data is stored using Travis CI encryption keys.
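The scaling figures in the list above can be sanity-checked with a small sketch. It implements the core HorizontalPodAutoscaler rule from the Kubernetes documentation, `desiredReplicas = ceil(currentReplicas * currentMetric / targetMetric)`, clamped to the 5 to 10 replica range stated for the www tier. The per-replica throughput of 10 rps is an assumption inferred from "5 replicas allow at least 50 rps", and the CPU values in `main` are made-up inputs.

```go
package main

import (
	"fmt"
	"math"
)

const (
	minReplicas = 5  // HPA minimum for the www tier, from this README
	maxReplicas = 10 // HPA maximum for the www tier, from this README
	// perReplicaRPS is an assumption inferred from "5 replicas allow at least 50 rps".
	perReplicaRPS = 10
)

// desiredReplicas applies the core HPA rule, ceil(current * currentCPU / targetCPU),
// clamped to [minReplicas, maxReplicas]. The real controller also ignores changes
// within a tolerance (10% by default) and delays scale-downs; both are omitted here.
func desiredReplicas(current int, currentCPU, targetCPU float64) int {
	n := int(math.Ceil(float64(current) * currentCPU / targetCPU))
	if n < minReplicas {
		return minReplicas
	}
	if n > maxReplicas {
		return maxReplicas
	}
	return n
}

// peakFrontendRPS is the most traffic the www tier can forward at full scale;
// during a failover a single backend replica has to absorb all of it.
func peakFrontendRPS() int {
	return maxReplicas * perReplicaRPS
}

func main() {
	fmt.Println(desiredReplicas(5, 90, 50))  // ceil(5*90/50) = 9
	fmt.Println(desiredReplicas(8, 100, 50)) // ceil(16) = 16, clamped to 10
	fmt.Println(desiredReplicas(6, 20, 50))  // ceil(2.4) = 3, clamped to 5
	fmt.Println(peakFrontendRPS())           // 100
}
```

Under these assumptions the backend sizing target is roughly 100 rps per replica, which is what lets one surviving replica carry the full load.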

Future improvements:

- Use multiple AWS regions for resiliency and performance (geographic proximity), with Route53 latency-based routing for cross-region load balancing.

- Consider a serverless architecture for this service: a Kube cluster is overkill, and depending on usage patterns it might save significant amounts.

- Design and implement more tests for CI/CD: a) Go unit tests for each app; b) Docker image tests; c) functional testing, etc.

- Improve monitoring by tracking application-specific metrics, adding alerting, and setting up external monitoring for the publicly facing service.

- The HorizontalPodAutoscaler for the www app should use custom metrics to trigger scaling up and down; the current use of CPU utilization only demonstrates the concept. As an alternative, a custom kube controller could base scaling decisions on ELB CloudWatch metrics.

- Versioned CI/CD deployments based on Semantic Versioning git tags.

- Implement more service lifecycle actions for CI/CD: destroy the service, deploy to a different cluster, etc.

- Log aggregation and analysis.
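For the Semantic Versioning improvement listed above, one possible first step is a small helper that accepts only plain release tags and turns them into an image version. This is a sketch under stated assumptions: the `vMAJOR.MINOR.PATCH` tag convention and the idea of using the stripped version as the Docker image tag are hypothetical, and pre-release or build-metadata suffixes are deliberately rejected. `TRAVIS_TAG` is the variable Travis CI sets for tag-triggered builds.

```go
package main

import (
	"fmt"
	"os"
	"regexp"
)

// releaseTag matches plain release tags such as v1.4.2 (MAJOR.MINOR.PATCH without
// leading zeros). Pre-release and build-metadata suffixes are rejected in this sketch.
var releaseTag = regexp.MustCompile(`^v(0|[1-9][0-9]*)\.(0|[1-9][0-9]*)\.(0|[1-9][0-9]*)$`)

// imageVersion turns a git tag into a Docker image version, e.g. "v1.4.2" -> "1.4.2".
func imageVersion(gitTag string) (string, error) {
	m := releaseTag.FindStringSubmatch(gitTag)
	if m == nil {
		return "", fmt.Errorf("%q is not a vMAJOR.MINOR.PATCH release tag", gitTag)
	}
	return fmt.Sprintf("%s.%s.%s", m[1], m[2], m[3]), nil
}

func main() {
	// Travis CI sets TRAVIS_TAG for builds triggered by a tag push; it is empty otherwise.
	tag := os.Getenv("TRAVIS_TAG")
	if tag == "" {
		tag = "v1.4.2" // hypothetical fallback so the sketch runs locally
	}
	v, err := imageVersion(tag)
	if err != nil {
		fmt.Println("not a release tag, skipping versioned deploy:", err)
		return
	}
	fmt.Println("deploying image version", v)
}
```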


Repository contents:

```
├── .travis.yml             # Travis-CI configuration
├── LICENSE                 # License
├── README.md               # This README
├── back-deployment.yaml    # Backend kube deployment, service and autoscaling
├── backend
│   ├── Dockerfile          # Dockerfile for backend container
│   └── backend.go          # backend source
├── castle-namespace.yaml   # Castle namespace kube config
├── docs
│   └── arch_diagram.png    # Architectural diagram
├── www
│   ├── Dockerfile          # Dockerfile for www container
│   └── www.go              # www source
└── www-deployment.yaml     # www kube deployment and service
```