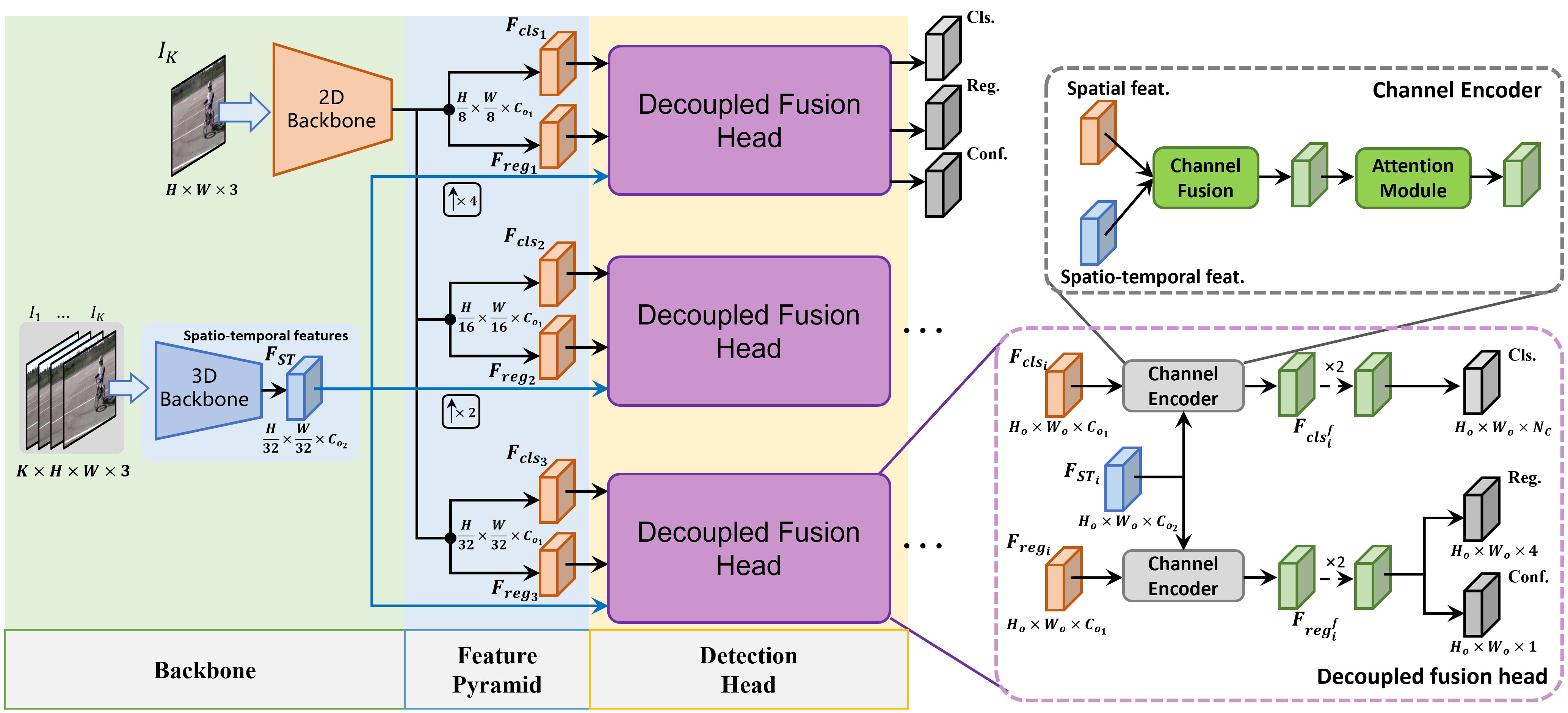

YOWOv2: A Stronger yet Efficient Multi-level Detection Framework for Real-time Spatio-temporal Action Detection


- We recommend using Anaconda to create a conda environment:

      conda create -n yowo python=3.6

- Then, activate the environment:

      conda activate yowo

- Install the requirements:

      pip install -r requirements.txt

You can download UCF24 from the following links:

- Google drive

Link: https://drive.google.com/file/d/1Dwh90pRi7uGkH5qLRjQIFiEmMJrAog5J/view?usp=sharing

- BaiduYun Disk

Link: https://pan.baidu.com/s/11GZvbV0oAzBhNDVKXsVGKg

Password: hmu6

You can use the instructions from here to prepare the AVA dataset.

- UCF101-24

| Model | Clip | GFLOPs | Params | F-mAP | V-mAP | FPS | Weight |
|---|---|---|---|---|---|---|---|
| YOWOv2-Nano | 16 | 1.3 | 3.5 M | 78.8 | 48.0 | 42 | ckpt |
| YOWOv2-Tiny | 16 | 2.9 | 10.9 M | 80.5 | 51.3 | 50 | ckpt |
| YOWOv2-Medium | 16 | 12.0 | 52.0 M | 83.1 | 50.7 | 42 | ckpt |
| YOWOv2-Large | 16 | 53.6 | 109.7 M | 85.2 | 52.0 | 30 | ckpt |
| YOWOv2-Nano | 32 | 2.0 | 3.5 M | 79.4 | 49.0 | 42 | ckpt |
| YOWOv2-Tiny | 32 | 4.5 | 10.9 M | 83.0 | 51.2 | 50 | ckpt |
| YOWOv2-Medium | 32 | 12.7 | 52.0 M | 83.7 | 52.5 | 40 | ckpt |
| YOWOv2-Large | 32 | 91.9 | 109.7 M | 87.0 | 52.8 | 22 | ckpt |

All FLOPs are measured on a video clip of 16 or 32 frames at 224×224 resolution. FPS is measured with batch size 1 on an RTX 3090 GPU, covering everything from model inference through the NMS operation.
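The FPS protocol described above (batch size 1, timed from the forward pass through NMS) can be sketched as a small timing harness. This is a minimal sketch, not the repository's benchmark code: `measure_fps`, `run_once`, and the warm-up and iteration counts are hypothetical names and values chosen for illustration. When timing a PyTorch model on a GPU, `run_once` should also call `torch.cuda.synchronize()` before returning, so that queued kernels are included in the measurement.

```python
import time


def measure_fps(run_once, num_warmup=10, num_iters=100):
    """Return the average number of calls to run_once per second.

    run_once is a hypothetical callable that processes one clip
    (batch size 1) from model inference through NMS.
    """
    # Warm-up calls are excluded from timing (caches, lazy initialization).
    for _ in range(num_warmup):
        run_once()

    start = time.perf_counter()
    for _ in range(num_iters):
        run_once()
    elapsed = time.perf_counter() - start

    return num_iters / elapsed


if __name__ == "__main__":
    # Stand-in workload; replace with a real inference + NMS call.
    def dummy_inference():
        sum(i * i for i in range(10_000))

    print(f"{measure_fps(dummy_inference):.1f} FPS")
```

Excluding warm-up iterations keeps one-time setup costs out of the average, which matters most for short benchmarks.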

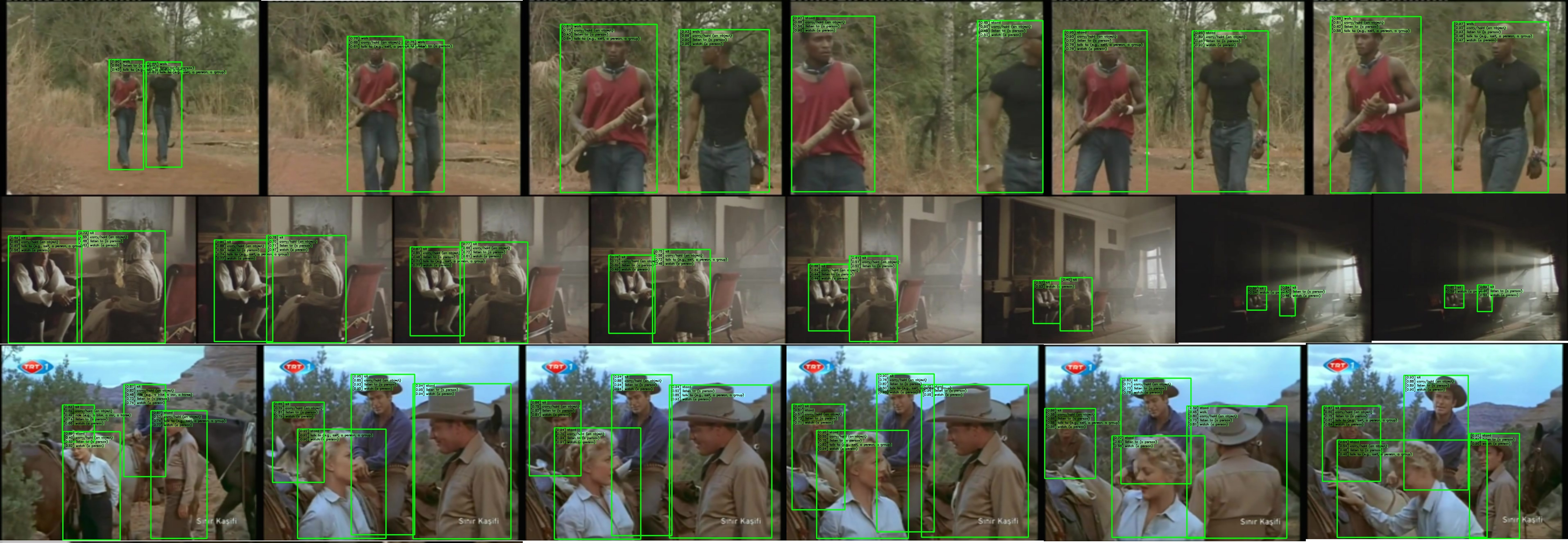

Qualitative results on UCF101-24

- AVA v2.2

| Model | Clip | mAP | FPS | Weight |
|---|---|---|---|---|
| YOWOv2-Nano | 16 | 12.6 | 40 | ckpt |
| YOWOv2-Tiny | 16 | 14.9 | 49 | ckpt |
| YOWOv2-Medium | 16 | 18.4 | 41 | ckpt |
| YOWOv2-Large | 16 | 20.2 | 29 | ckpt |
| YOWOv2-Nano | 32 | 12.7 | 40 | ckpt |
| YOWOv2-Tiny | 32 | 15.6 | 49 | ckpt |
| YOWOv2-Medium | 32 | 18.4 | 40 | ckpt |
| YOWOv2-Large | 32 | 21.7 | 22 | ckpt |

- UCF101-24

  For example:

      python train.py --cuda -d ucf24 --root path/to/dataset -v yowo_v2_nano --num_workers 4 --eval_epoch 1 --max_epoch 8 --lr_epoch 2 3 4 5 -lr 0.0001 -ldr 0.5 -bs 8 -accu 16 -K 16

  Or you can just run the script:

      sh train_ucf.sh

- AVA

  For example:

      python train.py --cuda -d ava_v2.2 --root path/to/dataset -v yowo_v2_nano --num_workers 4 --eval_epoch 1 --max_epoch 10 --lr_epoch 3 4 5 6 -lr 0.0001 -ldr 0.5 -bs 8 -accu 16 -K 16 --eval

  Or you can just run the script:

      sh train_ava.sh

If you have multiple GPUs, you can launch DDP to train YOWOv2, for example:

    python train.py --cuda -dist -d ava_v2.2 --root path/to/dataset -v yowo_v2_nano --num_workers 4 --eval_epoch 1 --max_epoch 10 --lr_epoch 3 4 5 6 -lr 0.0001 -ldr 0.5 -bs 8 -accu 16 -K 16 --eval

However, I do not have multiple GPUs, so I am not sure whether there are any bugs, or whether the reported performance can be reproduced using DDP.
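To make the training flags above concrete, here is a minimal sketch of the schedule they appear to imply. It assumes that `-bs` is the per-step batch size, `-accu` is the number of gradient-accumulation steps, and that the learning rate is multiplied by `-ldr` at each epoch listed in `--lr_epoch` (a multi-step decay). These readings are assumptions based on the flag names, not confirmed against `train.py`, and `effective_batch_size` and `lr_at_epoch` are hypothetical helpers.

```python
def effective_batch_size(batch_size, accumulation_steps):
    """Clips contributing to each optimizer update (assumed semantics)."""
    return batch_size * accumulation_steps


def lr_at_epoch(epoch, base_lr, milestones, decay_ratio):
    """Learning rate in a given epoch under multi-step decay (assumed)."""
    # Count how many milestones have been reached by this epoch.
    num_decays = sum(1 for m in milestones if epoch >= m)
    return base_lr * decay_ratio ** num_decays


if __name__ == "__main__":
    # Values from the UCF101-24 example: -bs 8 -accu 16 -lr 0.0001 -ldr 0.5
    print("effective batch size:", effective_batch_size(8, 16))
    for epoch in range(8):  # --max_epoch 8
        lr = lr_at_epoch(epoch, 1e-4, [2, 3, 4, 5], 0.5)
        print(f"epoch {epoch}: lr = {lr:.3e}")
```

Under these assumptions, each optimizer update sees 8 × 16 = 128 clips, and the learning rate falls from 1e-4 to 6.25e-6 once all four milestones have passed.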

- UCF101-24

  For example:

      python test.py --cuda -d ucf24 -v yowo_v2_nano --weight path/to/weight -size 224 --show

- AVA

  For example:

      python test.py --cuda -d ava_v2.2 -v yowo_v2_nano --weight path/to/weight -size 224 --show

For a video file, for example:

    python test_video_ava.py --cuda -d ava_v2.2 -v yowo_v2_nano --weight path/to/weight --video path/to/video --show

Note that `path/to/video` can point to any video on your local device, not only AVA videos.

- UCF101-24 For example:

# Frame mAP

python eval.py \

--cuda \

-d ucf24 \

-v yowo_v2_nano \

-bs 16 \

-size 224 \

--weight path/to/weight \

--cal_frame_mAP \# Video mAP

python eval.py \

--cuda \

-d ucf24 \

-v yowo_v2_nano \

-bs 16 \

-size 224 \

--weight path/to/weight \

--cal_video_mAP \- AVA

Run the following command to calculate frame mAP@0.5 IoU:

python eval.py \

--cuda \

-d ava_v2.2 \

-v yowo_v2_nano \

-bs 16 \

--weight path/to/weight# run demo

python demo.py --cuda -d ucf24 -v yowo_v2_nano -size 224 --weight path/to/weight --video path/to/video --show

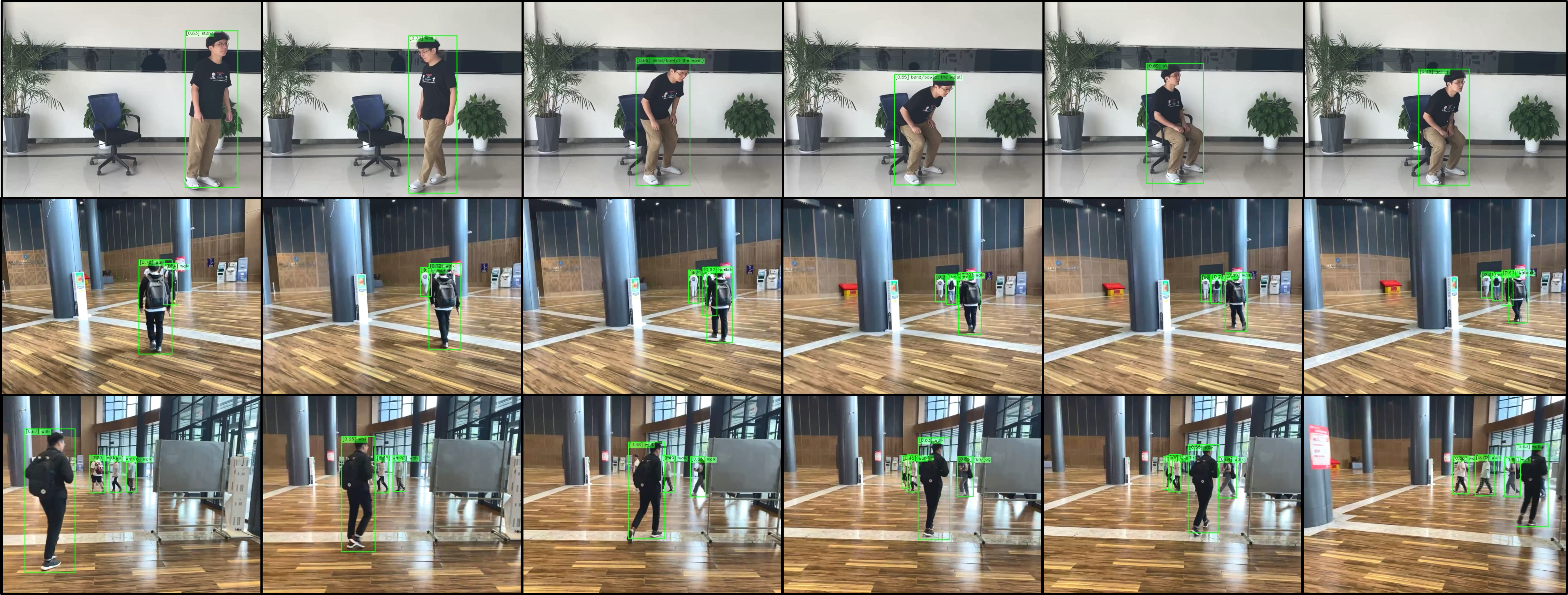

-d ava_v2.2Qualitative results in real scenarios
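The evaluation commands above report frame mAP at an IoU threshold of 0.5. As a minimal illustration of that matching criterion (not the repository's evaluation code), the sketch below computes the IoU of two boxes and checks it against the threshold. The `(x1, y1, x2, y2)` corner format and the names `box_iou` and `is_match` are assumptions made for this example.

```python
def box_iou(box_a, box_b):
    """IoU of two boxes given as (x1, y1, x2, y2) corner coordinates."""
    # Corners of the intersection rectangle.
    ix1 = max(box_a[0], box_b[0])
    iy1 = max(box_a[1], box_b[1])
    ix2 = min(box_a[2], box_b[2])
    iy2 = min(box_a[3], box_b[3])

    # Width and height are clamped to zero when the boxes do not overlap.
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)

    area_a = (box_a[2] - box_a[0]) * (box_a[3] - box_a[1])
    area_b = (box_b[2] - box_b[0]) * (box_b[3] - box_b[1])
    union = area_a + area_b - inter

    return inter / union if union > 0 else 0.0


def is_match(pred_box, gt_box, iou_threshold=0.5):
    """True if a predicted box overlaps a ground-truth box enough to match."""
    return box_iou(pred_box, gt_box) >= iou_threshold


if __name__ == "__main__":
    pred = (0, 0, 10, 10)
    gt = (0, 0, 10, 5)
    print(box_iou(pred, gt), is_match(pred, gt))
```

A full mAP computation additionally requires the predicted class to agree with the ground truth and ranks detections by confidence; this sketch covers only the overlap test.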

If you are using our code, please consider citing our paper.

    @article{yang2023yowov2,
      title={YOWOv2: A Stronger yet Efficient Multi-level Detection Framework for Real-time Spatio-temporal Action Detection},
      author={Yang, Jianhua and Kun, Dai},
      journal={arXiv preprint arXiv:2302.06848},
      year={2023}
    }