AniPortrait: Audio-Driven Synthesis of Photorealistic Portrait Animations

Huawei Wei, Zejun Yang, Zhisheng Wang

Tencent Games Zhiji, Tencent

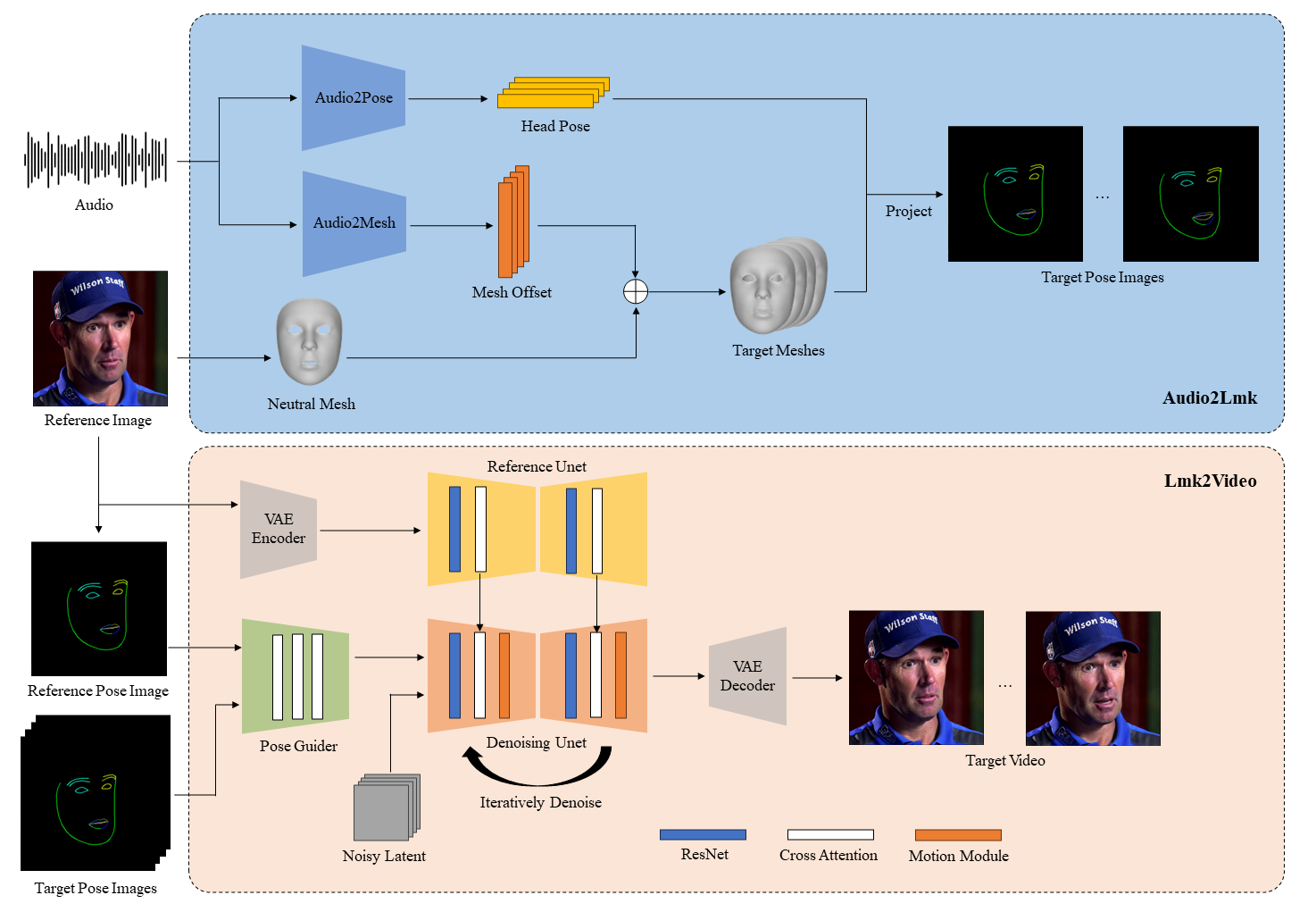

Here we propose AniPortrait, a novel framework for generating high-quality animation driven by audio and a reference portrait image. You can also provide a video to achieve face reenactment.

- Our paper is now available on arXiv.
- Updated the code to generate pose_temp.npy for head pose control.
- We will release the audio2pose pre-trained weights for audio2video after further optimization. In the meantime, you can choose a head pose template in ./configs/inference/head_pose_temp as a substitute.

Demo videos: cxk.mp4, solo.mp4, Aragaki.mp4, num18.mp4, jijin.mp4, kara.mp4, lyl.mp4, zl.mp4.

We recommend Python >= 3.10 and CUDA 11.7. Then build the environment as follows:

pip install git+https://github.com/painebenjamin/aniportrait.git

You can now use the command-line utility aniportrait. The following examples use files included in this repository:

aniportrait configs/inference/ref_images/solo.png --video configs/inference/video/Aragaki_song.mp4 --num-frames 64 --width 512 --height 512

Note: remove --num-frames 64 to match the length of the video.

aniportrait configs/inference/ref_images/lyl.png --audio configs/inference/video/lyl.wav --num-frames 96 --width 512 --height 512

Note: remove --num-frames 96 to match the length of the audio.
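When -nf/--num-frames is omitted, the help output says the CLI uses the length of the audio or video, and -fps/--frame-rate defaults to the video's FPS, or to 30 when no video is given. The sketch below estimates the frame count that implies. It assumes frames = duration × FPS rounded to the nearest frame, which is our assumption rather than the tool's documented formula; `expected_num_frames` and `wav_duration` are hypothetical helpers, and the WAV duration is read with the standard-library `wave` module.

```python
import wave
from typing import Optional

# Per the aniportrait help text, -fps defaults to 30 when no video is given.
DEFAULT_FPS = 30


def expected_num_frames(duration_s: float, video_fps: Optional[float] = None) -> int:
    """Estimate the frame count used when --num-frames is omitted.

    Assumption: frames = duration * fps, rounded to the nearest whole frame.
    The real CLI may round or trim differently.
    """
    fps = video_fps if video_fps else DEFAULT_FPS
    return round(duration_s * fps)


def wav_duration(path: str) -> float:
    """Return the duration of a PCM WAV file in seconds (standard library only)."""
    with wave.open(path, "rb") as wav:
        return wav.getnframes() / wav.getframerate()


if __name__ == "__main__":
    print(expected_num_frames(3.2))        # audio only, default 30 FPS -> 96
    print(expected_num_frames(3.2, 25.0))  # driving video at 25 FPS -> 80
```

Under this assumption, --num-frames 96 at the default 30 FPS covers about 3.2 seconds of audio, so dropping the flag on a longer clip produces a proportionally longer video.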

For help, run aniportrait --help.
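The -cf/--context-frames (default 16) and -co/--context-overlap (default 4) options in the help output suggest that long clips are processed in overlapping windows of frames. The following is a minimal sketch of a plain sliding-window schedule under that reading. The stride rule (window size minus overlap), the handling of the final window, and the `context_windows` helper are all our assumptions, not the scheduler aniportrait actually uses, which may order or wrap windows differently.

```python
from typing import List


def context_windows(num_frames: int, context_frames: int = 16, overlap: int = 4) -> List[List[int]]:
    """Split frame indices into overlapping, fixed-length windows.

    Illustrative assumption: consecutive windows start `context_frames - overlap`
    frames apart, and the last window is shifted back so it stays full length.
    """
    if not 0 <= overlap < context_frames:
        raise ValueError("overlap must satisfy 0 <= overlap < context_frames")
    if num_frames <= 0:
        return []
    if num_frames <= context_frames:
        # Short clips fit in a single window.
        return [list(range(num_frames))]
    stride = context_frames - overlap
    windows = []
    start = 0
    while True:
        end = min(start + context_frames, num_frames)
        windows.append(list(range(end - context_frames, end)))
        if end == num_frames:
            break
        start += stride
    return windows


if __name__ == "__main__":
    # 64 frames, as in the Aragaki example, with the CLI defaults.
    print([w[0] for w in context_windows(64)])  # [0, 12, 24, 36, 48]
```

With the defaults, consecutive windows start 12 frames apart and share 4 frames; a larger overlap smooths transitions between windows at the cost of more windows to denoise.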

Usage: aniportrait [OPTIONS] INPUT_IMAGE

Run AniPortrait on an input image with a video, and/or audio file.
- When only a video file is provided, a video-to-video (face reenactment) animation is performed.
- When only an audio file is provided, an audio-to-video (lip-sync) animation is performed.
- When both a video and audio file are provided, a video-to-video animation is performed with the audio as guidance for the face and mouth movements.
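The three modes described in the help text reduce to a small decision on which driving inputs are present. A minimal sketch of that rule follows; the `Mode` enum and `select_mode` function are hypothetical names for illustration, and raising an error when neither input is given is an assumption, since the help text does not say what happens in that case.

```python
from enum import Enum
from typing import Optional


class Mode(Enum):
    VIDEO_TO_VIDEO = "video-to-video (face reenactment)"
    AUDIO_TO_VIDEO = "audio-to-video (lip-sync)"
    VIDEO_TO_VIDEO_AUDIO_GUIDED = "video-to-video with audio guidance"


def select_mode(video: Optional[str], audio: Optional[str]) -> Mode:
    """Mirror the mode rules stated in the aniportrait help text."""
    if video and audio:
        # Both inputs: the video drives the animation, the audio guides face and mouth.
        return Mode.VIDEO_TO_VIDEO_AUDIO_GUIDED
    if video:
        return Mode.VIDEO_TO_VIDEO
    if audio:
        return Mode.AUDIO_TO_VIDEO
    # Assumption: the help text does not cover this case.
    raise ValueError("Provide a video file, an audio file, or both.")


if __name__ == "__main__":
    print(select_mode(None, "lyl.wav").value)  # audio-to-video (lip-sync)
```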

Options:
-v, --video FILE  Video file to drive the animation.
-a, --audio FILE  Audio file to drive the animation.
-fps, --frame-rate INTEGER  Video FPS. Also controls the sampling rate of the audio. Will default to the video FPS if a video file is provided, or 30 if not.
-cfg, --guidance-scale FLOAT  Guidance scale for the diffusion process. [default: 3.5]
-ns, --num-inference-steps INTEGER  Number of diffusion steps. [default: 20]
-cf, --context-frames INTEGER  Number of context frames to use. [default: 16]
-co, --context-overlap INTEGER  Number of context frames to overlap. [default: 4]
-nf, --num-frames INTEGER  An explicit number of frames to use. When not passed, use the length of the audio or video
-s, --seed INTEGER  Random seed.
-w, --width INTEGER  Output video width. Defaults to the input image width.
-h, --height INTEGER  Output video height. Defaults to the input image height.
-m, --model TEXT  HuggingFace model name.
-nh, --no-half  Do not use half precision.
-g, --gpu-id INTEGER  GPU ID to use.
-sf, --single-file  Download and use a single file instead of a directory.
-cf, --config-file TEXT  Config file to use when using the single-file option. Accepts a path or a filename in the same directory as the single file. Will download from the repository passed in the model option if not provided. [default: config.json]
-mf, --model-filename TEXT  The model file to download when using the single-file option. [default: aniportrait.safetensors]
-rs, --remote-subfolder TEXT  Remote subfolder to download from when using the single-file option.
-c, --cache-dir DIRECTORY  Cache directory to download to. Default uses the huggingface cache.
-o, --output FILE  Output file. [default: output.mp4]

--help  Show this message and exit.

Extract keypoints from raw videos and write the training JSON file (here is an example of processing VFHQ):

python -m scripts.preprocess_dataset --input_dir VFHQ_PATH --output_dir SAVE_PATH --training_json JSON_PATH

Update the following lines in the training config file:

data:
  json_path: JSON_PATH

Run command:

accelerate launch train_stage_1.py --config ./configs/train/stage1.yaml

Put the pretrained motion module weights mm_sd_v15_v2.ckpt (download link) under ./pretrained_weights.
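Stage 2 expects the motion module weights to be in place before launch, so a small pre-flight check can catch a missing file early. This is a minimal sketch; `check_motion_module` is a hypothetical helper, and it assumes only the directory and filename given in this step, not anything about how train_stage_2.py locates or loads the weights.

```python
from pathlib import Path

# Directory and filename taken from the stage 2 instructions.
WEIGHTS_DIR = "./pretrained_weights"
MOTION_MODULE = "mm_sd_v15_v2.ckpt"


def check_motion_module(weights_dir: str = WEIGHTS_DIR, filename: str = MOTION_MODULE) -> Path:
    """Return the motion module path, or raise if the file is not in place."""
    path = Path(weights_dir) / filename
    if not path.is_file():
        raise FileNotFoundError(f"Motion module weights not found at {path}")
    return path


if __name__ == "__main__":
    try:
        print(f"Found {check_motion_module()}")
    except FileNotFoundError as err:
        print(err)
```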

Specify the stage1 training weights in the config file stage2.yaml, for example:

stage1_ckpt_dir: './exp_output/stage1'
stage1_ckpt_step: 30000

Run command:

accelerate launch train_stage_2.py --config ./configs/train/stage2.yaml

We first thank the authors of EMO; some of the images and audio clips in our demos are from EMO. Additionally, we would like to thank the contributors to the Moore-AnimateAnyone, magic-animate, animatediff and Open-AnimateAnyone repositories for their open research and exploration.

@misc{wei2024aniportrait,
  title={AniPortrait: Audio-Driven Synthesis of Photorealistic Portrait Animations},
  author={Huawei Wei and Zejun Yang and Zhisheng Wang},
  year={2024},
  eprint={2403.17694},
  archivePrefix={arXiv},
  primaryClass={cs.CV}
}