Automatically set up and deploy an MLflow server. This includes:

- An MLflow server

- A Postgres database to store MLflow metadata, such as:
  - experiment data;
  - run data;
  - model registry data.

- Minio, an open-source object storage system, to store and retrieve large files, such as:
  - model artifacts;
  - data sets.

As an alternative to an Ubuntu machine, you can use WSL2 on a Windows machine.

1. Install pyenv

Pyenv is used with MLflow to manage different Python versions and packages in isolated environments.

- Remove previous installations (optional):

      rm -rf ~/.pyenv

- Install the necessary packages:

      sudo apt-get update -y
      sudo apt-get install -y make build-essential libssl-dev zlib1g-dev libbz2-dev libreadline-dev libsqlite3-dev wget curl llvm libncursesw5-dev xz-utils tk-dev libxml2-dev libxmlsec1-dev libffi-dev liblzma-dev

- Automatically install `pyenv`:

      curl https://pyenv.run | bash

- Edit the `~/.bashrc` file so that it recognizes `pyenv`:

      sudo nano ~/.bashrc

- Copy/paste the following lines at the end of the file:

      # Config for PyEnv
      export PYENV_ROOT="$HOME/.pyenv"
      export PATH="$PYENV_ROOT/bin:$PATH"
      eval "$(pyenv init --path)"

- Save and close (`Ctrl-X` > `y` > `Enter`), then reload the `~/.bashrc` file:

      source ~/.bashrc

- Create and activate your MLflow experiment environment:

      conda create -n mlflow_env python=3.10
      conda activate mlflow_env
      pip install pandas scikit-learn mlflow[extras]

- Add these to the environment; edit to your own preferred secrets:

      export AWS_ACCESS_KEY_ID=minio
      export AWS_SECRET_ACCESS_KEY=minio123
      export MLFLOW_S3_ENDPOINT_URL=http://localhost:9000
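If you prefer to set these variables from inside a Python script rather than the shell, the following is a minimal sketch of one way to do it. The helper name `apply_defaults` is hypothetical, and the `MLFLOW_TRACKING_URI` value is an assumption based on the MLflow UI address used in this README (`http://localhost:5000`). Variables that are already exported in your shell are left untouched.

```python
import os

# Values copied from the export lines above. The tracking URI is an assumption:
# it points at the MLflow UI address used in this README.
DEFAULTS = {
    "AWS_ACCESS_KEY_ID": "minio",
    "AWS_SECRET_ACCESS_KEY": "minio123",
    "MLFLOW_S3_ENDPOINT_URL": "http://localhost:9000",
    "MLFLOW_TRACKING_URI": "http://localhost:5000",
}


def apply_defaults(env=os.environ, defaults=DEFAULTS):
    """Set each variable only if it is not already defined; return the final values."""
    for name, value in defaults.items():
        env.setdefault(name, value)
    return {name: env[name] for name in defaults}
```

Calling `apply_defaults()` at the top of a training script, before importing `mlflow`, should have the same effect as the exports for that process only.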

-

Clone this repo and navigate inside:

git clone https://github.com/pandego/mlflow-postgres-minio.git cd ./mlflow-postgres-minio -

Edit

default.envto your own preferred secrets: -

Launch the

docker-composecommand to build and start all containers needed for the MLflow service:docker compose --env-file default.env up -d --build

-

Give it a few minutes and once

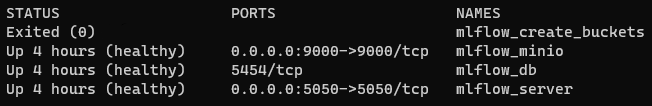

docker-composeis finished, check the containers health:docker ps

-

You should see something like this:

-

You should also be able to navigate to:

- The MLflow UI -> http://localhost:5000

- The Minio UI -> http://localhost:9000
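As an alternative to opening both URLs in a browser, the sketch below checks from Python whether something answers at each address. It uses only the standard library; the names `is_up`, `check_services` and `SERVICES` are hypothetical, and the check only tells you that an HTTP server replied, not that the service is fully healthy.

```python
import urllib.error
import urllib.request

# URLs taken from the list above.
SERVICES = {
    "mlflow": "http://localhost:5000",
    "minio": "http://localhost:9000",
}


def is_up(url, timeout=3.0):
    """Return True if anything answers HTTP at `url`, even with an error status."""
    try:
        with urllib.request.urlopen(url, timeout=timeout):
            return True
    except urllib.error.HTTPError:
        # The server replied (for example with 403 or 404), so it is reachable.
        return True
    except OSError:
        # Connection refused, DNS failure, timeout, and similar errors.
        return False


def check_services(urls, timeout=3.0):
    """Map each service name to True/False depending on whether it answered."""
    return {name: is_up(url, timeout) for name, url in urls.items()}
```

Running `print(check_services(SERVICES))` once the containers are started should report `True` for both entries if the ports match the ones above.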

That's it! 🥳 You can now start using MLflow!

- From your previously created MLflow environment,

mlflow_env, simply run the example provided in this repo:python ./wine_quality_example/wine_quality_example.py

-

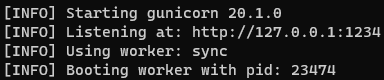

From your previously created MLflow environment,

mlflow_env, serve the model by running the following command, replacing the<full_path>for your own:mlflow models serve -m <full_path> -h 127.0.0.1 -p 1234 --timeout 0

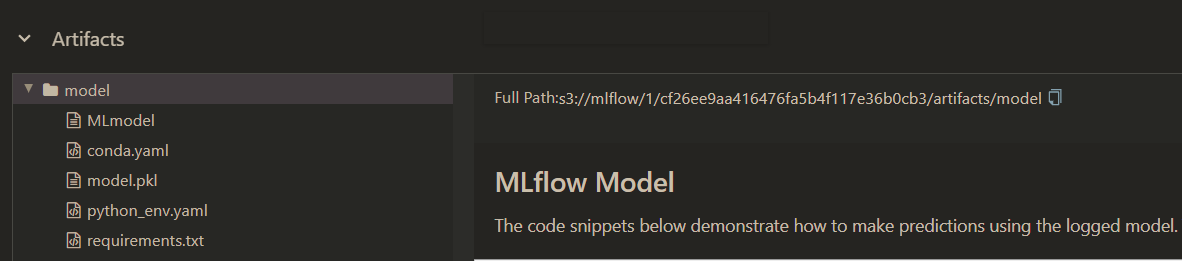

You just have to replace

<full_path>by the full path to your model artifact as provided in the MLFlow web UI.

-

Let it run, it should look like this:

MLflow also allows you to build a dockerized API based on a model stored in one of your runs.

- The following command allows you to build this dockerized API:

      mlflow models build-docker \
        -m <full_path> \
        -n adorable-mouse \
        --enable-mlserver

- All that is left to do is to run this container:

      docker run -p 1234:8080 adorable-mouse

- In case you just want to generate a Dockerfile for later use, use the following command:

      mlflow models generate-dockerfile \
        -m <full_path> \
        -d ./adorable-mouse \
        --enable-mlserver

- You can then include it in a `docker-compose.yml`:

      version: '3.8'
      services:
        mlflow-model-serve-from-adorable-mouse:
          build: ./adorable-mouse
          image: adorable-mouse
          container_name: adorable-mouse_instance
          restart: always
          ports:
            - 1234:8080
          healthcheck:
            test: ["CMD", "curl", "-f", "http://localhost:8080/health/"]
            interval: 30s
            timeout: 10s
            retries: 3

- You can run this `docker-compose.yml` with the following command:

      docker compose up
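The container may need some time before it accepts requests. The sketch below is a rough client-side approximation of the `healthcheck` settings above (a fixed number of attempts with a pause between them); it is not how Docker itself implements health checks. The function name `wait_until_healthy` and its `check` callable are hypothetical: `check` is any function you supply that returns `True` once the service responds.

```python
import time


def wait_until_healthy(check, retries=3, interval=30.0, sleep=time.sleep):
    """Call `check()` up to `retries` times, pausing `interval` seconds between tries.

    Returns True as soon as a check succeeds, or False if every attempt fails.
    """
    for attempt in range(1, retries + 1):
        if check():
            return True
        if attempt < retries:
            # Wait before the next attempt, but not after the last one.
            sleep(interval)
    return False
```

For a quick local test you would typically pass a shorter `interval` than the 30 seconds used in the compose file.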

- In a different terminal, send a request to test the served model:

      curl -X POST -H "Content-Type: application/json" --data '{"dataframe_split": {"data": [[7.4,0.7,0,1.9,0.076,11,34,0.9978,3.51,0.56,9.4]], "columns": ["fixed acidity","volatile acidity","citric acid","residual sugar","chlorides","free sulfur dioxide","total sulfur dioxide","density","pH","sulphates","alcohol"]}}' http://127.0.0.1:1234/invocations

- The output should be something like the following:

      {"predictions": [5.576883967129616]}
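The same request can be sent from Python. The following is a minimal sketch using only the standard library; it builds the same `dataframe_split` payload as the `curl` command and posts it to the same URL. The names `build_request` and `predict` are hypothetical helpers, not part of MLflow.

```python
import json
import urllib.request

# Column names copied from the curl example above.
COLUMNS = [
    "fixed acidity", "volatile acidity", "citric acid", "residual sugar",
    "chlorides", "free sulfur dioxide", "total sulfur dioxide", "density",
    "pH", "sulphates", "alcohol",
]


def build_request(rows, url="http://127.0.0.1:1234/invocations", columns=COLUMNS):
    """Build a POST request carrying `rows` in the dataframe_split format."""
    payload = {"dataframe_split": {"columns": columns, "data": rows}}
    return urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def predict(rows, **kwargs):
    """Send the request to the served model and return the decoded JSON reply."""
    request = build_request(rows, **kwargs)
    with urllib.request.urlopen(request, timeout=10) as response:
        return json.loads(response.read().decode("utf-8"))
```

With the model being served, `predict([[7.4, 0.7, 0, 1.9, 0.076, 11, 34, 0.9978, 3.51, 0.56, 9.4]])` should return a dictionary with a `predictions` key, like the output shown above.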

🎊 Et voilà! 🎊