News

- 05/08/2023: The code and the pretrained model are released!
- 23/10/2023: We have collected recent advances in human avatars in this repo. Stars are welcome!

Official code for the ICCV 2023 paper:

TransHuman: A Transformer-based Human Representation for Generalizable Neural Human Rendering

Xiao Pan (1, 2), Zongxin Yang (1), Jianxin Ma (2), Chang Zhou (2), Yi Yang (1)

(1) ReLER Lab, CCAI, Zhejiang University; (2) Alibaba DAMO Academy

[Project Page | arXiv]

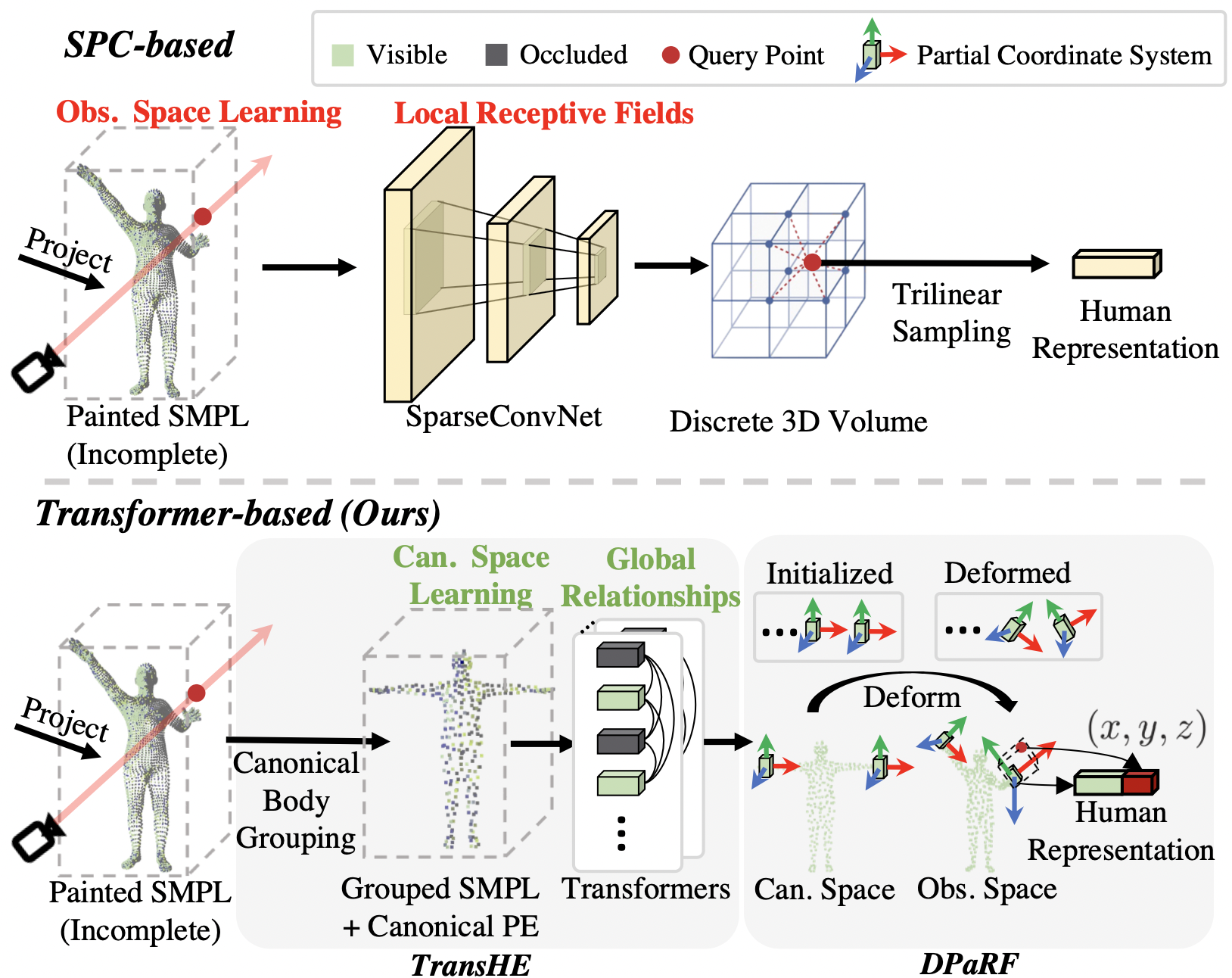

- We present TransHuman, a new framework for generalizable neural human rendering that learns the painted SMPL in the canonical space and captures the global relationships between human parts with transformers.

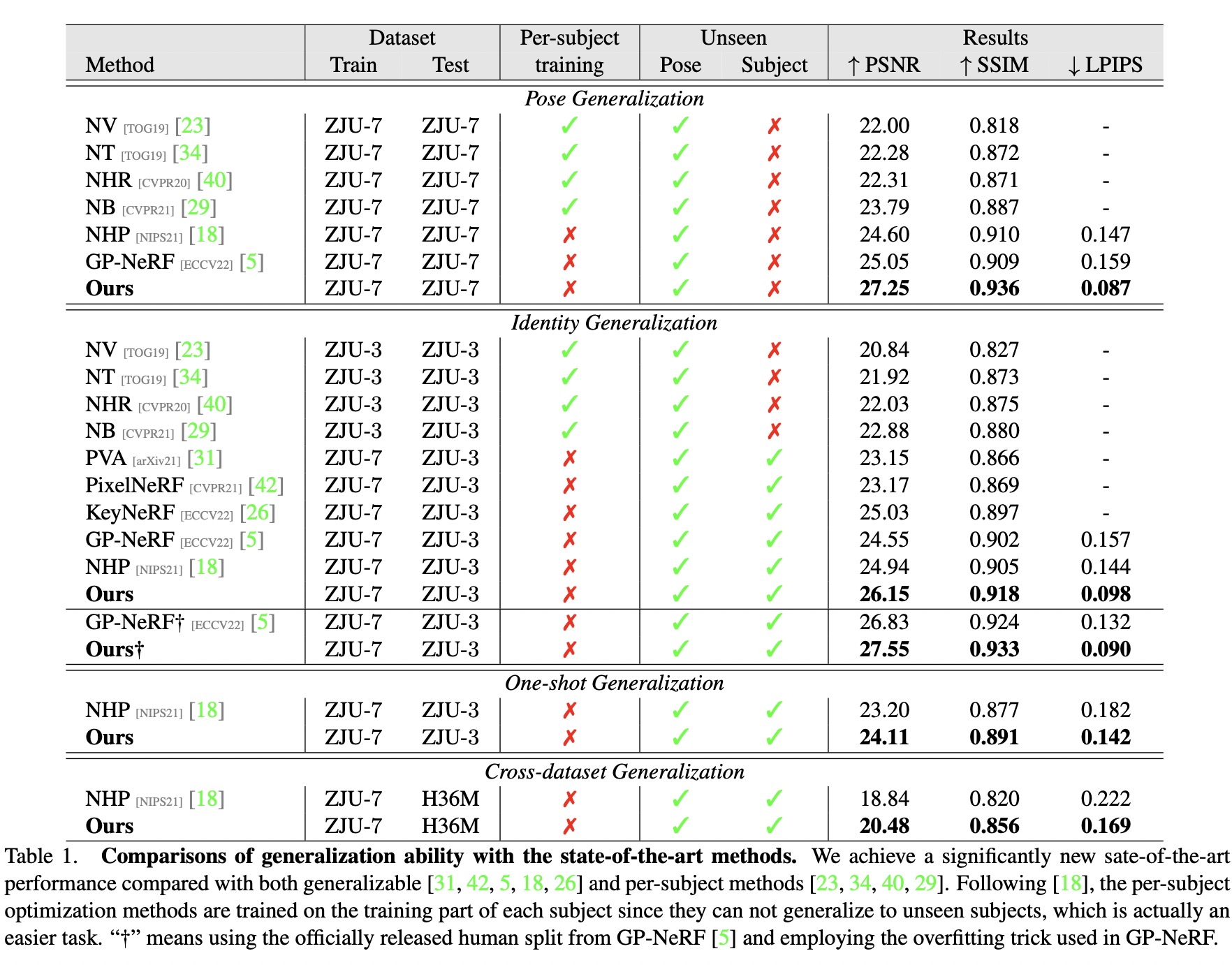

- We achieve state-of-the-art (SOTA) performance across various settings and datasets.

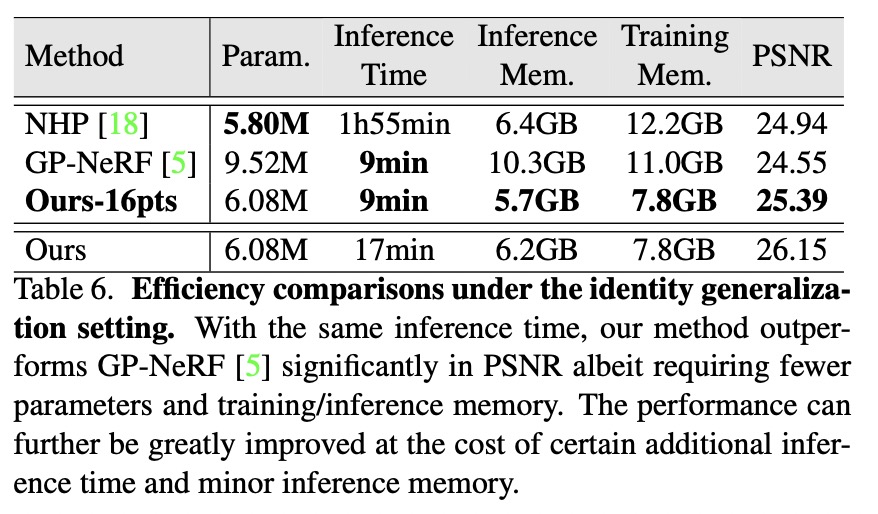

- We also achieve better efficiency.
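To illustrate what "global relationships between human parts" means in the first highlight, here is a minimal, pure-Python sketch of single-head scaled dot-product self-attention over a few hypothetical part tokens. It is a generic illustration under simplifying assumptions (identity query/key/value projections, one head, made-up 2-D features), not TransHuman's actual transformer or its tokenization of the painted SMPL.

```python
import math
from typing import List

Vector = List[float]


def softmax(scores: Vector) -> Vector:
    # Subtract the max before exponentiating for numerical stability.
    top = max(scores)
    exps = [math.exp(s - top) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def dot(a: Vector, b: Vector) -> float:
    return sum(x * y for x, y in zip(a, b))


def self_attention(tokens: List[Vector]) -> List[Vector]:
    """Single-head scaled dot-product self-attention (generic illustration only).

    Identity projections are used for queries, keys and values, so this is NOT
    TransHuman's transformer; it only shows that every output token is a
    weighted average over *all* input tokens.
    """
    dim = len(tokens[0])
    scale = 1.0 / math.sqrt(dim)
    outputs = []
    for query in tokens:
        # One attention weight per token, including the query token itself.
        weights = softmax([dot(query, key) * scale for key in tokens])
        outputs.append([
            sum(w * value[i] for w, value in zip(weights, tokens))
            for i in range(dim)
        ])
    return outputs


# Three hypothetical part tokens with made-up 2-D features.
parts = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
mixed = self_attention(parts)
```

Because each output is a softmax-weighted mix of every token, any part can draw on any other part directly, no matter how far apart the two parts are on the body.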

We test with:

- python==3.6.12

- pytorch==1.10.2

- cuda==11.3
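A quick way to compare your environment against the tested versions above is sketched below. It is a hypothetical helper, not part of the repository, and it treats two versions as matching when their major.minor components agree, which is an assumption (patch releases are usually, but not always, compatible). `torch.version.cuda` reports the CUDA version PyTorch was built with.

```python
import platform

# Versions listed above as tested; other versions may work but are untested.
TESTED = {"python": "3.6.12", "pytorch": "1.10.2", "cuda": "11.3"}


def same_release(found, expected, parts=2):
    """Hypothetical check: True if the first `parts` version components agree."""
    if found is None:
        return False
    # Drop local build tags such as "+cu113" before comparing.
    clean = str(found).split("+")[0]
    return clean.split(".")[:parts] == expected.split(".")[:parts]


def detect_versions():
    """Collect versions from this environment; torch entries stay None without torch."""
    found = {"python": platform.python_version(), "pytorch": None, "cuda": None}
    try:
        import torch
        found["pytorch"] = str(torch.__version__)
        found["cuda"] = torch.version.cuda  # None for CPU-only builds
    except ImportError:
        pass
    return found


def report(found, tested=TESTED):
    """Map each tested component to whether the found version matches it."""
    return {name: same_release(found.get(name), want) for name, want in tested.items()}


if __name__ == "__main__":
    for name, ok in report(detect_versions()).items():
        print(name, "matches the tested release" if ok else "differs from the tested release")
```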

Run the following steps under the TransHuman directory:

- Create and activate a conda environment: `conda create -n transhuman python=3.6`, then `conda activate transhuman`.
- Install PyTorch via conda following https://pytorch.org/get-started/locally/. Make sure that the CUDA version of PyTorch is consistent with the system CUDA.
- Install the requirements: `pip install -r requirements.txt`.
- Install pytorch3d (we build it from source) following https://github.com/facebookresearch/pytorch3d/blob/main/INSTALL.md.
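The install steps ask that PyTorch's CUDA match the system CUDA. One way to check this, assuming "system CUDA" means the toolkit whose `nvcc` is on `PATH` (the one a from-source pytorch3d build compiles against), is to compare `torch.version.cuda` with the release reported by `nvcc --version`. The helper names below are hypothetical.

```python
import re
import shutil
import subprocess


def parse_nvcc_release(text):
    """Extract e.g. "11.3" from nvcc output ('Cuda compilation tools, release 11.3, ...')."""
    match = re.search(r"release (\d+\.\d+)", text)
    return match.group(1) if match else None


def system_cuda_release():
    """Return the release reported by `nvcc --version`, or None if nvcc is not on PATH."""
    if shutil.which("nvcc") is None:
        return None
    out = subprocess.run(["nvcc", "--version"], stdout=subprocess.PIPE,
                         universal_newlines=True, check=True).stdout
    return parse_nvcc_release(out)


def cuda_consistent(torch_cuda, system_cuda):
    """True only when both versions are known and agree on major.minor."""
    if torch_cuda is None or system_cuda is None:
        return False
    return torch_cuda.split(".")[:2] == system_cuda.split(".")[:2]
```

For example, `cuda_consistent(torch.version.cuda, system_cuda_release())` should return `True` in an environment set up as described above.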

Please follow NHP to prepare the ZJU-MoCap dataset.

The final structure of the data folder (under the TransHuman directory) should be:

- `data`
  - `smplx`
    - `smpl`
    - ...
  - `zju_mocap`
    - `CoreView_313`
    - ...
  - `zju_rasterization`
    - `CoreView_313`
    - ...

TODO: Code for more datasets is coming.
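Before training, a small check like the following (a hypothetical helper, not shipped with the repo) can confirm that the folders from the tree above exist. It only checks the directories the tree names explicitly; `CoreView_313` is the one subject shown there, and the `...` entries are not checked.

```python
from pathlib import Path

# Sub-directories taken from the tree above (relative to ./data). CoreView_313 is
# the only subject the tree names; the "..." entries are not checked here.
EXPECTED = [
    "smplx/smpl",
    "zju_mocap/CoreView_313",
    "zju_rasterization/CoreView_313",
]


def missing_entries(root="data", expected=EXPECTED):
    """Return the expected sub-directories that do not exist under `root`."""
    base = Path(root)
    return [rel for rel in expected if not (base / rel).is_dir()]


if __name__ == "__main__":
    # Run from the TransHuman directory.
    missing = missing_entries()
    print("data layout looks complete" if not missing else "missing: " + ", ".join(missing))
```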

Train the model by running `sh ./scripts/train.sh`. The checkpoints will be saved under `./data/trained_model/transhuman/$EXP_NAME`.

Evaluate a trained model by running `sh ./scripts/test.sh $GPU_NUMBER $EPOCH_NUMBER $EXP_NAME`. The configs for the different settings are provided in `test.sh`; modify them as needed.
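When comparing several checkpoints, the positional arguments of `test.sh` ($GPU_NUMBER, $EPOCH_NUMBER, $EXP_NAME) can be driven from Python. The sketch below is a hypothetical wrapper that simply shells out to `test.sh` once per epoch; it assumes it is run from the TransHuman directory and that the configs inside `test.sh` are already set for your setting.

```python
import subprocess

TEST_SCRIPT = "./scripts/test.sh"


def build_test_argv(gpu, epoch, exp_name):
    """Argument list for `sh ./scripts/test.sh $GPU_NUMBER $EPOCH_NUMBER $EXP_NAME`."""
    return ["sh", TEST_SCRIPT, str(gpu), str(epoch), exp_name]


def evaluate_epochs(gpu, epochs, exp_name):
    """Hypothetical wrapper: run test.sh once per epoch, stopping at the first failure.

    Assumes the working directory is the TransHuman repo and that the settings
    inside test.sh are already configured.
    """
    for epoch in epochs:
        subprocess.run(build_test_argv(gpu, epoch, exp_name), check=True)
```

For example, `evaluate_epochs(0, [2100], "official")` issues `sh ./scripts/test.sh 0 2100 official`.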

To reproduce the results in the paper, download the official checkpoints from here, put them under `./data/trained_model/transhuman/official`, and run:

`sh ./scripts/test.sh 0 2100 official`
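To check which epochs can be passed as $EPOCH_NUMBER, you could list the checkpoint files in the experiment directory. This sketch assumes, hypothetically, that checkpoints are stored as `<epoch>.pth` (for example `2100.pth`); the naming is not stated here, so adjust `CKPT_PATTERN` to what you actually find on disk.

```python
import re
from pathlib import Path

# ASSUMPTION (hypothetical naming): one checkpoint per epoch named "<epoch>.pth".
CKPT_PATTERN = re.compile(r"^(\d+)\.pth$")


def available_epochs(exp_name, root="./data/trained_model/transhuman"):
    """Return sorted epoch numbers that have a checkpoint file for `exp_name`."""
    exp_dir = Path(root) / exp_name
    if not exp_dir.is_dir():
        return []
    epochs = []
    for path in exp_dir.iterdir():
        match = CKPT_PATTERN.match(path.name)
        if match and path.is_file():
            epochs.append(int(match.group(1)))
    return sorted(epochs)
```

If the official checkpoint is in place and the naming assumption holds, `available_epochs("official")` should include 2100.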

- Render the free-viewpoint frames by running `sh ./scripts/video.sh $GPU_NUMBER $EPOCH_NUMBER $EXP_NAME`. The rendered frames will be saved under `./data/perform/$EXP_NAME`.
- Use `gen_freeview_video.py` to generate the final video.
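If you want to inspect the rendered frames or pass them to another tool, note that plain lexicographic sorting puts `frame_10` before `frame_2`. The sketch below sorts them numerically instead. It assumes the frames are `.png` files somewhere under `./data/perform/$EXP_NAME` (the file format and naming are assumptions), and it is not a replacement for `gen_freeview_video.py`.

```python
import re
from pathlib import Path


def natural_key(text):
    """Split out digit runs so that 'frame_2' sorts before 'frame_10'."""
    # re.split with a capturing group alternates text chunks (even indices)
    # and digit chunks (odd indices), so ints are only compared with ints.
    return [int(chunk) if i % 2 else chunk
            for i, chunk in enumerate(re.split(r"(\d+)", text))]


def rendered_frames(exp_name, root="./data/perform", suffix=".png"):
    """List frame files under root/exp_name in numeric order (format is an assumption)."""
    frame_dir = Path(root) / exp_name
    if not frame_dir.is_dir():
        return []
    return sorted(frame_dir.rglob("*" + suffix), key=lambda p: natural_key(str(p)))
```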

- Extract the meshes by running `sh ./scripts/mesh.sh $GPU_NUMBER $EPOCH_NUMBER $EXP_NAME`. The meshes will be saved under `./data/mesh/$EXP_NAME`.

- Render the meshes using `render_mesh_dynamic.py`. The rendered frames will also be saved under `./data/mesh/$EXP_NAME`.
- Use `gen_freeview_video.py` to generate the final video.

If you find our work useful, please kindly cite:

@InProceedings{Pan_2023_ICCV,

author = {Pan, Xiao and Yang, Zongxin and Ma, Jianxin and Zhou, Chang and Yang, Yi},

title = {TransHuman: A Transformer-based Human Representation for Generalizable Neural Human Rendering},

booktitle = {Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV)},

month = {October},

year = {2023},

pages = {3544-3555}

}

For questions, feel free to contact xiaopan@zju.edu.cn.

This project is mainly based on the code from NHP and humannerf. We also thank Sida Peng of Zhejiang University for helpful discussions on the details of the ZJU-MoCap dataset.