This repository shares the documentation and development kit of the View of Delft automotive dataset.

- Introduction

- Sensors and Data

- Annotation

- Access

- Getting Started

- Examples and Demo

- Citation

- Original paper

- Code on GitHub

- Links

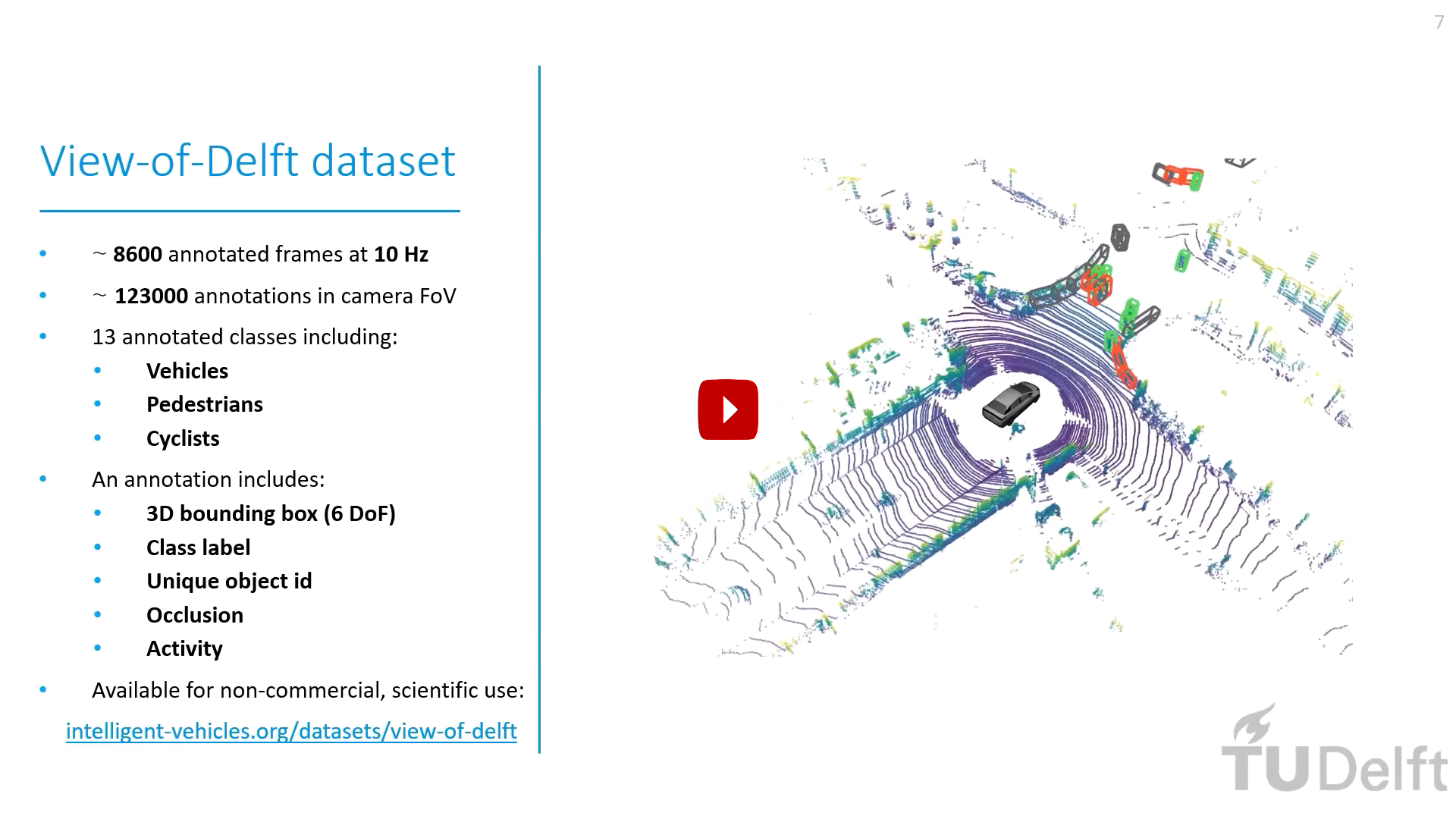

The View-of-Delft (VoD) dataset is a novel automotive dataset containing 8600 frames of synchronized and calibrated 64-layer LiDAR, (stereo) camera, and 3+1D radar data acquired in complex urban traffic. It contains more than 123000 3D bounding box annotations, including more than 26000 pedestrian, 10000 cyclist, and 26000 car labels.

A short introduction video of the dataset can be found here (click the thumbnail below):

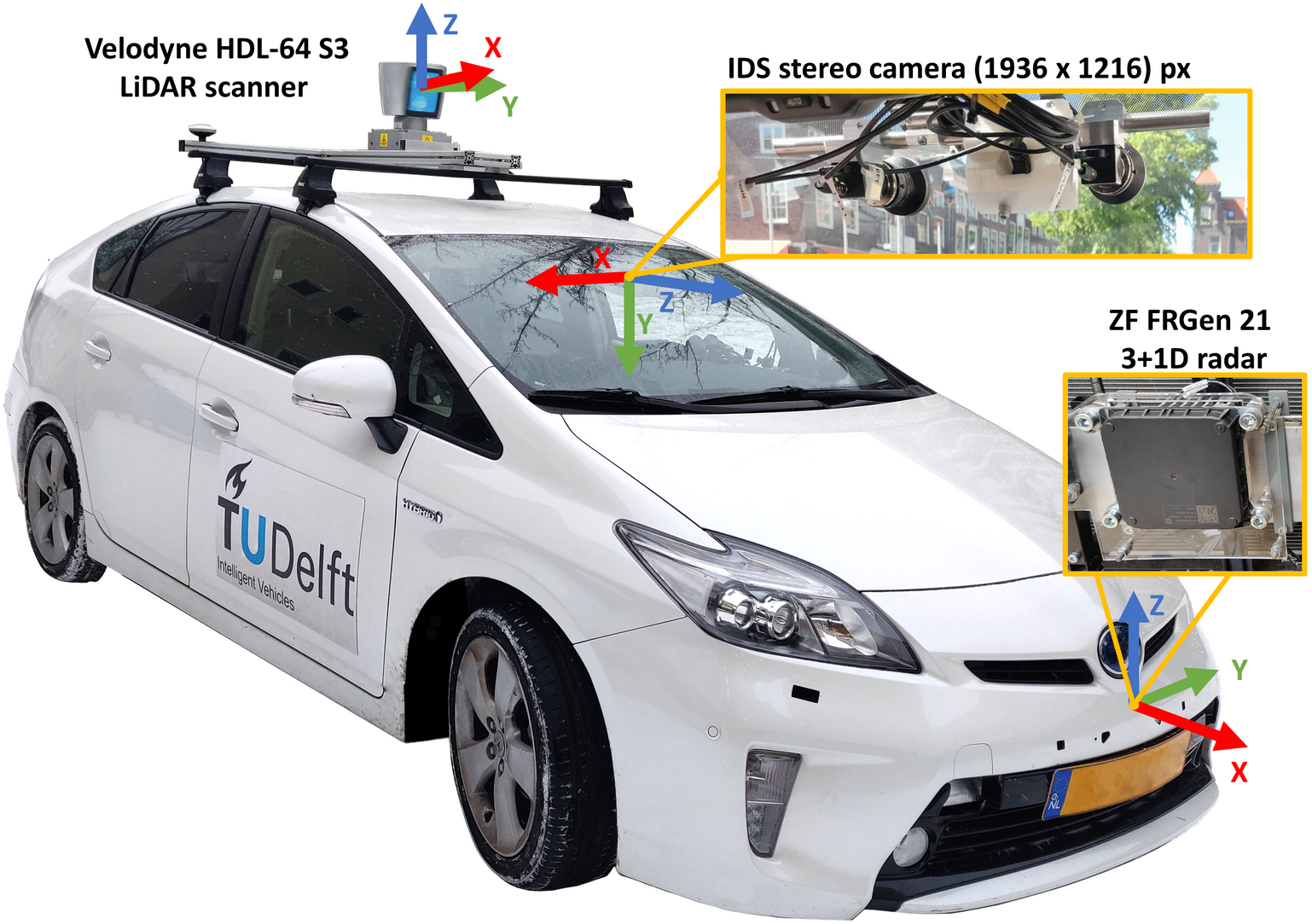

We recorded the output of the following sensors:

- a ZF FRGen21 3+1D radar (∼13 Hz) mounted behind the front bumper,

- a stereo camera (1936 × 1216 px, ∼30 Hz) mounted on the windshield,

- a Velodyne HDL-64 S3 LiDAR scanner (∼10 Hz) on the roof, and

- the ego vehicle’s odometry (filtered combination of RTK GPS, IMU, and wheel odometry, ∼100 Hz).

All sensors were jointly calibrated. See the figure below for a general overview of the sensor setup.

For details about the sensors, the data streams, and the format of the provided data, please refer to the SENSORS and DATA documentation.

The dataset contains 3D bounding box annotations for 13 road user classes with occlusion and activity information, along with a track ID to follow objects across frames. For more details, please refer to the Annotation documentation.
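To illustrate how a track ID can be used to follow an object across frames, here is a minimal Python sketch. The `Annotation` record and its field names (`frame`, `track_id`, `label`, `occlusion`, `activity`) are hypothetical and chosen only for illustration; they are not the dataset's actual label format, which is described in the Annotation documentation. The example values are made up.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class Annotation:
    """Hypothetical annotation record; the field names are assumptions."""
    frame: int
    track_id: int
    label: str
    occlusion: int
    activity: str


def group_by_track(annotations):
    """Map each track ID to its annotations, ordered by frame number."""
    tracks = defaultdict(list)
    for ann in annotations:
        tracks[ann.track_id].append(ann)
    return {tid: sorted(anns, key=lambda a: a.frame) for tid, anns in tracks.items()}


# Made-up example values, not taken from the dataset.
annotations = [
    Annotation(frame=2, track_id=7, label="Cyclist", occlusion=0, activity="moving"),
    Annotation(frame=1, track_id=7, label="Cyclist", occlusion=1, activity="moving"),
    Annotation(frame=1, track_id=3, label="Pedestrian", occlusion=0, activity="stopped"),
]

tracks = group_by_track(annotations)
frames_of_track_7 = [a.frame for a in tracks[7]]
```

Grouping by track ID first and sorting by frame afterwards keeps the sketch independent of the order in which annotations are read.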

The dataset is made freely available for non-commercial research purposes only. Eligibility to use the dataset is limited to Master's and PhD students, and staff of academic and non-profit research institutions. Access can be requested by filling in this form:

Form to request access to the VoD dataset

By requesting access, the researcher agrees to use and handle the data according to the license. See also our privacy statement.

After validating the researcher’s association with a research institute, we will send an email containing password-protected download link(s) for the VoD dataset. Sharing these links and/or the passwords is strictly forbidden (see license).

In case of questions or problems, please send an email to a.palffy at tudelft.nl.

Please refer to the GETTING_STARTED manual to learn how to organize the data and start using the development kit, and to find information about evaluation.

Please refer to the EXAMPLES manual for several examples (Jupyter Notebooks) of how to use the dataset and the development kit, including data loading, fetching and applying transformations, and 2D/3D visualization.
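As a rough idea of what applying a transformation to sensor data involves, the sketch below maps 3D points from one coordinate frame to another with a 4×4 homogeneous matrix, using plain NumPy. It does not use the development kit's API; the function name `transform_points` and the matrix values are hypothetical, and the real calibration transforms are provided with the dataset.

```python
import numpy as np


def transform_points(points, transform):
    """Apply a 4x4 homogeneous transform to an (N, 3) array of points."""
    points = np.asarray(points, dtype=float)
    # Append a column of ones so that translation is applied by the matrix product.
    homogeneous = np.hstack([points, np.ones((points.shape[0], 1))])  # shape (N, 4)
    return (homogeneous @ transform.T)[:, :3]


# Made-up transform: rotate 90 degrees about z, then translate by (1, 0, 0.5).
source_to_target = np.array([
    [0.0, -1.0, 0.0, 1.0],
    [1.0,  0.0, 0.0, 0.0],
    [0.0,  0.0, 1.0, 0.5],
    [0.0,  0.0, 0.0, 1.0],
])

points_source = np.array([[1.0, 0.0, 0.0], [0.0, 2.0, 0.0]])
points_target = transform_points(points_source, source_to_target)

# The inverse transform maps the points back to the source frame.
points_back = transform_points(points_target, np.linalg.inv(source_to_target))
```

Chaining several such matrices (for example, sensor to vehicle, then vehicle to camera) amounts to multiplying them before applying the result to the points.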

- TODO: the development kit is released under the TBD license.

- The dataset can be used by accepting the Research Use License.

- Annotation was done by understand.ai, a subsidiary of dSPACE.

- This work was supported by the Dutch Science Foundation NWO-TTW, within the SafeVRU project (nr. 14667).

- During our experiments, we used the OpenPCDet library for both training and evaluation.

- We thank Ronald Ensing, Balazs Szekeres, and Hidde Boekema for their extensive help developing this development kit.

If you find the dataset useful in your research, please consider citing it as:

@ARTICLE{apalffy2022,
  author={Palffy, Andras and Pool, Ewoud and Baratam, Srimannarayana and Kooij, Julian F. P. and Gavrila, Dariu M.},
  journal={IEEE Robotics and Automation Letters},
  title={Multi-Class Road User Detection With 3+1D Radar in the View-of-Delft Dataset},
  year={2022},
  volume={7},
  number={2},
  pages={4961-4968},
  doi={10.1109/LRA.2022.3147324}}