I work at Replicate. It's an API for running models.

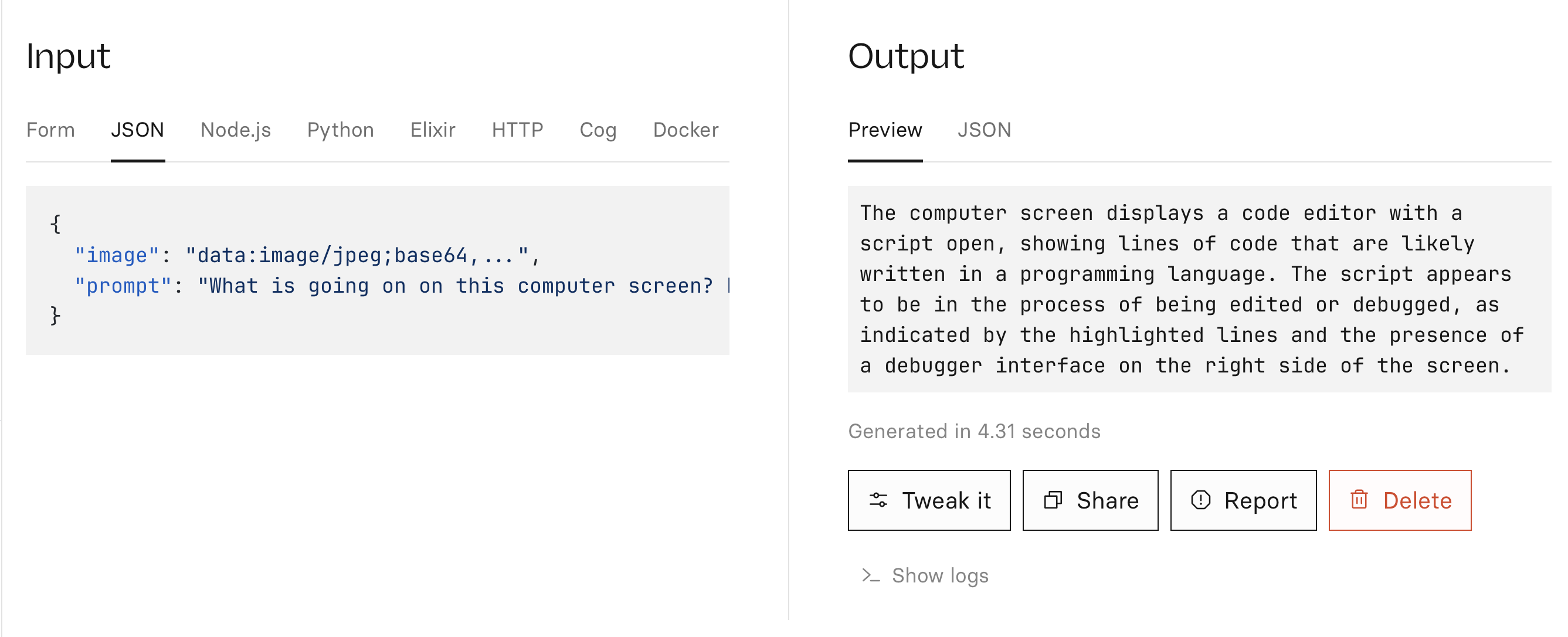

One of the cool models is LLaVA. LLaVA is like an open-source alternative to GPT-4 Vision. What can you do with LLaVA? 🤔

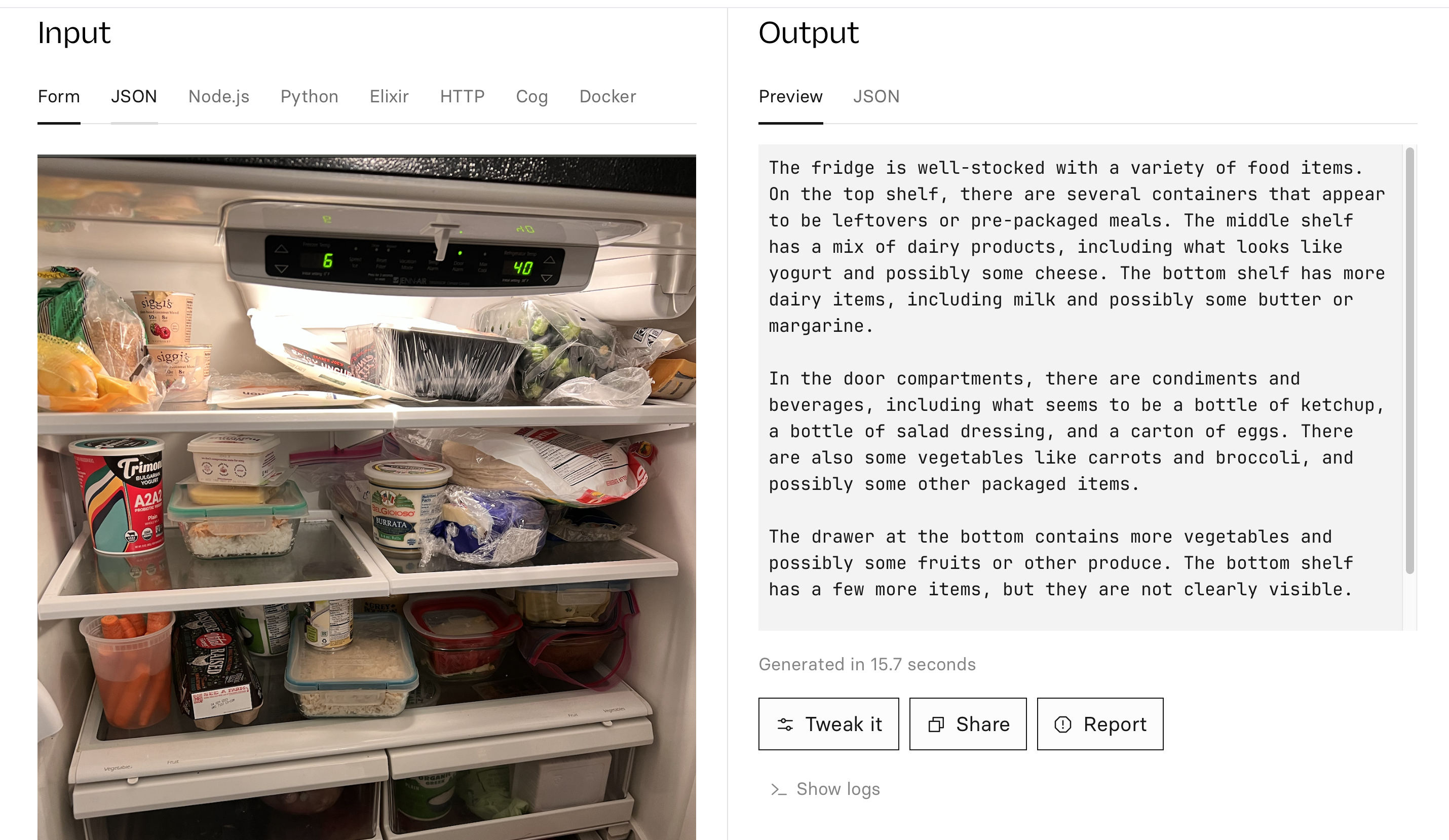

prompt: what's in this fridge?

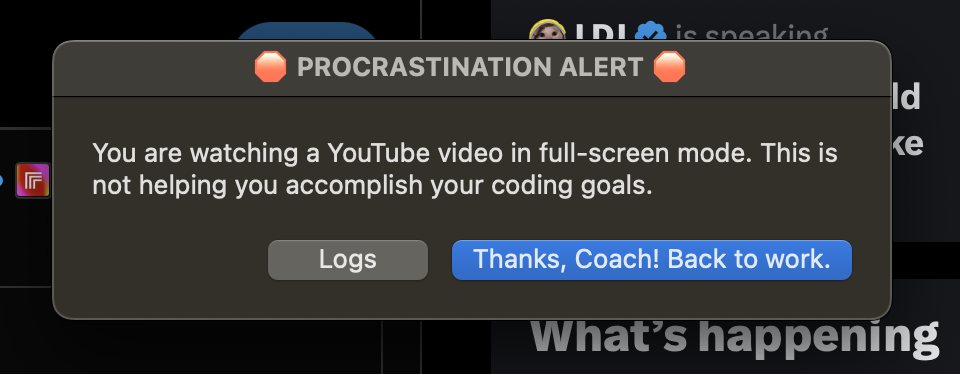

Well, I procrastinate a lot. So I procrastinated by making a thing that helps me stop procrastinating!

    python coach.py --goal "work on a coding project"

recorder.mp4

    osascript -e 'tell application "System Events" to get the name of the first process whose frontmost is true'
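If you want to call that from Python, here's a minimal sketch of wrapping the command above with `subprocess`. The function name `get_frontmost_app` and the injectable `run` parameter are my own assumptions for illustration, not necessarily what `coach.py` does. It only works on macOS, since it shells out to `osascript`.

```python
import subprocess

# The AppleScript from the command above, kept verbatim.
FRONTMOST_SCRIPT = (
    'tell application "System Events" to get the name of '
    "the first process whose frontmost is true"
)


def get_frontmost_app(run=subprocess.run):
    """Return the name of the frontmost macOS application.

    `run` defaults to subprocess.run; it is a parameter only so the
    function can be exercised with a stub on machines without osascript.
    """
    result = run(
        ["osascript", "-e", FRONTMOST_SCRIPT],
        capture_output=True,  # collect stdout instead of printing it
        text=True,            # decode bytes to str
        check=True,           # raise CalledProcessError on a non-zero exit
    )
    return result.stdout.strip()
```

On a Mac, `get_frontmost_app()` returns whatever application currently has focus, which is the kind of value that would fill the `application` field of a record.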

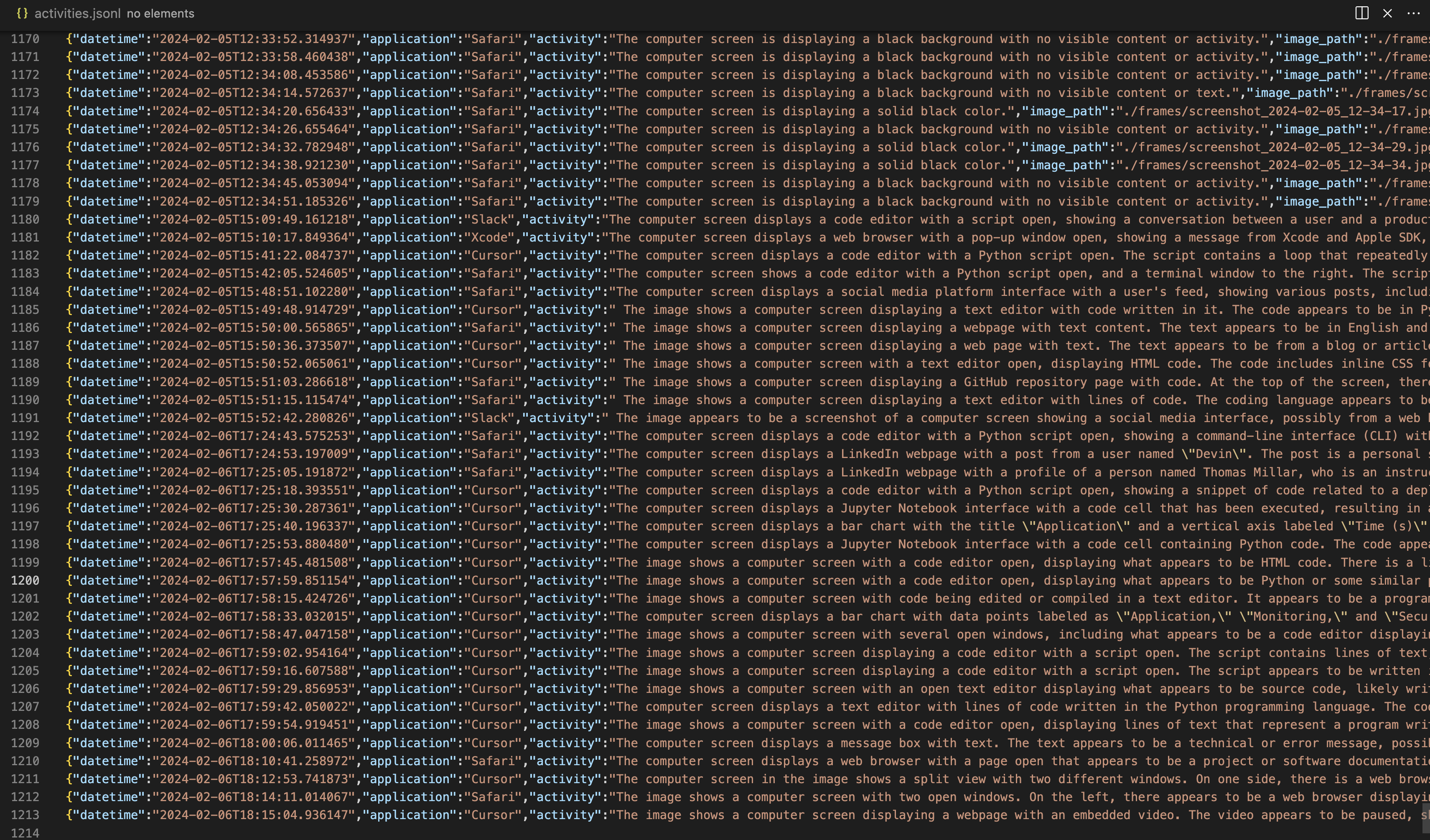

Each activity is saved in this format:

Activity(

datetime: datetime

application: str

activity: str

image_path: str

model: str

prompt: str

goal: str = None

is_productive: bool = None

explanation: str = None

iteration_duration: float = None

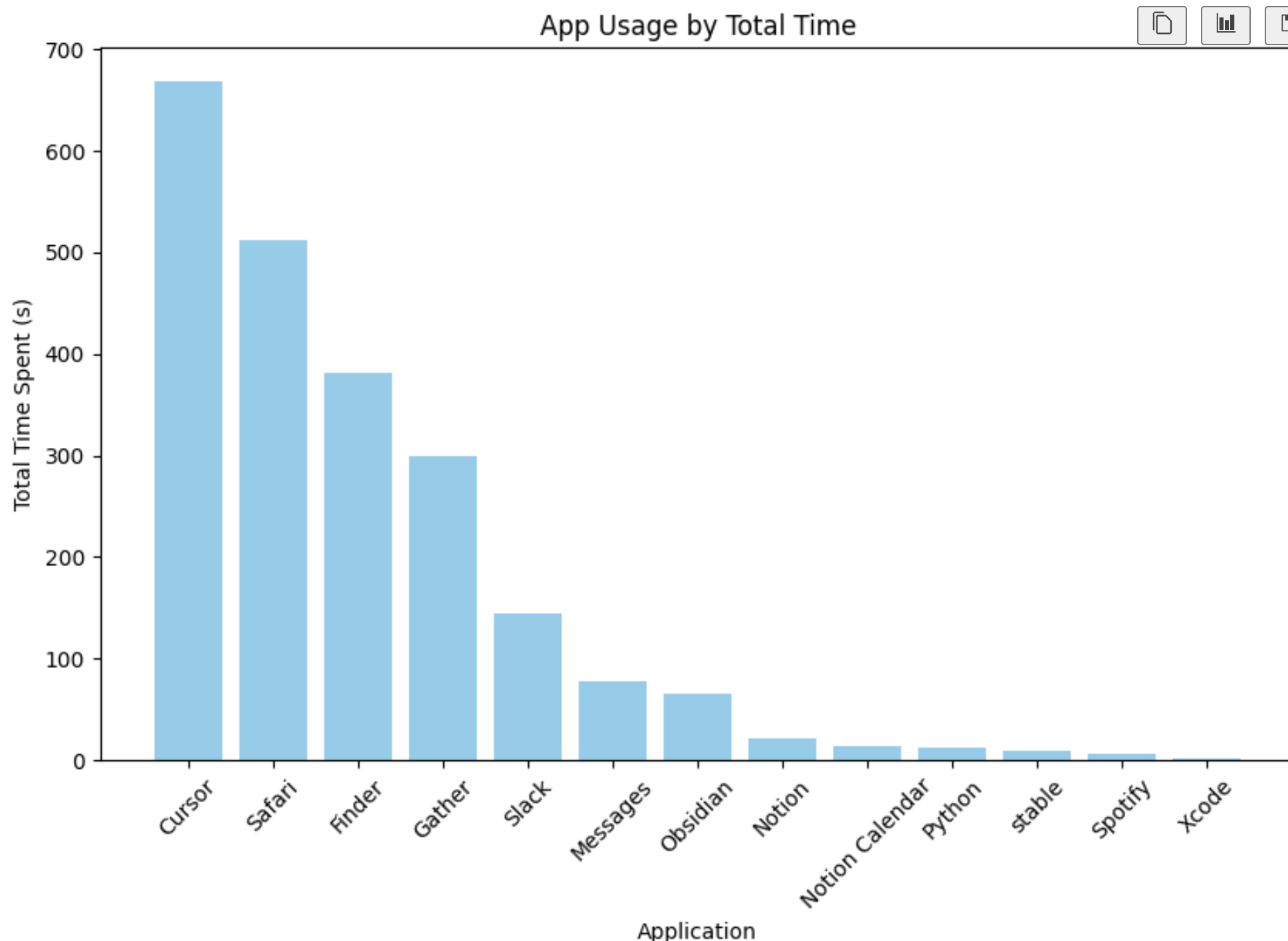

)You can already do interesting things with this data:
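For instance, here's a rough sketch that totals time per application and works out how often I was on task. The `Activity` dataclass mirrors the field names in the format above, but the dataclass itself, the two helper functions, and the idea that `iteration_duration` is measured in seconds are all assumptions on my part.

```python
import datetime as dt
from collections import defaultdict
from dataclasses import dataclass
from typing import Optional


@dataclass
class Activity:
    # Field names follow the saved format; this class is an illustrative stand-in.
    datetime: dt.datetime
    application: str
    activity: str
    image_path: str
    model: str
    prompt: str
    goal: Optional[str] = None
    is_productive: Optional[bool] = None
    explanation: Optional[str] = None
    iteration_duration: Optional[float] = None


def seconds_per_application(activities):
    """Sum iteration_duration (assumed to be seconds) for each application."""
    totals = defaultdict(float)
    for a in activities:
        totals[a.application] += a.iteration_duration or 0.0
    return dict(totals)


def productive_fraction(activities):
    """Share of judged activities marked productive, or None if none were judged."""
    judged = [a for a in activities if a.is_productive is not None]
    if not judged:
        return None
    return sum(a.is_productive for a in judged) / len(judged)
```

Records that were never judged (`is_productive` still `None`) are left out of the fraction rather than counted as unproductive.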

    python test_coach.py \
        --image_description "The computer screen displays a code editor with a file open, showing a Python script." \
        --goal "work on a coding project"

    productive=True explanation='Based on the information provided, it appears that you have a code editor open and are viewing a Python script, which aligns with your goal of working on a coding project. Therefore, your current activity is considered productive.'

    python test_coach.py \
        --image_description "The computer screen displays a web browser with YouTube Open" \
        --goal "work on a coding project"

    productive=False explanation='Watching videos on YouTube is not helping you work on your coding project. Try closing the YouTube tab and opening your coding project instead.'

How do I guarantee that the output is JSON? Mixtral doesn't support function calling yet, so I just ask it nicely to give me JSON. I then use a library called instructor to retry if the output fails validation.
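To make the retry idea concrete, here's a plain-Python sketch of validate-and-retry. This is not how instructor is implemented, just the general pattern. The `GoalExtract` shape is inferred from the `productive=... explanation=...` output above, and `call_model`, `parse_goal_extract`, and `extract_with_retries` are hypothetical names.

```python
import json
from dataclasses import dataclass


@dataclass
class GoalExtract:
    # Assumed shape, based on the fields printed in the example output.
    productive: bool
    explanation: str


def parse_goal_extract(raw):
    """Parse model output into a GoalExtract, raising ValueError if it is invalid."""
    try:
        data = json.loads(raw)
    except json.JSONDecodeError as exc:
        raise ValueError(f"not JSON: {exc}") from exc
    if not isinstance(data, dict):
        raise ValueError("expected a JSON object")
    if not isinstance(data.get("productive"), bool):
        raise ValueError("'productive' must be a boolean")
    if not isinstance(data.get("explanation"), str):
        raise ValueError("'explanation' must be a string")
    return GoalExtract(data["productive"], data["explanation"])


def extract_with_retries(call_model, max_attempts=5):
    """Call `call_model(feedback)` until its output validates or attempts run out.

    `call_model` stands in for the real LLM call. `feedback` is None on the
    first attempt and the previous validation error afterwards, so the model
    can be told what went wrong.
    """
    feedback = None
    for _ in range(max_attempts):
        raw = call_model(feedback)
        try:
            return parse_goal_extract(raw)
        except ValueError as exc:
            feedback = str(exc)
    raise RuntimeError(f"no valid output after {max_attempts} attempts: {feedback}")
```

Passing the validation error back to the model is the useful part: a chatty reply followed by "that wasn't JSON" usually does better than asking the same question again.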

    # `completion` is assumed to be an LLM completion function patched with
    # instructor (which adds the response_model and max_retries arguments);
    # `GoalExtract` is the response model, defined elsewhere.
    model = "ollama/mixtral"
    messages = [
        {
            "role": "system",
            "content": """You are a JSON extractor. Please extract the following JSON, No Talking at all. Just output JSON based on the description. NO TALKING AT ALL!!""",
        },
        {
            "role": "user",
            "content": f"""You are a productivity coach. You are helping me accomplish my goal of {goal}. Let me know if you think the description of my current activity is in line with my goals.

    RULES: You must respond in JSON format. DO NOT RESPOND WITH ANY TALKING.

    ## Current status:

    Goal: {goal}

    Current activity: {description}

    ## Result:""",
        },
    ]

    # The reply is validated against GoalExtract; on failure the call is retried (max_retries=5).
    record = completion(
        model=model,
        response_model=GoalExtract,
        max_retries=5,
        messages=messages,
    )

Run it with the `--cloud` flag:

    python coach.py --goal 'work on a coding project' --cloud

Or remove the `--cloud` flag to run locally on Ollama:

    python coach.py --goal 'work on a coding project'

Optionally, activate hard mode:

    python coach.py --goal 'work on a coding project' --cloud

Demo video:

coach-demo-compressed.mp4

What happens if you embed the text on your screen and see how far it is from distracting keywords?

    python ocr.py
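Here's a toy sketch of that idea, separate from whatever `ocr.py` actually does. To keep it self-contained, a bag-of-words count vector stands in for a real embedding model, so it only catches exact word overlap; the function names and the keyword list are made up for illustration.

```python
import math
import re
from collections import Counter


def embed(text):
    """Toy stand-in for an embedding model: lowercase bag-of-words counts."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine_similarity(a, b):
    """Cosine similarity between two sparse count vectors (0.0 if either is empty)."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def distraction_score(screen_text, keywords):
    """Highest similarity between the screen text and any distracting keyword."""
    screen = embed(screen_text)
    return max((cosine_similarity(screen, embed(k)) for k in keywords), default=0.0)
```

Swapping `embed` for a real text embedding model would let "watching cat videos" land near "youtube" even without a shared word, which is the interesting version of the experiment.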