A floating-point matrix multiplication implemented in hardware.

This repo describes the implementation of a floating-point matrix multiplication on a PYNQ-Z1 development board.

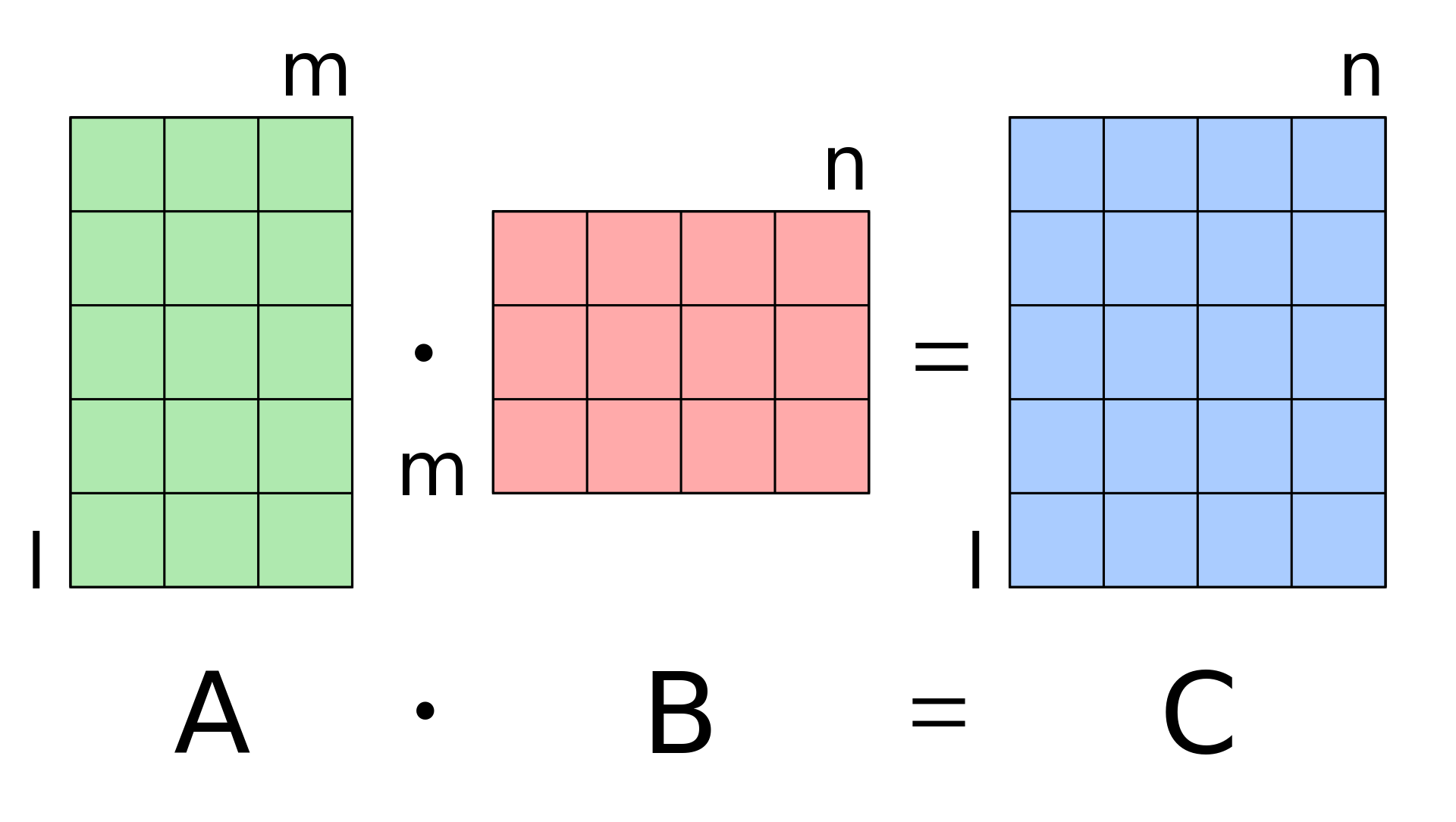

The hardware module implements the matrix product C = AB, where A, B, and C are 128 x 128 matrices.

This hardware accelerator provides a 2.8x speedup compared to NumPy. Note that NumPy uses vectorization and, presumably, a more efficient algorithm than the naive one implemented in this example.
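For reference, the naive algorithm mentioned above can be sketched in Python as a triple loop that performs one multiply-accumulate per step. This is only an illustration of the algorithm, not the HLS source in this repo; the function name `matmul_naive` and the reduced size `N = 16` are assumptions chosen so the pure-Python loops finish quickly.

```python
import numpy as np

def matmul_naive(a, b):
    """Triple-loop matrix product: c[i, j] = sum over p of a[i, p] * b[p, j]."""
    n, k = a.shape
    k2, m = b.shape
    assert k == k2, "inner dimensions must match"
    c = np.zeros((n, m), dtype=np.float32)
    for i in range(n):
        for j in range(m):
            acc = np.float32(0.0)  # single-precision accumulator
            for p in range(k):
                acc += a[i, p] * b[p, j]
            c[i, j] = acc
    return c

rng = np.random.default_rng(0)
N = 16  # assumption: small size for a quick check; the accelerator uses 128
a = rng.random((N, N), dtype=np.float32)
b = rng.random((N, N), dtype=np.float32)

c_naive = matmul_naive(a, b)
c_numpy = a @ b  # NumPy's optimized product, used as the reference
print(np.allclose(c_naive, c_numpy, rtol=1e-4))
```

The comparison against `a @ b` is the same kind of check a notebook could use to validate results coming back from the accelerator.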

A 3.5x speedup can be achieved by using the 64-bit AXI-Stream interface. This approach requires additional logic to pack and unpack the matrices.
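One way to picture the packing step on the host side is to reinterpret pairs of 32-bit floats as single 64-bit words. The sketch below uses NumPy views for this; the helper names `pack_float32_pairs` and `unpack_float32_pairs` are hypothetical, and the actual packing logic used with the 64-bit interface may differ.

```python
import numpy as np

def pack_float32_pairs(matrix):
    """Reinterpret consecutive float32 pairs as one uint64 word each."""
    flat = np.ascontiguousarray(matrix, dtype=np.float32).ravel()
    assert flat.size % 2 == 0, "need an even number of elements"
    # No values are converted: the same bytes are viewed as 64-bit words.
    # The position of each float inside a word follows the host byte order.
    return flat.view(np.uint64)

def unpack_float32_pairs(words, shape):
    """Inverse of pack_float32_pairs: view the words as float32 again."""
    flat = np.ascontiguousarray(words, dtype=np.uint64).view(np.float32)
    return flat.reshape(shape)

rng = np.random.default_rng(0)
a = rng.random((128, 128), dtype=np.float32)

words = pack_float32_pairs(a)
restored = unpack_float32_pairs(words, a.shape)
print(words.size, np.array_equal(a, restored))
```

Packing halves the number of stream beats needed per matrix (8192 words instead of 16384 floats for a 128 x 128 matrix), which is consistent with the extra speedup coming from the wider interface.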

- [hls] contains the accelerator C++ source code for high-level synthesis.

- [boards/Pynq-Z1/matmult] contains the Vivado project.

- [notebooks] contains the Jupyter Notebook to evaluate the design. This notebook uses the Xilinx/PYNQ Python library.

- [overlay] contains the generated hardware files. These files were generated using vivado and vivado_hls version 2019.2.

- [service] contains code that exposes matrix multiplication as a gRPC service, along with an example client that calls it.

- Copy overlay/matmult to the PYNQ-Z1 device.

- Copy notebooks/matmult.ipynb to the Jupyter notebooks area in the PYNQ-Z1 device.

Requires Xilinx vivado and vivado_hls version 2019.2. If necessary, a different version can be configured in the Tcl scripts script_solution1.tcl and matmult.tcl.

- Build the matmult module:

      cd hls
      make clean && make solution1

- Build the Vivado project:

      cd boards/Pynq-Z1/matmult
      make clean && make all

This is a minimal and naive implementation of matrix multiplication. You can build on top of this and implement various hardware optimizations such as:

- Pipelining and loop unrolling

- Memory partitioning

- Fixed-point optimization

These optimizations are discussed in detail here.
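To give a feel for the fixed-point option, the sketch below emulates it in NumPy: inputs are quantized to integers with a fixed number of fractional bits, multiplied and accumulated as integers, and scaled back to floats. The choice of `FRAC_BITS = 12`, the input range of [-1, 1), and the helper names are all assumptions for illustration; a hardware design would pick word lengths based on its own accuracy and resource targets.

```python
import numpy as np

FRAC_BITS = 12            # assumption: 12 fractional bits
SCALE = 1 << FRAC_BITS    # 2**12

def to_fixed(x):
    """Quantize floats to integers carrying FRAC_BITS fractional bits."""
    return np.round(x * SCALE).astype(np.int64)

def fixed_matmul(a_fx, b_fx):
    """Integer matrix product; results carry 2 * FRAC_BITS fractional bits."""
    return a_fx @ b_fx

def to_float(acc):
    """Scale an integer accumulator back to a floating-point value."""
    return acc / float(SCALE * SCALE)

rng = np.random.default_rng(0)
N = 16  # assumption: small size for a quick check
a = rng.uniform(-1.0, 1.0, (N, N))
b = rng.uniform(-1.0, 1.0, (N, N))

c_fixed = to_float(fixed_matmul(to_fixed(a), to_fixed(b)))
c_float = a @ b
max_err = np.max(np.abs(c_fixed - c_float))
print(max_err)
```

With inputs bounded by 1 in magnitude, each product is off by at most about 2^-12, so the error of a 16-term sum stays below roughly 16 x 2^-12. Integer multiply-accumulate units are typically much cheaper in FPGA fabric than floating-point ones, which is the motivation for this trade-off.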

The gRPC runtime seems to incur an extremely large latency, on the order of seconds, whose cause is not yet known. This latency is not due to pickling/unpickling. For the service to be useful in practice, this issue must be investigated and fixed.
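A simple way to reproduce the pickling check in isolation is to time a serialize/deserialize round trip of a 128 x 128 float32 matrix. The sketch below assumes the service serializes NumPy arrays with Python's standard `pickle` module; the helper name `time_pickle_roundtrip` is hypothetical, and the measurement does not involve gRPC at all.

```python
import pickle
import time

import numpy as np

def time_pickle_roundtrip(matrix, repeats=100):
    """Return (mean seconds per round trip, payload size, last restored array)."""
    start = time.perf_counter()
    for _ in range(repeats):
        payload = pickle.dumps(matrix, protocol=pickle.HIGHEST_PROTOCOL)
        restored = pickle.loads(payload)
    elapsed = time.perf_counter() - start
    return elapsed / repeats, len(payload), restored

matrix = np.random.default_rng(0).random((128, 128), dtype=np.float32)
seconds, nbytes, restored = time_pickle_roundtrip(matrix)
print(f"{seconds * 1e6:.1f} us per round trip, {nbytes} bytes")
```

If the per-call time reported here is far below the observed end-to-end latency, serialization can be ruled out and attention can move to the transport and the server-side handler.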

Once the RPC latency issue is fixed, the service can be deployed to an array of Pynq boards and be used to parallelize matrix computations.

I also encountered a problem when running a remote client on the same LAN. Ideally, a remote client should not need to know the internals of the service and should simply be able to call it. However, the gRPC runtime tries to access the pynq module on the remote client, and that module only exists on the server side. My guess is that this is a problem with how gRPC generates service stubs on the client side.

- This implementation borrows ideas and code from this application note, and the PYNQ hello world example.

- Schematic of matrix multiplication taken from Wikipedia