This is the final project for the Computer Vision course (Fall 2020) taught by Professor Alireza Talebpour.

Emotion recognition is the process of identifying human emotion, most commonly from facial expressions and also from verbal expressions.

We implemented two deep learning models for facial expression recognition (FER) on the FER2013 dataset. The models recognize 7 emotions: Anger, Disgust, Fear, Happy, Sad, Surprise, and Neutral.

The FER2013 dataset consists of 35,887 grayscale images at 48x48 resolution. It was created by gathering the results of Google image searches for each emotion and its synonyms.

- Includes face occlusion, partial faces, low-contrast images, and eyeglasses

- Includes seven emotions: happy, sad, angry, afraid, surprise, disgust, and neutral
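
To make the image format concrete, here is a minimal sketch of decoding one sample. It assumes the commonly distributed CSV form of FER2013, where each row has an integer `emotion` label and a `pixels` string of 2,304 space-separated gray values. The helper name `parse_fer_row` and the label order in `EMOTIONS` are assumptions for illustration, not taken from this project's code.

```python
import numpy as np

# Assumed label order: index = integer value in the "emotion" column.
EMOTIONS = ["Angry", "Disgust", "Fear", "Happy", "Sad", "Surprise", "Neutral"]


def parse_fer_row(emotion, pixels, size=48):
    """Convert one CSV row into a (size x size) float image and a label name.

    `parse_fer_row` is a hypothetical helper written for this example.
    """
    values = np.array([int(v) for v in pixels.split()], dtype=np.uint8)
    if values.size != size * size:
        raise ValueError(f"expected {size * size} pixels, got {values.size}")
    # Scale gray values from [0, 255] to [0, 1] for training.
    image = values.reshape(size, size).astype(np.float32) / 255.0
    return image, EMOTIONS[int(emotion)]


# Usage with a synthetic row (not real dataset content).
fake_pixels = " ".join(str(i % 256) for i in range(48 * 48))
image, label = parse_fer_row("3", fake_pixels)
print(image.shape, label)
```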

Pre-processing is required to align and normalize the visual semantic information conveyed by the face.

We applied several preprocessing techniques before splitting the data and training the models.

- Noise reduction: detect the face and remove the background and non-face areas

- Augmentation: flip, rotation, scale, crop, translation, and affine transformation

- Edge detection
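
The steps above can be sketched with plain NumPy. This is an illustration under stated assumptions, not the project's actual pipeline: it covers only two of the listed augmentations (flip and translation), and it uses a Sobel filter as a stand-in for edge detection because the operator used here is not specified. All function names are hypothetical.

```python
import numpy as np


def horizontal_flip(image):
    """Mirror the image left to right."""
    return image[:, ::-1]


def translate(image, dx, dy):
    """Shift the image by (dx, dy) pixels, filling uncovered areas with zeros."""
    h, w = image.shape
    out = np.zeros_like(image)
    src = image[max(0, -dy):min(h, h - dy), max(0, -dx):min(w, w - dx)]
    top, left = max(0, dy), max(0, dx)
    out[top:top + src.shape[0], left:left + src.shape[1]] = src
    return out


def sobel_edges(image):
    """Gradient magnitude from 3x3 Sobel kernels (assumed edge detector)."""
    kx = np.array([[-1, 0, 1], [-2, 0, 2], [-1, 0, 1]], dtype=np.float32)
    ky = kx.T
    h, w = image.shape
    # Replicate border pixels so the output keeps the input size.
    padded = np.pad(image.astype(np.float32), 1, mode="edge")
    gx = np.zeros((h, w), dtype=np.float32)
    gy = np.zeros((h, w), dtype=np.float32)
    for i in range(3):
        for j in range(3):
            window = padded[i:i + h, j:j + w]
            gx += kx[i, j] * window
            gy += ky[i, j] * window
    return np.hypot(gx, gy)


# Usage on a synthetic 48x48 image with a vertical step edge.
image = np.zeros((48, 48), dtype=np.float32)
image[:, 24:] = 1.0
flipped = horizontal_flip(image)
shifted = translate(image, dx=2, dy=0)
edges = sobel_edges(image)
```

Rotation, scaling, cropping, and general affine warps are usually delegated to an image library rather than written by hand.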

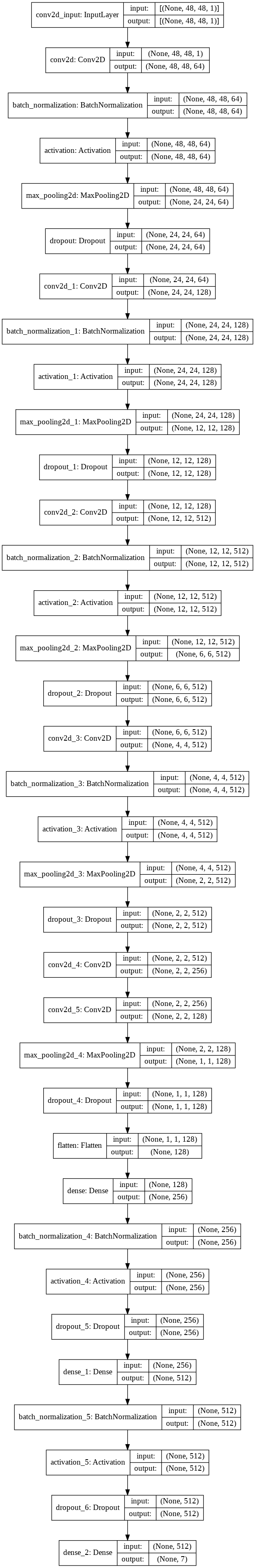

This model is based on the CNN introduced in the Deep-Emotion paper.

- Architecture

- Result

| Training Accuracy | Validation Accuracy | Test Accuracy |
|---|---|---|
| 87% | 67% | 58% |

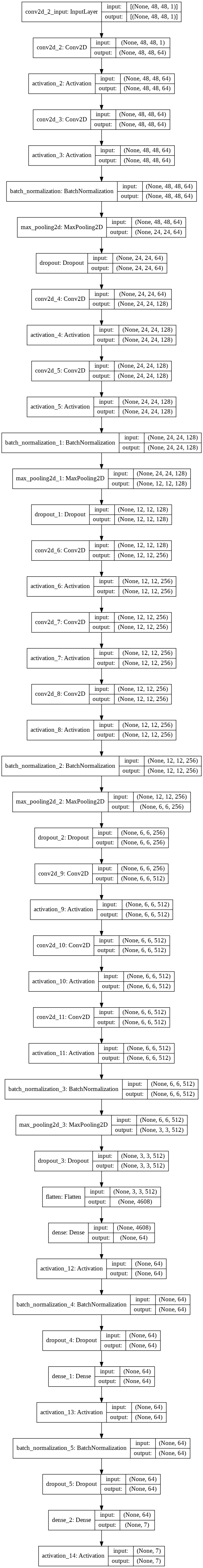

This model is a modified version of VGG.

- Architecture

- Result

| Training Accuracy | Validation Accuracy | Test Accuracy |
|---|---|---|
| 75% | 61% | 61% |
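
For reference, accuracy figures like the ones reported for both models are typically computed as the fraction of samples whose highest-scoring class matches the true label. The sketch below assumes the model outputs one score per emotion class. The `accuracy` helper and the toy scores are hypothetical and are not results from this project.

```python
import numpy as np


def accuracy(scores, labels):
    """Fraction of rows where the arg-max class equals the true label."""
    predictions = np.argmax(scores, axis=1)
    return float(np.mean(predictions == np.asarray(labels)))


# Toy example with 4 samples and 2 classes (illustrative values only).
scores = np.array([[0.1, 0.9], [0.8, 0.2], [0.3, 0.7], [0.6, 0.4]])
labels = [1, 0, 0, 0]
print(accuracy(scores, labels))
```

Evaluating the same function separately on the training, validation, and test splits gives three numbers comparable to the columns in the tables.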