Created by Wenliang Zhao*, Lujia Bai*, Yongming Rao, Jie Zhou, Jiwen Lu

This repository contains the PyTorch implementation of UniPC.

An online demo of UniPC with Stable Diffusion is available. Many thanks to HuggingFace 🤗 for their help and for providing the hardware resources.

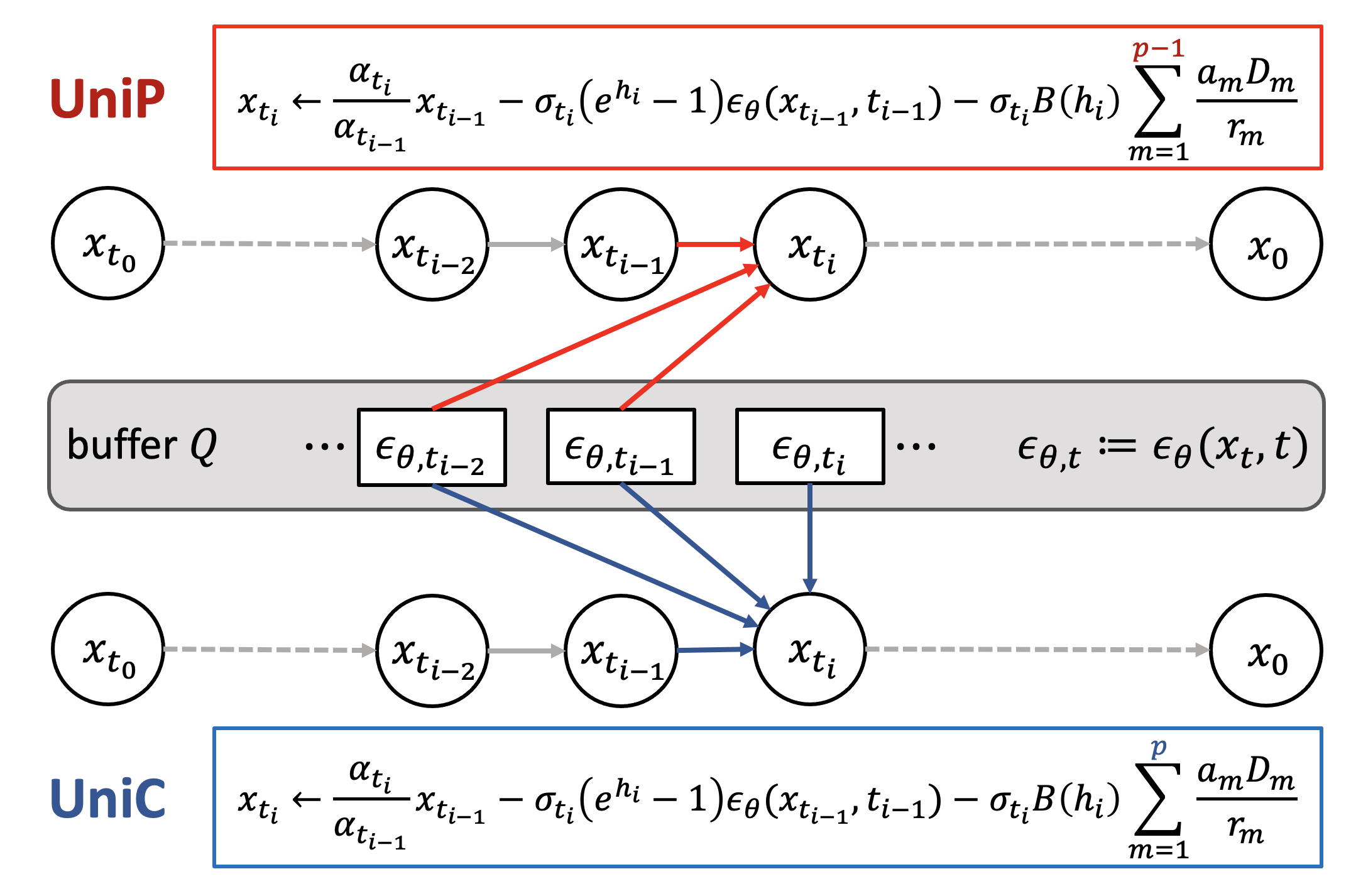

UniPC is a training-free framework for fast sampling of diffusion models. It consists of a corrector (UniC) and a predictor (UniP) that share a unified analytical form and support arbitrary orders.
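The predictor-corrector pattern can be illustrated on a toy ODE. The sketch below is not UniPC's update rule: it swaps in a plain explicit-Euler predictor and a trapezoidal corrector, and `euler_solve` and `pc_solve` are hypothetical names. The structural idea it is meant to convey is that the corrector reuses the function evaluation at the predicted point, which the next predictor step needs anyway, so the correction itself costs no extra evaluations.

```python
import math
from typing import Callable, Tuple

# Toy ODE right-hand side f(x, t); stands in for a diffusion model evaluation.
Rhs = Callable[[float, float], float]


def euler_solve(f: Rhs, x0: float, t0: float, t1: float, n_steps: int) -> Tuple[float, int]:
    """Plain explicit Euler: one evaluation of f per step."""
    h = (t1 - t0) / n_steps
    x, t, nfe = x0, t0, 0
    for _ in range(n_steps):
        x = x + h * f(x, t)
        nfe += 1
        t += h
    return x, nfe


def pc_solve(f: Rhs, x0: float, t0: float, t1: float, n_steps: int) -> Tuple[float, int]:
    """Euler predictor + trapezoidal corrector that reuses each evaluation.

    f is evaluated once at the start and once per predicted point (except the
    last one), so the total number of evaluations equals n_steps, as for Euler.
    """
    h = (t1 - t0) / n_steps
    x, t = x0, t0
    f_prev = f(x, t)
    nfe = 1
    for i in range(n_steps):
        x_pred = x + h * f_prev              # predictor: explicit Euler step
        t += h
        if i == n_steps - 1:
            return x_pred, nfe               # final step: skip correction (it would need one more evaluation)
        f_new = f(x_pred, t)                 # evaluation the next predictor needs anyway
        nfe += 1
        x = x + 0.5 * h * (f_prev + f_new)   # corrector: trapezoidal rule reusing f_new
        f_prev = f_new
    return x, nfe


if __name__ == "__main__":
    decay = lambda x, t: -x                  # dx/dt = -x, exact solution x(1) = exp(-1)
    exact = math.exp(-1.0)
    for name, solver in [("euler", euler_solve), ("pc", pc_solve)]:
        x1, nfe = solver(decay, 1.0, 0.0, 1.0, 10)
        print(f"{name}: error={abs(x1 - exact):.2e}, evaluations={nfe}")
```

Both solvers use 10 evaluations here, but the corrected one lands much closer to exp(-1).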

UniPC is model-agnostic by design, supporting pixel-space and latent-space DPMs for both unconditional and conditional sampling. It can also be applied to both noise prediction models and data prediction models.
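Switching between the two parameterizations only requires the forward relation x_t = α_t x_0 + σ_t ε. The sketch below wraps a noise prediction model as a data prediction model and back. The wrapper names and the scalar (rather than tensor) interface are hypothetical simplifications, and `alpha`/`sigma` stand for whatever noise schedule the pretrained model was trained with.

```python
import math
from typing import Callable

Model = Callable[[float, float], float]      # (x_t, t) -> prediction
Schedule = Callable[[float], float]          # t -> alpha_t or sigma_t


def as_data_prediction(eps_model: Model, alpha: Schedule, sigma: Schedule) -> Model:
    """Turn a noise predictor eps(x_t, t) into a data predictor x0(x_t, t)."""
    def x0_model(x_t: float, t: float) -> float:
        # From x_t = alpha_t * x0 + sigma_t * eps, solve for x0.
        return (x_t - sigma(t) * eps_model(x_t, t)) / alpha(t)
    return x0_model


def as_noise_prediction(x0_model: Model, alpha: Schedule, sigma: Schedule) -> Model:
    """Turn a data predictor x0(x_t, t) into a noise predictor eps(x_t, t)."""
    def eps_model(x_t: float, t: float) -> float:
        # Same relation, solved for eps.
        return (x_t - alpha(t) * x0_model(x_t, t)) / sigma(t)
    return eps_model


if __name__ == "__main__":
    alpha = lambda t: math.cos(0.5 * math.pi * t)   # hypothetical VP-style schedule
    sigma = lambda t: math.sin(0.5 * math.pi * t)   # alpha_t**2 + sigma_t**2 == 1
    # Oracle noise predictor for a "dataset" containing the single point x0 = 1.5.
    oracle_eps = lambda x_t, t: (x_t - alpha(t) * 1.5) / sigma(t)
    x0_model = as_data_prediction(oracle_eps, alpha, sigma)
    print(x0_model(0.3, 0.4))                       # approximately 1.5
```

Because the conversion is exact, a solver written for either parameterization can drive a model trained with the other.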

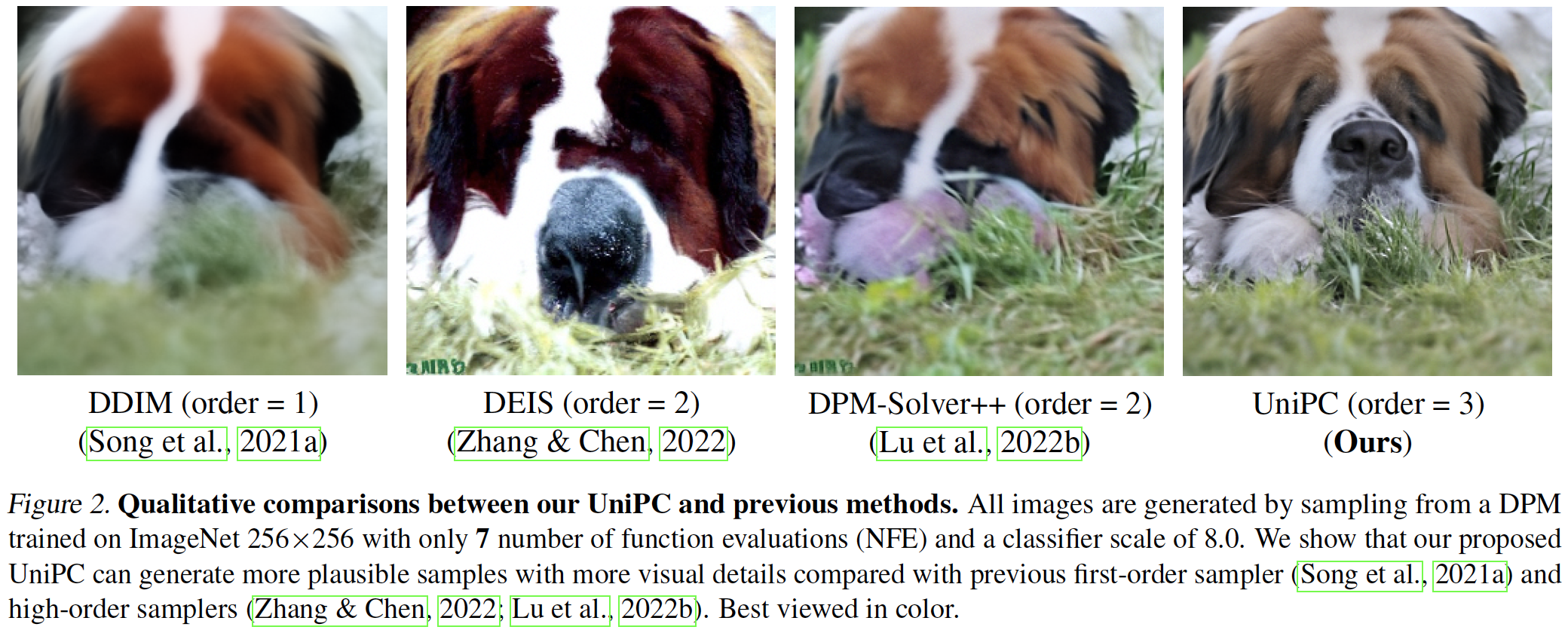

Compared with previous methods, UniPC converges faster thanks to its higher order of accuracy. Both quantitative and qualitative results show that UniPC remarkably improves sampling quality, especially with extremely few steps (5 to 10).
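Why a higher order of accuracy matters most at small step counts: for an order-p solver the global error scales roughly like h^p, so halving the step size divides it by about 2^p. The sketch below measures this empirically on the toy ODE dx/dt = -x, using explicit Euler (first order) and Heun's method (second order) as generic stand-ins; neither is UniPC, and `observed_order` is a hypothetical helper.

```python
import math
from typing import Callable

Rhs = Callable[[float, float], float]
Step = Callable[[Rhs, float, float, float], float]


def euler_step(f: Rhs, x: float, t: float, h: float) -> float:
    """First order: one evaluation per step."""
    return x + h * f(x, t)


def heun_step(f: Rhs, x: float, t: float, h: float) -> float:
    """Second order (explicit trapezoid): two evaluations per step."""
    k1 = f(x, t)
    k2 = f(x + h * k1, t + h)
    return x + 0.5 * h * (k1 + k2)


def solve(step: Step, f: Rhs, x0: float, t0: float, t1: float, n_steps: int) -> float:
    h = (t1 - t0) / n_steps
    x, t = x0, t0
    for _ in range(n_steps):
        x = step(f, x, t, h)
        t += h
    return x


def observed_order(step: Step, n_steps: int) -> float:
    """Estimate p from error(n) / error(2n) ~ 2**p on dx/dt = -x over [0, 1]."""
    f = lambda x, t: -x
    exact = math.exp(-1.0)
    err_n = abs(solve(step, f, 1.0, 0.0, 1.0, n_steps) - exact)
    err_2n = abs(solve(step, f, 1.0, 0.0, 1.0, 2 * n_steps) - exact)
    return math.log2(err_n / err_2n)


if __name__ == "__main__":
    for name, step in [("euler", euler_step), ("heun", heun_step)]:
        print(name, [round(observed_order(step, n), 2) for n in (5, 10, 20)])
```

Euler should report values near 1 and Heun values near 2. Heun spends two evaluations per step, so this compares order only, not cost per evaluation.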

We provide code examples based on ScoreSDE and Stable Diffusion in the `example` folder. Please follow the README.md file in each example for further instructions on using UniPC.

We provide a PyTorch example in `example/score_sde_pytorch`, which shows how to use UniPC to sample from a DPM pre-trained on CIFAR10.
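Few-step samplers for ScoreSDE-style models commonly work in terms of the half log-SNR λ_t = log(α_t/σ_t). The sketch below assumes the linear VP-SDE schedule with β_min = 0.1 and β_max = 20 (commonly used VP defaults), for which log α_t = -(β_max - β_min) t²/4 - β_min t/2, and builds a timestep grid that is uniform in λ. The function names are hypothetical, not taken from the example's code, and λ-uniform spacing is one common choice rather than necessarily the one the example uses.

```python
import math
from typing import List

BETA_MIN, BETA_MAX = 0.1, 20.0   # assumed linear VP-SDE schedule


def log_alpha(t: float) -> float:
    return -0.25 * t * t * (BETA_MAX - BETA_MIN) - 0.5 * t * BETA_MIN


def sigma(t: float) -> float:
    # sigma_t = sqrt(1 - alpha_t**2); expm1 keeps precision near t = 0.
    return math.sqrt(-math.expm1(2.0 * log_alpha(t)))


def half_log_snr(t: float) -> float:
    """lambda_t = log(alpha_t / sigma_t)."""
    return log_alpha(t) - math.log(sigma(t))


def inverse_half_log_snr(lam: float) -> float:
    """Solve half_log_snr(t) = lam for t (closed form for the linear VP schedule)."""
    log_term = math.log1p(math.exp(-2.0 * lam))   # equals -2 * log(alpha_t)
    delta = BETA_MAX - BETA_MIN
    return 2.0 * log_term / (math.sqrt(BETA_MIN ** 2 + 2.0 * delta * log_term) + BETA_MIN)


def logsnr_timesteps(n_steps: int, t_start: float = 1.0, t_end: float = 1e-3) -> List[float]:
    """Return n_steps + 1 decreasing times whose lambda values are evenly spaced."""
    lam_start, lam_end = half_log_snr(t_start), half_log_snr(t_end)
    return [
        inverse_half_log_snr(lam_start + (lam_end - lam_start) * i / n_steps)
        for i in range(n_steps + 1)
    ]


if __name__ == "__main__":
    print([round(t, 4) for t in logsnr_timesteps(5)])
```

Spacing uniformly in λ rather than in t places more steps near t = 0, where the data-dependent detail is resolved.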

We provide an example of applying UniPC to Stable Diffusion in `example/stable-diffusion`. UniPC accelerates both conditional and unconditional sampling.
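Conditional sampling with Stable Diffusion is usually done with classifier-free guidance, which merges a conditional and an unconditional noise prediction into a single noise prediction that any noise-prediction solver can consume unchanged. The sketch below assumes that setup; `guided_eps` is a hypothetical helper operating on plain lists rather than latent tensors.

```python
from typing import List, Sequence


def guided_eps(eps_uncond: Sequence[float], eps_cond: Sequence[float], scale: float) -> List[float]:
    """Classifier-free guidance: eps_uncond + scale * (eps_cond - eps_uncond).

    scale = 0 gives the unconditional prediction, scale = 1 the plain
    conditional prediction, and scale > 1 strengthens the conditioning.
    """
    if len(eps_uncond) != len(eps_cond):
        raise ValueError("predictions must have the same shape")
    return [u + scale * (c - u) for u, c in zip(eps_uncond, eps_cond)]


if __name__ == "__main__":
    print(guided_eps([0.0, 1.0], [1.0, 1.0], 7.5))   # [7.5, 1.0]
```

Since the guided output is just another noise prediction, the sampler itself needs no changes to switch between conditional and unconditional generation.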

Our code is based on ScoreSDE, Stable-Diffusion, and DPM-Solver.

If you find our work useful in your research, please consider citing:

    @article{zhao2023unipc,
      title={UniPC: A Unified Predictor-Corrector Framework for Fast Sampling of Diffusion Models},
      author={Zhao, Wenliang and Bai, Lujia and Rao, Yongming and Zhou, Jie and Lu, Jiwen},
      journal={arXiv preprint arXiv:2302.04867},
      year={2023}
    }