https://ngc.nvidia.com/catalog/models/nvidia:tlt_peoplenet

https://ngc.nvidia.com/catalog/models/nvidia:tlt_peoplenet/files?version=pruned_v2.0

- Jetson hardware (Nano, TX2, TX1, Jetson AGX Xavier, or Jetson Xavier NX)

- JetPack, installed on the Jetson hardware (version 4.3, 4.4, or 4.4.1)

- tlt-converter (the build you need depends on your JetPack version)
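Since the right tlt-converter build depends on the JetPack version, a small lookup can make the choice explicit. The sketch below is only an illustration: the JetPack-to-TensorRT mapping is an assumption (JetPack 4.3 with TensorRT 6.0, JetPack 4.4 and 4.4.1 with TensorRT 7.1) that you should verify on your own device, and the helper name `converter_url_for_jetpack` is hypothetical. The URLs are the tlt-converter download pages given in this README.

```python
# Assumed mapping from JetPack release to TensorRT release line.
# Verify this against the TensorRT version actually installed on your Jetson.
JETPACK_TO_TENSORRT = {
    "4.3": "6.0",
    "4.4": "7.1",
    "4.4.1": "7.1",
}

# tlt-converter download pages from this README, keyed by TensorRT release line.
CONVERTER_URLS = {
    "5.1": "https://developer.nvidia.com/tlt-converter-trt51",
    "6.0": "https://developer.nvidia.com/tlt-converter-trt60",
    "7.1": "https://developer.nvidia.com/tlt-converter-trt71",
}


def converter_url_for_jetpack(jetpack_version):
    """Return the tlt-converter download page for a JetPack version string.

    Hypothetical helper: accepts strings such as "4.4.1" or "V4.4.1".
    """
    normalized = jetpack_version.strip().lstrip("vV")
    if normalized not in JETPACK_TO_TENSORRT:
        raise ValueError("Unsupported JetPack version: %r" % jetpack_version)
    return CONVERTER_URLS[JETPACK_TO_TENSORRT[normalized]]


if __name__ == "__main__":
    print(converter_url_for_jetpack("4.4.1"))
```

If your JetPack version is not in the table, the function raises `ValueError` rather than guessing a build.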

- Download PeopleNet model

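- Download tlt-converter for the Jetson platform

tlt-converter download addresses for the Jetson platform:

To run tlt-converter on the Jetson platform, three builds of tlt-converter are supported. Judging by the URL suffixes, they correspond to TensorRT 5.1, 6.0, and 7.1, so pick the one that matches your JetPack installation.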

https://developer.nvidia.com/tlt-converter-trt51

https://developer.nvidia.com/tlt-converter-trt60

https://developer.nvidia.com/tlt-converter-trt71

Convert the PeopleNet model with tlt-converter, then change to the sources directory:

    ./tlt-converter resnet34_peoplenet_pruned.etlt -k tlt_encode -d 3,544,960 -t fp16
    cd sources
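The conversion command can also be assembled from Python, which makes each argument explicit: `-k` is the model key, `-d` is the input dimensions (channels, height, width), and `-t` is the engine precision. This is a minimal sketch, not part of the project: the function name `build_convert_command` is hypothetical, `fp16` is assumed as the precision, and the actual `subprocess` call is left commented out so the snippet can be read and tried off-device.

```python
import shlex
import subprocess  # only needed if you uncomment the run() call below


def build_convert_command(model_path,
                          key="tlt_encode",
                          dims=(3, 544, 960),
                          precision="fp16",
                          converter="./tlt-converter"):
    """Build the tlt-converter argument list (hypothetical helper).

    Only the flags shown in this README (-k, -d, -t) are used.
    """
    # int8 is deliberately left out: it needs extra calibration input.
    if precision not in ("fp32", "fp16"):
        raise ValueError("Unsupported precision: %r" % precision)
    dims_arg = ",".join(str(d) for d in dims)  # (3, 544, 960) -> "3,544,960"
    return [converter, model_path, "-k", key, "-d", dims_arg, "-t", precision]


if __name__ == "__main__":
    cmd = build_convert_command("resnet34_peoplenet_pruned.etlt")
    # Show the equivalent shell command line.
    print(" ".join(shlex.quote(arg) for arg in cmd))
    # On the Jetson, with tlt-converter in the current directory:
    # subprocess.run(cmd, check=True)
```

Passing the arguments as a list avoids shell quoting problems if the model path contains spaces.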

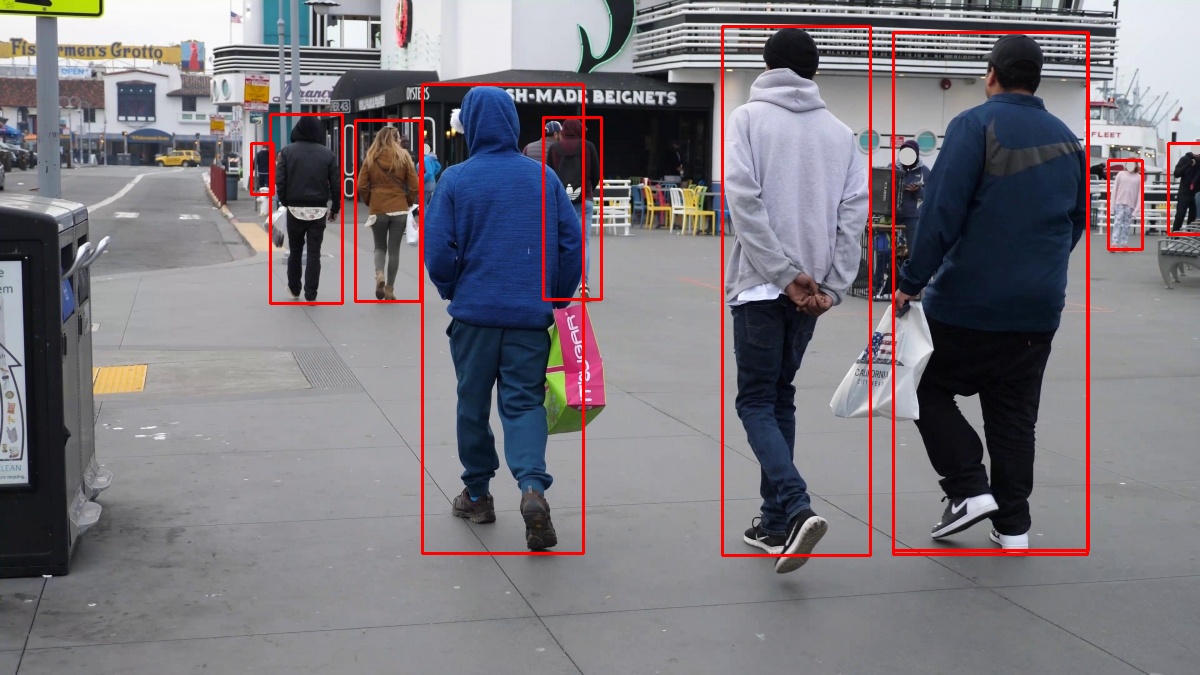

    ./main.py

After main.py finishes, you will get an output image.

If you find this useful, please star this repository.