Transfer learning (TL) consists of taking knowledge acquired on a source learning task and using it to improve performance on a target learning task. In the terms of [4], the TL approach in this experiment is network-based: it reuses part of a network trained on a source domain to train on a target domain.

The objective of this work is to investigate different transfer learning setups for the target task of lane following, using only frontal images and a discretized control (forward, left, right). The source task is image classification for the ImageNet Large Scale Visual Recognition Challenge (ILSVRC). Weights transferred from the source network are reused in one of two ways:

- frozen - the weights learned on the source (base) network are copied to the target network and kept frozen during training (i.e. the errors from training the target network are not backpropagated into these layers, so their weights never change)

- fine-tuning - the weights of the target network are initialized with the weights from the base network and are updated (fine-tuned) during training on the target task
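The difference between the two setups can be seen on a toy model. The sketch below is not this repository's training code: it fits a hypothetical two-weight linear model with plain SGD, where `w1` plays the role of a layer copied from the base network and `w2` the role of the new top layer. Freezing simply means the update for `w1` is never applied. In Keras the equivalent is setting the `trainable` attribute of the copied layers to `False` before compiling the model.

```python
def train(w1, w2, data, lr=0.05, epochs=50, freeze_w1=False):
    """Fit y ~ w2 * (w1 * x) with per-sample SGD on the loss 0.5 * (y_hat - y) ** 2.

    w1 stands in for a layer copied from the base network,
    w2 for the new task-specific top layer (toy model, for illustration only).
    """
    for _ in range(epochs):
        for x, y in data:
            h = w1 * x                # output of the copied layer
            err = w2 * h - y          # dLoss/dy_hat
            grad_w2 = err * h         # gradient for the top layer
            grad_w1 = err * w2 * x    # gradient that would reach the copied layer
            w2 -= lr * grad_w2
            if not freeze_w1:         # "frozen": the copied weight is never updated
                w1 -= lr * grad_w1
    return w1, w2


W1_FROM_SOURCE = 2.0                            # pretend this was learned on the source task
TARGET = [(1.0, 3.0), (2.0, 6.0), (0.5, 1.5)]   # hypothetical target task: y = 3x

frozen = train(W1_FROM_SOURCE, 0.1, TARGET, freeze_w1=True)
finetuned = train(W1_FROM_SOURCE, 0.1, TARGET, freeze_w1=False)
print("frozen     w1=%.3f w2=%.3f" % frozen)     # w1=2.000 w2=1.500
print("fine-tuned w1=%.3f w2=%.3f" % finetuned)
```

In the frozen run only the top weight adapts (to 1.5, so that 2.0 x 1.5 = 3); in the fine-tuned run both weights move while their product still converges to 3.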

Following [1], the first step is to train base networks for both tasks. For this study, the chosen architecture was MobileNet v1. Since the number of classes differs (1000 for ImageNet and 3 for lane following), only the top layers differ between networks throughout this experiment.

- baseA - MobileNet model with weights pre-trained on ImageNet (1000 categories and 1.2 million images)

- baseB - MobileNet model with randomly initialized weights, trained on the self-driving dataset (3 categories and 56k images)

The baseA model is not included in this repository because it is part of Keras Applications.

BaseB was trained for 15 epochs and achieved 91% validation accuracy. The baseB model is available at transfer/trained-models/baseB.h5.

There is also a baseB+ model, available at transfer/trained-models/baseBfinetune.h5, whose weights were initialized from the ImageNet pre-trained model and fine-tuned on task B. It was also trained for 15 epochs and achieved 92% validation accuracy.

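Class distribution of the self-driving dataset (task B):

| class | train | validation | test |
|---|---|---|---|
| left | 16141 | 2034 | 1362 |
| right | 10425 | 1336 | 1362 |
| forward | 29606 | 3652 | 1362 |
| total | 56172 | 7022 | 4086 |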
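The class counts above are imbalanced: in the largest split, whose total of 56,172 images matches the ~56k images used to train baseB, forward accounts for about 53% of the examples. A quick way to put the reported accuracies in context is the majority-class baseline. If one wanted to compensate for the imbalance, Keras `Model.fit` accepts per-class weights through its `class_weight` argument, keyed by integer class index. The sketch below computes both from the counts; nothing in this README states that class weighting was used for baseB, and the label-to-index mapping is not given here.

```python
# Class counts of the largest split listed above (56,172 images, the ~56k used for baseB).
COUNTS = {"left": 16141, "right": 10425, "forward": 29606}


def majority_baseline(counts):
    """Accuracy of a classifier that always predicts the most frequent class."""
    return max(counts.values()) / sum(counts.values())


def inverse_frequency_weights(counts):
    """Weight each class by total / (n_classes * count), so rarer classes weigh more.

    This is the common "balanced" heuristic, shown for illustration only.
    """
    total = sum(counts.values())
    return {label: total / (len(counts) * c) for label, c in counts.items()}


print(f"majority baseline: {majority_baseline(COUNTS):.3f}")  # 0.527
for label, w in inverse_frequency_weights(COUNTS).items():
    print(f"{label:>8}: {w:.3f}")
```

With these weights every class contributes the same total weight to the loss, which is why `right` (the rarest class) gets the largest factor.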

The MobileNet architecture consists of one regular convolutional layer (input), 13 depthwise separable convolutional blocks, one fully-connected layer and a softmax layer for classification.

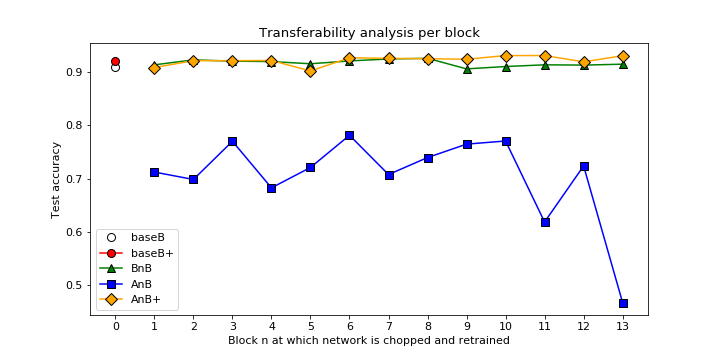
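To see why depthwise separable blocks are cheap, and how the parameter count grows with the number of blocks kept, the sketch below counts the parameters of the MobileNet v1 backbone block by block. It assumes width multiplier 1.0, bias-free convolutions each followed by BatchNorm (4 values per channel), and the channel widths of the original MobileNet v1 design; the classifier head is excluded. Under these assumptions the input convolution plus the first 11 blocks hold about half of the backbone's parameters, because most of them sit in the wide 512- and 1024-channel blocks at the top.

```python
# Channel widths (input, output) of the 13 depthwise separable blocks of
# MobileNet v1 with width multiplier alpha = 1.0 (assumed).
BLOCKS = [(32, 64), (64, 128), (128, 128), (128, 256), (256, 256), (256, 512),
          (512, 512), (512, 512), (512, 512), (512, 512), (512, 512),
          (512, 1024), (1024, 1024)]

BN_PARAMS_PER_CHANNEL = 4  # gamma, beta, moving mean, moving variance


def standard_conv_params(k, c_in, c_out):
    """Weights of a bias-free k x k convolution from c_in to c_out channels."""
    return k * k * c_in * c_out


def separable_block_params(k, c_in, c_out):
    """Depthwise k x k conv + BN, then pointwise 1 x 1 conv + BN (no biases)."""
    depthwise = k * k * c_in + BN_PARAMS_PER_CHANNEL * c_in   # one filter per input channel
    pointwise = c_in * c_out + BN_PARAMS_PER_CHANNEL * c_out  # mixes channels
    return depthwise + pointwise


def backbone_params(n_blocks):
    """Input conv (3 -> 32 channels, + BN) plus the first n_blocks separable blocks."""
    stem = standard_conv_params(3, 3, 32) + BN_PARAMS_PER_CHANNEL * 32
    return stem + sum(separable_block_params(3, c_in, c_out)
                      for c_in, c_out in BLOCKS[:n_blocks])


print(backbone_params(13))  # 3228864
print(backbone_params(11))  # 1627840
```

For comparison, a standard 3x3 convolution from 512 to 512 channels needs 2,359,296 weights, while the corresponding separable block needs 270,848 parameters including BatchNorm.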

The selffer network BnB investigates the interaction between blocks by freezing some of them and training the others on the same task.

Example: B3B - the first 3 blocks are copied from the baseB.h5 model and frozen. The following blocks are randomly initialized and trained on the self-driving dataset. This network is a control for the transfer network (AnB).

The transfer network AnB investigates the transferability of blocks between different tasks. Intuitively, if AnB performs as well as baseB, there is evidence that the features up to block n are general with respect to task B.

Example: A3B - the first 3 blocks are copied from the MobileNet model trained on ImageNet (task A) and frozen. The following blocks are randomly initialized and trained on the self-driving dataset (task B).

The transfer network AnB+ is just like AnB, but no weights are frozen: all layers learn during training on task B.
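The three setups differ only in where the first n blocks come from and whether they keep learning. The hypothetical helper below spells this out for names such as A3B; in Keras each entry would translate into loading the corresponding weights and setting the layer's `trainable` attribute. It is a sketch of the naming scheme, not code from this repository, and it leaves out the first regular convolution and the classifier head, whose treatment is not described here.

```python
N_BLOCKS = 13  # depthwise separable blocks in MobileNet v1


def block_plan(setup, n):
    """List (block, initialised from, trainable) for each separable block.

    setup is "BnB", "AnB" or "AnB+"; n is the number of copied blocks.
    Hypothetical helper that only encodes the naming scheme.
    """
    if setup not in ("BnB", "AnB", "AnB+"):
        raise ValueError(f"unknown setup: {setup}")
    source = "baseB" if setup == "BnB" else "baseA"
    copied_trainable = setup == "AnB+"  # only AnB+ keeps learning in the copied blocks
    plan = []
    for block in range(1, N_BLOCKS + 1):
        if block <= n:
            plan.append((block, source, copied_trainable))
        else:
            plan.append((block, "random", True))  # re-initialised and trained on task B
    return plan


# A3B: first 3 blocks from baseA and frozen, the rest random and trainable.
for block, init, trainable in block_plan("AnB", 3)[:5]:
    print(block, init, "trainable" if trainable else "frozen")
```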

The following figure is a compilation of all the experiments. All the collected data is available in experiments-results.

- As also reported in [3], there are no fragile co-adapted features on successive layers. Fragile co-adaptation occurs when gradient descent finds a good solution while the network is initially trained, but not after some layers are re-initialized and retrained. It possibly does not occur here because task B is relatively simple.

- Given the amount of training data available for the target domain, transfer learning gave no significant advantage on task B.

- As reported in [1], initializing the network with weights trained on a different task, rather than randomly, can improve performance on the target task: baseB+ gained about 1% accuracy over baseB (92% vs. 91%).

- The poor results of the transfer network AnB can be viewed as negative transfer between tasks, indicating that the feature extractors learned for task A are not useful for task B images.

In this section, we explore the transferability of features as a straightforward method of layer pruning in CNNs. The main idea is to cut MobileNet after block n, add a pooling layer, a fully-connected layer and a softmax classifier on top, and fine-tune the resulting sub-network on task B.

The table below shows the validation and test accuracy of each sub-network, trained for up to 15 epochs.

| n | val_acc | test_acc |
|---|---|---|
| 1 | 0.7677 | 0.7430 |
| 2 | 0.7930 | 0.7905 |
| 3 | 0.8036 | 0.7929 |
| 4 | 0.8160 | 0.8098 |
| 5 | 0.8212 | 0.7907 |
| 6 | 0.8303 | 0.8272 |
| 7 | 0.8444 | 0.8284 |
| 8 | 0.8887 | 0.8749 |
| 9 | 0.8675 | 0.8695 |
| 10 | 0.9050 | 0.9011 |
| 11 | 0.9152 | 0.9111 |

At n=11 the test accuracy (0.9111) matches the performance of the baseB model (91%) and is about 1% below baseB+ (the fine-tuned version of baseB). Given this similar performance, studying the transferability of features can be seen as a naive approach to layer pruning.
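Read as a pruning procedure, the table suggests a simple rule: keep the fewest blocks whose accuracy is still acceptable. The sketch below applies such a rule with a hypothetical threshold on validation accuracy, so the test set stays reserved for the final estimate. Because accuracy is not monotonic in n (it drops from n=8 to n=9), the helper scans cut points from the smallest upward instead of assuming a sorted curve.

```python
# (n, val_acc, test_acc) for the sliced sub-networks, copied from the table above
RESULTS = [
    (1, 0.7677, 0.7430), (2, 0.7930, 0.7905), (3, 0.8036, 0.7929),
    (4, 0.8160, 0.8098), (5, 0.8212, 0.7907), (6, 0.8303, 0.8272),
    (7, 0.8444, 0.8284), (8, 0.8887, 0.8749), (9, 0.8675, 0.8695),
    (10, 0.9050, 0.9011), (11, 0.9152, 0.9111),
]


def smallest_cut(results, min_val_acc):
    """Smallest n whose validation accuracy reaches min_val_acc (None if no n does)."""
    for n, val_acc, _test_acc in sorted(results):
        if val_acc >= min_val_acc:
            return n
    return None


print(smallest_cut(RESULTS, 0.90))  # 10
print(smallest_cut(RESULTS, 0.88))  # 8
```

The threshold is the only design decision: a stricter one keeps more blocks, and a threshold above every entry returns `None`, meaning no sliced network qualifies.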

The following table compares the model sliced at block n=11 with the baseB+ model.

| model | memory size | inference time | #parameters | accuracy |
|---|---|---|---|---|
| sliced n11 | 13M | 13 s (3 ms/step) | 1M | 91.94% |
| baseB+ | 25M | 13 s (3 ms/step) | 3M | 92.31% |

Even though inference time does not change, the sliced model needs roughly half the memory (13M vs. 25M) for a 0.37-point drop in accuracy, which may still help resource-constrained systems.

The script to download the dataset for task B is available in the self-driving data repository.

You can place the created data/ folder wherever you like, but do not rename the .npy files.

Pull requests are welcome. For major changes, please open an issue first to discuss what you would like to change.

Please make sure to update tests as appropriate.

[1] How transferable are features in deep neural networks?

[2] A Survey on Transfer Learning