In this lab, we'll learn about several common activation functions, then compare and contrast their effectiveness in an MLP classifier trained on the MNIST dataset!

In your own words, answer the following questions:

What purpose do activation functions serve in Deep Learning? What happens if our neural network has no activation functions? What role do activation functions play in our output layer? Which activation functions are most commonly used in an output layer?

Write your answer below this line:

For the first part of this lab, we'll only make use of the numpy library. Run the cell below to import numpy.

import numpy as np

We'll begin this lab by implementing several activation functions by hand, so that we can get a feel for how they work.

We'll start with the sigmoid activation function, described by the following equation:

$$\sigma(z) = \frac{1}{1 + e^{-z}}$$

In the cell below, complete the `sigmoid` function. This function should take in a value `z` and return the result of the equation above.
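For comparison with your own solution, here is a minimal NumPy sketch of the sigmoid equation. It converts its input with `np.asarray`, so it works on scalars and arrays alike; it is one possible implementation, not the only correct one.

```python
import numpy as np

def sigmoid(z):
    """Logistic sigmoid 1 / (1 + e^(-z)), applied element-wise."""
    z = np.asarray(z, dtype=float)  # accept Python scalars, lists, or arrays
    return 1.0 / (1.0 + np.exp(-z))

print(sigmoid(0.458))                       # close to the expected 0.6125396...
print(sigmoid(np.array([-2.0, 0.0, 2.0])))  # element-wise on an array
```

For very negative `z`, `np.exp(-z)` overflows to `inf` and NumPy emits a RuntimeWarning, but the result still correctly comes out as `0.0`.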

def sigmoid(z):
    pass

sigmoid(.458) # Expected Output: 0.61253961344091512

The hyperbolic tangent function is as follows:

$$\tanh(z) = \frac{e^{z} - e^{-z}}{e^{z} + e^{-z}}$$

Complete the function below by implementing the tanh function.
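A minimal NumPy sketch of the tanh equation, written directly from its exponential form so it can be checked against `np.tanh`. This is one possible solution, assuming NumPy inputs.

```python
import numpy as np

def tanh(z):
    """Hyperbolic tangent (e^z - e^(-z)) / (e^z + e^(-z)), element-wise."""
    z = np.asarray(z, dtype=float)
    e_pos, e_neg = np.exp(z), np.exp(-z)  # compute each exponential once
    return (e_pos - e_neg) / (e_pos + e_neg)

print(tanh(2), np.tanh(2))  # the two values should agree
print(tanh(0))              # 0.0
```

Computed this way, the formula overflows for very large inputs (roughly |z| > 709 in float64) and returns `nan`, which is one reason to prefer `np.tanh` outside of this exercise.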

def tanh(z):
    pass

print(tanh(2)) # 0.964027580076
print(np.tanh(2)) # 0.964027580076
print(tanh(0)) # 0.0

The final activation function we'll implement manually is the Rectified Linear Unit function, also known as ReLU.

The ReLU function is:

$$\text{ReLU}(z) = \max(0, z)$$
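ReLU passes positive inputs through unchanged and maps everything else to zero, i.e. max(0, z). A minimal NumPy sketch, assuming `np.maximum` for element-wise behavior:

```python
import numpy as np

def relu(z):
    """Rectified linear unit max(0, z), applied element-wise."""
    return np.maximum(0.0, z)

print(relu(-2))  # 0.0
print(relu(2))   # 2.0
print(relu(np.array([-1.5, 0.0, 3.0])))
```

`np.maximum` is used rather than Python's built-in `max`, which only compares whole objects and raises an error when handed a NumPy array.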

def relu(z):
    pass

print(relu(-2)) # Expected Result: 0.0

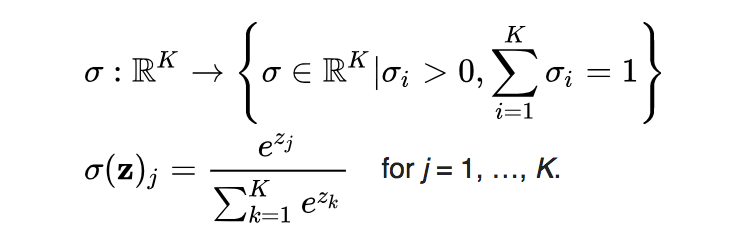

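print(relu(2)) # Expected Result: 2.0

The softmax function is primarily used as the activation function on the output layer of neural networks for multi-class categorical prediction. The softmax equation is as follows, where K is the number of entries in z (one per class):

$$\text{softmax}(\mathbf{z})_i = \frac{e^{z_i}}{\sum_{j=1}^{K} e^{z_j}}, \quad i = 1, \dots, K$$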

The mathematical notation for the softmax function is a bit dense, and softmax is a special case, since it is really only used on the output layer. Thus, the code for the softmax function has been provided.

Run the cell below to compute the softmax function on a sample vector.

z = [1.0, 2.0, 3.0, 4.0, 1.0, 2.0, 3.0]

softmax = np.exp(z)/np.sum(np.exp(z))

softmax

Expected Output:

array([ 0.02364054, 0.06426166, 0.1746813 , 0.474833 , 0.02364054, 0.06426166, 0.1746813 ])
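The one-liner above works for this vector, but `np.exp` overflows for large inputs. Because softmax is unchanged when the same constant is subtracted from every entry, a common variant subtracts the maximum first. The sketch below is that variant; the function name `softmax` and the row-wise handling of 2-D input are our additions, not part of the lab.

```python
import numpy as np

def softmax(z):
    """Numerically stable softmax over the last axis (a vector, or each row of a matrix)."""
    z = np.asarray(z, dtype=float)
    shifted = z - np.max(z, axis=-1, keepdims=True)  # largest entry becomes 0
    exps = np.exp(shifted)
    return exps / np.sum(exps, axis=-1, keepdims=True)

z = [1.0, 2.0, 3.0, 4.0, 1.0, 2.0, 3.0]
probs = softmax(z)
print(probs)
print(probs.sum())  # 1.0 up to floating-point rounding

# np.exp(1000) overflows to inf, so the naive one-liner would give nan here;
# the shifted version stays finite.
big = np.array([1000.0, 1001.0, 1002.0])
print(softmax(big))
```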

Now that we've implemented the various activation functions by hand, we'll get some practical experience with each of them by treating the hidden-layer activation as a hyperparameter of a neural network and seeing how each choice affects the model's performance. Before we can do that, we'll need to preprocess our image data.

We'll build 3 different versions of the same network, with the only difference between them being the activation function used in our hidden layers. Start off by importing everything we'll need from Keras in the cell below.

HINT: Refer to previous labs that make use of Keras if you aren't sure what you need to import

We'll need to preprocess the MNIST image data so that it can be used in our model.

In the cell below:

- Load the training and testing data and their corresponding labels from MNIST.
- Reshape the data inside `X_train` and `X_test` into the appropriate shape (from a 28x28 matrix to a vector of length 784). Also cast them to datatype `float32`.
- Normalize the data inside of `X_train` and `X_test`.
- Convert the labels inside of `y_train` and `y_test` into one-hot vectors (Hint: see the documentation if you can't remember how to do this).
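The preprocessing steps above can be sketched in plain NumPy. This assumes the arrays come from `keras.datasets.mnist.load_data()`, which returns `uint8` images of shape `(n, 28, 28)` and integer labels; it also assumes "normalize" means scaling pixels to [0, 1] by dividing by 255, and it builds one-hot vectors with `np.eye` instead of Keras's `to_categorical`. The helper name `preprocess` is hypothetical.

```python
import numpy as np

def preprocess(X, y, num_classes=10):
    """Flatten 28x28 images to 784-vectors, scale to [0, 1], and one-hot encode labels."""
    X = X.reshape(len(X), 28 * 28).astype("float32") / 255.0  # stays float32
    y_onehot = np.eye(num_classes, dtype="float32")[y]          # row i of identity = class i
    return X, y_onehot

# Tiny synthetic stand-in for MNIST, just to show the shapes.
rng = np.random.default_rng(0)
X_demo = rng.integers(0, 256, size=(5, 28, 28), dtype=np.uint8)
y_demo = np.array([0, 3, 9, 1, 1])
X_flat, y_hot = preprocess(X_demo, y_demo)
print(X_flat.shape, X_flat.dtype, y_hot.shape)
```

In the lab you would call it as `X_train, y_train = preprocess(X_train, y_train)` and likewise for the test split.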

Your task is to build a neural network to classify the MNIST dataset. The model should have the following architecture:

- Input layer of shape `(784,)`
- Hidden Layer 1: 100 neurons
- Hidden Layer 2: 50 neurons
- Output Layer: 10 neurons, softmax activation function
- Loss: `categorical_crossentropy`
- Optimizer: `'SGD'`
- Metrics: `['accuracy']`
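To make the architecture concrete without any training, here is a NumPy-only forward pass through the same layer sizes, where the hidden activation is just a function argument. This is an illustration under stated assumptions (random small-normal weights, hypothetical helper names `init_params` and `forward`), not what Keras does internally; it also shows how many trainable parameters a model with this architecture has.

```python
import numpy as np

LAYER_SIZES = (784, 100, 50, 10)  # input, hidden 1, hidden 2, output

def init_params(sizes=LAYER_SIZES, seed=0):
    """Random (W, b) pairs for each dense layer; the small-normal init is an assumption."""
    rng = np.random.default_rng(seed)
    return [(rng.normal(0.0, 0.05, size=(n_in, n_out)), np.zeros(n_out))
            for n_in, n_out in zip(sizes[:-1], sizes[1:])]

def forward(X, params, hidden_activation):
    """Hidden layers use `hidden_activation`; the output layer uses softmax."""
    a = X
    for W, b in params[:-1]:
        a = hidden_activation(a @ W + b)
    W_out, b_out = params[-1]
    logits = a @ W_out + b_out
    logits = logits - logits.max(axis=1, keepdims=True)  # stabilize exp
    exps = np.exp(logits)
    return exps / exps.sum(axis=1, keepdims=True)

HIDDEN_ACTIVATIONS = {
    "sigmoid": lambda z: 1.0 / (1.0 + np.exp(-z)),
    "tanh": np.tanh,
    "relu": lambda z: np.maximum(0.0, z),
}

params = init_params()
n_params = sum(W.size + b.size for W, b in params)
print(n_params)  # 84060 = (784*100 + 100) + (100*50 + 50) + (50*10 + 10)

X = np.random.default_rng(1).random((4, 784))  # 4 fake flattened "images"
for name, act in HIDDEN_ACTIVATIONS.items():
    probs = forward(X, params, act)
    print(name, probs.shape, probs.sum(axis=1))
```

Only the `hidden_activation` argument changes between the three variants, which mirrors how the three Keras models in this lab differ.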

In the cell below, create a model that matches the specifications above and use a sigmoid activation function for all hidden layers.

sigmoid_model = None

Now, compile the model with the following hyperparameters:

- `loss='categorical_crossentropy'`
- `optimizer='SGD'`
- `metrics=['accuracy']`

Now, fit the model. In addition to our training data, pass in the following parameters:

- `epochs=10`
- `batch_size=32`
- `verbose=1`
- `validation_data=(X_test, y_test)`
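The build, compile, and fit steps can be wrapped in one function parameterized by the hidden-layer activation, which would also cover the tanh and ReLU models later in the lab. This is a sketch assuming TensorFlow's bundled Keras (`tensorflow.keras`); the helper names `build_model` and `fit_model` are hypothetical.

```python
import numpy as np
from tensorflow import keras

def build_model(hidden_activation):
    """784 -> 100 -> 50 -> 10 MLP; only the hidden activation varies."""
    model = keras.Sequential([
        keras.Input(shape=(784,)),
        keras.layers.Dense(100, activation=hidden_activation),
        keras.layers.Dense(50, activation=hidden_activation),
        keras.layers.Dense(10, activation="softmax"),
    ])
    model.compile(loss="categorical_crossentropy",
                  optimizer="SGD",
                  metrics=["accuracy"])
    return model

def fit_model(model, X_train, y_train, X_test, y_test):
    """Fit with the hyperparameters listed in the lab; returns the Keras History."""
    return model.fit(np.asarray(X_train, dtype="float32"),
                     np.asarray(y_train, dtype="float32"),
                     epochs=10, batch_size=32, verbose=1,
                     validation_data=(X_test, y_test))
```

With the preprocessed data, `sigmoid_model = build_model("sigmoid")` followed by `fit_model(sigmoid_model, X_train, y_train, X_test, y_test)` would match the specification above; passing `"tanh"` or `"relu"` instead gives the other two variants.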

Now, we'll build the exact same model as we did above, but with hidden layers that use tanh activation functions rather than sigmoid.

In the cell below, create a second version of the model that uses the hyperbolic tangent (tanh) function for its hidden-layer activations. All other parameters, including the number of hidden layers, the size of each hidden layer, and the output layer, should remain the same.

tanh_model = None

Now, compile this model. Use the same hyperparameters as we did for the sigmoid model.

Now, fit the model. Use the same hyperparameters as we did for the sigmoid model.

Finally, construct a third version of the same model, but this time with ReLU activation functions for the hidden layers.

relu_model = None

Now, compile the model with the same hyperparameters as the last two models.

Now, fit the model with the same hyperparameters as the last two models.

Which activation function was most effective?
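One way to answer this is to compare the final validation accuracy of each run. A minimal sketch, assuming you kept the `History` object that `model.fit` returns for each model and that accuracy is logged under `val_accuracy` (recent Keras versions; older ones used `val_acc`). The function name `best_activation` is hypothetical.

```python
def best_activation(histories, metric="val_accuracy"):
    """Return (name, score) for the run whose final-epoch `metric` is highest.

    `histories` maps a label (e.g. "relu") to a Keras History.history dict.
    """
    final_scores = {name: h[metric][-1] for name, h in histories.items()}
    best = max(final_scores, key=final_scores.get)  # ties keep the first label
    return best, final_scores[best]
```

For example, `best_activation({"sigmoid": sigmoid_history.history, "tanh": tanh_history.history, "relu": relu_history.history})`, where the `*_history` variables are hypothetical names for the values returned by `fit`. Comparing only the last epoch is a simplification; using `max(h[metric])` instead would compare each model's best epoch.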