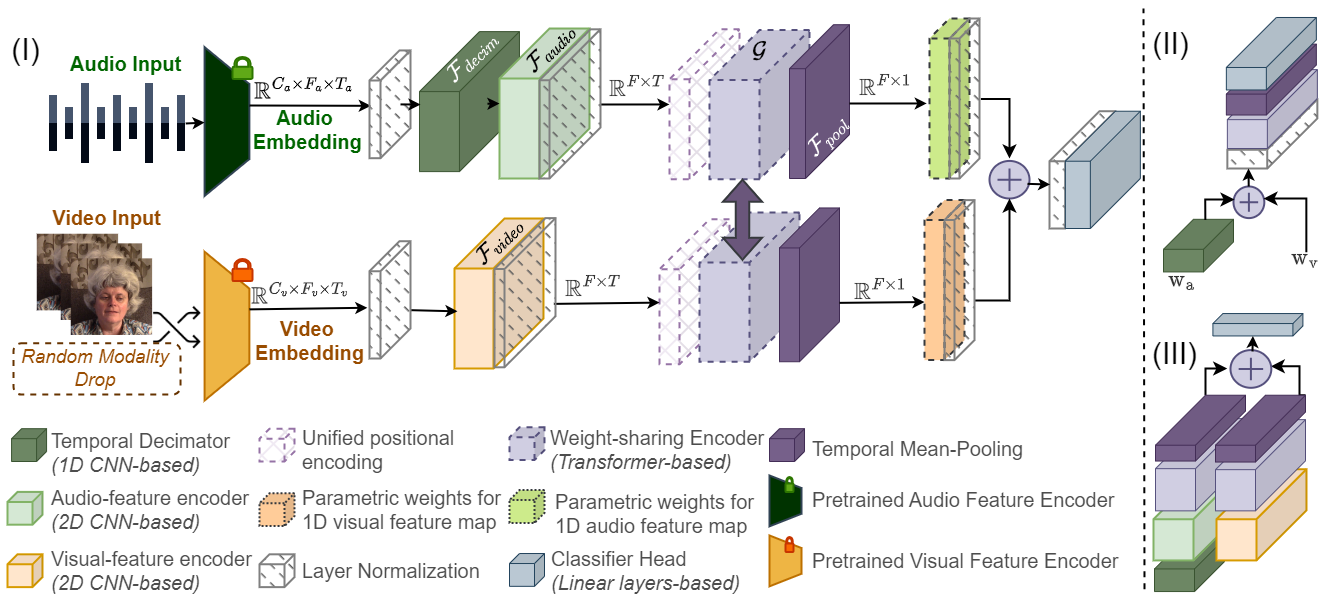

Traditional methods for detecting speech disfluencies often rely solely on acoustic data. This paper introduces a novel multimodal approach that incorporates both audio and video modalities for more robust disfluency detection. We propose a unified weight-sharing encoder framework that handles temporal and semantic context fusion, designed to accommodate scenarios where video data may be missing during inference. Experimental results across five disfluency detection tasks demonstrate significant performance gains compared to unimodal methods, achieving an average absolute improvement of 10% when both modalities are consistently available, and 7% even when video data is missing in half of the samples.
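The core idea of the abstract is that one encoder with shared weights processes both modalities, so the model can fall back to audio alone when video is missing. The sketch below illustrates only that idea and is not the repository's architecture. `SharedEncoder`, `drop_video`, and `fuse` are hypothetical names. A single random linear map stands in for the encoder. Fusion is a plain element-wise average, far simpler than the temporal and semantic context fusion described above. The argument `p` simulates video being missing in a fraction of samples. Whether the `--p_mask` training flag does exactly this is an assumption.

```python
import random
from typing import List, Optional, Sequence

Vector = List[float]


class SharedEncoder:
    """Toy stand-in for a weight-sharing encoder: one linear map applied to every modality.

    Assumes audio and video features were already projected to the same size (in_dim).
    """

    def __init__(self, in_dim: int, out_dim: int, seed: int = 0) -> None:
        rng = random.Random(seed)
        # A single (out_dim x in_dim) weight matrix, reused for both modalities.
        self.weights = [
            [rng.uniform(-1.0, 1.0) for _ in range(in_dim)] for _ in range(out_dim)
        ]

    def __call__(self, x: Sequence[float]) -> Vector:
        # Matrix-vector product: one output value per weight row.
        return [sum(w * xi for w, xi in zip(row, x)) for row in self.weights]


def drop_video(
    videos: Sequence[Vector], p: float, rng: random.Random
) -> List[Optional[Vector]]:
    """Simulate missingness: each sample independently loses its video with probability p."""
    return [None if rng.random() < p else v for v in videos]


def fuse(encoder: SharedEncoder, audio: Vector, video: Optional[Vector]) -> Vector:
    """Encode both modalities with the same weights; use audio alone if video is missing."""
    a = encoder(audio)
    if video is None:
        return a
    v = encoder(video)
    # Hypothetical fusion rule: element-wise average of the two embeddings.
    return [(ai + vi) / 2.0 for ai, vi in zip(a, v)]


if __name__ == "__main__":
    rng = random.Random(123)
    enc = SharedEncoder(in_dim=4, out_dim=2)
    audios = [[rng.random() for _ in range(4)] for _ in range(6)]
    videos = [[rng.random() for _ in range(4)] for _ in range(6)]
    masked = drop_video(videos, p=0.5, rng=rng)
    for a, v in zip(audios, masked):
        print("audio+video" if v is not None else "audio-only", fuse(enc, a, v))
```

With `p=0.5`, roughly half of the samples take the audio-only path. This loosely mirrors the half-missing setting reported above. Because the audio-only path reuses the same weights as the fused path, no separate audio model is needed when video is absent.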

We provide a preprocessed audio-visual dataset of time-aligned 3-second segments, hosted on figshare. You can download the entire dataset with the following command:

```bash
wget https://figshare.com/ndownloader/articles/25526953/versions/1
```

To set up the necessary dependencies, create a Conda environment from the provided `environment.yml` file:

```bash
conda env create -f environment.yml
```

All training data splits are located in the `./metadata` folder. Reported results are based on training with three different seeds (123, 456, 789).
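The sketch below repeats a run over the three seeds and summarizes a metric. The README does not say how per-seed results are combined. The summary here assumes a mean and sample standard deviation. `training_commands` and `summarize` are hypothetical helpers. They reuse only the `--stutter_type` and `--seed_num` flags from the training example in this README and leave every other argument at the script's defaults.

```python
import statistics
from typing import Dict, List, Sequence, Tuple

# Seeds listed in this README.
SEEDS: Tuple[int, ...] = (123, 456, 789)


def training_commands(stutter_type: str, seeds: Sequence[int] = SEEDS) -> List[List[str]]:
    """Build one argv list per seed; all other flags keep the script's defaults."""
    return [
        [
            "python",
            "main_audio_video_unified_fusion.py",
            f"--stutter_type={stutter_type}",
            f"--seed_num={seed}",
        ]
        for seed in seeds
    ]


def summarize(score_by_seed: Dict[int, float]) -> Tuple[float, float]:
    """Return (mean, sample standard deviation) of one metric across seeds (assumed summary)."""
    values = [score_by_seed[s] for s in sorted(score_by_seed)]
    return statistics.mean(values), statistics.stdev(values)


if __name__ == "__main__":
    for argv in training_commands("Block/"):
        # Only prints the commands; pass argv to subprocess.run(argv, check=True) to launch training.
        print(" ".join(argv))
```

Collect the metric for each seed into a dict such as `{123: score_a, 456: score_b, 789: score_c}` and pass it to `summarize`. `statistics.stdev` needs at least two values, so it raises `StatisticsError` if only one seed has finished.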

To view available arguments for training:

```bash
python main_audio_video_unified_fusion.py -h
```

Example command for training with specific parameters:

```bash
python main_audio_video_unified_fusion.py --stutter_type='Block/' --num_epochs=400 --p_mask=0.5 --seed_num=456
```

If you use this work, please cite:

```bibtex
@inproceedings{mohapatra24_interspeech,
  title = {Missingness-resilient Video-enhanced Multimodal Disfluency Detection},
  author = {Payal Mohapatra and Shamika Likhite and Subrata Biswas and Bashima Islam and Qi Zhu},
  year = {2024},
  booktitle = {Interspeech 2024},
  pages = {5093--5097},
  doi = {10.21437/Interspeech.2024-1458},
}
```

We thank Lea et al. for releasing the manually annotated FluencyBank audio dataset and the SEP28k dataset, and Romana et al. for their support in extending their work as a baseline in our analyses of multimodal disfluency detection.