- ☀️ Hiring research interns for Neural Architecture Search, Tiny Machine Learning, and Computer Vision tasks: xiuyu.sxy@alibaba-inc.com
- 💥 2023.03: DeepMAD: Mathematical Architecture Design for Deep Convolutional Neural Network is accepted by CVPR'23.
- 💥 2023.01: Maximizing Spatio-Temporal Entropy of Deep 3D CNNs for Efficient Video Recognition is accepted by ICLR'23.
- 💥 2022.11: MAE-NAS backbone for DAMO-YOLO is now supported! The DAMO-YOLO paper is on arXiv now.
- 💥 2022.09: Mixed-Precision Quantization Search is now supported! The QE-Score paper is accepted by NeurIPS'22.
- 💥 2022.06: Code for MAE-DET is now released.
- 💥 2022.05: MAE-DET is accepted by ICML'22.
- 💥 2021.09: Code for Zen-NAS is now released.
- 💥 2021.07: The inspiring training-free paper Zen-NAS has been accepted by ICCV'21.


Lightweight Neural Architecture Search (Light-NAS) is an open source zero-shot NAS toolbox for backbone search based on PyTorch. The master branch works with PyTorch 1.4+ and OpenMPI 4.0+.

Major features

-

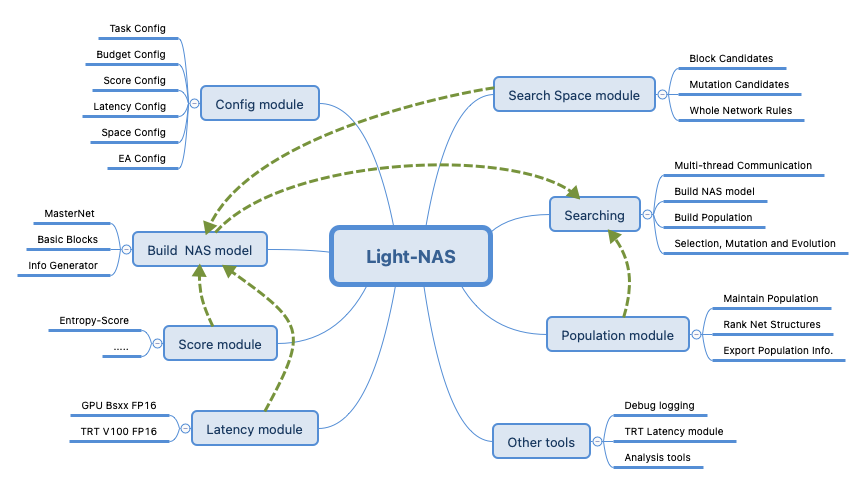

Modular Design

The toolbox consists of different modules (controlled by Config module), incluing Models Definition module , Score module, Search Space module, Latency module and Population module. We use

nas/builder.pyto build nas model, andnas/search.pyto complete the whole seaching process. Through the combination of these modules, we can complete the backbone search in different tasks (e.g., Classficaiton, Detection) under different budget constraints (i.e., Parameters, FLOPs, Latency). -

Supported Tasks

For a better start, we provide some examples for different tasks as follow.

Classification: Please Refer to this Search Space and Example Shell.Detection: Please Refer to this Search Space and Example Shell.Quantization: Please Refer to this Search Space and Example Shell.
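The way the modules fit together can be illustrated with a toy search loop. The sketch below is not Light-NAS code and does not use its API: the search space (a list of layer widths), the `proxy_score` function, the `count_params` budget, and the `search` routine are all hypothetical stand-ins for the Search Space, Score, budget, and Population roles described above, and the score is a made-up proxy rather than the Zen-score.

```python
import math
import random

# Hypothetical search space: an architecture is a list of layer widths.
WIDTH_CHOICES = [16, 32, 64, 128]
IN_CHANNELS = 3


def count_params(widths):
    """Budget stand-in: parameters of a chain of bias-free 3x3 conv layers."""
    total, c_in = 0, IN_CHANNELS
    for c_out in widths:
        total += 3 * 3 * c_in * c_out
        c_in = c_out
    return total


def proxy_score(widths):
    """Toy training-free score (an assumption, not the Zen-score)."""
    return sum(math.log(w) for w in widths)


def mutate(widths, rng):
    """Search-space move: re-sample the width of one randomly chosen layer."""
    child = list(widths)
    child[rng.randrange(len(child))] = rng.choice(WIDTH_CHOICES)
    return child


def search(budget, iterations=500, population_size=16, depth=4, seed=0):
    """Evolutionary loop: mutate, reject over-budget candidates, keep the best."""
    rng = random.Random(seed)
    start = [min(WIDTH_CHOICES)] * depth
    if count_params(start) > budget:
        raise ValueError("budget is too small for the smallest architecture")
    population = [start]
    for _ in range(iterations):
        child = mutate(rng.choice(population), rng)
        if count_params(child) > budget:
            continue  # candidate violates the parameter budget
        population.append(child)
        # Keep only the highest-scoring candidates.
        population.sort(key=proxy_score, reverse=True)
        del population[population_size:]
    return population[0]


if __name__ == "__main__":
    best = search(budget=100_000)
    print(best, count_params(best), round(proxy_score(best), 3))
```

No candidate is trained at any point: ranking relies only on the proxy score and the budget check, which is the defining property of a zero-shot search.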

This project is developed by Alibaba and licensed under the Apache 2.0 license.

This product contains third-party components under other open source licenses.

See the NOTICE file for more information.

1.0.3 was released on 2022/11/30:

- Support MAE-NAS for DAMO-YOLO.

- Add 2 new blocks: k1kx and kxkx.

- Add a tutorial on NAS for DAMO-YOLO.

Please refer to changelog.md for details and release history.

- Linux

- GCC 7+

- OpenMPI 4.0+

- Python 3.6+

- PyTorch 1.4+

- CUDA 10.0+
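The requirements listed above can be checked before installing. The following is a minimal sketch and not part of Light-NAS: the helper names (`parse_version`, `meets`, `tool_version`, `check_prerequisites`) are hypothetical, and it assumes that `gcc --version` and `mpirun --version` print a dotted version number, which may not hold for every distribution or MPI implementation.

```python
import platform
import re
import shutil
import subprocess
import sys


def parse_version(text):
    """Return the first dotted version number in text as a tuple of ints, or None."""
    match = re.search(r"(\d+(?:\.\d+)+)", text)
    if match is None:
        return None
    return tuple(int(part) for part in match.group(1).split("."))


def meets(found, minimum):
    """True if a parsed version tuple is at least the required minimum."""
    return found is not None and found >= minimum


def tool_version(command):
    """Run a version command and parse its output; None if the tool is missing."""
    if shutil.which(command[0]) is None:
        return None
    try:
        # stdout/stderr pipes instead of capture_output to stay Python 3.6 compatible.
        result = subprocess.run(
            command,
            stdout=subprocess.PIPE,
            stderr=subprocess.PIPE,
            universal_newlines=True,
            timeout=10,
        )
    except (OSError, subprocess.SubprocessError):
        return None
    return parse_version(result.stdout + result.stderr)


def check_prerequisites():
    """Map each requirement to True/False for the current machine."""
    report = {
        "Linux": platform.system() == "Linux",
        "Python 3.6+": sys.version_info >= (3, 6),
        "GCC 7+": meets(tool_version(["gcc", "--version"]), (7,)),
        "OpenMPI 4.0+": meets(tool_version(["mpirun", "--version"]), (4, 0)),
    }
    try:
        import torch
    except ImportError:
        report["PyTorch 1.4+"] = False
        report["CUDA 10.0+"] = False
    else:
        report["PyTorch 1.4+"] = meets(parse_version(torch.__version__), (1, 4))
        # torch.version.cuda is None for CPU-only builds.
        report["CUDA 10.0+"] = meets(parse_version(torch.version.cuda or ""), (10, 0))
    return report


if __name__ == "__main__":
    for name, ok in check_prerequisites().items():
        print("{:<14} {}".format(name, "ok" if ok else "MISSING or too old"))
```

A `False` entry only means the check could not confirm the requirement; the version strings should still be inspected by hand when in doubt.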

- Compile OpenMPI 4.0+ (see the OpenMPI Downloads page).

      cd path
      tar -xzvf openmpi-4.0.1.tar.gz
      cd openmpi-4.0.1
      ./configure --prefix=/your_path/openmpi
      make && make install

  Add the following commands to your ~/.bashrc:

      export PATH=/your_path/openmpi/bin:$PATH
      export LD_LIBRARY_PATH=/your_path/openmpi/lib:$LD_LIBRARY_PATH

- Create a conda virtual environment and activate it.

      conda create -n light-nas python=3.6 -y
      conda activate light-nas

- Install torch and torchvision with the following command or by following the official instructions.

      pip install torch==1.4.0+cu100 torchvision==0.5.0+cu100 -f https://download.pytorch.org/whl/torch_stable.html

  If you encounter the error "Not be found for jpeg", install libjpeg for your system.

      sudo yum install libjpeg       # for CentOS
      sudo apt install libjpeg-dev   # for Ubuntu

- Install other packages with the following command.

      pip install -r requirements.txt

- Search with examples.

      cd scripts/classification
      sh example_xxxx.sh

Results for classification (details are here).

| Backbone | size | Param (M) | FLOPs (G) | Top-1 | Structure | Download |
|---|---|---|---|---|---|---|
| R18-like | 224 | 10.8 | 1.7 | 78.44 | txt | model |
| R50-like | 224 | 21.3 | 3.6 | 80.04 | txt | model |
| R152-like | 224 | 53.5 | 10.5 | 81.59 | txt | model |

Note: If you find this useful, please support us by citing it.

@inproceedings{zennas,
  title = {Zen-NAS: A Zero-Shot NAS for High-Performance Deep Image Recognition},
  author = {Ming Lin and Pichao Wang and Zhenhong Sun and Hesen Chen and Xiuyu Sun and Qi Qian and Hao Li and Rong Jin},
  booktitle = {2021 IEEE/CVF International Conference on Computer Vision},
  year = {2021},
}

The official code for Zen-NAS was originally released at https://github.com/idstcv/ZenNAS.

Results for object detection (details are here).

| Backbone | Param (M) | FLOPs (G) | box AP^val | box AP_S | box AP_M | box AP_L | Structure | Download |
|---|---|---|---|---|---|---|---|---|
| ResNet-50 | 23.5 | 83.6 | 44.7 | 29.1 | 48.1 | 56.6 | - | - |
| ResNet-101 | 42.4 | 159.5 | 46.3 | 29.9 | 50.1 | 58.7 | - | - |
| MAE-DET-S | 21.2 | 48.7 | 45.1 | 27.9 | 49.1 | 58.0 | txt | model |
| MAE-DET-M | 25.8 | 89.9 | 46.9 | 30.1 | 50.9 | 59.9 | txt | model |
| MAE-DET-L | 43.9 | 152.9 | 47.8 | 30.3 | 51.9 | 61.1 | txt | model |

Note: If you find this useful, please support us by citing it.

@inproceedings{maedet,
  title = {MAE-DET: Revisiting Maximum Entropy Principle in Zero-Shot NAS for Efficient Object Detection},
  author = {Zhenhong Sun and Ming Lin and Xiuyu Sun and Zhiyu Tan and Hao Li and Rong Jin},
  booktitle = {International Conference on Machine Learning},
  year = {2022},
}

Results for low-precision backbones (details are here).

| Backbone | Param (MB) | BitOps (G) | ImageNet Top-1 | Structure | Download |
|---|---|---|---|---|---|
| MBV2-8bit | 3.4 | 19.2 | 71.90% | - | - |
| MBV2-4bit | 2.3 | 7 | 68.90% | - | - |
| Mixed19d2G | 3.2 | 18.8 | 74.80% | txt | model |
| Mixed7d0G | 2.2 | 6.9 | 70.80% | txt | model |

Note: If you find this useful, please support us by citing it.

@inproceedings{qescore,
  title = {Entropy-Driven Mixed-Precision Quantization for Deep Network Design on IoT Devices},
  author = {Zhenhong Sun and Ce Ge and Junyan Wang and Ming Lin and Hesen Chen and Hao Li and Xiuyu Sun},
  booktitle = {Advances in Neural Information Processing Systems},
  year = {2022},
}

Zhenhong Sun, Ming Lin, Xiuyu Sun, Hesen Chen, Ce Ge, Yilun Huang.