Aspect-Based Sentiment Analysis Using Bitmask Bidirectional Long Short Term Memory Networks

https://peace195.github.io/choose-distinct-units-in-lstm/

SemEval-2014 Task 4: Aspect Based Sentiment Analysis

SemEval-2015 Task 12: Aspect Based Sentiment Analysis

SemEval-2016 Task 5: Aspect Based Sentiment Analysis

I focus on the restaurant and laptop domains. The final results of these contests are available in [1][2]. The purposes of this project are:

- Aspect based sentiment analysis.

- A sample bidirectional LSTM implementation (TensorFlow 1.2.0).

- A sample of selecting only specific units of a recurrent network (not all units) to train on and predict labels for.

- Compare structured programming and object-oriented programming in a deep learning model.

- Build stop-word, incremental, decremental, positive, and negative dictionaries for the sentiment problem.
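The idea of training on only some units of the recurrent network can be illustrated with a bitmask over time steps. The sketch below is a minimal, framework-free illustration and not necessarily what `sa_aspect_term_oop.py` does: the function names, the example sentence, and the label encoding are all assumptions made for this example. It averages the cross-entropy loss only over positions where the mask is 1 (the aspect terms), so the other positions contribute nothing to training.

```python
import math

def softmax(logits):
    """Numerically stable softmax over one list of class scores."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]

def masked_cross_entropy(logits, labels, mask):
    """Mean cross-entropy over the time steps where mask == 1.

    logits: per-time-step class scores, shape [time][classes]
    labels: gold class index per time step (ignored where mask == 0)
    mask:   1 for aspect-term positions, 0 elsewhere
    """
    total, count = 0.0, 0
    for step_logits, label, keep in zip(logits, labels, mask):
        if keep:
            total += -math.log(softmax(step_logits)[label])
            count += 1
    return total / count if count else 0.0

# Hypothetical sentence: "The pizza was great but service slow"
# Assumed aspect terms: "pizza" (index 1) and "service" (index 5).
mask = [0, 1, 0, 0, 0, 1, 0]
# Assumed label encoding: 0 = negative, 1 = neutral, 2 = positive.
labels = [0, 2, 0, 0, 0, 0, 0]
# Uniform scores stand in for the network outputs at each time step.
logits = [[0.0, 0.0, 0.0] for _ in range(7)]

loss = masked_cross_entropy(logits, labels, mask)
print(loss)
```

In a TensorFlow model the same effect is usually obtained by multiplying the per-step loss by the mask before reducing, which keeps the computation vectorized.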

Step by step:

- Trained word embeddings with fastText on the contest data plus additional restaurant review data.

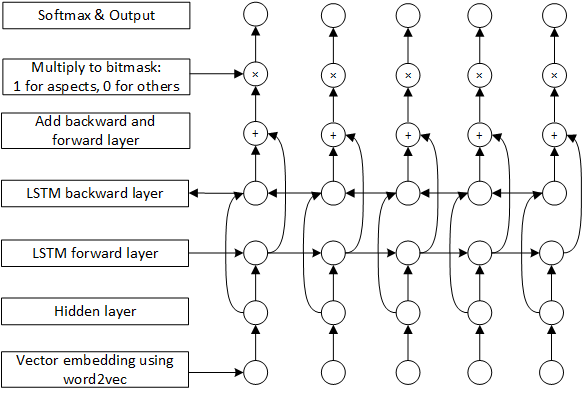

- Used a bidirectional LSTM in the model, as shown above. The model's input is the sequence of word embedding vectors trained in the previous step.
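The two steps above can be sketched as follows, assuming the embeddings were saved in fastText's text (`.vec`) format, where the first line holds the vocabulary size and dimension and each following line holds a word and its vector. The helper names `load_vec` and `encode`, the zero vector for unknown words, and the tiny in-memory sample are assumptions for illustration, not the project's actual code. The sketch is written for Python 3.

```python
import io

def load_vec(handle):
    """Parse fastText's text format: a 'count dim' header, then one word per line."""
    count, dim = map(int, handle.readline().split())
    vectors = {}
    for line in handle:
        parts = line.rstrip().split(" ")
        if len(parts) < dim + 1:
            continue  # skip blank or malformed lines
        vectors[parts[0]] = [float(x) for x in parts[1:dim + 1]]
    return vectors, dim

def encode(tokens, aspect_positions, vectors, dim, max_len):
    """Build a fixed-length input sequence and an aspect bitmask for one sentence."""
    zero = [0.0] * dim
    inputs, mask = [], []
    for i in range(max_len):
        if i < len(tokens):
            # Assumption: unknown words map to a zero vector.
            inputs.append(vectors.get(tokens[i].lower(), zero))
            mask.append(1 if i in aspect_positions else 0)
        else:
            # Padding positions carry no signal and are never selected.
            inputs.append(zero)
            mask.append(0)
    return inputs, mask

# A tiny hypothetical embedding file held in memory instead of on disk.
sample = io.StringIO(u"3 2\nthe 0.1 0.2\npizza 0.3 0.4\ngreat 0.5 0.6\n")
vectors, dim = load_vec(sample)

# "pizza" at index 1 is the assumed aspect term.
inputs, mask = encode(["The", "pizza", "was", "great"], {1}, vectors, dim, max_len=6)
print(mask)
```

The `inputs` sequence is what would be fed to the bidirectional LSTM, and `mask` marks which time steps should receive a sentiment label.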

BINGO!!

- Outperforms the state of the art on the SemEval-2014 dataset [3].

- Achieved 81.2% accuracy, 2.5% higher than the winning team in the SemEval-2015 competition [1].

- Achieved 85.8% accuracy, ranking 3rd of 28 in the SemEval-2016 competition [2].

- SemEval-2015 Task 12 dataset

- SemEval-2016 Task 5 dataset

- Additional restaurant review data for restaurant-word2vec. Here, I use:

- My embedding result is available here: google drive

- python 2.7

- tensorflow 1.2.0

- fastText

$ python sa_aspect_term_oop.py

Binh Do

[1] http://alt.qcri.org/semeval2015/cdrom/pdf/SemEval082.pdf

[2] http://alt.qcri.org/semeval2016/task5/index.php?id=data-and-tools

[3] http://alt.qcri.org/semeval2014/task4/index.php?id=data-and-tools

This project is licensed under the GNU License