Masked Vision and Language Pre-training with Unimodal and Multimodal Contrastive Losses for Medical Visual Question Answering

This is the official implementation of MUMC for medical visual question answering (VQA), accepted at MICCAI 2023.

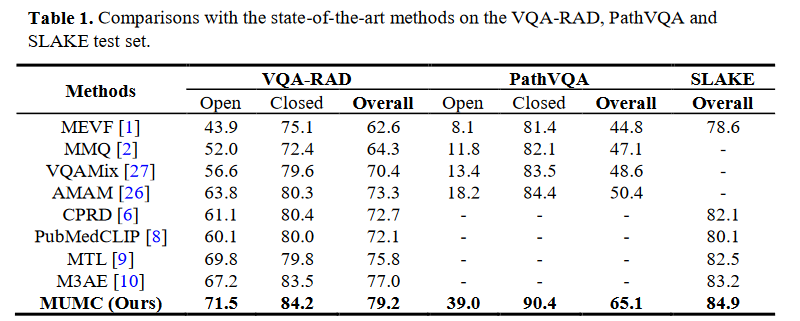

MUMC achieves higher accuracy than other state-of-the-art (SOTA) methods on three public medical VQA datasets: VQA-RAD, PathVQA, and Slake. Paper link here.
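The name MUMC refers to its pre-training objectives: masked vision-and-language modeling plus unimodal and multimodal contrastive losses. As a rough, generic illustration of what a contrastive loss computes, the sketch below implements a symmetric InfoNCE loss over a batch of paired embeddings in plain Python. It is not the MUMC implementation. The function names, the temperature of 0.07, and the toy vectors are assumptions for illustration. The same function could score pairs within one modality (unimodal) or across image and text (multimodal); exactly which pairs MUMC contrasts, and with which hyperparameters, is defined in the paper and this repository's code.

```python
import math
from typing import List

Vector = List[float]


def normalize(v: Vector) -> Vector:
    """Scale a vector to unit L2 norm (assumes v is non-zero)."""
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v]


def info_nce(queries: List[Vector], keys: List[Vector], temperature: float = 0.07) -> float:
    """Mean InfoNCE loss: queries[i] and keys[i] are the positive pair,
    every other key in the batch serves as a negative for queries[i]."""
    q = [normalize(v) for v in queries]
    k = [normalize(v) for v in keys]
    total = 0.0
    for i, qi in enumerate(q):
        # cosine similarity to every key, scaled by the (assumed) temperature
        logits = [sum(a * b for a, b in zip(qi, kj)) / temperature for kj in k]
        # numerically stable log-sum-exp over the batch
        m = max(logits)
        log_sum_exp = m + math.log(sum(math.exp(x - m) for x in logits))
        # cross-entropy with the matching key as the target class
        total += log_sum_exp - logits[i]
    return total / len(q)


def symmetric_contrastive(a: List[Vector], b: List[Vector], temperature: float = 0.07) -> float:
    """Average of the a->b and b->a directions, e.g. image-to-text and text-to-image."""
    return 0.5 * (info_nce(a, b, temperature) + info_nce(b, a, temperature))


if __name__ == "__main__":
    # hypothetical toy embeddings: row i of `images` is paired with row i of `texts`
    images = [[1.0, 0.0], [0.0, 1.0]]
    texts = [[0.9, 0.1], [0.1, 0.9]]
    print("matched pairs:", symmetric_contrastive(images, texts))
    print("mismatched pairs:", symmetric_contrastive(images, texts[::-1]))
```

Matched pairs should give a much lower loss than mismatched ones. A real training loop would compute this on batched tensors rather than Python lists.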

This repository builds on our previous work and is inspired by @Junnan Li's work. We sincerely thank them for sharing their code.

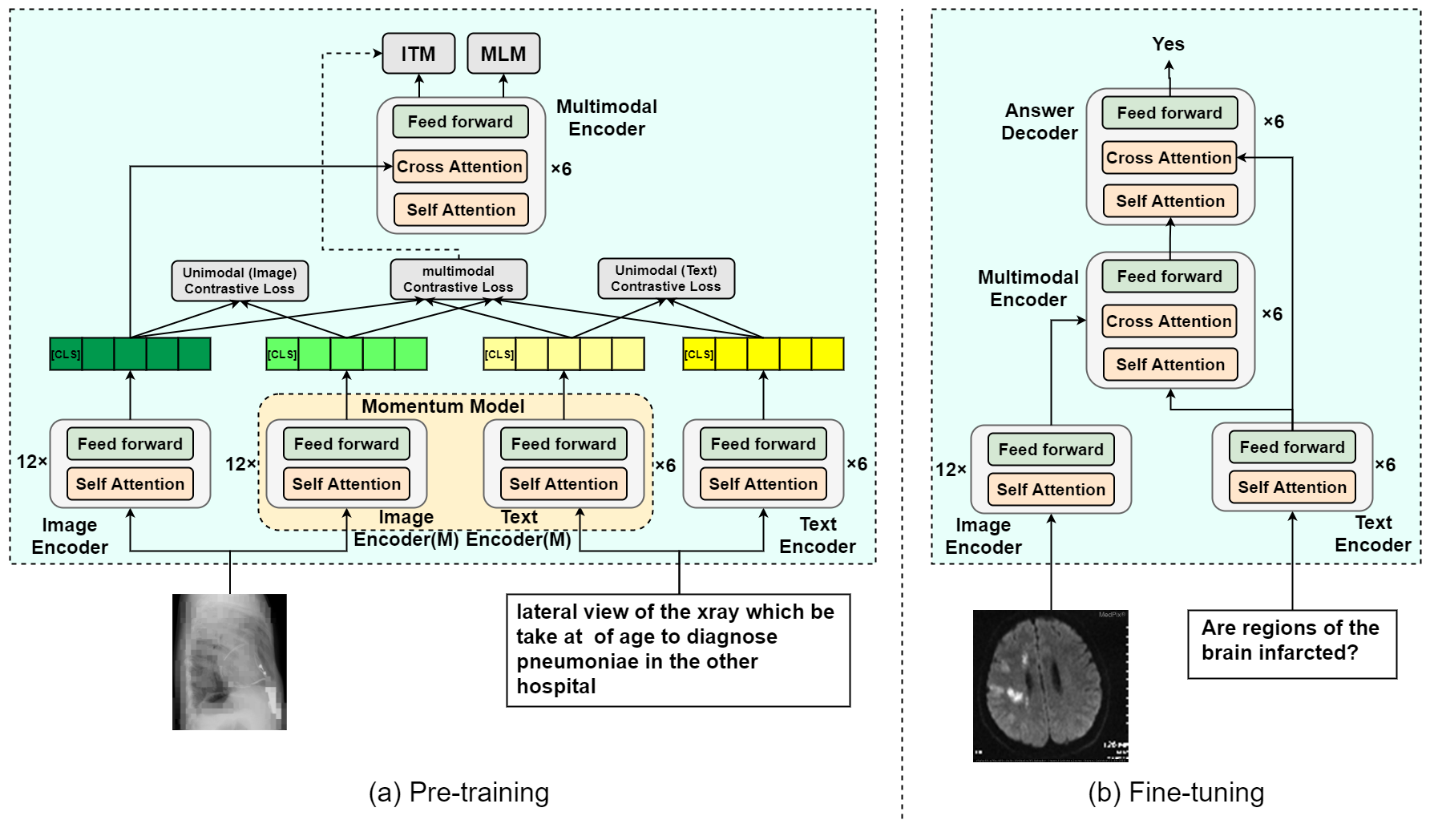

Figure 1: Overview of the proposed MUMC model.

Run the following command to install the required packages:

```bash
pip install -r requirements.txt
```

Organize the datasets in the following structure:

```
+--clef2022
| +--train
| | +--ImageCLEFmedCaption_2022_train_000001.jpg
| | +--ImageCLEFmedCaption_2022_train_000002.jpg
| | +--...
| +--valid
| | +--ImageCLEFmedCaption_2022_valid_084258.jpg
| | +--ImageCLEFmedCaption_2022_valid_084259.jpg
| | +--...
| +--clef22022_train.json
| +--clef22022_valid.json
+--data_RAD
| +--images
| | +--synpic100132.jpg
| | +--synpic100176.jpg
| | +--...
| +--trainset.json
| +--testset.json
| +--answer_list.json
+--data_PathVQA
| +--images
| | +--train
| | | +--train_0000.jpg
| | | +--train_0001.jpg
| | | +--...
| | +--val
| | | +--val_0000.jpg
| | | +--val_0001.jpg
| | | +--...
| | +--test
| | | +--test_0000.jpg
| | | +--test_0001.jpg
| | | +--...
| +--pathvqa_test.json
| +--pathvqa_train.json
| +--pathvqa_val.json
| +--answer_trainval_list.json
+--data_Slake
| +--imgs
| | +--xmlab0
| | | +--source.jpg.jpg
| | | +--question.json
| | | +--...
| | +--...
| +--slake_test.json
| +--slake_train.json
| +--slake_val.json
| +--answer_list.json
```
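Before training, it can help to confirm that the folders match this layout. The script below is a hypothetical helper, not part of the repository. It checks only the top-level folders and annotation files listed in the tree (not individual images), and it takes the directory containing `clef2022`, `data_RAD`, etc. as an argument, since where that directory should live relative to the code is not stated here.

```python
import sys
from pathlib import Path
from typing import Dict, List

# Folders and annotation files each dataset is expected to contain, copied from the tree above.
# Image folders are checked only for existence, not for their contents.
EXPECTED: Dict[str, List[str]] = {
    "clef2022": ["train", "valid", "clef22022_train.json", "clef22022_valid.json"],
    "data_RAD": ["images", "trainset.json", "testset.json", "answer_list.json"],
    "data_PathVQA": [
        "images/train", "images/val", "images/test",
        "pathvqa_train.json", "pathvqa_val.json", "pathvqa_test.json",
        "answer_trainval_list.json",
    ],
    "data_Slake": ["imgs", "slake_train.json", "slake_val.json", "slake_test.json", "answer_list.json"],
}


def missing_paths(root: str, datasets: List[str]) -> List[str]:
    """Return the expected paths (relative to root) that do not exist, in EXPECTED order."""
    base = Path(root)
    missing = []
    for name in datasets:
        for rel in EXPECTED[name]:
            if not (base / name / rel).exists():
                missing.append(str(Path(name) / rel))
    return missing


if __name__ == "__main__":
    root = sys.argv[1] if len(sys.argv) > 1 else "."
    problems = missing_paths(root, list(EXPECTED))
    for p in problems:
        print("missing:", p)
    print("layout OK" if not problems else f"{len(problems)} path(s) missing")
```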

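Pre-train the model:

```bash
python3 pretrain.py --output_dir ./pretrain
```

Fine-tune the pre-trained model on a medical VQA dataset:

```bash
# choose medical vqa dataset (rad, pathvqa, slake)
python3 train_vqa.py --dataset_use rad --checkpoint ./pretrain/med_pretrain_29.pth --output_dir ./output/rad
```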
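To fine-tune on all three datasets in one go, the fine-tuning command can be wrapped in a small driver script. This is a hypothetical helper, not part of the repository: it reuses only the flags shown in the command, assumes the checkpoint path from the `rad` example, and assumes each dataset writes to its own `./output/<dataset>` directory, following that same example.

```python
import subprocess
from typing import List

# Values accepted by --dataset_use, per the comment in the fine-tuning command.
DATASETS = ["rad", "pathvqa", "slake"]


def finetune_command(dataset: str, checkpoint: str = "./pretrain/med_pretrain_29.pth") -> List[str]:
    """Build the train_vqa.py command line for one dataset (output dir naming is assumed)."""
    if dataset not in DATASETS:
        raise ValueError(f"unknown dataset {dataset!r}; expected one of {DATASETS}")
    return [
        "python3", "train_vqa.py",
        "--dataset_use", dataset,
        "--checkpoint", checkpoint,
        "--output_dir", f"./output/{dataset}",
    ]


if __name__ == "__main__":
    for name in DATASETS:
        cmd = finetune_command(name)
        print("running:", " ".join(cmd))
        subprocess.run(cmd, check=True)  # raises CalledProcessError on the first failing run
```

Running the datasets sequentially with `check=True` stops at the first failure, so a broken dataset path is not masked by later runs.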

You can download the pre-trained weights through the following link.

If you find this work useful, please cite:

```bibtex
@inproceedings{MUMC,
  title     = {Masked Vision and Language Pre-training with Unimodal and Multimodal Contrastive Losses for Medical Visual Question Answering},
  author    = {Pengfei Li and Gang Liu and Jinlong He and Zixu Zhao and Shenjun Zhong},
  booktitle = {Medical Image Computing and Computer Assisted Intervention -- MICCAI 2023},
  year      = {2023},
  pages     = {374--383},
  publisher = {Springer Nature Switzerland}
}
```

This project is released under the MIT License.