Retrieval augmented generation demos with Llama-2, Mistral-7b, Zephyr-7b

The demos use quantized models and run on CPU with acceptable inference time.

Set up your development environment with conda, which you can install directly if you do not already have it. Create the environment:

    conda env create --name rag -f environment.yaml --force

Activate the environment.

    conda activate rag

Download and save the models in ./models and update config.yaml. The models used in this demo are:

- Embeddings

- LLMs

- Rerankers:
  - facebook/tart-full-flan-t5-xl: save in models/tart-full-flan-t5-xl/
  - BAAI/bge-reranker-base: save in models/bge-reranker-base/

- Propositionizer
  - chentong00/propositionizer-wiki-flan-t5-large: save in models/propositionizer-wiki-flan-t5-large/
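One possible way to fetch the rerankers and the propositionizer into the folders named above is the `huggingface_hub` package. This is only a sketch: it assumes `huggingface_hub` is installed and that the repo IDs resolve on the Hugging Face Hub, and `local_dir_for` and `download_all` are hypothetical helper names, not part of this repository.

```python
from pathlib import Path

# Repo IDs taken from the list above; the local layout mirrors the
# "save in models/<name>/" convention used there.
MODEL_REPOS = [
    "facebook/tart-full-flan-t5-xl",
    "BAAI/bge-reranker-base",
    "chentong00/propositionizer-wiki-flan-t5-large",
]


def local_dir_for(repo_id: str, root: str = "models") -> Path:
    """Map a repo ID such as 'org/name' to '<root>/<name>' (hypothetical helper)."""
    name = repo_id.split("/")[-1]
    return Path(root) / name


def download_all(root: str = "models") -> None:
    """Download every listed repo into its folder under `root` (hypothetical helper)."""
    # Imported lazily so the path helper works without the package installed.
    from huggingface_hub import snapshot_download

    for repo_id in MODEL_REPOS:
        target = local_dir_for(repo_id, root)
        target.mkdir(parents=True, exist_ok=True)
        snapshot_download(repo_id=repo_id, local_dir=str(target))
```

Calling `download_all()` from the repository root would then populate `./models`. The paths in config.yaml still need to be updated by hand to match.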

Since each model type has its own prompt format, include the format in ./src/prompt_templates.py. For example, the format used in openbuddy models is

    _openbuddy_format = """{system}

    User: {user}

    Assistant:"""

Refer to the file for more details.

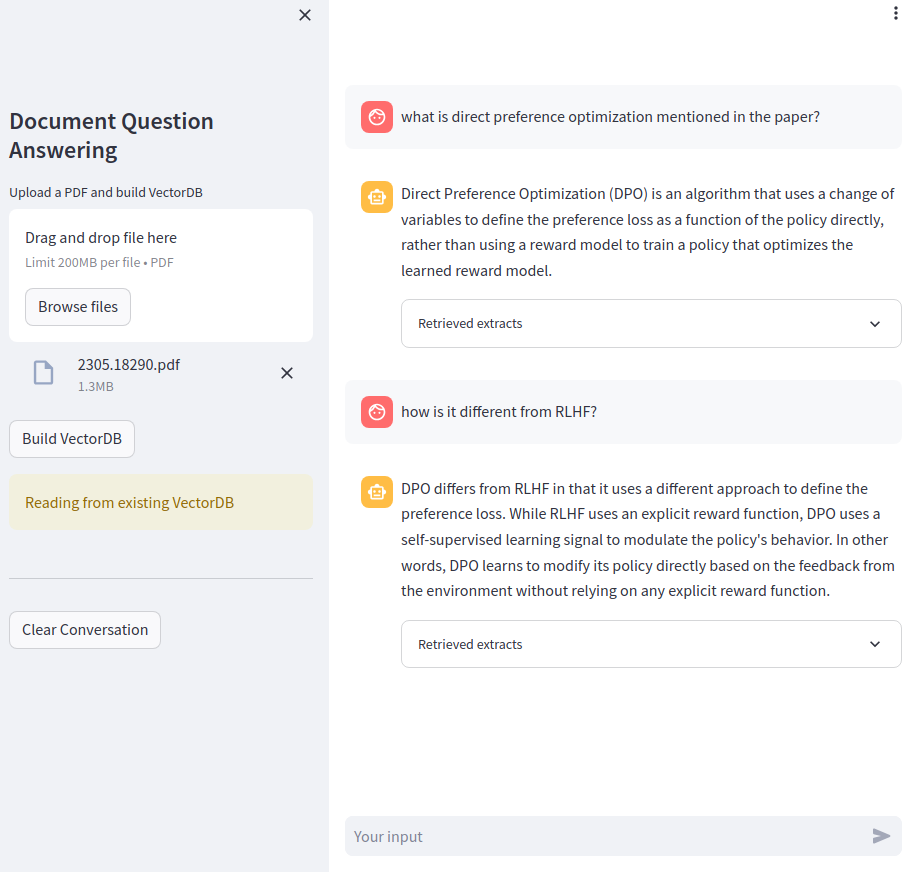

We use Streamlit as the interface for the demos. There are two demos:

- Retrieval QA

      streamlit run app.py

- Conversational retrieval QA

      streamlit run app_conv.py

To get started, upload a PDF and click on Build VectorDB. Creating the vector DB will take a while.
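As a rough picture of why building the vector DB takes time, the toy sketch below splits a document into chunks, embeds every chunk, and ranks chunks against a query. It is not the demo's implementation: the word-count "embedding" stands in for a real embedding model, and all function names here are hypothetical.

```python
import math
import re
from collections import Counter


def chunk_text(text: str, chunk_size: int = 5) -> list[str]:
    """Split text into chunks of at most `chunk_size` words."""
    words = text.split()
    return [" ".join(words[i:i + chunk_size]) for i in range(0, len(words), chunk_size)]


def embed(text: str) -> Counter:
    """Toy embedding: lowercase word counts (stand-in for a real embedding model)."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse word-count vectors."""
    dot = sum(a[t] * b[t] for t in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def build_index(text: str, chunk_size: int = 5) -> list[tuple[str, Counter]]:
    """Embed every chunk once; with a real model this is the slow step."""
    return [(chunk, embed(chunk)) for chunk in chunk_text(text, chunk_size)]


def retrieve(index: list[tuple[str, Counter]], query: str, k: int = 1) -> list[str]:
    """Return the k chunks most similar to the query."""
    q = embed(query)
    ranked = sorted(index, key=lambda item: cosine(q, item[1]), reverse=True)
    return [chunk for chunk, _ in ranked[:k]]


doc = (
    "Quantized models run on CPU. "
    "Streamlit serves the demo interface. "
    "Rerankers reorder retrieved passages by relevance."
)
index = build_index(doc)
top = retrieve(index, "which interface does the demo use")
print(top)
```

In a real pipeline each chunk goes through an embedding model, so indexing time grows with the length of the uploaded PDF.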