The official PyTorch implementation of the IEEE Transactions on Multimedia 2021 paper "Fast Adaptive Meta-Learning for Few-Shot Image Generation" (FAML).

If this code is useful to you, please consider citing the paper:

    @article{phaphuangwittayakul2021fast,
      title={Fast Adaptive Meta-Learning for Few-shot Image Generation},
      author={Phaphuangwittayakul, Aniwat and Guo, Yi and Ying, Fangli},
      journal={IEEE Transactions on Multimedia},
      year={2021},
      publisher={IEEE}
    }

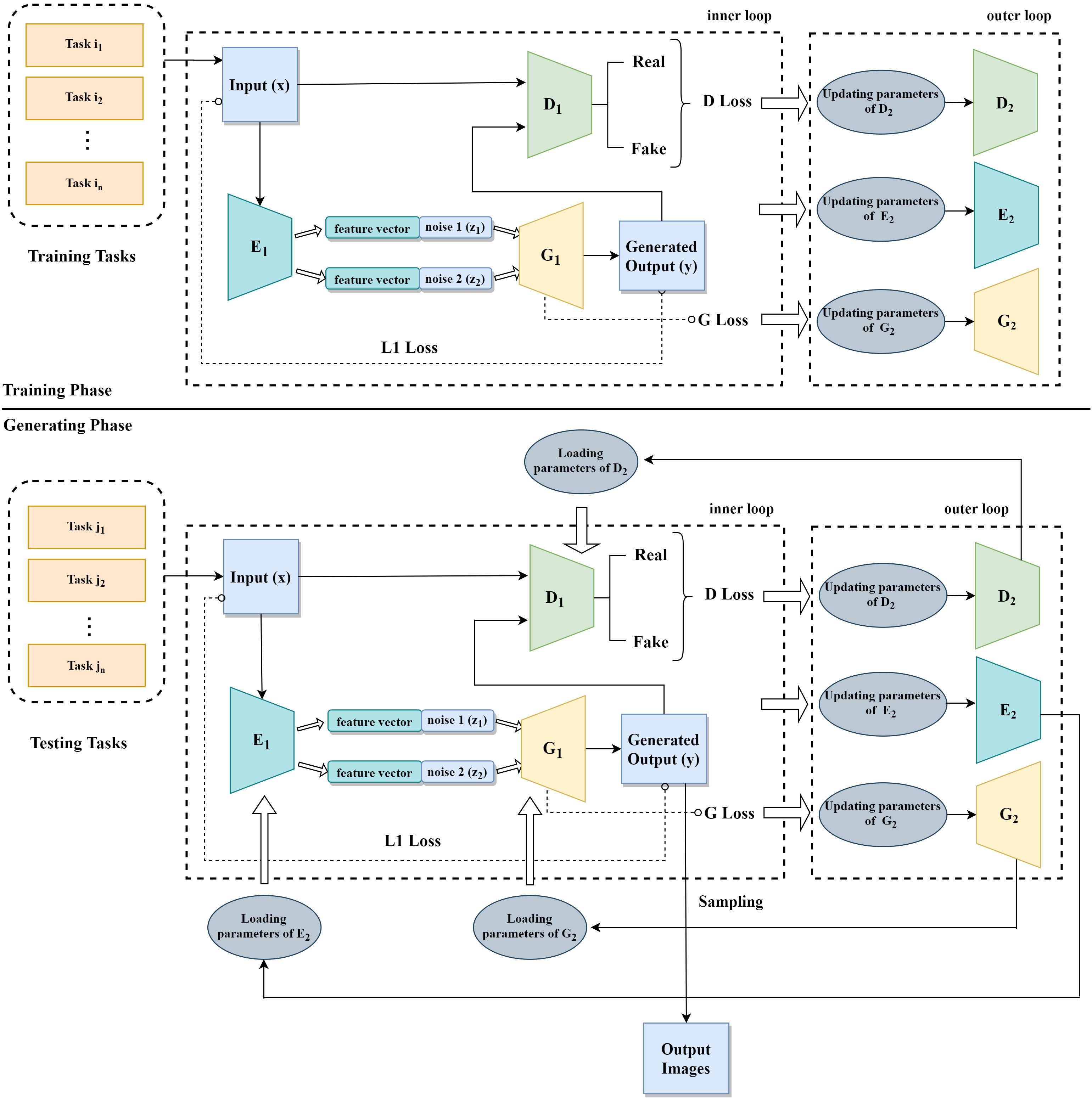

This study proposes Fast Adaptive Meta-Learning (FAML), a few-shot image generation method built on a generative adversarial network (GAN) and an encoder network. The model can generate new, realistic images of unseen target classes from only a few samples.
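FIGR, which parts of this code build on, meta-trains its GAN with Reptile: copy the shared weights, adapt the copy to one sampled task with a few SGD steps, then move the shared initialization a small step toward the adapted weights. The pure-Python sketch below shows only that inner/outer loop, on a toy one-parameter regression problem (fit y = slope * x) instead of a GAN. The function names, the toy task family, and the step sizes are assumptions for illustration; FAML's actual update in this repository may differ.

```python
import random


def inner_adapt(theta, slope, steps=5, lr=0.1, rng=None):
    """Adapt a copy of theta to one task: fit y = slope * x by SGD on squared error.

    Toy stand-in (assumption) for fine-tuning a generator on a few images of one class.
    """
    rng = rng or random.Random()
    phi = theta  # task-specific copy of the shared parameter
    for _ in range(steps):
        x = rng.uniform(-1.0, 1.0)
        y = slope * x
        grad = 2.0 * (phi * x - y) * x  # d/dphi of (phi * x - y) ** 2
        phi -= lr * grad
    return phi


def reptile(task_slopes, meta_steps=2000, meta_lr=0.1, seed=0):
    """Reptile outer loop: nudge the shared init toward each task's adapted weights."""
    rng = random.Random(seed)
    theta = 0.0
    for _ in range(meta_steps):
        slope = rng.choice(task_slopes)           # sample a task
        phi = inner_adapt(theta, slope, rng=rng)  # adapt a copy to it
        theta += meta_lr * (phi - theta)          # Reptile meta-update
    return theta


if __name__ == "__main__":
    # With tasks 1, 2 and 3 the learned initialization settles near their mean.
    print(reptile([1.0, 2.0, 3.0]))
```

The meta-update needs no second-order gradients, which is why Reptile-style training is cheap enough to wrap around a full GAN.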

Clone the repository and install the dependencies:

    $ git clone https://github.com/phaphuang/FAML.git
    $ cd FAML
    $ pip install -r requirements.txt

- MNIST: 10 balanced classes of handwritten digits.
- Omniglot: 1623 handwritten characters from 50 different alphabets; each character was drawn online by 20 different people via Amazon's Mechanical Turk.
- VGG-Faces: 2395 categories.
- miniImageNet: 100 classes with 600 color images of 84×84 pixels per class.
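Few-shot generation on these datasets is usually organized into tasks: the classes are split so that target classes are unseen during training, and each task draws a handful of images from a few classes. For example, Omniglot has 1623 × 20 = 32,460 images and miniImageNet has 100 × 600 = 60,000. The sketch below assumes a hypothetical in-memory index mapping class names to image file names; `split_classes`, `sample_task`, and the hold-out size of 300 are illustrative assumptions, not this repository's loaders or the paper's split.

```python
import random
from typing import Dict, List, Sequence, Tuple


def split_classes(classes: Sequence[str], n_val: int,
                  rng: random.Random) -> Tuple[List[str], List[str]]:
    """Shuffle class names and hold out n_val of them as unseen target classes."""
    shuffled = sorted(classes)
    rng.shuffle(shuffled)
    return shuffled[n_val:], shuffled[:n_val]


def sample_task(index: Dict[str, Sequence[str]], classes: Sequence[str],
                n_way: int, k_shot: int, rng: random.Random) -> Dict[str, List[str]]:
    """Draw n_way distinct classes from `classes`, then k_shot distinct images from each."""
    eligible = sorted(c for c in classes if len(index[c]) >= k_shot)
    if len(eligible) < n_way:
        raise ValueError("not enough classes with at least k_shot images")
    chosen = rng.sample(eligible, n_way)
    return {c: rng.sample(list(index[c]), k_shot) for c in chosen}


# Toy index shaped like Omniglot (hypothetical file names): 1623 classes x 20 images.
index = {f"char{i:04d}": [f"char{i:04d}_{j:02d}.png" for j in range(20)]
         for i in range(1623)}
rng = random.Random(0)
# Arbitrary hold-out size for illustration, not the paper's split.
train_classes, val_classes = split_classes(list(index), n_val=300, rng=rng)
# One task: 4 images of a single unseen class, the typical few-shot generation setting.
task = sample_task(index, val_classes, n_way=1, k_shot=4, rng=rng)
```

Sampling classes first and images second keeps every task balanced, even when classes have different numbers of images.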

By default, the datasets are stored under the data/ folder.

MNIST and Omniglot are downloaded automatically when you run the training script.

VggFace

- Download the modified 32x32 and 64x64 VggFace datasets along with their training and validation tasks.
- Place all downloaded files in data/vgg/.

miniImageNet

- Download the modified 32x32 and 64x64 miniImageNet datasets along with their training and validation tasks.
- Place all downloaded files in data/miniimagenet/.
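Because VggFace and miniImageNet must be placed by hand, a quick pre-flight check can catch a missing or empty folder before training starts. The sketch below only checks the two folder names given in this README; the helper name `missing_dataset_dirs` is hypothetical, and it does not verify individual file names, since those are not listed here.

```python
from pathlib import Path
from typing import List

# Manually downloaded dataset folders named in this README (relative to the data root).
EXPECTED_DIRS = ("vgg", "miniimagenet")


def missing_dataset_dirs(data_root: str = "data") -> List[str]:
    """Return the expected dataset folders under data_root that are absent or empty."""
    root = Path(data_root)
    missing = []
    for name in EXPECTED_DIRS:
        folder = root / name
        # An existing but empty folder is treated as missing too.
        if not folder.is_dir() or not any(folder.iterdir()):
            missing.append(str(folder))
    return missing
```

Run it from the repository root, for example `print(missing_dataset_dirs())`; an empty list means both folders exist and contain files.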

Train a model on MNIST at 32x32 resolution:

    $ python train.py --dataset Mnist --height 32 --length 32

To list all available training options:

    $ python train.py --help

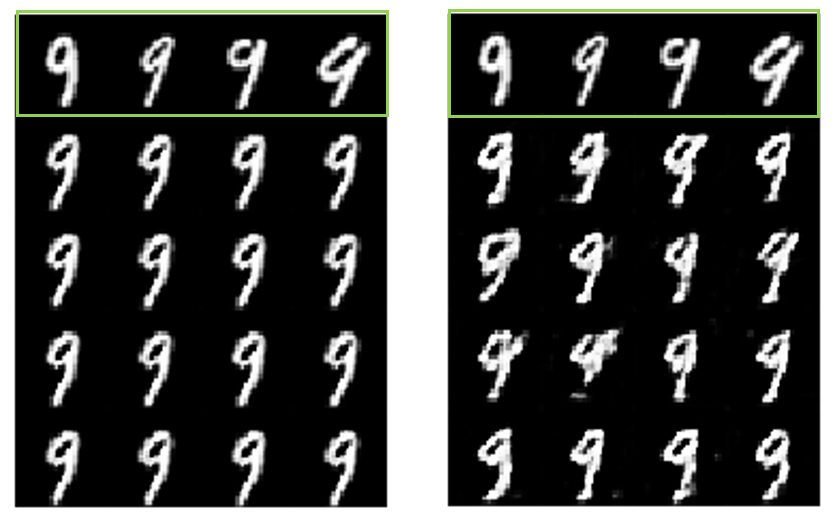

MNIST

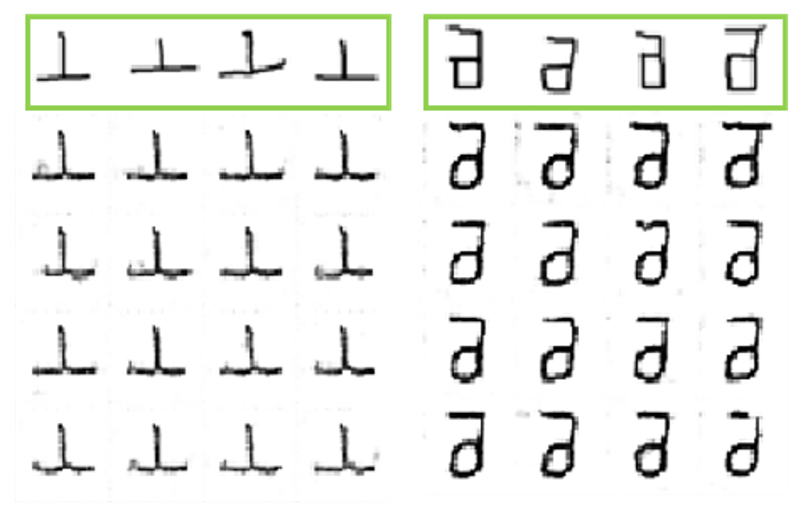

Omniglot

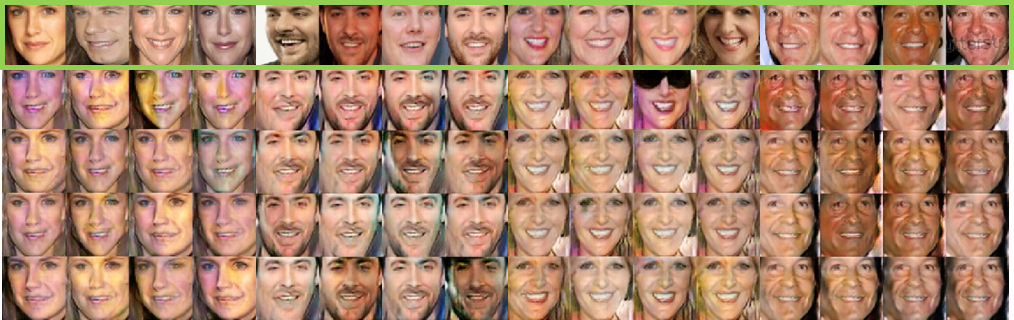

VggFace

miniImageNet

Parts of the code are built upon FIGR and RaGAN; we thank their authors for their great work. FAML is freely available for non-commercial use. If you run into any problems, feel free to contact us by e-mail.
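RaGAN, the relativistic average GAN, scores each real sample relative to the mean critic score of the fake batch, D(x_r) = sigmoid(C(x_r) - E[C(x_f)]), and symmetrically for fake samples. The pure-Python sketch below writes out those standard loss formulas over lists of raw critic outputs C(x). The function names are hypothetical, and it is an assumption for illustration that the adversarial loss in this repository takes exactly this form.

```python
import math
from statistics import fmean
from typing import Sequence, Tuple


def softplus(z: float) -> float:
    """Numerically stable log(1 + exp(z))."""
    return max(z, 0.0) + math.log1p(math.exp(-abs(z)))


def ragan_losses(real_scores: Sequence[float],
                 fake_scores: Sequence[float]) -> Tuple[float, float]:
    """Relativistic average GAN losses from raw critic outputs C(x).

    Uses -log(sigmoid(z)) = softplus(-z) and -log(1 - sigmoid(z)) = softplus(z).
    Returns (discriminator_loss, generator_loss).
    """
    mean_real = fmean(real_scores)
    mean_fake = fmean(fake_scores)
    real_rel = [c - mean_fake for c in real_scores]  # C(x_r) - E[C(x_f)]
    fake_rel = [c - mean_real for c in fake_scores]  # C(x_f) - E[C(x_r)]
    # Discriminator: real should look "more real" than average fake, and vice versa.
    d_loss = fmean(softplus(-z) for z in real_rel) + fmean(softplus(z) for z in fake_rel)
    # Generator: the same objective with the roles of real and fake swapped.
    g_loss = fmean(softplus(-z) for z in fake_rel) + fmean(softplus(z) for z in real_rel)
    return d_loss, g_loss
```

When the critic cannot tell the batches apart (all scores equal), both losses equal 2·log 2. In a PyTorch training loop the same expressions map onto tensor operations such as `torch.nn.functional.softplus` and `Tensor.mean`.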