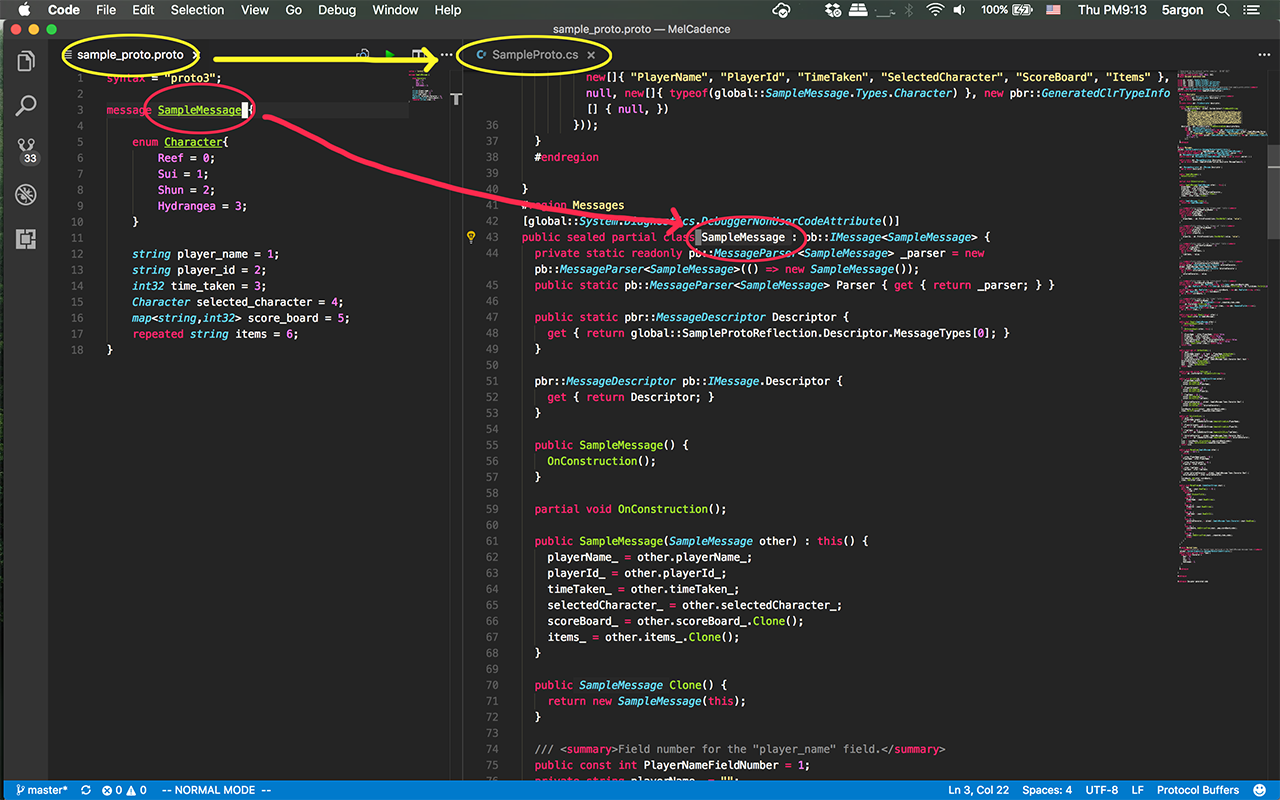

Do you want to integrate protobuf as a data class, game saves, messages to the server, etc. in your game? Now you can put those .proto files directly in the project, work on them, and have the editor script in here generate the classes for you.

- Install `protoc` on the machine. This plugin does not include the `protoc` command and will try to run it from your command line (via .NET `System.Diagnostics.Process.Start`). Please see https://github.com/google/protobuf and install it. Confirm with `protoc --version` in your command prompt/terminal. Note that the version of `protoc` you use depends on how recent a C# `Google.Protobuf` library you want to use, because `protoc` may generate code that is not usable with an older C# library. More on this later.

- Put files in your Unity project. This is also Unity Package Manager compatible. You can pull from online to your project directly.

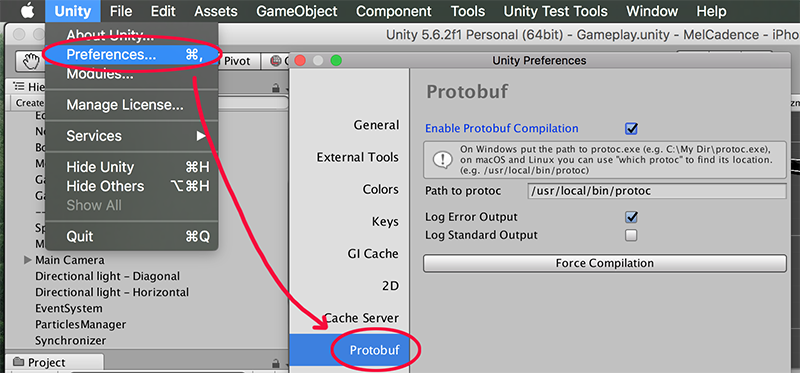

- You can access the settings in Preferences > Protobuf. Here you need to put a path to your `protoc` executable.

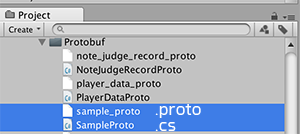

As soon as you import, reimport, or modify (but not move) a .proto file in your project, the plugin compiles only that file, to the same location as the file. If you want to temporarily stop this, there is a checkbox in the settings; you can then manually push the button there if you like. Note that deleting a .proto file will not remove its generated class.

The next problem is that your generated classes reference the external library Google.Protobuf, which you also need to include in the game client so it is able to serialize to Protobuf binary. Not only that, protobuf-unity itself also has a Runtime assembly with additional Protobuf tooling. So both the assembly definition (.asmdef) where your generated classes reside and this package need the Google.Protobuf C# library.

It is not bundled with this repository. You should download the NuGet package, then use an archive extraction tool to get the .dll out. It contains targets such as .NET 4.6 and .NET Standard 1.0/2.0; choose the one matching your Project Settings.

Over the years, Google.Protobuf has come to require more and more .NET dependencies that ultimately are not included in Unity.

For example, if it asks for System.Memory.dll because it wants to use the Span class but Unity does not support it yet, you may also download that dll and forcibly put it in the project. That in turn will ask for the missing references System.Runtime.CompilerServices.Unsafe and System.Buffers.

Note that the reason these libraries aren't included in Unity is likely that something does not work, or only partially works with wrong behavior, on some platforms that Unity is committed to. So it is best not to use anything in these libraries beyond satisfying Google.Protobuf.dll, and pray that Google.Protobuf.dll itself doesn't use something bad.

If you do find a problem when forcibly including an exotic .NET dll such as System.Memory.dll, you may want to downgrade the C# Google.Protobuf.dll and protoc together until they no longer require the problematic dependencies. I have listed several breakpoint versions where the next one changes its requirements here.

- Download the csharp grpc plugin and put it somewhere safe.

- Set the path to this plugin in the editor settings shown above. Leave it empty or as it is if you don't want to use gRPC.

- When you write a `.proto` file, normally you need to use the `protoc` command line to generate C# classes. This plugin automatically finds all the `.proto` files in your Unity project, generates them all, and outputs each class file at the same place as its `.proto` file. It automatically regenerates when you change any `.proto` file. If there is an error, the plugin will report it via the Console.

- You can use the `import` statement in your `.proto` file, which normally looks for files in all the `--proto_path` folders given to the command line. (You cannot use a relative path such as `../` in `import`.) With protobuf-unity, `--proto_path` will be all parent folders of all `.proto` files in your Unity project combined. This way you can use `import` to refer to any `.proto` file within your Unity project. (They should not be in a UPM package though; I used `Application.dataPath` as the path base and packages aren't in there.) Also, the `google/protobuf/` path is usable, for example for utilizing well-known types or extending custom options.

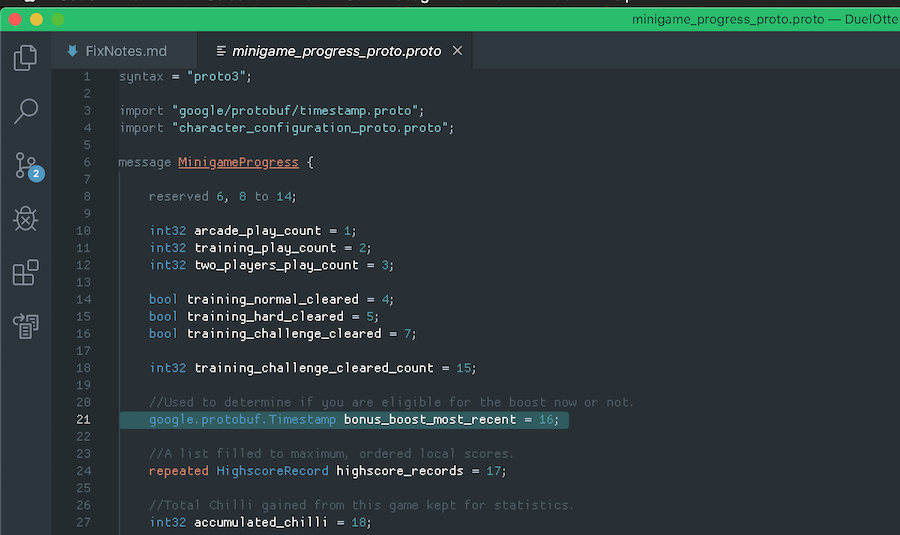

Maybe you are thinking about storing time, for your daily resets, etc. Storing as C# .ToString() of DateTime/DateTimeOffset is not a good idea. Storing it as an integer seconds / milliseconds from Unix epoch and converting to DateTimeOffset later is a better idea.

But a generic int32/int64 is error-prone, because when you look at it later it is not obvious what the number is supposed to represent. Google already has Timestamp ready for use, waiting in the protobuf DLL you need to include in the Unity project (Google.Protobuf.WellKnownTypes.___), so you don't even have to copy Google's .proto of Timestamp into your game. (That would instead cause a duplicate declaration compile error.)

Google's Timestamp consists of 2 number fields: an int64 for seconds elapsed since the Unix epoch and an int32 for nanoseconds within that second for extra accuracy. What's even more timesaving is that Google provides utility methods to interface with C#. (Such as public static Timestamp FromDateTimeOffset(DateTimeOffset dateTimeOffset);)
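To make the two-field layout concrete, here is a minimal sketch of the arithmetic in plain JavaScript (chosen to match the Node server code later in this README). `toTimestampParts` and `fromTimestampParts` are hypothetical helpers written for illustration; they are not part of Google's library, and in C# you would rely on the provided `Timestamp` utility methods instead.

```javascript
// Hypothetical helpers mirroring the seconds + nanos layout of Timestamp.
// Illustration only; not part of any Protobuf library.

// Split a JS Date (millisecond precision) into whole seconds and leftover nanoseconds.
function toTimestampParts(date) {
  const millis = date.getTime();
  // Math.floor keeps the leftover part non-negative, even before 1970.
  const seconds = Math.floor(millis / 1000);
  // Remainder of the current second, converted from milliseconds to nanoseconds.
  const nanos = (millis - seconds * 1000) * 1e6;
  return { seconds, nanos };
}

// Rebuild a JS Date; anything finer than a millisecond is dropped.
function fromTimestampParts({ seconds, nanos }) {
  return new Date(seconds * 1000 + Math.floor(nanos / 1e6));
}

const parts = toTimestampParts(new Date("2019-09-02T10:38:59.737Z"));
console.log(parts);
console.log(fromTimestampParts(parts).toISOString());
```

The nanosecond field is what lets the stored value keep sub-second precision without resorting to floating point.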

Here's how you do it in your .proto file.

The `google/protobuf/` path is seemingly available for import out of nowhere. You then need to fully qualify the type with `google.protobuf.__`, since Google used `package google.protobuf;`.

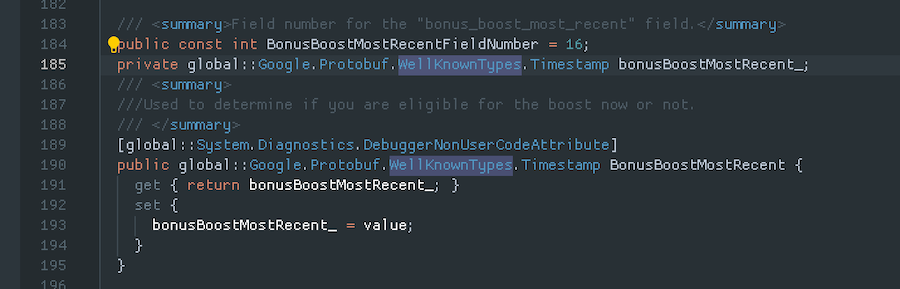

Resulting C# class looks like this :

See the other predefined well-known types. You will see wrapper types for typical data types such as `uint32` as well. Another useful one is `google.protobuf.Struct`, which can store JSON-like key-value pairs where the key is a string and the value is of varying type. Use `google.protobuf.Value` for only the varying value part of the `Struct`. I think that, generally, when you think you are going to use `google.protobuf.Any`, you should think of `Struct` first. (Unless it is really a byte stream.)

- Smaller size, without big luggage like the type information you get when using `System.Serializable` + `BinaryFormatter`.

- You could use Unity's `ScriptableObject`, but one well-known gotcha is that Unity can't serialize `Dictionary`. Here you can use `map<,>` in protobuf together with the available protobuf types. Any and Oneof could be very useful too.

- `System.Serializable` is unpredictably terrible at both forward and backward compatibility, which may affect your business badly. (e.g. you want to change how your game's monetization works, so the timed ads that were saved in the save file are now unnecessary, but because of the inflexibility you have to live with them forever in the code.)

- As a Unity-specific problem, just rename your `asmdef` and the serialized file becomes unreadable without binder hacks, because `BinaryFormatter` needs the fully qualified assembly name.

- Protobuf is flexible in that it is a generic C# library, and the serialized file could potentially be read in other languages, like on your game server. For more Unity-tuned serialization, you may want to check out Odin Serializer.

- A Protobuf-generated C# class is powerful. It comes with a sensible `partial` and some useful data merging methods which would otherwise be tedious and buggy to write for a class-type variable. (e.g. it understands how to handle list-like and dictionary-like data, the `repeated` field and the `map` field.)

- Programming in `.proto` to generate a C# class is simply faster and more readable than writing C# to get the same function. (e.g. it has properties, null checks, bells and whistles, and not just all C# `public` fields.)

Here's one interesting rebuttal against Protobuf : http://reasonablypolymorphic.com/blog/protos-are-wrong/ And here's one interesting counter argument from the author : https://news.ycombinator.com/item?id=18190005

Use your own judgement if you want it or not!

Now that you can't use the Mono backend on iOS anymore, there is a problem: IL2CPP does not support `System.Reflection.Emit`. Basically, you should avoid anything that triggers reflection as much as possible.

Luckily, most of the core functions do not use reflection. The most likely way you will trigger reflection is `protobufClassInstance.ToString()` (or attempting to `Debug.Log` any protobuf instance). It then uses reflection to figure out the structure of all the data in order to print a pretty JSON-formatted string. To alleviate this you might override `ToString` so that it pulls the data out and builds a string directly from the generated class's fields. I am not sure which other functions might trigger reflection.

You should see the discussion in this and this thread. The gist of it is that Unity failed to preserve some information needed for reflection, which causes the reflection to fail at runtime.

And lastly the latest protobuf (3.6.0) has something related to this issue. Please see https://github.com/google/protobuf/blob/master/CHANGES.txt So it is recommended to get the latest version!

For complete understanding I suggest you visit Google's document but here are some gotchas you might want to know before starting.

- Use CamelCase (with an initial capital) for message names, for example, SongServerRequest. Use underscore_separated_names for field names, for example, song_name.

- By default, with C# `protoc`, the `underscore_names` become `PascalCase` and `camelCase` in the generated code.

- The `.proto` file name matters, and Google suggests you use `underscore_names.proto`. It becomes the output file name in `PascalCase`. (It is not related to the file's content or the message definitions inside at all.)

- A comment in your `.proto` file carries over to your generated class and fields if that comment is placed over them. Multiline comments are supported.

- Field indexes 1 to 15 have the lowest storage overhead, so put fields that are likely to occur often in this range.

- The generated C# class will be `sealed partial`. You can write more properties to design new access or write points.

- You cannot use an `enum` as a `map`'s key.

- You cannot use duplicated `enum` value names even if they are not in the same `enum` type. You may have to prefix your `enum` values, especially if they sound generic, like `None`.

- It's not `int` but `int32`. And this data type is not efficient for negative numbers. (In that case use `sint32`.)

- If you put a `//` comment (or a multiline one) over a field or message definition, it will be transferred nicely to a C# comment.

- It is possible to generate a C# namespace.

- The generated class contains a parameterless constructor definition, but you can still step in and add something more because it calls `partial void OnConstruction()`, which has no definition; you can add one in your handwritten `partial`. This is C#'s partial method feature, which works similarly to a `partial` class.

- One thing to note about the timing of `OnConstruction`, though: it is called before any data is populated into the instance. For example, say I have a `repeated` `int32` of highscores and I want this list to hold exactly 10 scores at any moment. To ensure this I add some logic in `OnConstruction` that fills it with empty scores until it has a count of 10. However, I later found that when loading from a save file that already has scores (let's say 10 scores, as intended), `OnConstruction` comes before the `repeated` list is populated from the stream. My code sees the list as empty when it is actually going to be populated a bit later. The result is that I get 20 scores in the deserialized list.
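Two of the points in the list above, the cheap field numbers 1 to 15 and `int32` versus `sint32` for negative numbers, come down to varint arithmetic in the Protobuf wire format. The sketch below works the numbers out in plain JavaScript; `varintSize`, `tagSize`, `int32Size`, `zigZag32`, and `sint32Size` are hypothetical helper names made up for illustration, not library functions.

```javascript
// Illustration-only helpers (hypothetical names) for Protobuf wire-format sizes.

// Number of bytes a non-negative integer takes as a varint (7 payload bits per byte).
function varintSize(value) {
  let v = BigInt(value);
  let bytes = 1;
  while (v >= 128n) {
    v >>= 7n;
    bytes++;
  }
  return bytes;
}

// A field's tag is (fieldNumber << 3) | wireType, itself stored as a varint.
// The wire type only fills the low 3 bits, so it does not change the size.
function tagSize(fieldNumber) {
  return varintSize(BigInt(fieldNumber) << 3n);
}

// int32 sign-extends a negative number to 64 bits before varint encoding.
function int32Size(n) {
  return n < 0 ? varintSize(BigInt.asUintN(64, BigInt(n))) : varintSize(n);
}

// sint32 applies ZigZag first, so small negative numbers become small positive ones.
function zigZag32(n) {
  return ((n << 1) ^ (n >> 31)) >>> 0;
}
function sint32Size(n) {
  return varintSize(zigZag32(n));
}

console.log(tagSize(15), tagSize(16));      // 1 2
console.log(int32Size(-1), sint32Size(-1)); // 10 1
```

So a field numbered 16 or higher pays one extra byte every time it appears, and a `-1` stored as `int32` costs ten bytes where `sint32` needs one.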

This is a Unity-specific utility to deal with physical file save and load of your generated protobuf class. This is perfect for game saves, so you can load from binary on the next startup. It has 2 versions: a completely static utility class and an abstract version which requires some generic typing.

The point of the generic version is that, by providing your Protobuf-generated class T in the type parameter, you get a manager just for that specific class T to easily save and load Protobuf data to disk and back to memory, plus an extra static "active slot" of that T for even easier management of loaded data. (So you don't load it over and over, and save only when necessary.) The most common use of this active slot is as a local game save, since nowadays mobile games are single-save and there is usually no explicit load screen where you choose your save file. There are methods you can use to implement other game save schemes. And because you subclass it, it opens the way for your own validation logic, which would be impossible with just the static utility version.

It also contains some basic C# AES encryption. I think almost everyone wants it, even though you are likely too lazy to separate the key and salt from your game's code. At least it is more difficult for the player to just open the serialized protobuf file with Notepad and see exactly where their money variable is.

```csharp
// Recommended naming is `LocalSave`. Passing `LocalSave` as the 2nd type param gives you the magic `static` access points later.
// `T` here stands for your Protobuf-generated class.
public class LocalSave : ProtoBinaryManager<T, LocalSave> {
    // Implement required `abstract` implementations...
}

// Then later you could:

// `.Active` is the static access point for your save data. It automatically loads from disk and caches. `Gold` is a property in your generated `T` class from Protobuf.
LocalSave.Active.Gold += 5555;

// `.Save` is an easy static method to save your active save file to the disk.
LocalSave.Save();

// When you start the game the next time, `LocalSave.Active` will contain your previous state because `.Active` automatically loads from disk.

// Other utilities are provided in the `.Manager` static access point.
LocalSave.Manager.BackupActive();
LocalSave.Manager.ReloadActive();
```

There are some problems with Protobuf-generated C# code that I am not quite content with:

- The generated properties are all `public get` and `public set`, which may not be desirable. For example, your `Gem` property could be modified by everyone, and that's bug-prone. You probably prefer some kind of `PurchaseWithGem(iapItem)` method in your `partial` that decreases your `Gem`, and to keep the setter `private`.

- The class is `partial`, and I would like to use the `partial` feature rather than make a completely new class as a wrapper around this protobuf-generated class. It would be easier to handle the serialization and data management. Also, I don't want to redo all the protobuf-generated utility methods like `MergeFrom` or deep `Clone`.

- Some fields in `proto` like `map` are useful, as Unity couldn't even serialize `Dictionary` properly, but it is even more likely than with normal fields that you don't want anyone to access them freely and add things to them. Imagine a `map<string,string>` mapping a friend's UID code to the string representation of the `DateTime` of when they were last online. It doesn't make sense to allow access to this map because a raw `string` doesn't make sense. You want it completely `private`, and then write a method accessor like `RememberLastOnline(friend, dateTime)` to modify its value and potentially call the save method to write to disk at the same time.

- These unwanted accessors show up in your IntelliSense and you don't want to see them.

So I want some more control over the generated C# classes. One could utilize the Compiler Plugin feature, but I think it is overkill. I think I am fine with just some dumb RegEx replace over generated C# classes in Unity as a 2nd pass.

The next problem is how to select which fields or messages get this post-processing. That is done with the custom options feature. In the folder Runtime/CustomOptions, there is a protobuf_unity_custom_options.proto file that extends the options available to Protobuf.

- If you use protobuf-unity by copying the whole thing into your project, it will be in your `import` scope already, plus protobuf-unity will generate its C# counterpart.

- If you use protobuf-unity via a UPM include, I don't want to deal with resolving the path to the package location so that `protoc` knows where `protobuf_unity_custom_options.proto` is. A solution is to just copy this `.proto` file into your project. protobuf-unity will then generate its C# file again locally in your project. protobuf-unity has an exception so that it will not generate a C# script for a `.proto` coming from packages.

You then put `import "protobuf_unity_custom_options.proto";` at the head of each `.proto` file in which you want to use the custom options. The generated C# file of your class will then have a reference to the C# file of protobuf_unity_custom_options.proto (namely ProtobufUnityCustomOptions.cs).

Right now the custom options give you 1 message option, `private_message`, and 1 field option, `private`. Unfortunately I think options can't be just a flag, so they are booleans and you have to set them to `true`.

```protobuf
syntax = "proto3";
import "enums.proto";
import "protobuf_unity_custom_options.proto";

message PlayerData {
    option (private_message) = true; // Using message option
    string player_id = 1;
    string display_name = 2 [(private)=true]; // Using field option
}
```

`private` would apply a tighter accessor to only one field; `private_message` applies to all fields in the message. But yeah, I didn't work on that yet. I just want to write the documentation as I code. :P

protobuf-unity and ProtoBinaryManager together deal with your offline save data. What about taking that online? Maybe it is just for backing up the save file for players (without help from e.g. iCloud), or you want to be able to view, inspect, or award your player something from the server.

The point of protobuf is often to send everything over the wire with matching protobuf files waiting on the other end. But what if you are not in control of the receiving side? The key is often JSON serialization, since that is kind of the standard for interoperability. What I want to tell you is that there is a class called `Google.Protobuf.JsonFormatter` available for use in Google's dll already.

To use it, just instantiate the class (or use `JsonFormatter.Default` for quick formatting with no config) and then call `.Format(yourProtobufMessageObject)`. It uses a bunch of reflection to make key-value pairs of each C# variable name and its value, which may not be the most efficient solution, but it does the trick.

If you want to see what the JSON string looks like, here is one example of `.Format` output from my game, which contains some nested Protobuf messages as well. That's a lot of strings to represent C# field names. I heard it doesn't work well with `Any`, but I'm not sure. `repeated` works fine as a JSON array `[]` as far as I can see.

```
"{ "playerId": "22651ba9-46c6-4be6-b031-d8373fe5c6de", "displayName": "5ARGON", "playerIdHash": -1130147695, "startPlaying": "2019-08-31T09:30:26", "minigameProgresses": { "AirHockey": { "trainingPlayCount": 4, "twoPlayersPlayCount": 1, "trainingHardCleared": true, "trainingChallengeCleared": true, "trainingChallengeClearedCount": 1, "bonusBoostMostRecent": "1970-01-01T00:03:30Z", "accumulatedChilli": 1963, "hardClearStreak": 1, "challengeClearStreak": 1, "inGameTraining": true }, "PerspectiveBaseball": { "arcadePlayCount": 5, "twoPlayersPlayCount": 5, "bonusBoostMostRecent": "2019-09-01T06:43:10Z", "highscoreRecords": [ { "playerName": "PLAYER5778", "score": 40355, "localTimestamp": "2019-09-02T10:38:59.737323Z", "characterConfiguration": { } }, { "playerName": "PLAYER5778", "score": 34805, "localTimestamp": "2019-09-02T10:40:47.862259Z", "characterConfiguration": { } }, { "playerName": "5ARGON", "score": 8495, "localTimestamp": "2019-09-04T09:46:10.733110Z", "characterConfiguration": { } }, { "playerName": "PLAYER5778", "localTimestamp": "2019-09-01T06:43:41.571264Z", "characterConfiguration": { } }, { "localTimestamp": "1970-01-01T00:00:00Z" }, { "localTimestamp": "1970-01-01T00:00:00Z" }, { "localTimestamp": "1970-01-01T00:00:00Z" }, { "localTimestamp": "1970-01-01T00:00:00Z" }, { "localTimestamp": "1970-01-01T00:00:00Z" }, { "localTimestamp": "1970-01-01T00:00:00Z" } ], "accumulatedChilli": 4066 }, "BlowItUp": { "trainingPlayCount": 1, "twoPlayersPlayCount": 4, "trainingHardCleared": true, "accumulatedChilli": 1114, "hardClearStreak": 1 }, "CountFrog": { }, "BombFortress": { "trainingPlayCount": 1, "twoPlayersPlayCount": 2, "trainingHardCleared": true, "trainingChallengeCleared": true, "trainingChallengeClearedCount": 1, "accumulatedChilli": 1566, "hardClearStreak": 1, "challengeClearStreak": 1 }, "StackBurger": { "twoPlayersPlayCount": 2 }, "SwipeBombFood": { }, "CookieTap": { }, "Rocket": { }, "Pulley": { "trainingPlayCount": 2, "accumulatedChilli": 146 }, "Pinball": { }, "Fruits": { }, "Warship": { "twoPlayersPlayCount": 3 } }, "characterConfigurations": { "0": { }, "1": { "kind": "CharacterKind_Bomberjack" } }, "chilli": 58855, "permanentEvents": [ "FirstLanguageSet", "GameReviewed" ], "trialTimestamps": { "Fruits": "2019-09-04T09:57:09.631383Z", "Warship": "2019-09-04T10:34:34.249723Z" }, "savedConfigurations": { "autoGameCenter": true }, "purchasableFeatures": [ "RecordsBoard" ] }"
```

If it is just for backup, you may not need JSON; you can dump the binary or a base 64 of it and upload the entire thing. But JSON often allows the backend to actually do something with it. You may think that one benefit of Protobuf is that it can be read on a server, so shouldn't we just upload the message instead of JSON? But that may apply only to your own server, which you code up yourself. For other commercial solutions you may need JSON.

For example, Microsoft Azure PlayFab supports attaching a JSON object to your entity. Then, with understandable save data available in PlayFab, you are able to segment and do live dev ops based on the save, e.g. targeting players that progressed more slowly in the game. Or you can award points from the server on event completion, and then have the player sync them back to Protobuf on the local device. (However, attaching a generic file is also possible.)

As opposed to producing JSON on the client side and sending it to the server, how about sending the protobuf bytes to the server and deserializing them with the JS version of the generated code instead?

Here's an example of how to set up Node's Crypto so it decrypts what C# encrypted in my code. I used this pattern in my Firebase Functions, where it spins up a Node server with a lambda code fragment that receives the save file for safekeeping and at the same time deciphers it so the server knows the save file's content. Assuming you have already got a Node Buffer of your save data at the server as content:

```js
const { pbkdf2Sync, createDecipheriv } = require('crypto')

// `encryptionPassword` and `encryptionSalt` must be defined elsewhere, with the same values used on the C# side.
function decipher(saveBuffer)
{
    // Mirrors `Rfc2898DeriveBytes` in C#. Use the same password and salt, and the same iteration count if you changed it.
    const key = pbkdf2Sync(encryptionPassword, encryptionSalt, 5555, 16, 'sha1')

    // Pick the IV from the start of the cipher text.
    const iv = saveBuffer.slice(0, 16)

    // The remaining real content.
    const content = saveBuffer.slice(16)

    // The C# default when just creating `AesCryptoServiceProvider` is CBC mode with PKCS7 padding.
    const aesDecipher = createDecipheriv('aes-128-cbc', key, iv)
    const decrypted = aesDecipher.update(content)
    const final = aesDecipher.final()
    const finalBuffer = Buffer.concat([decrypted, final])

    // At this point you have the naked protobuf bytes without encryption.
    // Now you can obtain nicely structured class data.
    return YourGeneratedProtoClassJs.deserializeBinary(finalBuffer)
}
```

Firestore can store JSON-like data, and the JS Firestore library can store a JS object straight into it. However, not everything is supported, as a JS object is a superset of what Firestore supports.

One thing it cannot store is `undefined`, and another is nested arrays. While `undefined` does not exist in JSON, a nested array is possible in JSON but cannot be stored in Firestore.
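A small pre-flight check can catch both cases before a write is attempted. This is a minimal sketch in plain JavaScript that does not call Firestore at all; `findFirestoreProblems` is a hypothetical helper name, and it only looks for the two restrictions mentioned above.

```javascript
// Hypothetical helper: walks a plain JS value and reports the paths of
// `undefined` values and of arrays placed directly inside another array.
function findFirestoreProblems(value, path = "$", insideArray = false, problems = []) {
  if (value === undefined) {
    problems.push(`${path}: undefined`);
  } else if (Array.isArray(value)) {
    if (insideArray) {
      problems.push(`${path}: nested array`);
    }
    value.forEach((item, i) => findFirestoreProblems(item, `${path}[${i}]`, true, problems));
  } else if (value !== null && typeof value === "object") {
    // Assumption: an array held by an object that itself sits in an array is not
    // "directly nested", so the flag is reset when descending into an object.
    for (const [key, child] of Object.entries(value)) {
      findFirestoreProblems(child, `${path}.${key}`, false, problems);
    }
  }
  return problems;
}

// Made-up sample data, only to show the report format.
const sample = { chilli: 58855, nickname: undefined, grid: [[1, 2], [3, 4]] };
console.log(findFirestoreProblems(sample));
```

An empty result means neither restriction was hit; it does not guarantee that the write will succeed for other reasons.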

What you get from `YourGeneratedProtoClassJs.deserializeBinary` is not a plain JS object. It is a class instance of the Protobuf message. There is a method `.toObject()` available to change it into a JS object, but if you take a look at what you had as `map<A,B>`, `.toObject()` produces `[A,B][]` instead. This may be how Protobuf really keeps your map. Unfortunately, as I said, a nested array can't go straight into Firestore.

After eliminating any possible `undefined` or `null`, you need to manually post-process every map field (including nested ones), changing `[A,B][]` into a proper JS object that uses A as the key and B as the value, by looping over the pairs and replacing the field.
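That post-processing step can be sketched as below. It is a minimal sketch, not part of protobuf-unity or of the Protobuf JS library: `pairsToObject` and `fixMapFields` are hypothetical helpers, and the caller has to list which field names are map fields, because a `[A,B][]` array cannot be told apart from a genuine array of pairs by shape alone.

```javascript
// Hypothetical helper: turn [[key, value], ...] into { key: value, ... }.
function pairsToObject(pairs) {
  const result = {};
  for (const [key, value] of pairs) {
    result[String(key)] = value;
  }
  return result;
}

// Hypothetical helper: recursively copies `value`, drops undefined/null properties,
// and converts every field whose name is listed in `mapFieldNames`.
function fixMapFields(value, mapFieldNames) {
  if (Array.isArray(value)) {
    return value.map((item) => fixMapFields(item, mapFieldNames));
  }
  if (value === null || typeof value !== "object") {
    return value;
  }
  const result = {};
  for (const [name, child] of Object.entries(value)) {
    if (child === undefined || child === null) continue;
    const converted =
      mapFieldNames.has(name) && Array.isArray(child) ? pairsToObject(child) : child;
    // Recurse after converting so that maps nested inside map values are fixed too.
    result[name] = fixMapFields(converted, mapFieldNames);
  }
  return result;
}

// Shape loosely modeled on a converted message; the field names are made up.
const raw = {
  chilli: 58855,
  displayName: undefined,
  minigameProgressesMap: [
    ["AirHockey", { trainingPlayCount: 4 }],
    ["Pulley", { trainingPlayCount: 2 }],
  ],
};
console.log(fixMapFields(raw, new Set(["minigameProgressesMap"])));
```

Listing the map field names explicitly is a deliberate choice here: guessing from the data would also rewrite any `repeated` field whose elements happen to be two-item arrays.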

`repeated` seems to go through `toObject()` just fine. It is just a straight array.

- `repeated` `enum`: Serializing in C# and deserializing in C# works just fine. But if you get `Unhandled error { AssertionError: Assertion failed` when trying to turn the buffer made from C# into a JS object, it may be this bug from a year ago. I just encountered it in protobuf 3.10.0 (October 2019). Adding `[packed=false]` does not fix the issue for me.

- Integer map with key 0: I found a bug where `map<int, Something>` works in C# but fails to deserialize in JS. As long as this is not fixed, use a number other than 0 for your integer-keyed `map`.

As this will need Protobuf you need to follow Google's license here : https://github.com/google/protobuf/blob/master/LICENSE. For my own code the license is MIT.