jenkins-kubernetes-plugin

Jenkins plugin to run dynamic slaves in a Kubernetes/Docker environment.

Based on the Scaling Docker with Kubernetes article, automates the scaling of Jenkins slaves running in Kubernetes.

The plugin creates a Kubernetes Pod for each slave started, defined by the Docker image to run, and stops it after each build.

Slaves are launched using JNLP, so it is expected that the image connects automatically to the Jenkins master. For that some environment variables are automatically injected:

JENKINS_URL: Jenkins web interface urlJENKINS_JNLP_URL: url for the jnlp definition of the specific slaveJENKINS_SECRET: the secret key for authenticationJENKINS_NAME: the name of the Jenkins agent

Tested with jenkinsci/jnlp-slave,

see the Docker image source code.

Pipeline support

Nodes can be defined in a pipeline and then used

podTemplate(label: 'mypod', containers: [

containerTemplate(name: 'maven', image: 'maven:3.3.9-jdk-8-alpine', ttyEnabled: true, command: 'cat'),

containerTemplate(name: 'golang', image: 'golang:1.6.3-alpine', ttyEnabled: true, command: 'cat')

],

volumes: [secretVolume(secretName: 'shared-secrets', mountPath: '/etc/shared-secrets')]) {

node ('mypod') {

stage 'Get a Maven project'

git 'https://github.com/jenkinsci/kubernetes-plugin.git'

container('maven') {

stage 'Build a Maven project'

sh 'mvn clean install'

}

stage 'Get a Golang project'

git url: 'https://github.com/hashicorp/terraform.git'

container('golang') {

stage 'Build a Go project'

sh """

mkdir -p /go/src/github.com/hashicorp

ln -s `pwd` /go/src/github.com/hashicorp/terraform

cd /go/src/github.com/hashicorp/terraform && make core-dev

"""

}

}

}The jnlp agent image used can be customized by adding it to the template

containerTemplate(name: 'jnlp', image: 'jenkinsci/jnlp-slave:2.62-alpine', args: '${computer.jnlpmac} ${computer.name}'),Container Configuration

When configuring a container in a pipeline podTemplate the following options are available:

podTemplate(label: 'mypod', containers: [

containerTemplate(

name: 'mariadb',

image: 'mariadb:10.1',

ttyEnabled: true,

command: 'cat'

privileged: false,

alwaysPullImage: false,

workingDir: '/home/jenkins',

args: '',

instanceCap: 1,

resourceRequestCpu: '50m',

resourceLimitCpu: '100m',

resourceRequestMemory: '100Mi',

resourceLimitMemory: '200Mi',

envVars: [

containerEnvVar(key: 'MYSQL_ALLOW_EMPTY_PASSWORD', value: 'true'),

...

]

),

...

],

volumes: [

emptyDirVolume(mountPath: '/etc/mount1', memory: false),

secretVolume(mountPath: '/etc/mount2', secretName: 'my-secret'),

configMapVolume(mountPath: '/etc/mount3', configMapName: 'my-config'),

hostPathVolume(mountPath: '/etc/mount4', hostPath: '/mnt/my-mount'),

nfsVolume(mountPath: '/etc/mount5', serverAddress: '127.0.0.1', serverPath: '/', readOnly: true),

persistentVolumeClaim(mountPath: '/etc/mount6', claimName: 'myClaim', readOnly: true)

]) {

...

}

Constraints

Multiple containers can be defined in a pod.

One of them must run the Jenkins JNLP agent service, with args ${computer.jnlpmac} ${computer.name},

as it will be the container acting as Jenkins agent.

Other containers must run a long running process, so the container does not exit. If the default entrypoint or command

just runs something and exit then it should be overriden with something like cat with ttyEnabled: true.

Configuration on Google Container Engine

Create a cluster

gcloud container clusters create jenkins --num-nodes 1 --machine-type g1-small

and note the admin password and server certitifate.

Or use Google Developer Console to create a Container Engine cluster, then run

gcloud container clusters get-credentials jenkins

kubectl config view --raw

the last command will output kubernetes cluster configuration including API server URL, admin password and root certificate

Debugging

To inspect the json messages sent back and forth to the Kubernetes API server you can configure

a new Jenkins log recorder for org.apache.http

at DEBUG level.

Building

Run mvn clean package and copy target/kubernetes.hpi to Jenkins plugins folder.

Docker image

Docker image for Jenkins, with plugin installed. Based on the official image.

Running

docker run --rm --name jenkins -p 8080:8080 -p 50000:50000 -v /var/jenkins_home csanchez/jenkins-kubernetes

Testing locally

A local testing cluster with one node can be created with minukube

minikube start

Then create the Jenkins ReplicationController and Service with

kubectl create -f ./src/main/kubernetes/minikube.yml

kubectl config set-context $(kubectl config current-context) --namespace=kubernetes-plugin

Running in Kubernetes (Google Container Engine)

Assuming you created a Kubernetes cluster named jenkins this is how to run both Jenkins and slaves there.

Create a GCE disk named kubernetes-jenkins to store the data.

gcloud compute disks create --size 20GB kubernetes-jenkins

Creating all the elements and setting the default namespace

kubectl create -f ./src/main/kubernetes/gke.yml

kubectl config set-context $(kubectl config current-context) --namespace=kubernetes-plugin

Connect to the ip of the network load balancer created by Kubernetes, port 80.

Get the ip (in this case 104.197.19.100) with kubectl describe services/jenkins

(it may take a bit to populate)

$ kubectl describe services/jenkins

Name: jenkins

Namespace: default

Labels: <none>

Selector: name=jenkins

Type: LoadBalancer

IP: 10.175.244.232

LoadBalancer Ingress: 104.197.19.100

Port: http 80/TCP

NodePort: http 30080/TCP

Endpoints: 10.172.1.5:8080

Port: slave 50000/TCP

NodePort: slave 32081/TCP

Endpoints: 10.172.1.5:50000

Session Affinity: None

No events.

Until Kubernetes 1.4 removes the SNATing of source ips, seems that CSRF (enabled by default in Jenkins 2)

needs to be configured to avoid WARNING: No valid crumb was included in request errors.

This can be done checking Enable proxy compatibility under Manage Jenkins -> Configure Global Security

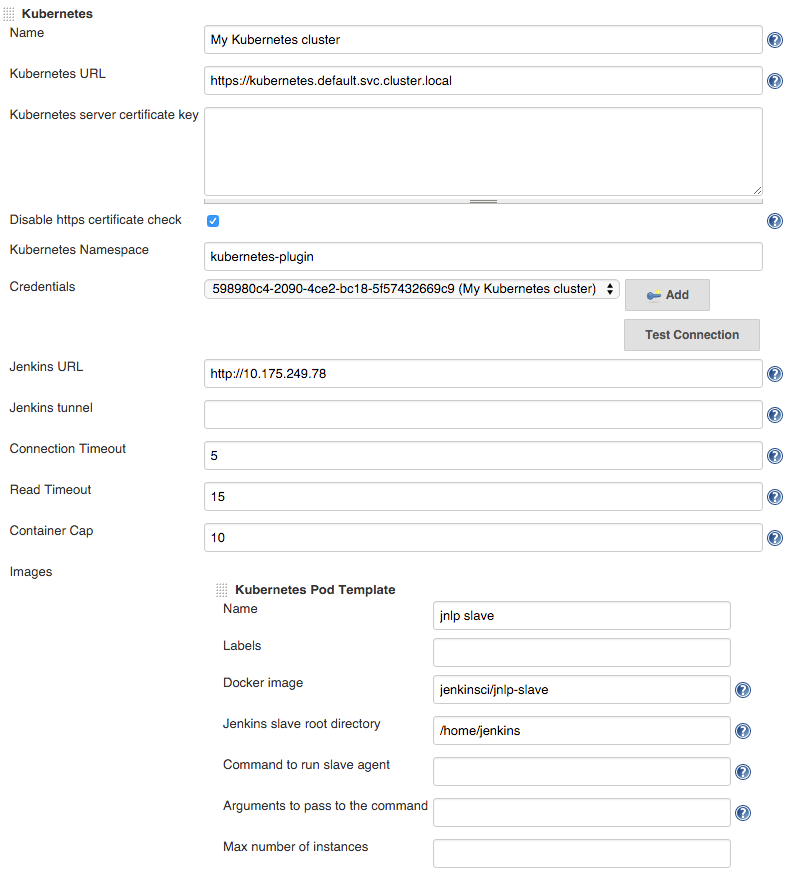

Configure Jenkins, adding the Kubernetes cloud under configuration, setting

Kubernetes URL to the container engine cluster endpoint or simply https://kubernetes.default.svc.cluster.local.

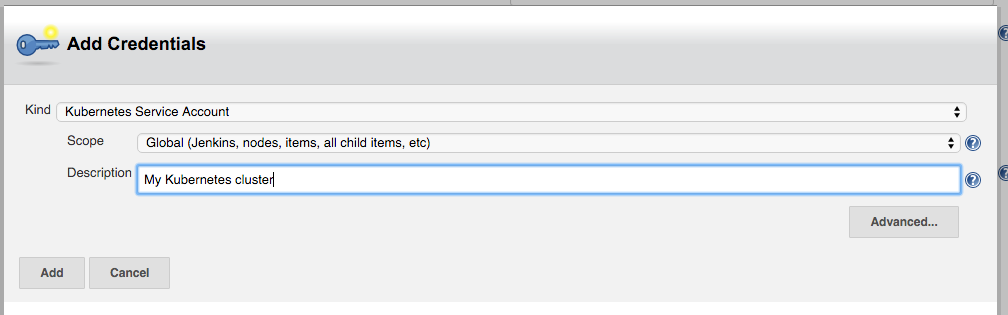

Under credentials, click Add and select Kubernetes Service Account,

or alternatively use the Kubernetes API username and password. Select 'Certificate' as credentials type if the

kubernetes cluster is configured to use client certificates for authentication.

You may want to set Jenkins URL to the internal service IP, http://10.175.244.232 in this case,

to connect through the internal network.

Set Container Cap to a reasonable number for tests, i.e. 3.

Add an image with

- Docker image:

jenkinsci/jnlp-slave - Jenkins slave root directory:

/home/jenkins

Now it is ready to be used.

Tearing it down

kubectl delete namespace/kubernetes-plugin

Building

docker build -t csanchez/jenkins-kubernetes .