This repository is the official implementation of "Categorical Normalizing Flows via Continuous Transformations". Please note that this is a simplified re-implementation of the original code used for the paper, so the exact scores for specific seeds may differ slightly.

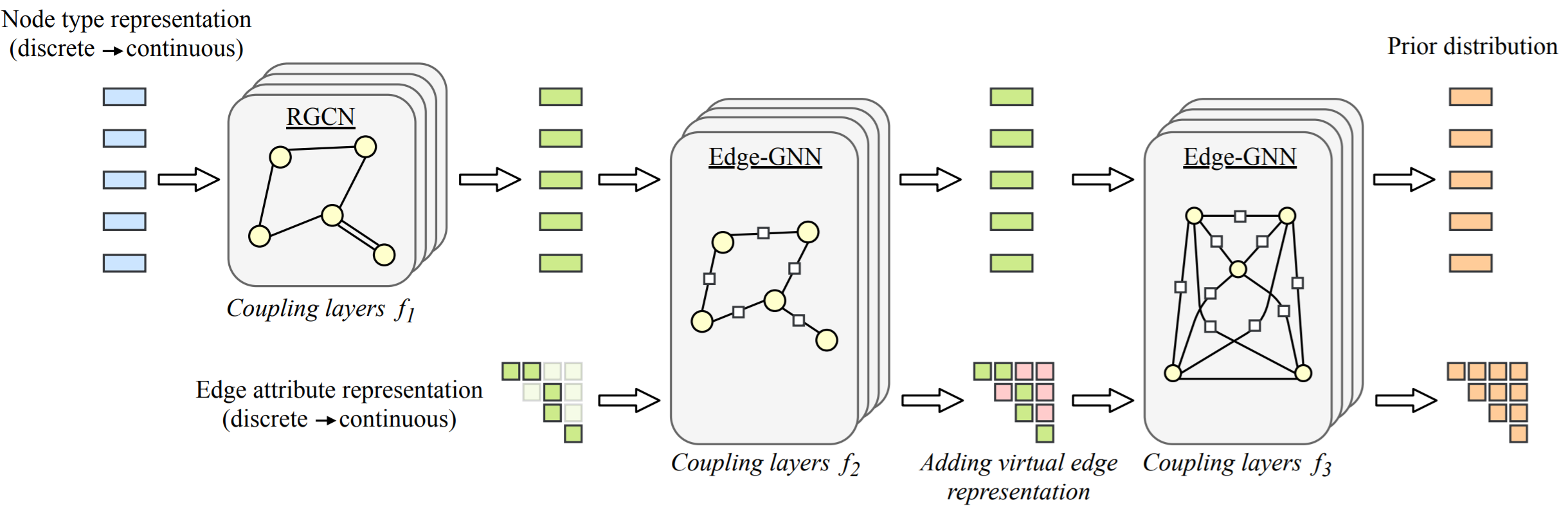

Despite their popularity, to date, the application of normalizing flows on categorical data stays limited. The current practice of using dequantization to map discrete data to a continuous space is inapplicable as categorical data has no intrinsic order. Instead, categorical data have complex and latent relations that must be inferred, like the synonymy between words. In this paper, we investigate Categorical Normalizing Flows, that is, normalizing flows for categorical data. By casting the encoding of categorical data in continuous space as a variational inference problem, we jointly optimize the continuous representation and the model likelihood. Using a factorized decoder, we introduce an inductive bias to model any interactions in the normalizing flow. As a consequence, we not only simplify the optimization compared to having a joint decoder, but also make it possible to scale up to a large number of categories, which is currently impossible with discrete normalizing flows. Based on Categorical Normalizing Flows, we propose GraphCNF, a permutation-invariant generative model on graphs. GraphCNF implements a three-step approach modeling the nodes, edges and adjacency matrix stepwise to increase efficiency. On molecule generation, GraphCNF outperforms both one-shot and autoregressive flow-based state-of-the-art.

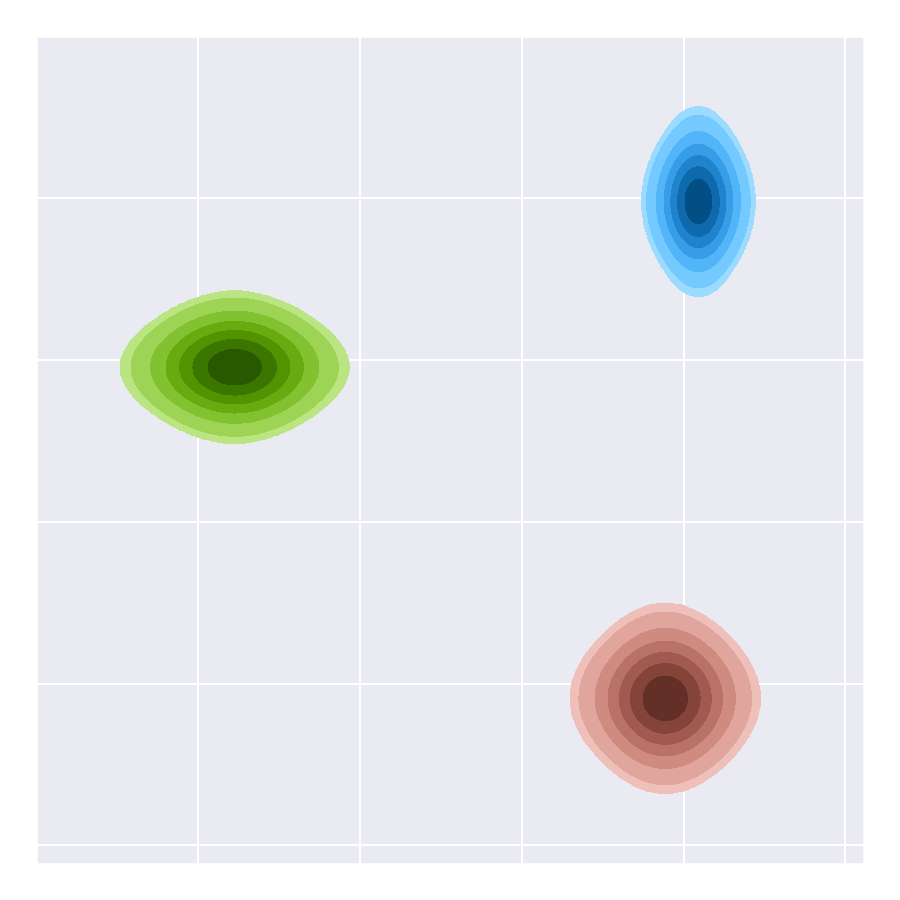

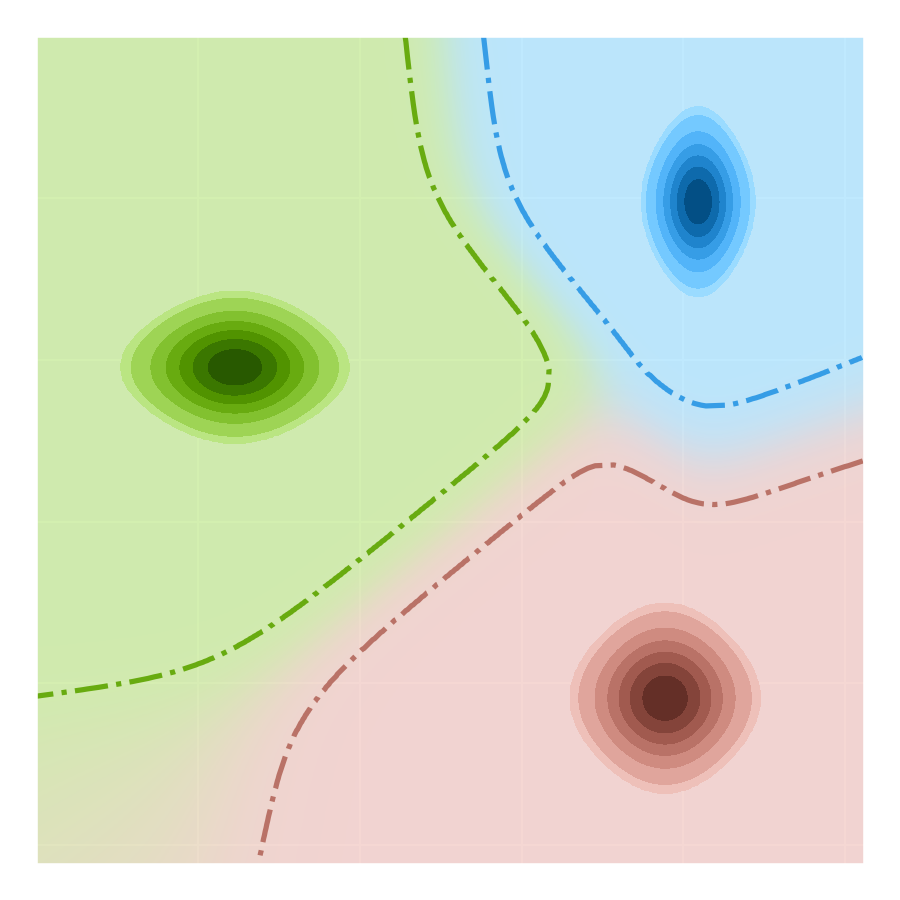

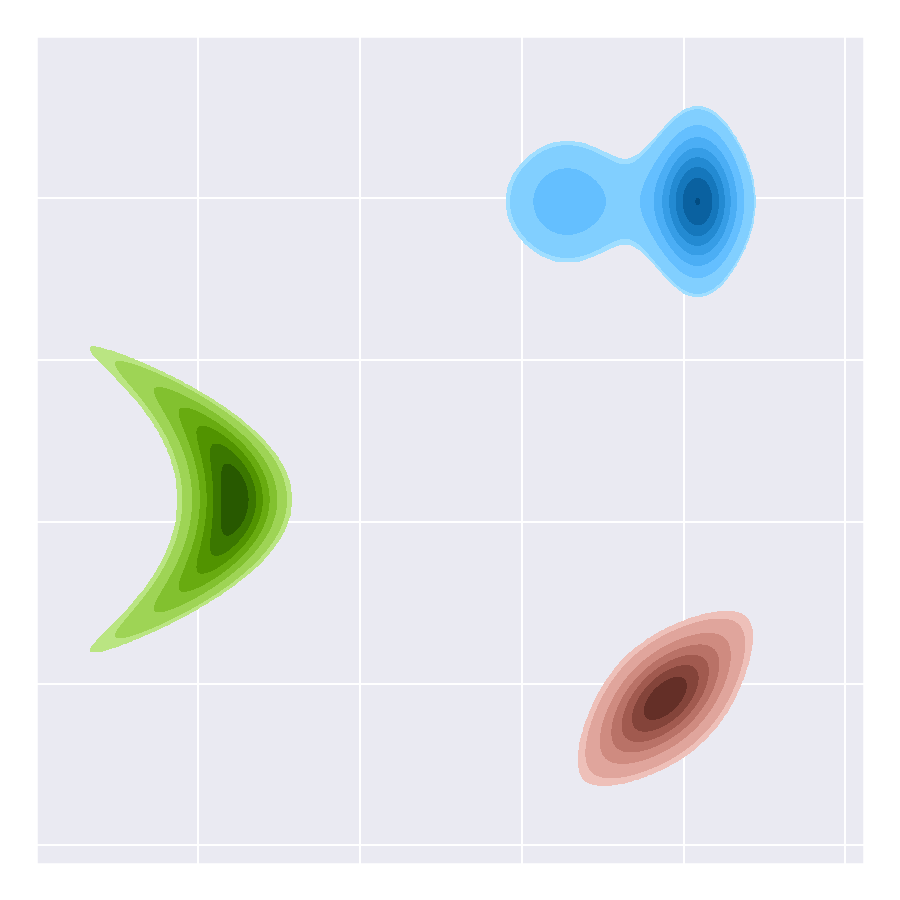

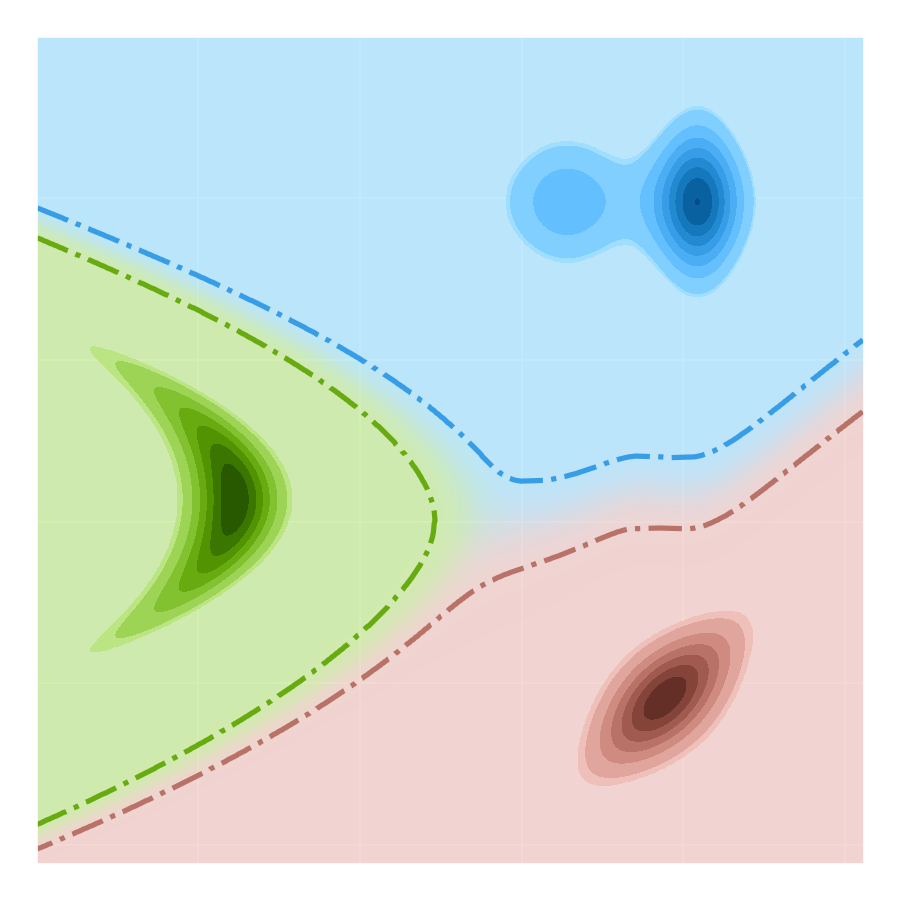

Encoding categorical data using variational inference allows flexible distributions. Below, we visualize two encoding schemes with their corresponding partitioning of the latent space: a simple mixture model (left) and linear flows, i.e. mixtures with additional class-conditional flows (right). The distribution in continuous space is shown in different colors for three categories. The borders between categories indicate the part of the latent space where the decoding is almost deterministic (posterior probability > 0.95). Although the linear flows allow more flexible and harder borders between categories, we find that a mixture model is sufficient for the flow to accurately model discrete distributions.
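
The mixture-model encoding and its decoding via Bayes' rule can be illustrated with a minimal, standard-library sketch. It assumes one-dimensional Gaussian components with hand-picked means and a shared standard deviation; all names and values (`MEANS`, `STD`, `PRIOR`, `encode`, `decode_posterior`) are hypothetical and not taken from the repository, where the encoding parameters are learned and the distribution family may differ.

```python
import math
import random

# Hypothetical, hand-picked parameters for three categories in a 1D latent space.
# In the repository these would be learned; the values here are for illustration only.
MEANS = [-4.0, 0.0, 4.0]
STD = 0.5
PRIOR = [1 / 3, 1 / 3, 1 / 3]


def gaussian_pdf(z, mean, std):
    """Density of a univariate Gaussian N(mean, std^2) at z."""
    return math.exp(-0.5 * ((z - mean) / std) ** 2) / (std * math.sqrt(2 * math.pi))


def encode(category, rng):
    """Sample a continuous latent z from the mixture component of `category`."""
    return rng.gauss(MEANS[category], STD)


def decode_posterior(z):
    """Posterior p(category | z) via Bayes' rule over the mixture components."""
    weighted = [p * gaussian_pdf(z, m, STD) for p, m in zip(PRIOR, MEANS)]
    total = sum(weighted)
    return [w / total for w in weighted]


rng = random.Random(0)
z = encode(1, rng)
posterior = decode_posterior(z)
print(f"z = {z:.3f}, posterior = {[round(p, 4) for p in posterior]}")
```

Near a component mean the posterior of that category is close to 1 (the almost deterministic region), while halfway between two means it splits evenly between the two neighbouring categories.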

Based on such an encoding, GraphCNF is a normalizing flow on graphs which assigns equal probability to any permutation of nodes. Most current approaches are autoregressive and assume an order of nodes although any permutation of nodes represents the exact same graph. GraphCNF encodes the node types, edge attributes and binary adjacency matrix in three steps into latent space. This stepwise encoding strategy makes the flow more efficient as it reduces the network sizes and number of transformations applied, and allows coupling layers to leverage the graph structure earlier when generating.
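
To make the graph representation concrete, the sketch below splits a small graph into the three parts named above (node types, edge attributes and binary adjacency matrix) and checks that relabeling the nodes describes the same graph. It operates on plain data only and is not the GraphCNF model; the function names, the convention that `0` means "no edge", and the example graph are assumptions made for illustration.

```python
def decompose_graph(node_types, edge_attrs):
    """Split a graph into node types, edge attributes and binary adjacency.

    node_types: list of categorical node labels, one per node.
    edge_attrs: symmetric matrix; 0 means "no edge", k > 0 is an edge category.
    """
    n = len(node_types)
    adjacency = [[1 if edge_attrs[i][j] > 0 else 0 for j in range(n)] for i in range(n)]
    # Attributes are kept only for existing edges (upper triangle, undirected graph).
    edges = {
        (i, j): edge_attrs[i][j]
        for i in range(n)
        for j in range(i + 1, n)
        if edge_attrs[i][j] > 0
    }
    return node_types, edges, adjacency


def permute_graph(node_types, edge_attrs, perm):
    """Relabel nodes so that new node i is old node perm[i]."""
    n = len(perm)
    new_types = [node_types[perm[i]] for i in range(n)]
    new_edges = [[edge_attrs[perm[i]][perm[j]] for j in range(n)] for i in range(n)]
    return new_types, new_edges


def order_free_summary(node_types, edge_attrs):
    """A simple permutation-invariant summary (not a full isomorphism test)."""
    _, edges, _ = decompose_graph(node_types, edge_attrs)
    edge_multiset = sorted(
        (tuple(sorted((node_types[i], node_types[j]))), attr)
        for (i, j), attr in edges.items()
    )
    return sorted(node_types), edge_multiset


# Hypothetical three-node graph: C-O with edge type 1, C-N with edge type 2.
types = ["C", "O", "N"]
attrs = [[0, 1, 2], [1, 0, 0], [2, 0, 0]]
perm_types, perm_attrs = permute_graph(types, attrs, [2, 0, 1])
print(order_free_summary(types, attrs) == order_free_summary(perm_types, perm_attrs))
```

An autoregressive model would treat `types` and `perm_types` as different sequences, whereas a permutation-invariant model is expected to assign both the same probability.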

The code is written in PyTorch (1.4) and Python 3.6. Higher versions of PyTorch and Python are expected to work as well.

We recommend using conda for installing the requirements. If you haven't installed conda yet, you can find instructions here. The steps for installing the requirements are:

- Create a new environment: `conda create -n CNF`. Use Python 3.6 or higher in the environment.
- Activate the environment: `conda activate CNF`
- Install the requirements within the environment via pip: `pip install -r requirements.txt`. If you have a GPU, make sure you install PyTorch with GPU support (see the official website for details). Some requirements are only necessary for specific experiments and can be left out if wanted:
  - CairoSVG (used for converting SVG images of molecules to PDFs)
  - ortools (only needed for generating a new graph coloring dataset)
  - torchtext (used for downloading and managing datasets in language modeling experiments)
- (Optional) Install RDKit for evaluating and visualizing molecules: `conda install -c rdkit rdkit`. This step can be skipped if you do not want to run experiments on molecule generation.
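
After installation, a short script along the following lines can report which of the packages mentioned above are visible in the active environment and whether PyTorch detects a GPU. It is not part of the repository; the helper names are hypothetical, and the import names (`cairosvg`, `ortools`, `torchtext`, `rdkit`) are the usual module names of those packages.

```python
import importlib.util

# Import names of the packages mentioned above; the optional ones are only
# needed for specific experiments.
REQUIRED = ["torch"]
OPTIONAL = ["cairosvg", "ortools", "torchtext", "rdkit"]


def check_packages(names):
    """Return {name: True/False} depending on whether the package can be found."""
    return {name: importlib.util.find_spec(name) is not None for name in names}


def report():
    """Print one status line per package and return the combined status dict."""
    status = {**check_packages(REQUIRED), **check_packages(OPTIONAL)}
    for name, found in status.items():
        kind = "required" if name in REQUIRED else "optional"
        print(f"{name:<10} ({kind}): {'found' if found else 'missing'}")
    if status["torch"]:
        import torch  # imported lazily so the script also runs without PyTorch

        print("CUDA available:", torch.cuda.is_available())
    return status


status = report()
```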

Experiments have been performed on an NVIDIA GTX 1080Ti and a TitanRTX with 11GB and 24GB of memory, respectively. The experiments on the TitanRTX can also be run on a smaller GPU such as the GTX 1080Ti with smaller batch sizes. The results are expected to be similar while requiring slightly longer training.

The code is split into three main parts by folders:

- `general` collects the files needed for the code infrastructure, such as functions for loading/saving a model and a template for a training loop.
- `experiments` includes the task-specific code for the four experiment domains.
- `layers` contains the implementation of network layers and flow transformations, such as mixture coupling layers, Edge-GNN and the encoding of categorical variables into continuous space.

We provide training and evaluation scripts for all four experiments in the paper: set modeling, graph coloring, molecule generation and language modeling. A detailed description of how to run each experiment can be found in the corresponding experiment folder.

We provide preprocessed datasets for the graph coloring and molecule generation experiments, which can be found here. The data for the experiments should be placed at `experiments/_name_/data/`.

For set modeling, the datasets are generated during training and do not require a saved file. The datasets for the language experiments are managed by torchtext and automatically downloaded when running the experiments for the first time.

We uploaded pretrained models for most of the experiments in the paper here. We also provide a tensorboard with every pretrained model to show the training progress. Please refer to the experiment folders for loading and evaluating such models.

| Model | Bits per variable |
|---|---|
| Discrete NF | 3.87bpd |
| Variational Dequantization | 3.01bpd |
| CNF + Mixture model (pretrained) | 2.78bpd |
| CNF + Linear flows | 2.78bpd |
| CNF + Variational encoding | 2.79bpd |
| Optimum | 2.77bpd |

| Model | Bits per variable |
|---|---|
| Discrete NF | 2.51bpd |
| Variational Dequantization | 2.29bpd |
| CNF + Mixture model (pretrained) | 2.24bpd |
| CNF + Linear flows | 2.25bpd |
| CNF + Variational encoding | 2.25bpd |
| Optimum | 2.24bpd |

| Model | Validity | Bits per variable |
|---|---|---|
| VAE (pretrained) | 7.75% | 0.64bpd |
| RNN + Smallest_first (pretrained) | 32.27% | 0.50bpd |
| RNN + Random (pretrained) | 49.28% | 0.46bpd |
| RNN + Largest_first (pretrained) | 71.32% | 0.43bpd |
| GraphCNF (pretrained) | 66.80% | 0.45bpd |

| Model | Validity | Uniqueness | Novelty | Bits per node |
|---|---|---|---|---|
| GraphCNF (pretrained) | 83.41% | 99.99% | 100% | 5.17bpd |
| + Subgraphs | 96.35% | 99.98% | 100% | |

| Model | Validity | Uniqueness | Novelty | Bits per node |
|---|---|---|---|---|
| GraphCNF (pretrained) | 82.56% | 100% | 100% | 4.94bpd |
| + Subgraphs | 95.66% | 99.98% | 100% | |

| Model | Penn-Treebank | Text8 | Wikitext103 |
|---|---|---|---|
| LSTM baseline | 1.28bpd | 1.44bpd | 4.81bpd |
| Categorical Normalizing Flow | 1.27bpd | 1.45bpd | 5.43bpd |
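
The scores above are reported in bits (bpd). As a reading aid, the sketch below shows the usual conversion from a summed negative log-likelihood in nats to bits per variable. It assumes this standard definition; the function name is hypothetical, and the repository's evaluation code may compute or average the metric differently.

```python
import math


def nats_to_bits_per_variable(total_nll_nats, num_variables):
    """Convert a summed negative log-likelihood in nats to bits per variable."""
    if num_variables <= 0:
        raise ValueError("num_variables must be positive")
    return total_nll_nats / (math.log(2) * num_variables)


# Example: a uniform model over 8 categories costs ln(8) nats per variable,
# which corresponds to about 3 bits per variable.
print(nats_to_bits_per_variable(5 * math.log(8), 5))
```

Lower values are better: a model with fewer bits per variable assigns higher likelihood to the data.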

All content in this repository is licensed under the MIT license. Please feel free to raise any issue if the code is not working properly.

This code is designed to be easily extended to new tasks and datasets. For details on how to add a new experiment task, see the README in the experiments folder.

If you use the code or results in your research, please consider citing our work:

    @inproceedings{lippe2021categorical,
      author = {Phillip Lippe and Efstratios Gavves},
      booktitle = {International Conference on Learning Representations},
      title = {Categorical Normalizing Flows via Continuous Transformations},
      url = {https://openreview.net/forum?id=-GLNZeVDuik},
      year = {2021}
    }