PyTorch implementation of Self-Supervised Learning for Real-World Super-Resolution from Dual Zoomed Observations

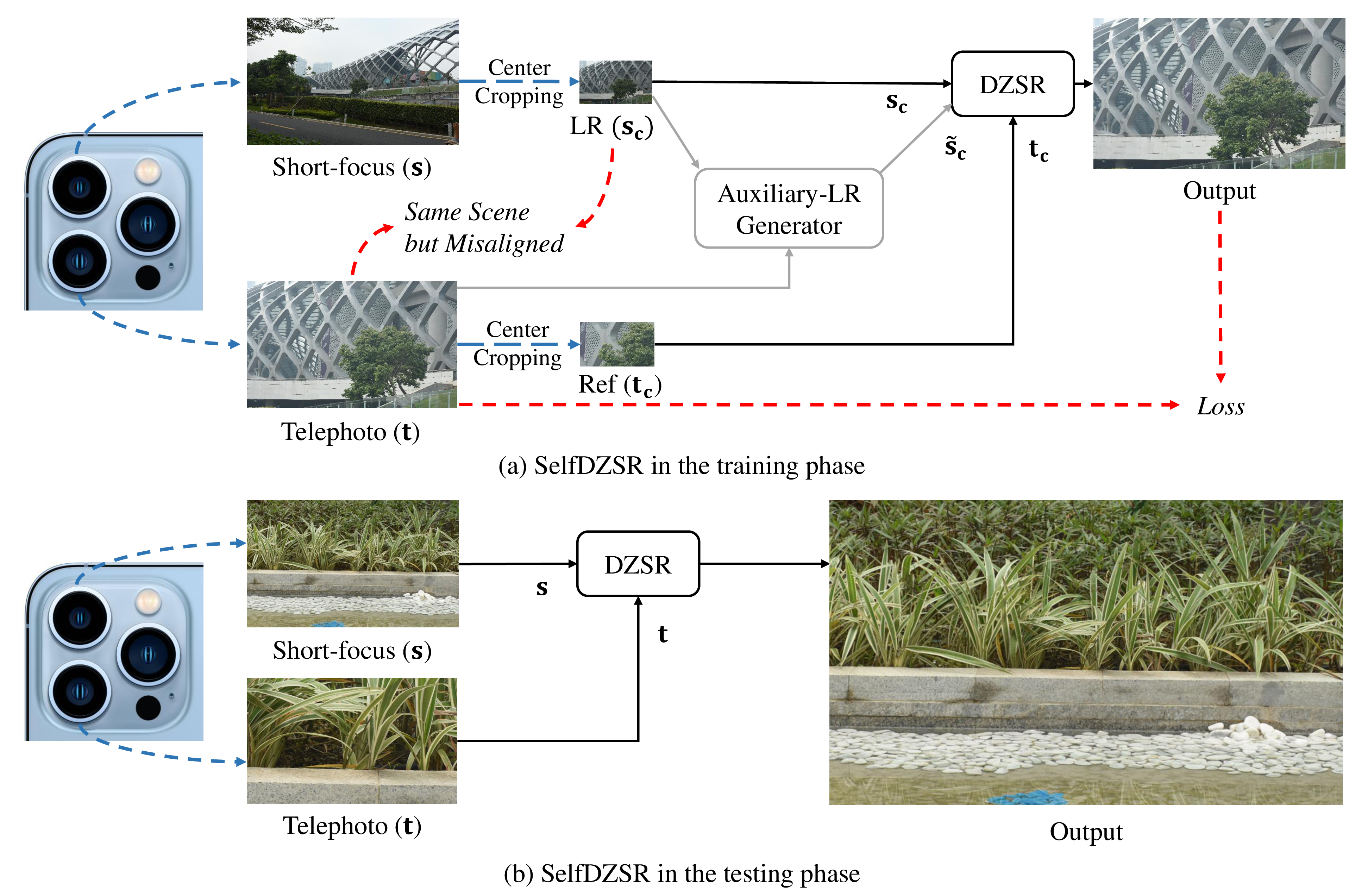

Overall pipeline of the proposed SelfDZSR in the training and testing phases.

- During training, the center parts of the short-focus and telephoto images are cropped as the input LR and Ref, respectively, and the whole telephoto image is taken as the GT. The auxiliary-LR is generated to guide the alignment of LR and Ref towards GT.

- During testing, SelfDZSR can be directly deployed to super-resolve the whole short-focus image with the telephoto image as the reference.
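The training-time cropping described above can be sketched in a few lines of NumPy. This is only an illustration, not the repository's data loader: the function names `center_crop` and `make_training_triplet`, the `scale` parameter (an assumed zoom ratio between the two lenses), and the assumption that both images have the same pixel size are all hypothetical.

```python
import numpy as np


def center_crop(img: np.ndarray, scale: int) -> np.ndarray:
    """Return the central (H // scale, W // scale) region of an H x W x C image."""
    h, w = img.shape[:2]
    ch, cw = h // scale, w // scale
    top, left = (h - ch) // 2, (w - cw) // 2
    return img[top:top + ch, left:left + cw]


def make_training_triplet(short_focus: np.ndarray, telephoto: np.ndarray, scale: int = 2):
    """Build (LR, Ref, GT) from one short-focus / telephoto pair.

    `scale` is an assumed zoom ratio; it is not taken from the repository.
    """
    lr = center_crop(short_focus, scale)  # center part of the short-focus image
    ref = center_crop(telephoto, scale)   # center part of the telephoto image
    gt = telephoto                        # the whole telephoto image is the target
    return lr, ref, gt


# Dummy images stand in for a real short-focus / telephoto pair.
short_focus = np.zeros((64, 96, 3), dtype=np.uint8)
telephoto = np.ones((64, 96, 3), dtype=np.uint8)
lr, ref, gt = make_training_triplet(short_focus, telephoto, scale=2)
print(lr.shape, ref.shape, gt.shape)
```

At test time no cropping of the short-focus image is needed, since the whole short-focus image is super-resolved with the telephoto image as the reference.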


Prerequisites

- Python 3.x and PyTorch 1.6.

- OpenCV, NumPy, Pillow, tqdm, lpips, scikit-image and tensorboardX.
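Before training or testing, it may help to check that the packages listed above can be found in the current environment. The snippet below is a small convenience sketch and not part of the repository. The helper name `missing_modules` is hypothetical, and the list uses each package's import name (for example, OpenCV is imported as `cv2`, Pillow as `PIL`, and scikit-image as `skimage`).

```python
import importlib.util

# Import names of the prerequisites (these differ from the package names in some cases).
REQUIRED_MODULES = ["torch", "cv2", "numpy", "PIL", "tqdm", "lpips", "skimage", "tensorboardX"]


def missing_modules(names):
    """Return the module names that cannot be located in the current environment."""
    return [name for name in names if importlib.util.find_spec(name) is None]


if __name__ == "__main__":
    missing = missing_modules(REQUIRED_MODULES)
    print("Missing modules:", ", ".join(missing) if missing else "none")
```

The check only confirms that a module can be located; it does not verify versions such as PyTorch 1.6.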


Dataset

- Nikon camera images and CameraFusion dataset can be downloaded from this link.


Data pre-processing

- If you want to pre-process additional short-focus images and telephoto images, we provide a demo in `./data_preprocess`. (2022/9/13)

- To simplify the training process, we provide pre-trained models of the feature extractors and the auxiliary-LR generator. The models for the Nikon camera images and the CameraFusion dataset are in the `./ckpt/nikon_pretrain_models/` and `./ckpt/camerafusion_pretrain_models/` folders, respectively.

- For direct testing, we provide four pre-trained DZSR models (`nikon_l1`, `nikon_l1sw`, `camerafusion_l1` and `camerafusion_l1sw`) in the `./ckpt/` folder. Taking `nikon_l1sw` as an example, it denotes the model trained on the Nikon camera images using the $l_1$ and sliced Wasserstein (SW) loss terms.
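To give an intuition for the two loss terms behind the `l1` and `l1sw` suffixes, here is a generic NumPy sketch of an $l_1$ loss and a sliced Wasserstein distance. It is not the repository's implementation. The function names, the number of projections, and the choice to compare plain `(n, d)` feature arrays are assumptions made for illustration; which features the actual SW term is computed on and how it is weighted are not stated here.

```python
import numpy as np


def l1_loss(pred, target):
    """Mean absolute difference between two arrays of the same shape."""
    return float(np.mean(np.abs(pred - target)))


def sliced_wasserstein(x, y, num_projections=64, seed=0):
    """Approximate sliced Wasserstein-1 distance between two point sets.

    x, y: arrays of shape (n, d), each holding n feature vectors of dimension d.
    """
    rng = np.random.default_rng(seed)
    directions = rng.standard_normal((x.shape[1], num_projections))
    directions /= np.linalg.norm(directions, axis=0, keepdims=True)  # unit-length columns
    # Project onto each random direction, then sort so the 1-D distributions can be matched.
    x_proj = np.sort(x @ directions, axis=0)
    y_proj = np.sort(y @ directions, axis=0)
    return float(np.mean(np.abs(x_proj - y_proj)))


rng = np.random.default_rng(1)
feats_a = rng.standard_normal((128, 16))
feats_b = feats_a[rng.permutation(128)]      # same set of vectors in a different order
print(l1_loss(feats_a, feats_b))             # compares element by element, so order matters
print(sliced_wasserstein(feats_a, feats_b))  # compares distributions, so order does not matter
```

The example shows why the two terms are complementary: the $l_1$ term penalizes position-wise differences, while the SW term compares feature distributions and is insensitive to how the vectors are ordered.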

- To train on the Nikon camera images, modify `dataroot` in `train_nikon.sh` and then run the script.

- To train on the CameraFusion dataset, modify `dataroot` in `train_camerafusion.sh` and then run the script.

- To test on the Nikon camera images, modify `dataroot` in `test_nikon.sh` and then run the script.

- To test on the CameraFusion dataset, modify `dataroot` in `test_camerafusion.sh` and then run the script.

- You can specify which GPU to use with `--gpu_ids`, e.g., `--gpu_ids 0,1`, `--gpu_ids 3`, or `--gpu_ids -1` (for CPU mode). By default, all GPUs are used.

- You can refer to options for more arguments.
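As a rough illustration of how a comma-separated `--gpu_ids` value can be interpreted, the sketch below parses the three example values given above. It is a standalone example and not the repository's option parser. The helper name `parse_gpu_ids` is hypothetical, and the flag is marked as required here only to keep the demo short, whereas the repository defaults to using all GPUs.

```python
import argparse


def parse_gpu_ids(text):
    """Turn a string such as "0,1" into a list of non-negative GPU indices.

    Negative entries (e.g. "-1") are dropped, so an empty list means CPU mode.
    """
    ids = [int(part) for part in text.split(",") if part.strip()]
    return [i for i in ids if i >= 0]


parser = argparse.ArgumentParser()
# Hypothetical stand-in for the real option; required only for this demo.
parser.add_argument("--gpu_ids", type=str, required=True,
                    help='e.g. "0,1", "3", or "-1" for CPU mode')

for argv in (["--gpu_ids", "0,1"], ["--gpu_ids", "3"], ["--gpu_ids", "-1"]):
    args = parser.parse_args(argv)
    print(argv[1], "->", parse_gpu_ids(args.gpu_ids))
```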

If you find it useful in your research, please consider citing:

@inproceedings{SelfDZSR,

title={Self-Supervised Learning for Real-World Super-Resolution from Dual Zoomed Observations},

author={Zhang, Zhilu and Wang, Ruohao and Zhang, Hongzhi and Chen, Yunjin and Zuo, Wangmeng},

booktitle={ECCV},

year={2022}

}

This repo is built upon the framework of CycleGAN, and we borrow some code from C2-Matching and DCSR. Thanks for their excellent work!