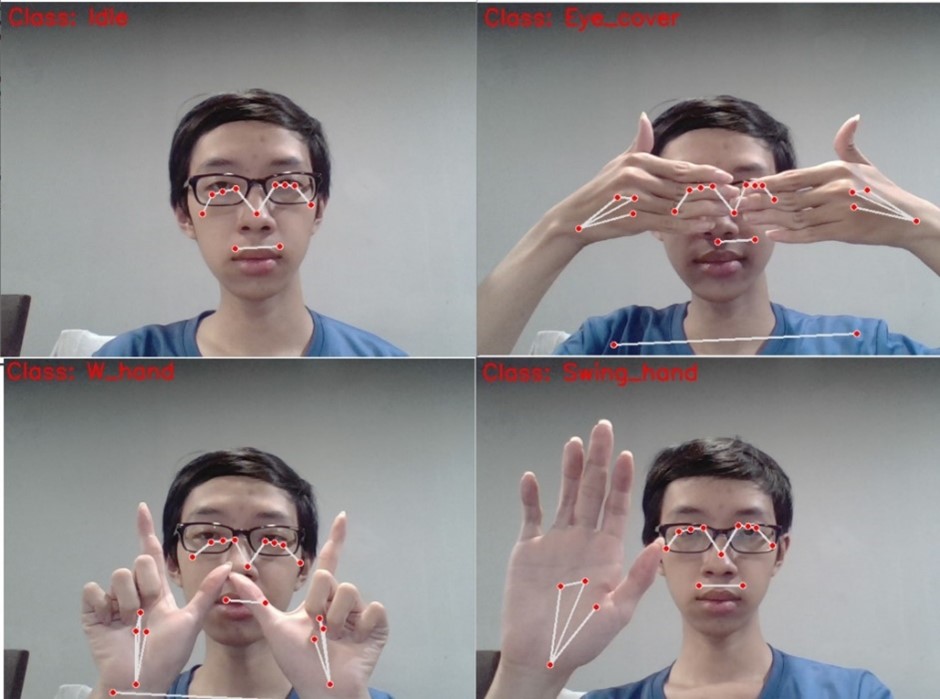

A real-time system for classifying the behaviour of a single subject. It uses MediaPipe Pose and a TensorFlow LSTM model, and supports both training a new model and running inference with a trained one.

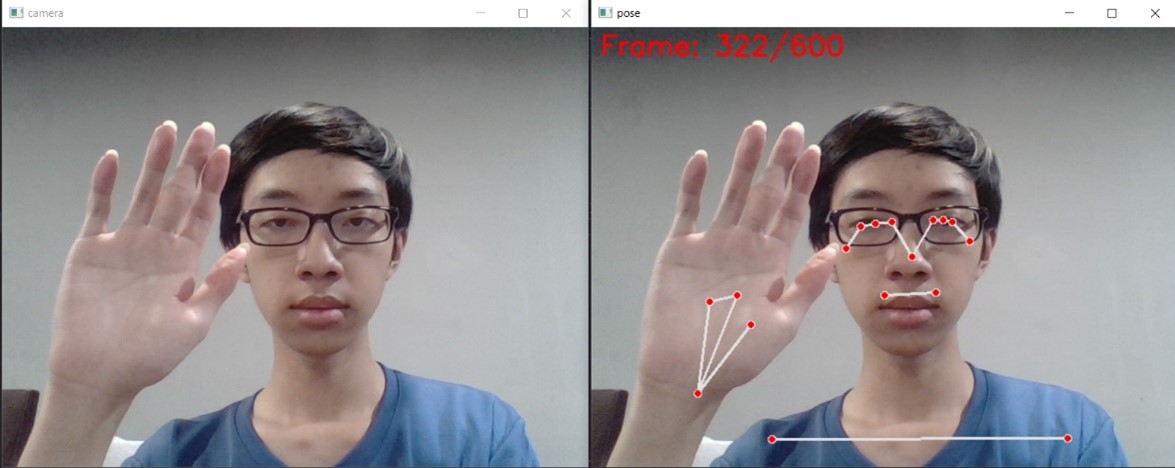

Training process

Inference process

For each video frame, MediaPipe Pose first detects the pose landmarks. Each landmark carries four values: the coordinates (x, y, z) and a visibility score. These landmarks are then used to train an RNN model built from LSTM layers. The model also includes dropout layers to reduce overfitting.
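As a rough illustration of how per-frame landmarks could become LSTM input, the sketch below flattens each frame's landmarks into one feature row and groups consecutive rows into fixed-length windows. The names `landmarks_to_row`, `make_windows`, and the window length `TIMESTEPS = 10` are assumptions made for this example and are not taken from the project. The `SimpleNamespace` objects stand in for real MediaPipe landmarks, which expose the same `x`, `y`, `z`, and `visibility` attributes.

```python
from types import SimpleNamespace

N_LANDMARKS = 33  # MediaPipe Pose reports 33 body landmarks per detected pose
TIMESTEPS = 10    # assumed window length for this sketch, not the project's value


def landmarks_to_row(landmarks):
    """Flatten one frame's landmarks into [x0, y0, z0, v0, x1, y1, ...]."""
    row = []
    for lm in landmarks:
        row.extend([lm.x, lm.y, lm.z, lm.visibility])
    return row


def make_windows(rows, timesteps=TIMESTEPS):
    """Group consecutive frame rows into overlapping windows of `timesteps` rows."""
    return [rows[i:i + timesteps] for i in range(len(rows) - timesteps + 1)]


# Stand-in data: 12 frames, each with 33 fake landmarks.
frames = [
    [SimpleNamespace(x=0.1, y=0.2, z=0.0, visibility=0.9) for _ in range(N_LANDMARKS)]
    for _ in range(12)
]
rows = [landmarks_to_row(frame) for frame in frames]
windows = make_windows(rows)

print(len(rows[0]), len(windows), len(windows[0]))  # 132 3 10
```

Each window has shape (timesteps, 132), which matches the (timesteps, features) input a recurrent layer expects for a single sample.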

Model architecture (from AI Design)
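A model of the kind described above (LSTM layers with dropout in between) might look roughly like the following Keras sketch. The function name `build_model`, the layer count, the 50 units, the 0.2 dropout rate, and the loss are all assumptions chosen for illustration; the actual architecture is defined in the project's training script.

```python
def build_model(timesteps, n_features, n_classes, units=50, dropout=0.2):
    """Build a small stacked-LSTM classifier (hypothetical sizes)."""
    # Imported inside the function so this module loads even without TensorFlow.
    import tensorflow as tf

    layers = tf.keras.layers
    model = tf.keras.Sequential([
        layers.Input(shape=(timesteps, n_features)),
        # return_sequences=True passes the full sequence to the next LSTM layer.
        layers.LSTM(units, return_sequences=True),
        layers.Dropout(dropout),  # randomly zeroes activations during training
        layers.LSTM(units),
        layers.Dropout(dropout),
        # One probability per behaviour class.
        layers.Dense(n_classes, activation="softmax"),
    ])
    model.compile(
        optimizer="adam",
        loss="sparse_categorical_crossentropy",  # assumes integer class labels
        metrics=["accuracy"],
    )
    return model
```

For example, `build_model(10, 132, 3)` would build a classifier over windows of 10 frames with 132 features each and 3 behaviour classes; those numbers are placeholders.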

First, install the required libraries:

    pip install -r requirements.txt

You can either use the pre-trained model in `models/best.h5` or train a new one yourself. The steps below train a new model.

The first step is to generate a new dataset. Run `gen_data.py` once for each class:

    python gen_data.py

After generating all the classes in data, run `train.py` to train the model:

    python train.py

The training process writes a model to `models/best.h5`. You can then run the model:

    python inference.py

This project was developed by phuc16102001, with reference to Mi AI.