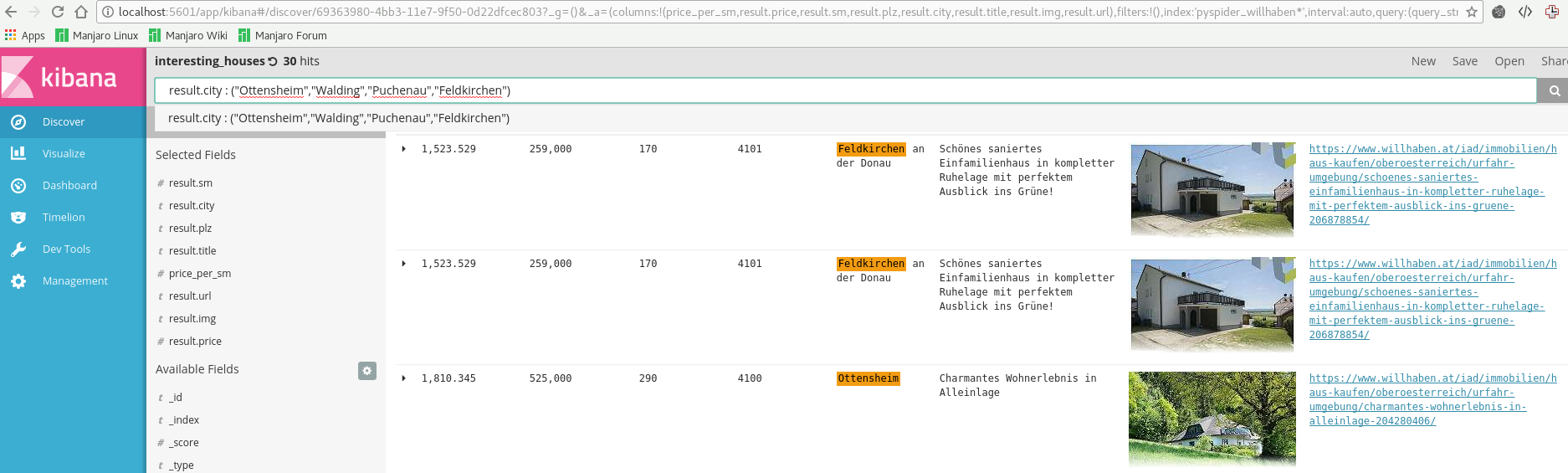

- extract information (pyspider)

- store results (elasticsearch)

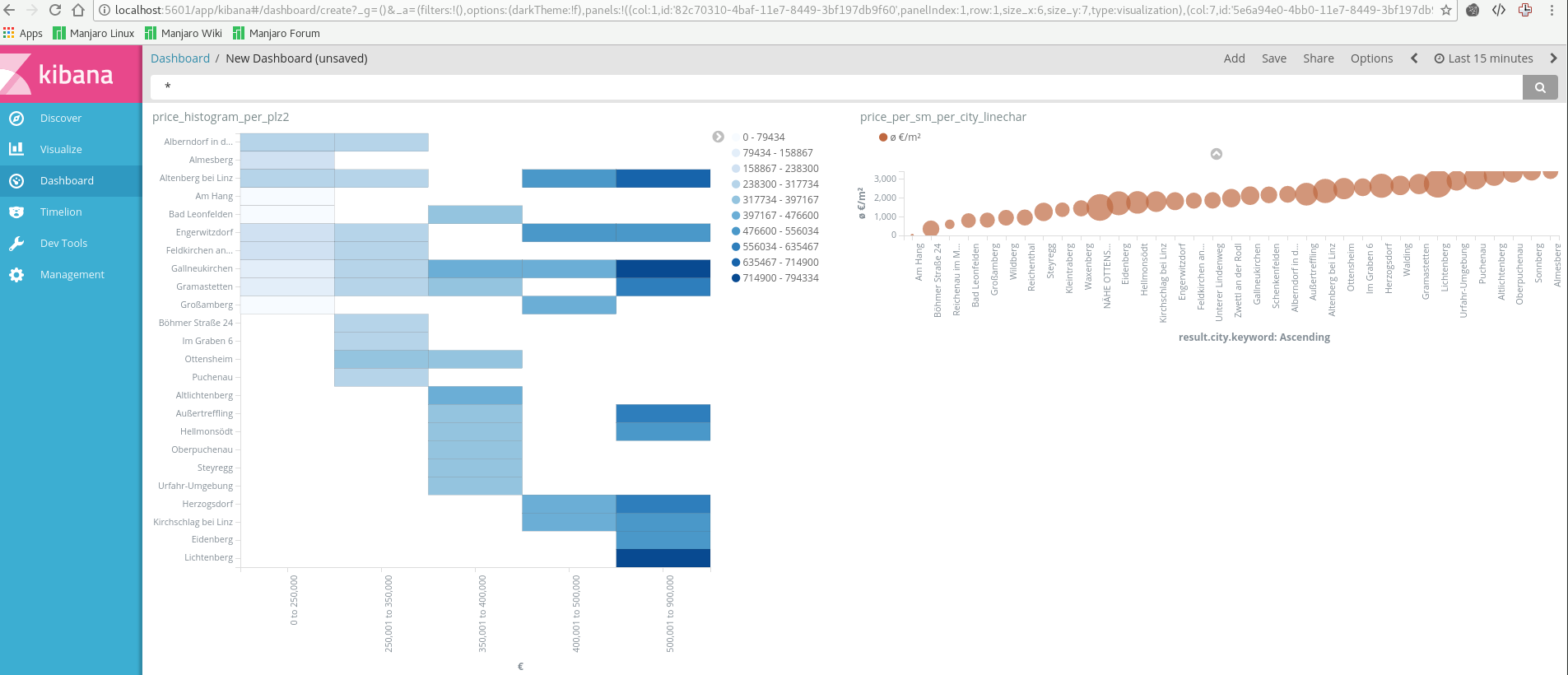

- analyse (kibana)

- start docker containers

  run `cd willhaben`, then `docker-compose up`

- create and run pyspider project

- go to http://localhost:5000

- click "create" and paste content of pyspider_scripts/willhaben_hauser_kaufen_ooe_uu.py

- on overview page: change status to "RUNNING" and click "Run"

- check kibana

- go to http://localhost:5601

- import the saved settings from docs/assets/kibana_export.json

- check "Discover" or "Visualizations" or "Dashboard"
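Before opening the two web interfaces it can help to confirm that the containers are actually answering. The following is a minimal sketch, not part of the project: it only assumes the two URLs from the steps above, and the helper name `is_up` is made up for illustration.

```python
import urllib.error
import urllib.request

# URLs taken from the steps above; adjust them if your docker-compose
# setup maps the services to other ports.
SERVICES = {
    "pyspider": "http://localhost:5000",
    "kibana": "http://localhost:5601",
}


def is_up(url, timeout=3.0):
    """Return True if the URL answers with any HTTP response (hypothetical helper)."""
    try:
        with urllib.request.urlopen(url, timeout=timeout):
            return True
    except urllib.error.HTTPError:
        # The server answered, just not with a 2xx status.
        return True
    except OSError:
        # Connection refused, timeout, DNS failure (URLError is an OSError).
        return False


if __name__ == "__main__":
    for name, url in SERVICES.items():
        state = "up" if is_up(url) else "not reachable"
        print(f"{name}: {state} ({url})")
```

If a service is reported as not reachable right after `docker-compose up`, waiting a little and retrying is usually enough, since the containers may still be starting.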

docker is used as a reproducible, easy-to-use runtime environment.

everything can be started via docker-compose up

pyspider crawls the website and extracts information

- pyspider documentation

- supported databases

- for usage of undocumented databases, search GitHub, e.g. for elasticsearch+resultdb

elasticsearch is used to store results in a kibana-friendly way
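To illustrate what "kibana-friendly" can mean in practice, here is a rough sketch that turns scraped results into a request body for the Elasticsearch bulk API. It does not show how pyspider's result database writes to Elasticsearch; the field names (`url`, `price`, `crawled_at`), the index name `willhaben` and the function `to_bulk_payload` are assumptions made for this example.

```python
import json
from datetime import datetime, timezone


def to_bulk_payload(results, index="willhaben"):
    """Build a bulk request body (NDJSON) from scraped results.

    The field names and the index name are hypothetical.
    """
    lines = []
    for result in results:
        doc = dict(result)
        # Store numbers as numbers so they can be aggregated and plotted.
        doc["price"] = float(doc["price"])
        # Add an ISO 8601 timestamp if the result does not carry one yet.
        now = datetime.now(timezone.utc).replace(microsecond=0)
        doc.setdefault("crawled_at", now.isoformat())
        # Action line: the page URL serves as document id, so crawling the
        # same page again overwrites the document instead of duplicating it.
        action = {"index": {"_index": index, "_id": doc["url"]}}
        lines.append(json.dumps(action))
        lines.append(json.dumps(doc))
    # The bulk API expects the body to end with a newline.
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    sample = [{"url": "https://example.org/house/1", "price": "250000"}]
    print(to_bulk_payload(sample), end="")
```

The resulting string could be sent to the `_bulk` endpoint with the content type `application/x-ndjson`. Converting prices to numbers before indexing is the main point: values stored as strings generally cannot be summed or averaged in visualizations.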

kibana is used to browse and analyse results

import configuration via docs/kibana_export.json
