As a cluster administrator, you can back up and restore applications running on the OpenShift Container Platform by using the OpenShift API for Data Protection (OADP).

OADP backs up and restores Kubernetes resources and internal images at the granularity of a namespace. It uses the version of Velero that matches the OADP version you install, as listed in the table in Downloading the Velero CLI tool.

OADP backs up and restores persistent volumes (PVs) by using snapshots or Restic. For details, see OADP features. In this guide, we’ll use Restic for volume backup/restore.

OADP can be leveraged to recover from any of the following situations:

- Cross Cluster Backup/Restore
  - You have a cluster that is in an irreparable state.
  - You have lost the majority of your control plane hosts, leading to etcd quorum loss.
  - Your cloud/data center region is down.
  - For many other reasons, you simply want/need to move to another OCP cluster.
- In-Place Cluster Backup/Restore
  - You have deleted something critical in the cluster by mistake.
  - You need to recover from workload data corruption within the same cluster.

The guide discusses disaster recovery in the context of implementing application backup and restore. To get a broader understanding of DR in general, I recommend reading the Additional Learning Resources section.

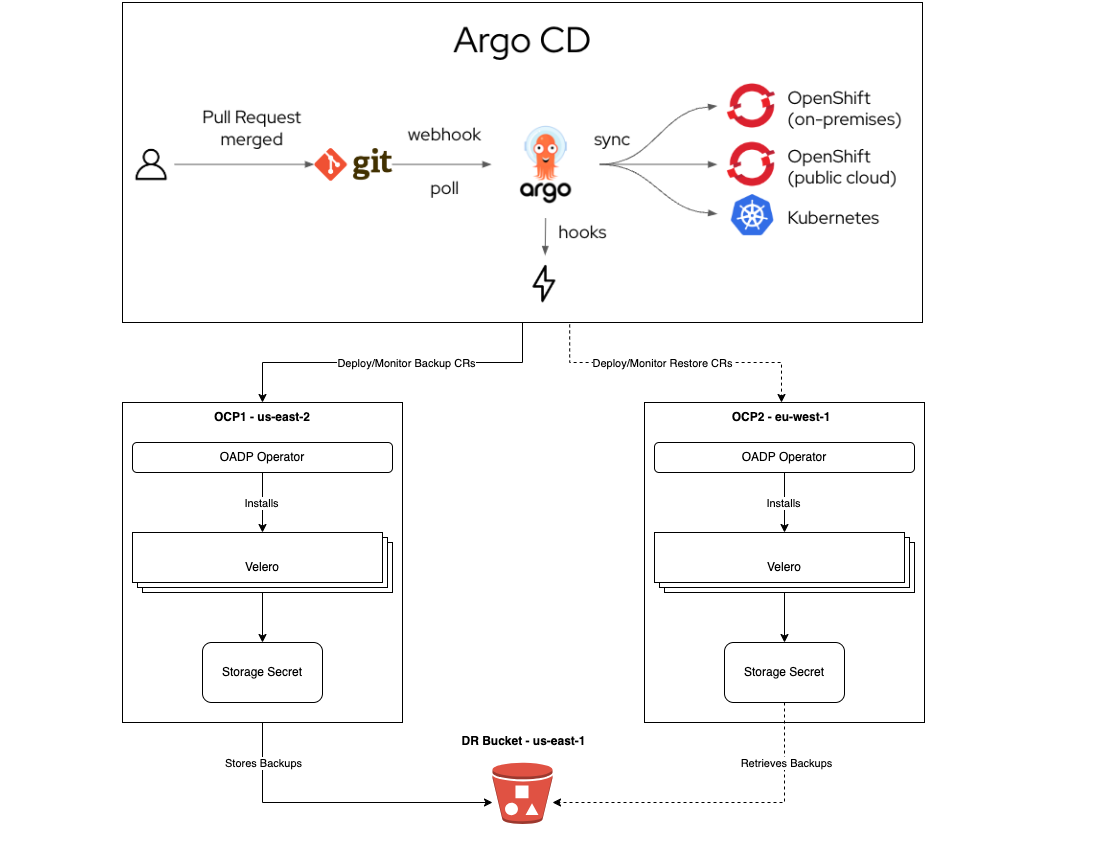

The goal is to demonstrate a cross-cluster backup and restore: we back up applications in a cluster running in the AWS us-east-2 region and then restore these apps on a cluster deployed in the AWS eu-west-1 region. Note that we could use this guide to set up an in-place backup and restore as well; I’ll point out the setup difference when we get there.

Here’s the architecture we are going to build:

This guide assumes there is an up-and-running OpenShift cluster, and that the default storage solution the cluster uses implements the Container Storage Interface (CSI). Moreover, the guide supplements application CI/CD best practices; it does not replace them. You should still opt for a fast, consistent, repeatable Continuous Deployment/Delivery process for recovering lost or deleted Kubernetes API objects.

- You must have an S3-compatible object storage.
- Ensure the storage solution implements the Container Storage Interface (CSI).
  - This must be the case for source (backup from) and target (restore to) clusters.
- Storage solution supports StorageClass and VolumeSnapshotClass API objects.
  - StorageClass is configured as Default and it is the only one with this status.
    - Annotate it with: storageclass.kubernetes.io/is-default-class: 'true'
  - VolumeSnapshotClass is configured as Default and it is the only one with this status.
    - Annotate: snapshot.storage.kubernetes.io/is-default-class: 'true'
    - Label: velero.io/csi-volumesnapshot-class: 'true'
- Linux Packages: yq, jq, python3, python3-pip, nfs-utils, openshift-cli, aws-cli
- Configure your bash_profile (~/.bashrc): alias velero='oc -n openshift-adp exec deployment/velero -c velero -it -- ./velero'
- Permission to run commands with sudo
- You must have the cluster-admin role.
- Up and Running GitOps Instance. To learn more, follow the steps described in Installing Red Hat OpenShift GitOps.
  - OpenShift GitOps instance should be installed in an Ops (Tooling) cluster.
  - To protect against regional failure, the Operations cluster should be running in a region different from the BACKUP cluster region.
    - For example, if the BACKUP cluster is running in us-east-1, the Operations Cluster should be deployed in us-east-2 or us-west-1.
- Applications being backed up should be healthy.
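The "only one default" requirement for StorageClass and VolumeSnapshotClass can be checked before going further. The following is a minimal sketch, not part of the original repository: the function names are hypothetical, and it assumes you are already logged in to the cluster you want to check with oc.

```shell
#!/usr/bin/env bash
# Preflight sketch: each cluster should have exactly one default StorageClass
# and exactly one default VolumeSnapshotClass. Function names are hypothetical.

# List the names of the default StorageClasses, one per line.
default_storageclasses() {
  oc get storageclass -o jsonpath='{range .items[?(@.metadata.annotations.storageclass\.kubernetes\.io/is-default-class=="true")]}{.metadata.name}{"\n"}{end}'
}

# List the names of the default VolumeSnapshotClasses, one per line.
default_snapshotclasses() {
  oc get volumesnapshotclass -o jsonpath='{range .items[?(@.metadata.annotations.snapshot\.storage\.kubernetes\.io/is-default-class=="true")]}{.metadata.name}{"\n"}{end}'
}

# Succeed only when stdin holds exactly one non-empty line.
exactly_one() {
  local count
  count=$(grep -c . || true)
  [ "$count" -eq 1 ]
}

# Run both checks; call once per cluster after `oc login`.
preflight() {
  default_storageclasses | exactly_one || {
    echo "expected exactly one default StorageClass" >&2
    return 1
  }
  default_snapshotclasses | exactly_one || {
    echo "expected exactly one default VolumeSnapshotClass" >&2
    return 1
  }
  echo "storage preflight OK"
}
```

Run preflight against both the BACKUP and the RESTORE cluster, since the requirement applies to each of them.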

oc login --token=SA_TOKEN --server=API_SERVER

ARGO_PASS=$(oc get secret/openshift-gitops-cluster -n openshift-gitops -o jsonpath='{.data.admin\.password}' | base64 -d)

ARGO_URL=$(oc get route openshift-gitops-server -n openshift-gitops -o jsonpath='{.spec.host}{"\n"}')

argocd login --insecure --grpc-web $ARGO_URL --username admin --password $ARGO_PASS

Generate a read-only Personal Access Token (PAT) from your GitHub account or any other Git solution provider.

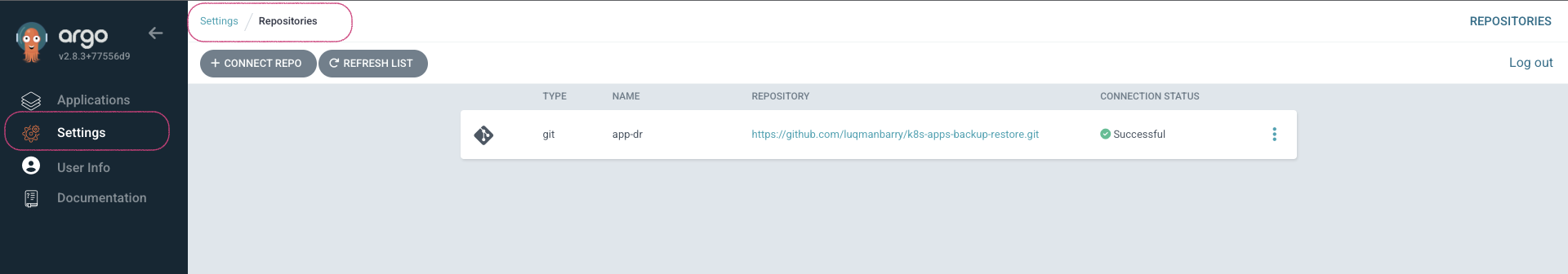

Using the username (for example, git) and password (the PAT) from above, register the repository with ArgoCD:

argocd repo add https://github.com/luqmanbarry/k8s-apps-backup-restore.git \
  --name CHANGE_ME \
  --username git \
  --password PAT_TOKEN \
  --upsert \
  --insecure-skip-server-verification

If there are no ArgoCD RBAC issues, the repository should show up in the ArgoCD web console, and the outcome should look like this:

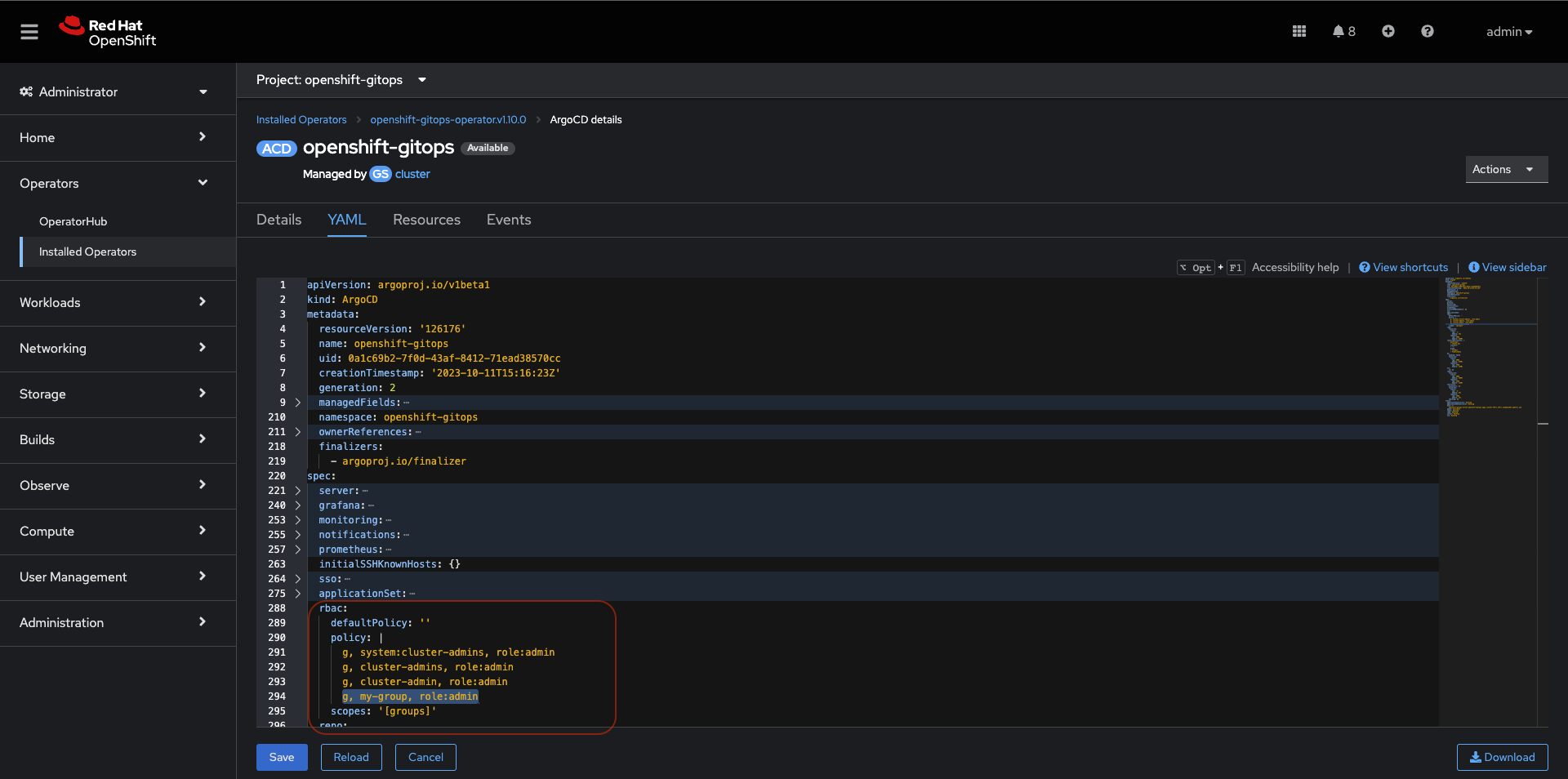

If the repository does not appear in the ArgoCD UI, take a look at the openshift-gitops-server-xxxx-xxxx pod logs. If you find error messages such as repository: permission denied: repositories, create, the cause is most likely RBAC; edit the ArgoCD custom resource to grant the group or user the required resources and actions.

For example:

Click on ArgoCD RBAC Configuration to learn more.
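One way to make such an edit from the command line is a merge patch on the ArgoCD custom resource. The sketch below is an illustration rather than the author's configuration: it assumes the default OpenShift GitOps instance (an ArgoCD resource named openshift-gitops in the openshift-gitops namespace), and the group name cluster-admins and both function names are hypothetical. A merge patch replaces any existing spec.rbac.policy value, so merge by hand if a policy is already set.

```shell
#!/usr/bin/env bash
# Sketch: map an OpenShift group to Argo CD's built-in role:admin.
# Function names and the example group are hypothetical.

# Print a JSON merge patch that binds group $1 to role:admin.
rbac_patch() {
  # \\n emits a literal backslash-n, which JSON decodes as a newline.
  printf '{"spec":{"rbac":{"policy":"g, %s, role:admin\\n","scopes":"[groups]"}}}' "$1"
}

# Apply the patch; the instance name and namespace are assumed defaults.
apply_rbac_patch() {
  oc patch argocd openshift-gitops -n openshift-gitops \
    --type merge -p "$(rbac_patch "$1")"
}

# Usage:
#   apply_rbac_patch cluster-admins
```

Granting role:admin is the bluntest option; a narrower policy line that only allows create on repositories would follow the same pattern.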

Use an account (a ServiceAccount is recommended) with permission to create Project, OperatorGroup, and Subscription resources.

Log in to the BACKUP cluster

BACKUP_CLUSTER_SA_TOKEN=CHANGE_ME

BACKUP_CLUSTER_API_SERVER=CHANGE_ME

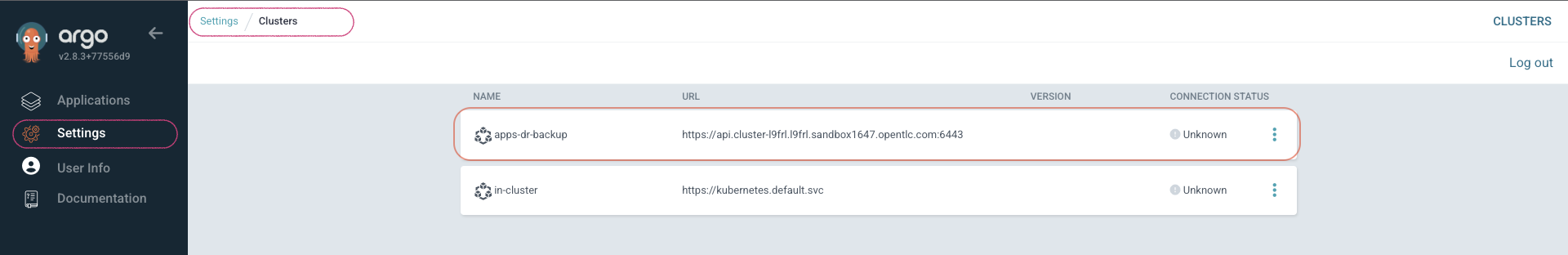

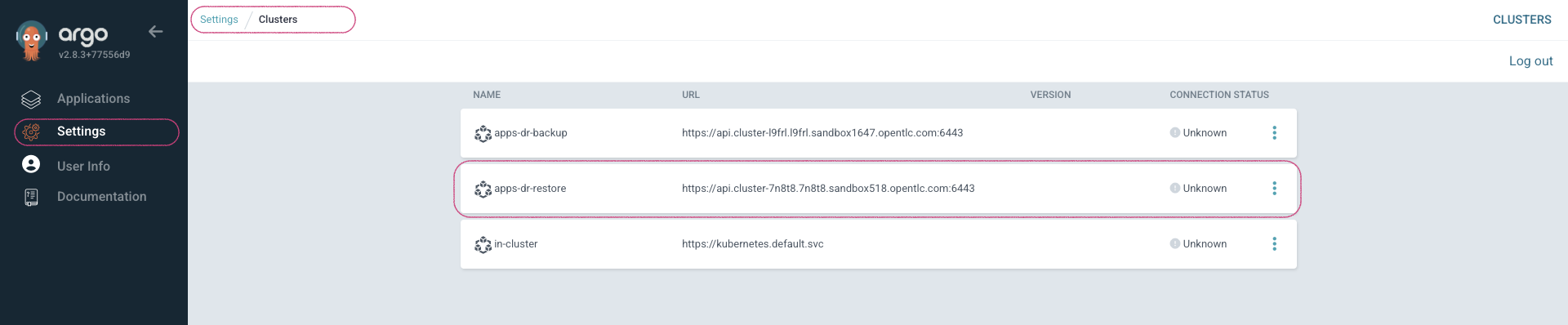

oc login --token=$BACKUP_CLUSTER_SA_TOKEN --server=$BACKUP_CLUSTER_API_SERVER

Add the BACKUP cluster to ArgoCD

BACKUP_CLUSTER_KUBE_CONTEXT=$(oc config current-context)

BACKUP_ARGO_CLUSTER_NAME="apps-dr-backup"

argocd cluster add $BACKUP_CLUSTER_KUBE_CONTEXT \
  --kubeconfig $HOME/.kube/config \
  --name $BACKUP_ARGO_CLUSTER_NAME \
  --yes

If things go as they should, the outcome should look like this:

You should use an IAM account with read/write permissions to just this one S3 bucket. For simplicity I placed the S3 credentials in the oadp-operator helm chart; however, AWS credentials should be injected at deploy time rather than being stored in Git.

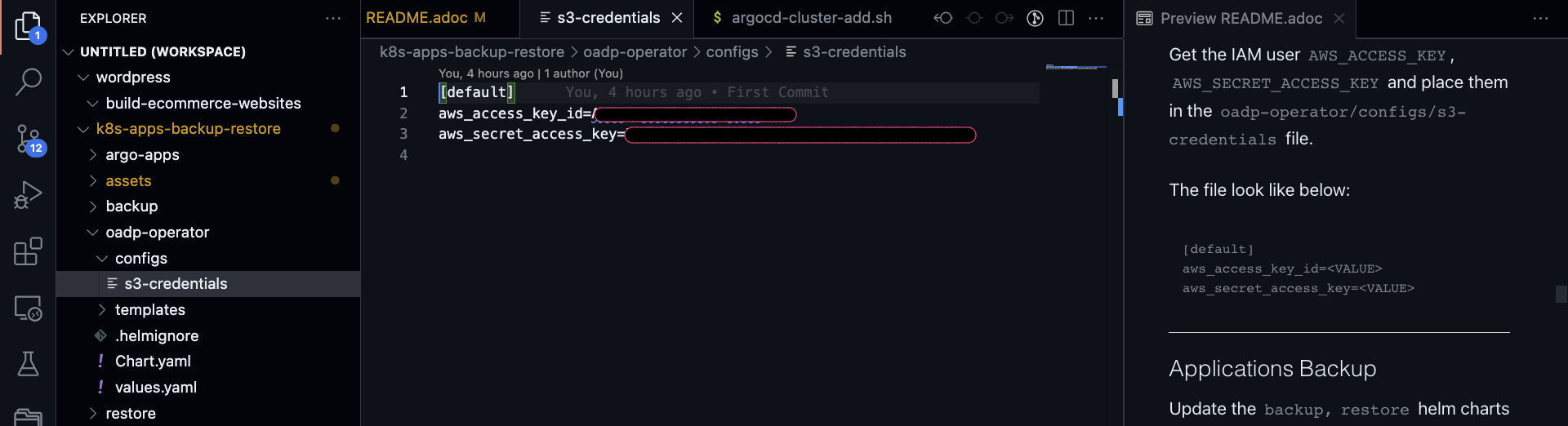

Get the IAM user's AWS_ACCESS_KEY_ID and AWS_SECRET_ACCESS_KEY, and place them in the oadp-operator/configs/s3-credentials file.

The file looks like below:
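Assuming the chart expects the standard AWS shared-credentials layout with a single [default] profile (an assumption worth checking against the oadp-operator chart), the file could be generated from environment variables instead of being edited by hand. The function name below is hypothetical.

```shell
#!/usr/bin/env bash
# Sketch: write an AWS shared-credentials file for the OADP chart.
# Assumes the [default] profile layout; the function name is hypothetical.

write_s3_credentials() {
  local target="$1"
  # Abort with a message when either variable is unset or empty.
  : "${AWS_ACCESS_KEY_ID:?export AWS_ACCESS_KEY_ID first}"
  : "${AWS_SECRET_ACCESS_KEY:?export AWS_SECRET_ACCESS_KEY first}"
  # The subshell keeps the restrictive umask from leaking into the caller.
  (
    umask 077
    printf '[default]\naws_access_key_id=%s\naws_secret_access_key=%s\n' \
      "$AWS_ACCESS_KEY_ID" "$AWS_SECRET_ACCESS_KEY" > "$target"
  )
}

# Usage, from the repository root:
#   write_s3_credentials oadp-operator/configs/s3-credentials
```

Generating the file at deploy time also keeps the keys out of Git, in line with the recommendation above.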

Both the backup helm chart and restore helm chart deploy the OADP operator defined in the oadp-operator helm chart as a dependency.

Once the S3 credentials are set, update the backup helm chart and restore helm chart dependencies. You only need to do it once per S3 bucket.

cd backup

helm dependency update

helm lint

cd ../restore

helm dependency update

helm lint

If no errors show up, you are ready to proceed to the next steps.
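If you prefer to refresh both charts in one go, the two steps can be wrapped in a loop. This is a small sketch with a hypothetical function name; it assumes you run it from the repository root, where the backup and restore chart directories live.

```shell
#!/usr/bin/env bash
# Sketch: refresh and lint both charts, starting from the repository root.
# The function name is hypothetical.

update_charts() {
  local chart
  for chart in backup restore; do
    # The subshell confines each cd, so the caller's directory never changes.
    ( cd "$chart" && helm dependency update && helm lint ) || {
      echo "chart '$chart' failed" >&2
      return 1
    }
  done
}

# Usage:
#   update_charts
```

Because each chart is processed in its own subshell, a failure in one chart stops the loop without leaving your shell in a different directory.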

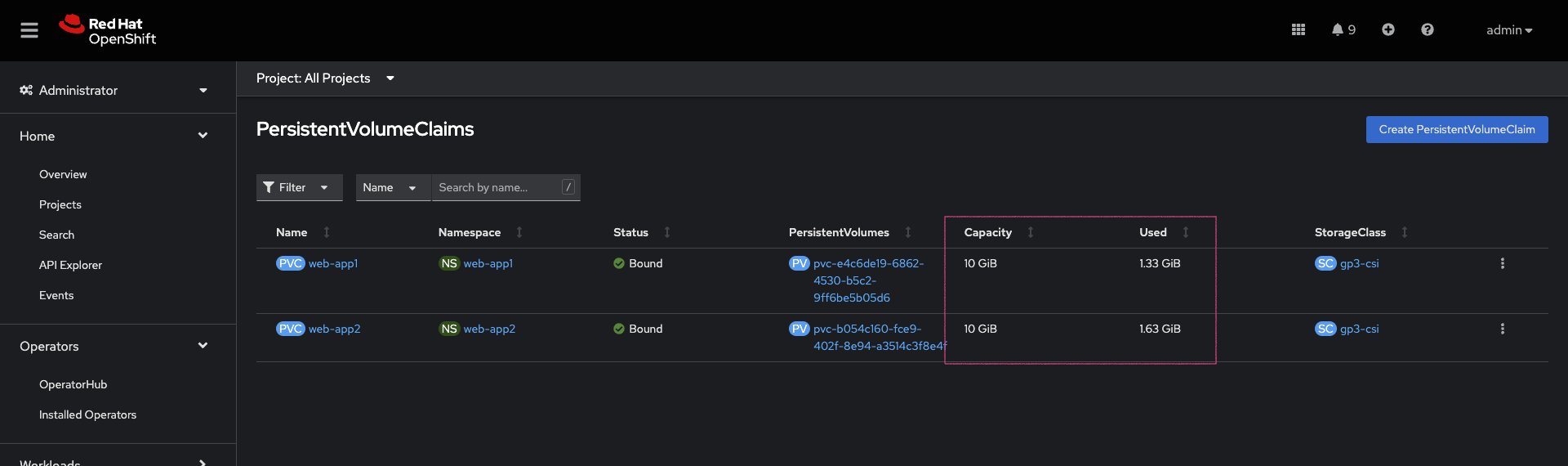

I have prepared two OpenShift templates that deploy two stateful apps (one Deployment, one DeploymentConfig) in the web-app1 and web-app2 namespaces.

Log in to the BACKUP cluster

BACKUP_CLUSTER_SA_TOKEN=CHANGE_ME

BACKUP_CLUSTER_API_SERVER=CHANGE_ME

oc login --token=$BACKUP_CLUSTER_SA_TOKEN --server=$BACKUP_CLUSTER_API_SERVER

# Web App1

oc process -f sample-apps/web-app1.yaml -o yaml | oc apply -f -

sleep 10

oc get deployment,pod,svc,route,pvc -n web-app1

# Web App2

oc process -f sample-apps/web-app2.yaml -o yaml | oc apply -f -

sleep 10

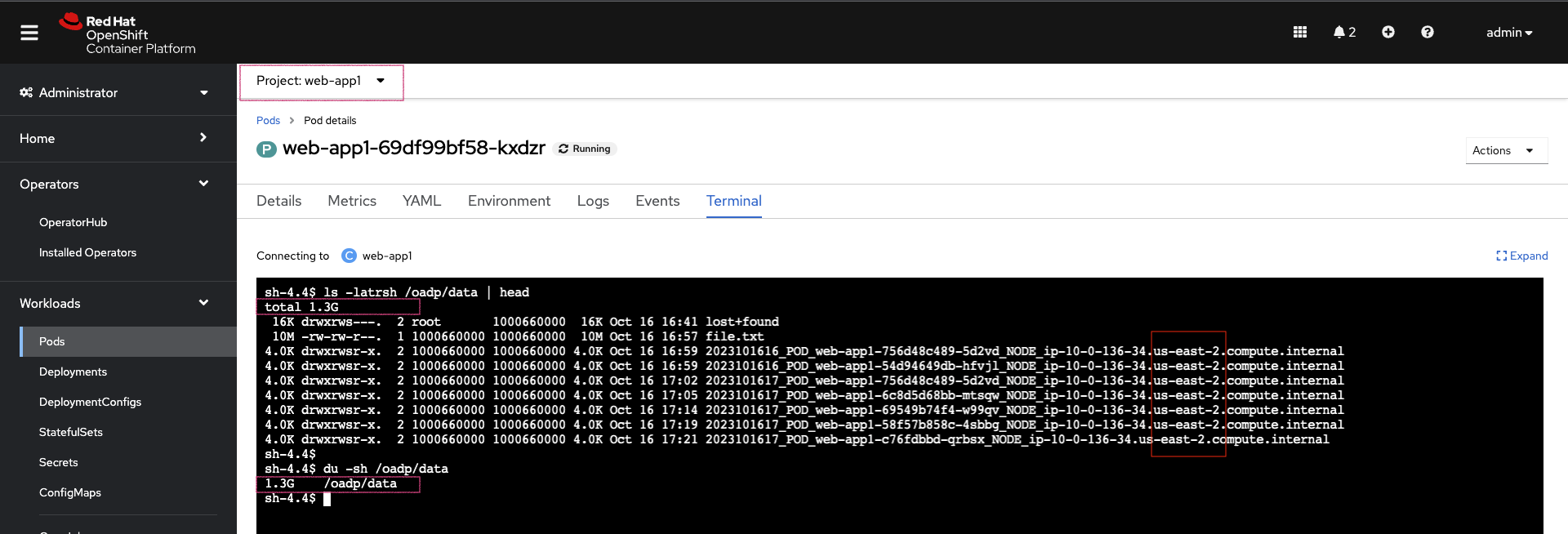

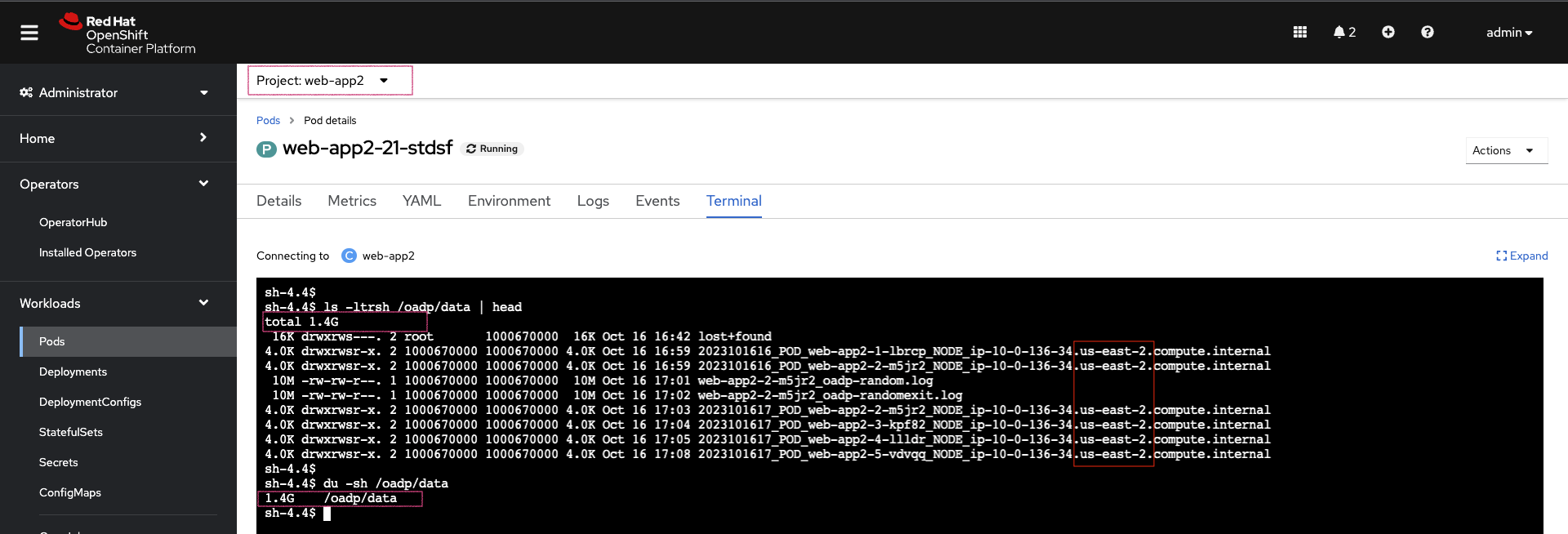

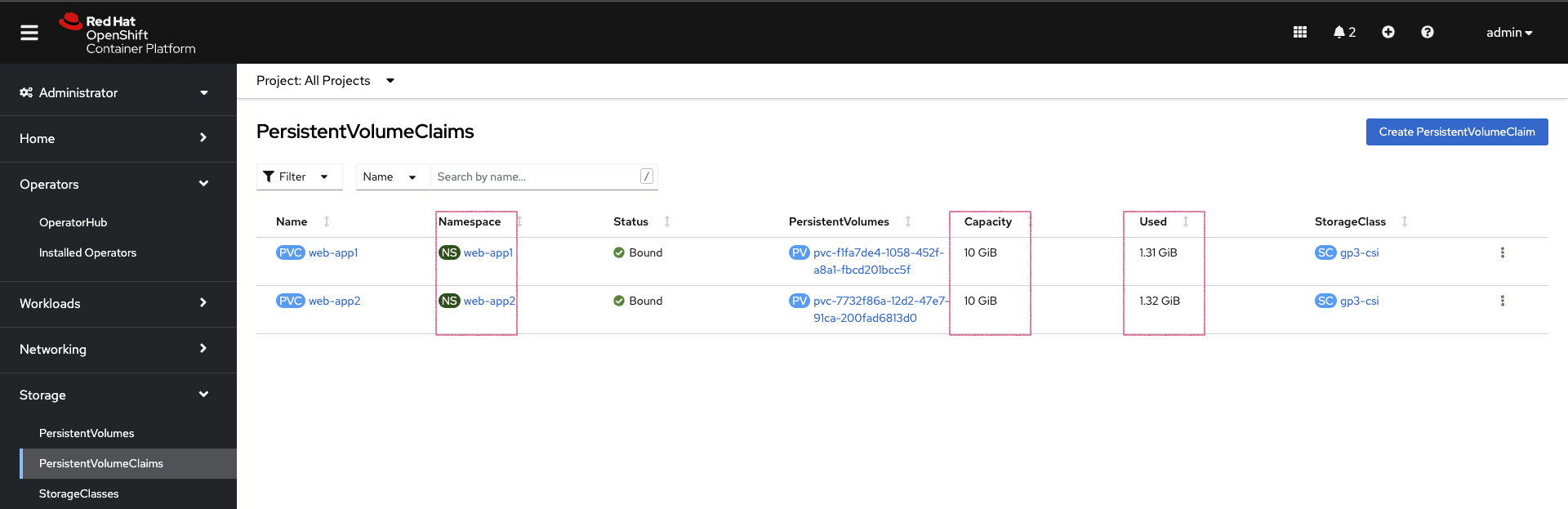

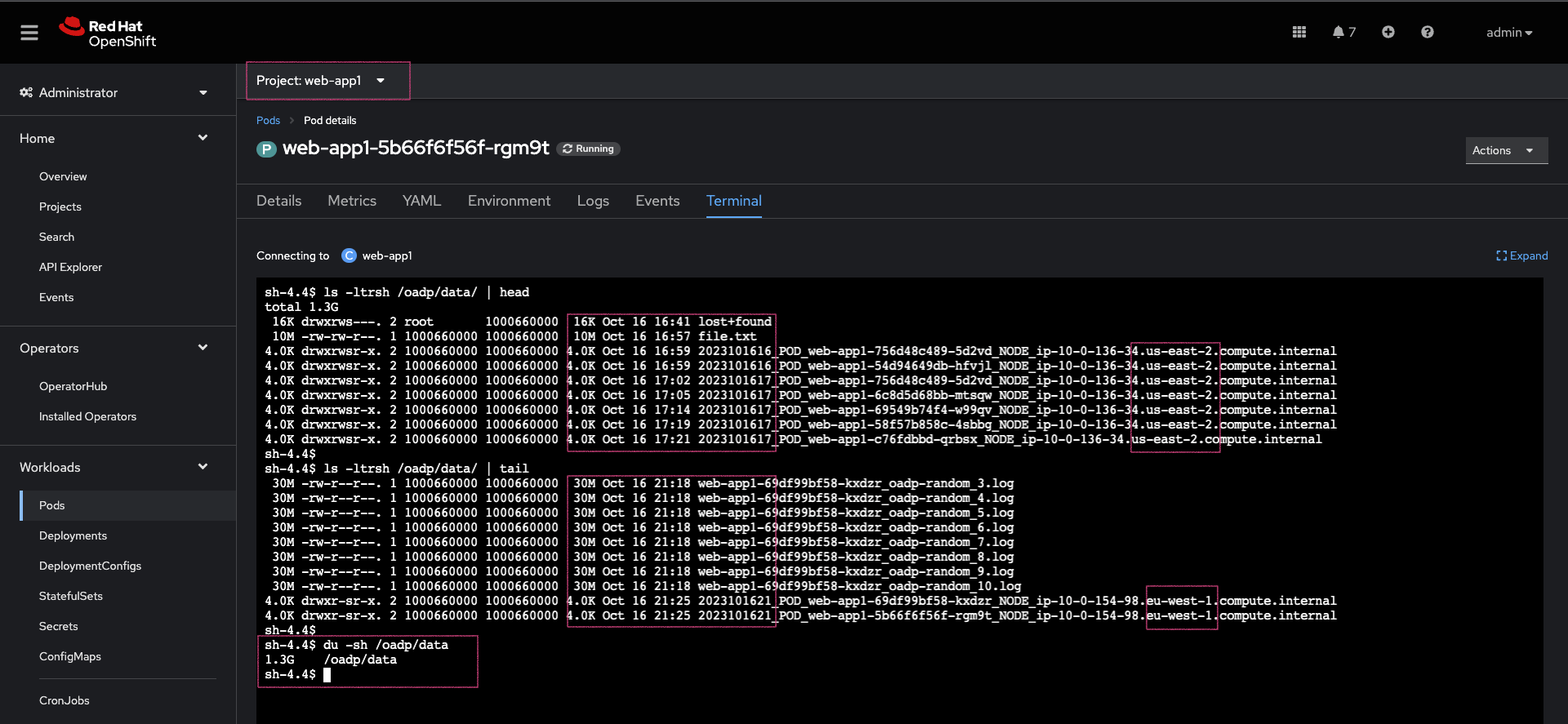

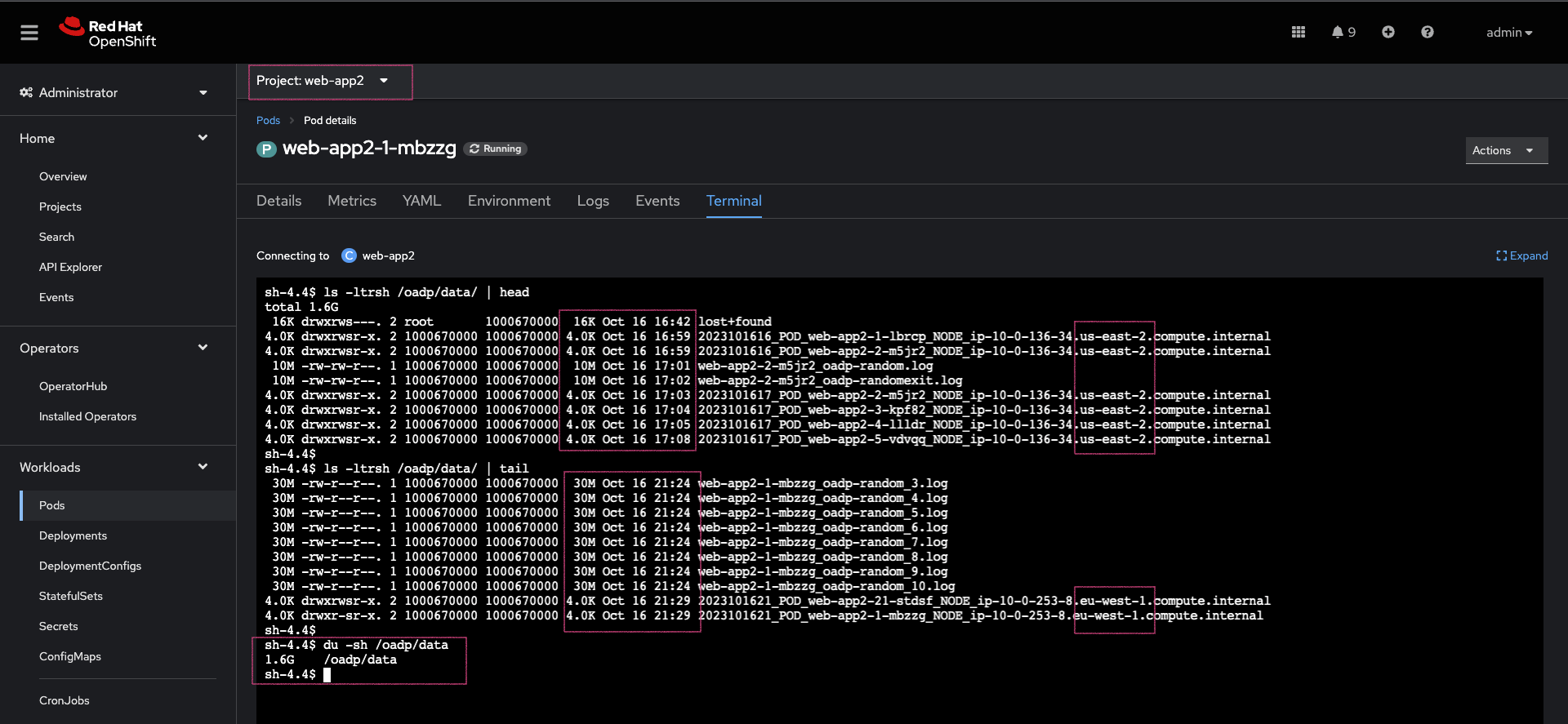

oc get deploymentconfig,pod,svc,route,pvc -n web-app2

Sample web-app1 and web-app2 volume data before starting the backup. Every time the pods spin up, the entry command generates about 30MB of data. To generate more data, delete the pods a few times.

After the restore, along with application resources, we expect this same data to be present on the restore cluster volumes.

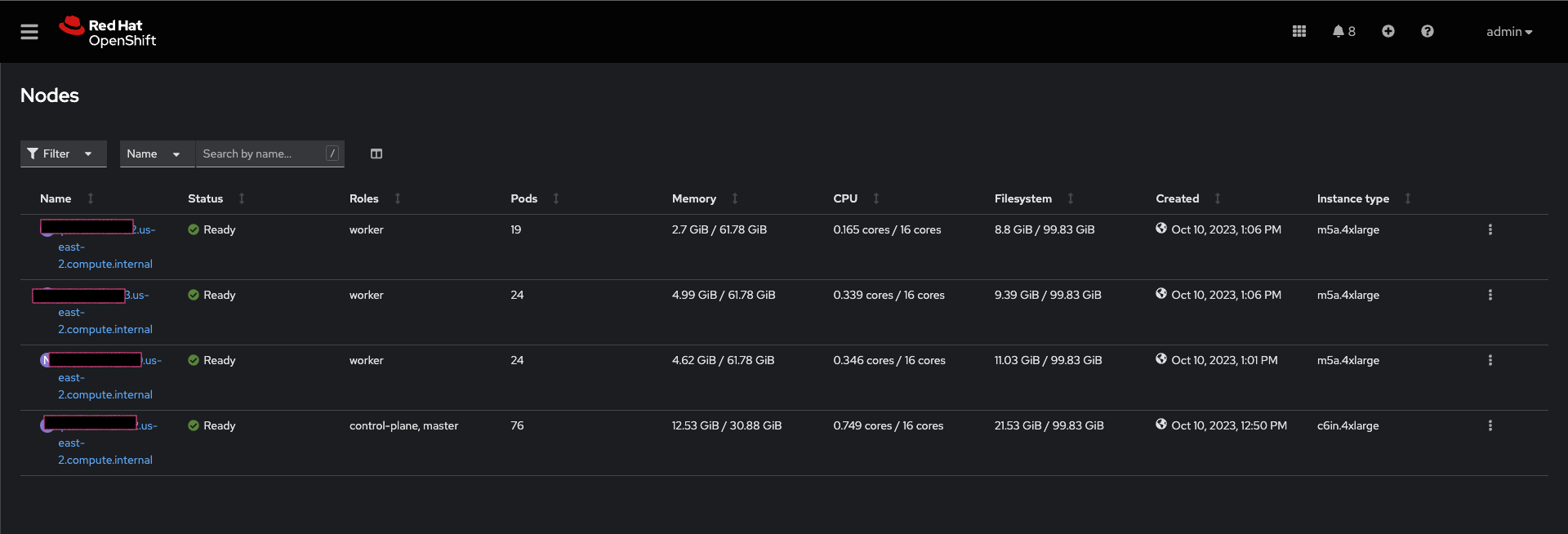

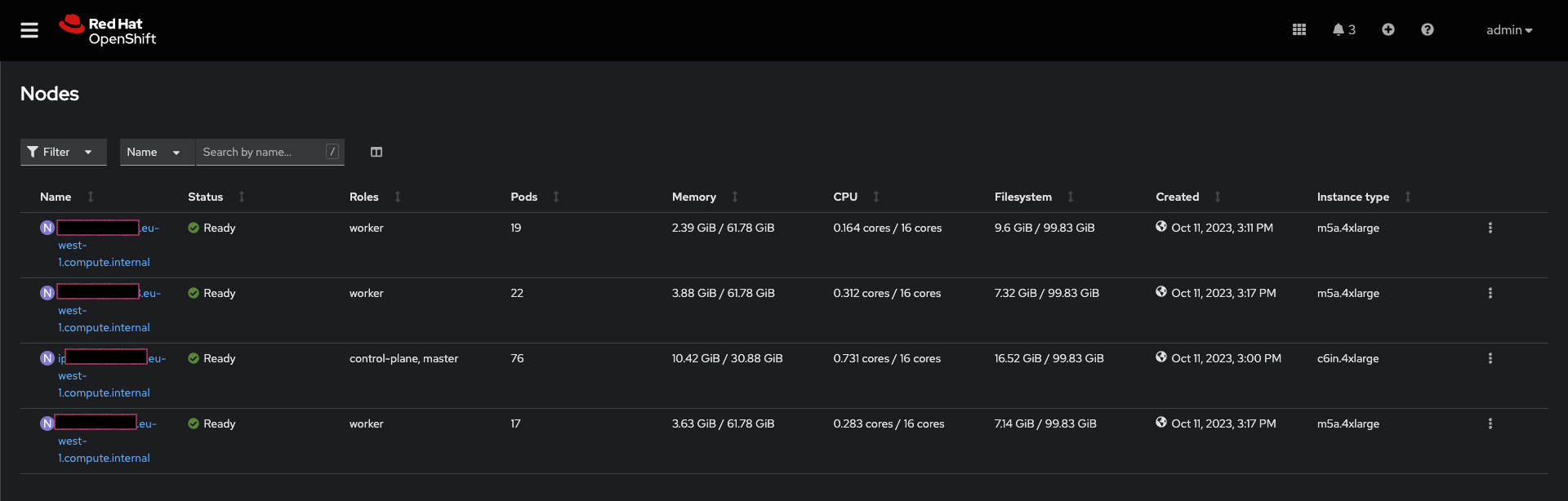

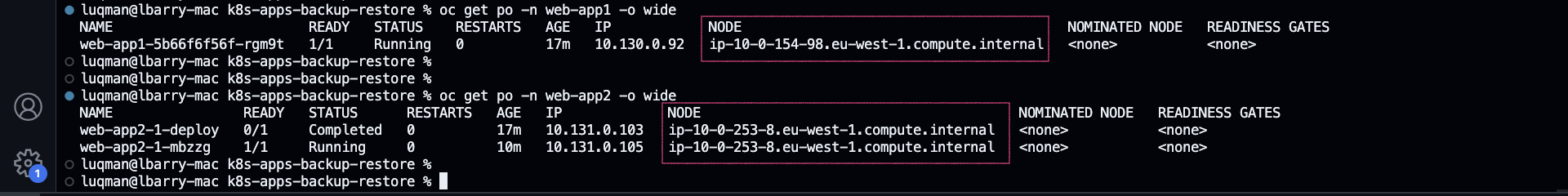

As you can see in the node name column, the BACKUP cluster is running in the us-east-2 region.

The ArgoCD Application is located here: argocd-applications/apps-dr-backup.yaml.

Make the necessary changes, and pay special attention to spec.source: and spec.destination:

spec:
  project: default
  source:
    repoURL: https://github.com/luqmanbarry/k8s-apps-backup-restore.git
    targetRevision: main
    path: backup
  # Destination cluster and namespace to deploy the application
  destination:
    # Cluster name as registered in ArgoCD
    name: apps-dr-backup
    # name: in-cluster
    namespace: openshift-adp

Update the backup/values.yaml file to provide the following:

- S3 bucket name
- namespace list
- backup schedule

For example:

global:
  operatorUpdateChannel: stable-1.2 # OADP Operator Subscription Channel
  inRestoreMode: false
  resourceNamePrefix: apps-dr-guide # OADP CR instances name prefix
  storage:
    provider: aws
    s3:
      bucket: apps-dr-guide # S3 BUCKET NAME
      dirPrefix: oadp
      region: us-east-1
backup:
  cronSchedule: "*/30 * * * *" # Cron Schedule - For assistance, use https://crontab.guru
  excludedNamespaces: []
  includedNamespaces:
    - web-app1
    - web-app2

Commit and push your changes to the git branch specified in the ArgoCD Application's spec.source.targetRevision. You could use a Pull Request (recommended) to update the branch being monitored by ArgoCD.

Log on to the OCP cluster where the GitOps instance is running.

OCP_CLUSTER_SA_TOKEN=CHANGE_ME

OCP_CLUSTER_API_SERVER=CHANGE_ME

oc login --token=$OCP_CLUSTER_SA_TOKEN --server=$OCP_CLUSTER_API_SERVER

Apply the ArgoCD argocd-applications/apps-dr-backup.yaml manifest.

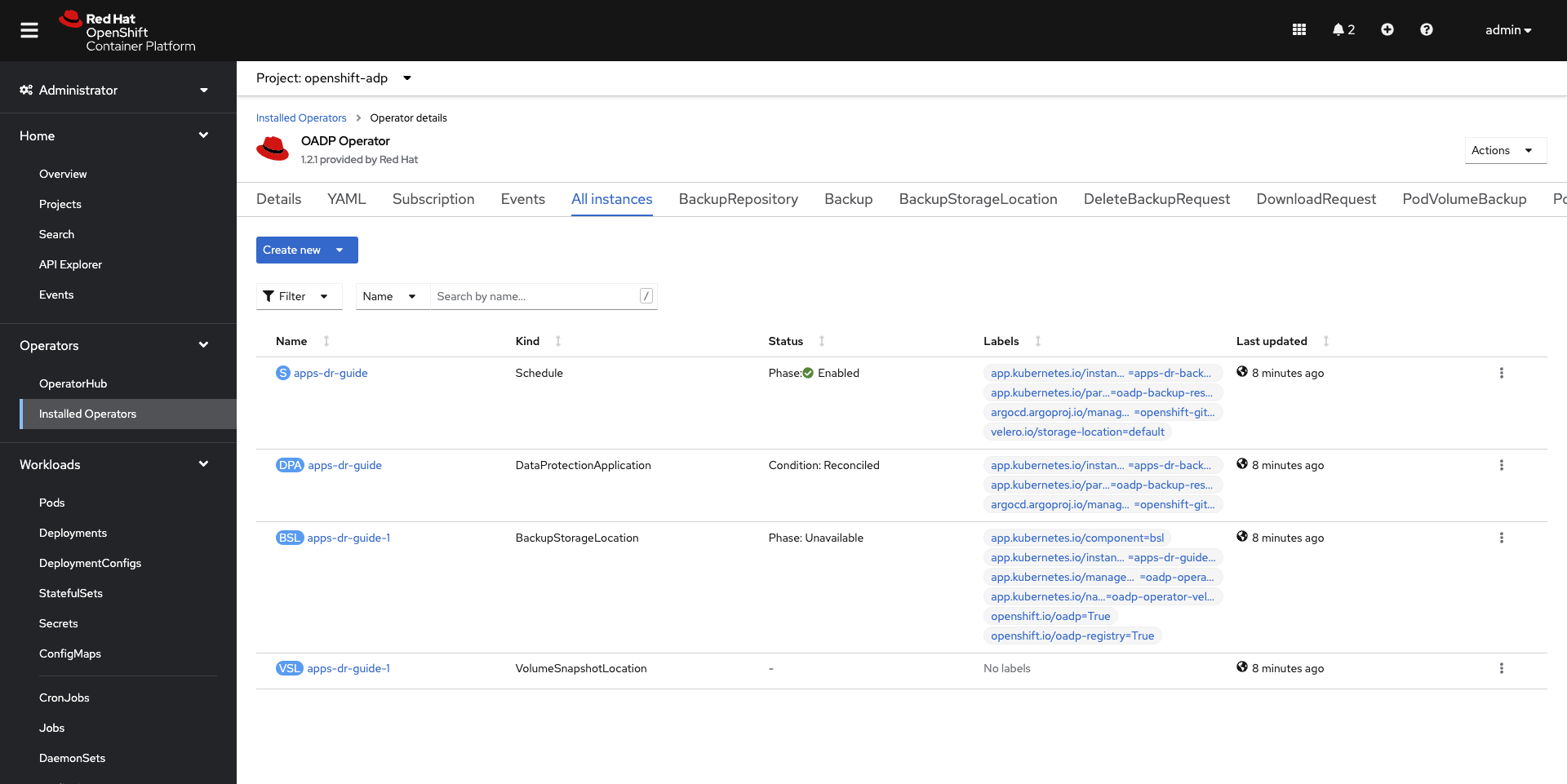

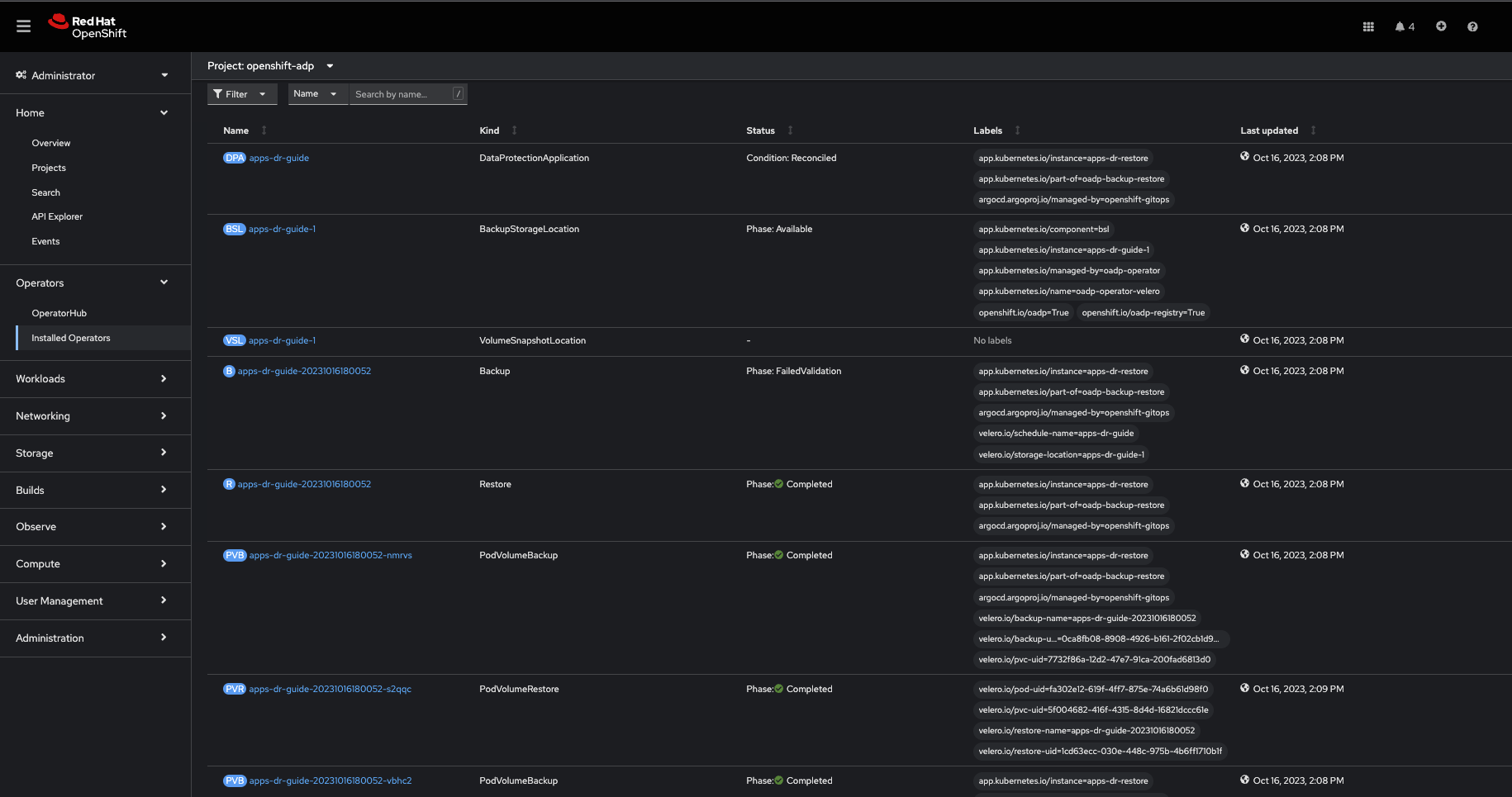

oc apply -f argocd-applications/apps-dr-backup.yaml

If things go as they should, the outcome should look like the image below:

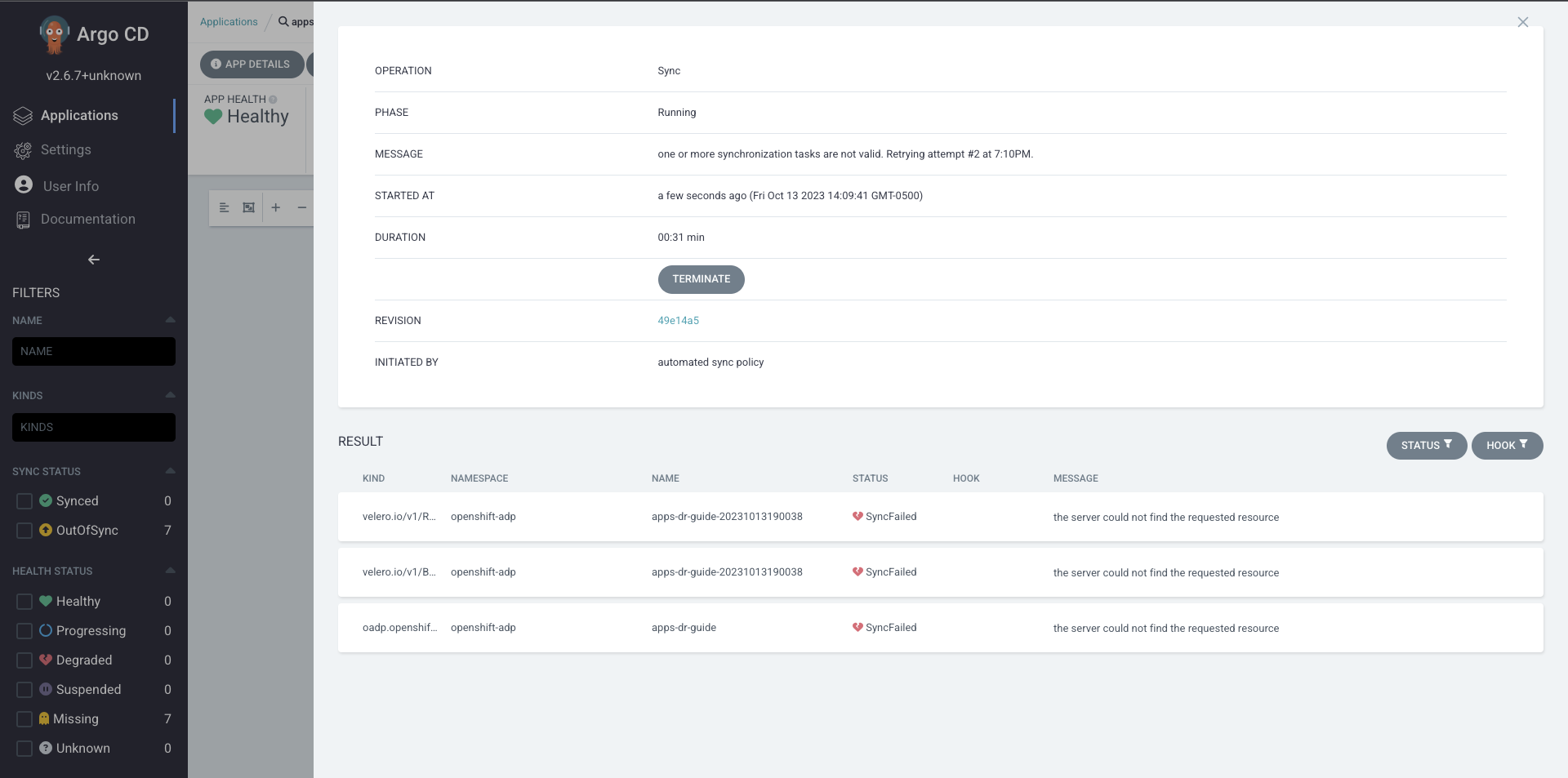

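If you run into errors such as DataProtectionApplication or Schedule CRDs not found, as shown below, install the OADP Operator, then uninstall it and delete the openshift-adp namespace. Uninstalling the operator normally leaves its CRDs on the cluster, which is what the next sync needs.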

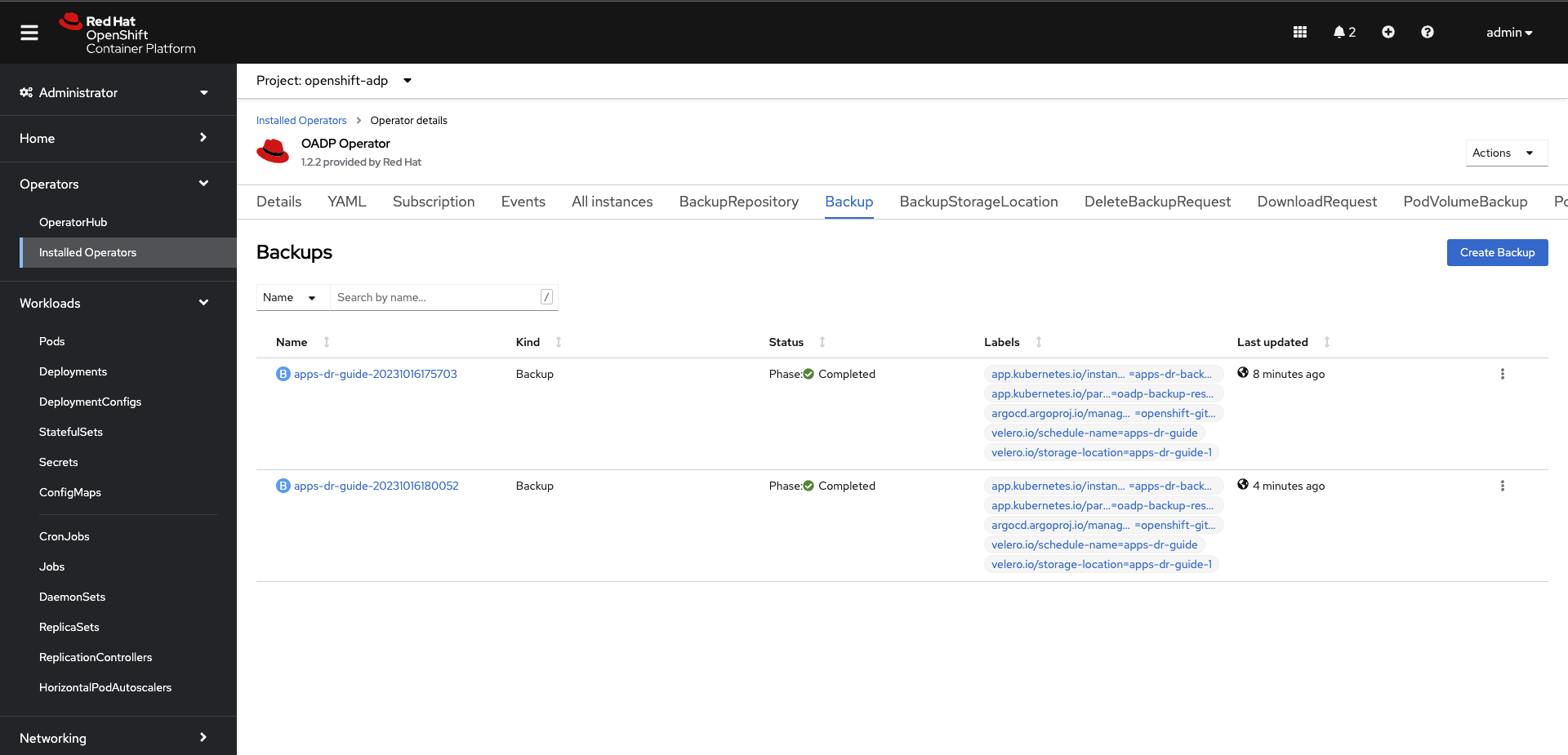

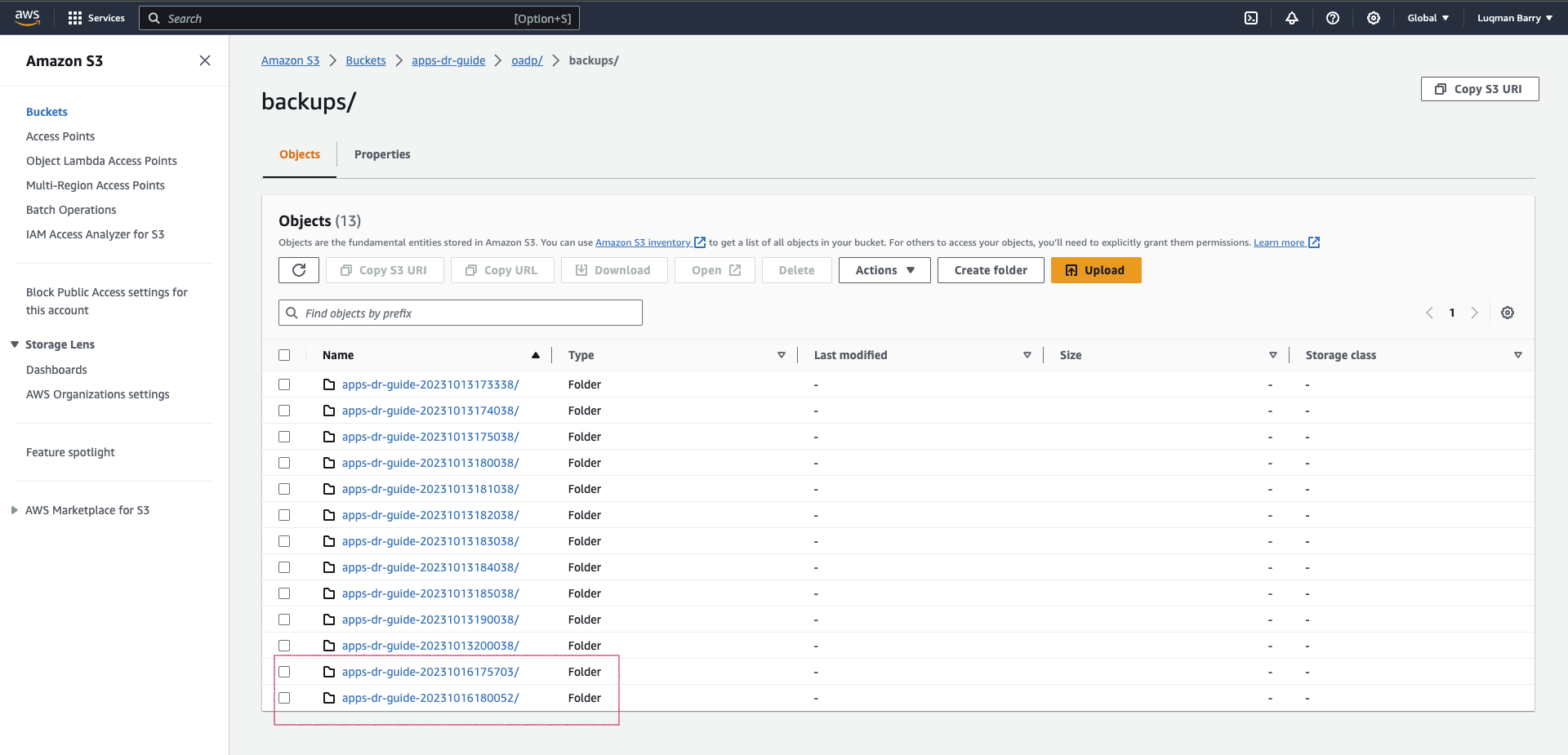

After a successful backup, resources will be saved in S3. For example, the backup directory content will look like this:

Follow the Troubleshooting guide at the bottom of the page if the OADP Operator > Backup status changes to Failed or PartiallyFailed.
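The backup status can also be watched from a terminal instead of the console. The sketch below polls the status.phase field of a Velero Backup object until it reaches a terminal phase. It assumes the backups live in the openshift-adp namespace used throughout this guide; the function names and default timings are hypothetical.

```shell
#!/usr/bin/env bash
# Sketch: poll a Velero Backup until it reaches a terminal phase.
# Function names and default timings are hypothetical.

# Print the current phase of backup $1 (empty until Velero sets one).
backup_phase() {
  oc get backups.velero.io "$1" -n openshift-adp -o jsonpath='{.status.phase}'
}

# Wait for backup $1; $2 = max attempts (default 60), $3 = delay in seconds (default 30).
wait_for_backup() {
  local name="$1" attempts="${2:-60}" delay="${3:-30}" phase i=0
  while [ "$i" -lt "$attempts" ]; do
    phase=$(backup_phase "$name")
    case "$phase" in
      Completed)
        echo "$name: Completed"
        return 0 ;;
      Failed|PartiallyFailed|FailedValidation)
        echo "$name: $phase" >&2
        return 1 ;;
      *)
        # New, InProgress, or any other intermediate phase: keep waiting.
        sleep "$delay" ;;
    esac
    i=$((i + 1))
  done
  echo "$name: timed out" >&2
  return 2
}

# Usage:
#   wait_for_backup apps-dr-guide-20231016200052
```

Treating only the known failure phases as terminal keeps the loop tolerant of intermediate phases that differ between Velero versions.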

As you can see in the node name column, the RESTORE cluster is running in the eu-west-1 region.

Important: If the RESTORE cluster is the same as the BACKUP cluster, skip steps #1, #2, #3, #4, and start from step #5.

1. Setup: Install the "OpenShift GitOps" Operator

Log in to the RESTORE cluster

RESTORE_CLUSTER_SA_TOKEN=CHANGE_ME

RESTORE_CLUSTER_API_SERVER=CHANGE_ME

oc login --token=$RESTORE_CLUSTER_SA_TOKEN --server=$RESTORE_CLUSTER_API_SERVER

Log in to the ArgoCD CLI

ARGO_PASS=$(oc get secret/openshift-gitops-cluster -n openshift-gitops -o jsonpath='{.data.admin\.password}' | base64 -d)

ARGO_URL=$(oc get route openshift-gitops-server -n openshift-gitops -o jsonpath='{.spec.host}{"\n"}')

argocd login --insecure --grpc-web $ARGO_URL --username admin --password $ARGO_PASS

Add the OADP configs repository

argocd repo add https://github.com/luqmanbarry/k8s-apps-backup-restore.git \
  --name CHANGE_ME \
  --username git \
  --password PAT_TOKEN \
  --upsert \
  --insecure-skip-server-verification

If there are no ArgoCD RBAC issues, the repository should show up in the ArgoCD web console, and the outcome should look like this:

RESTORE_CLUSTER_KUBE_CONTEXT=$(oc config current-context)

RESTORE_ARGO_CLUSTER_NAME="apps-dr-restore"

argocd cluster add $RESTORE_CLUSTER_KUBE_CONTEXT \
  --kubeconfig $HOME/.kube/config \
  --name $RESTORE_ARGO_CLUSTER_NAME \
  --yes

If things go as they should, the outcome should look like this:

Log in to the AWS CLI

export AWS_ACCESS_KEY_ID=CHANGE_ME

export AWS_SECRET_ACCESS_KEY=CHANGE_ME

export AWS_SESSION_TOKEN=CHANGE_ME # Optional in some cases

Verify you have successfully logged in by listing objects in the S3 bucket:

# aws s3 ls s3://BUCKET_NAME

# For example

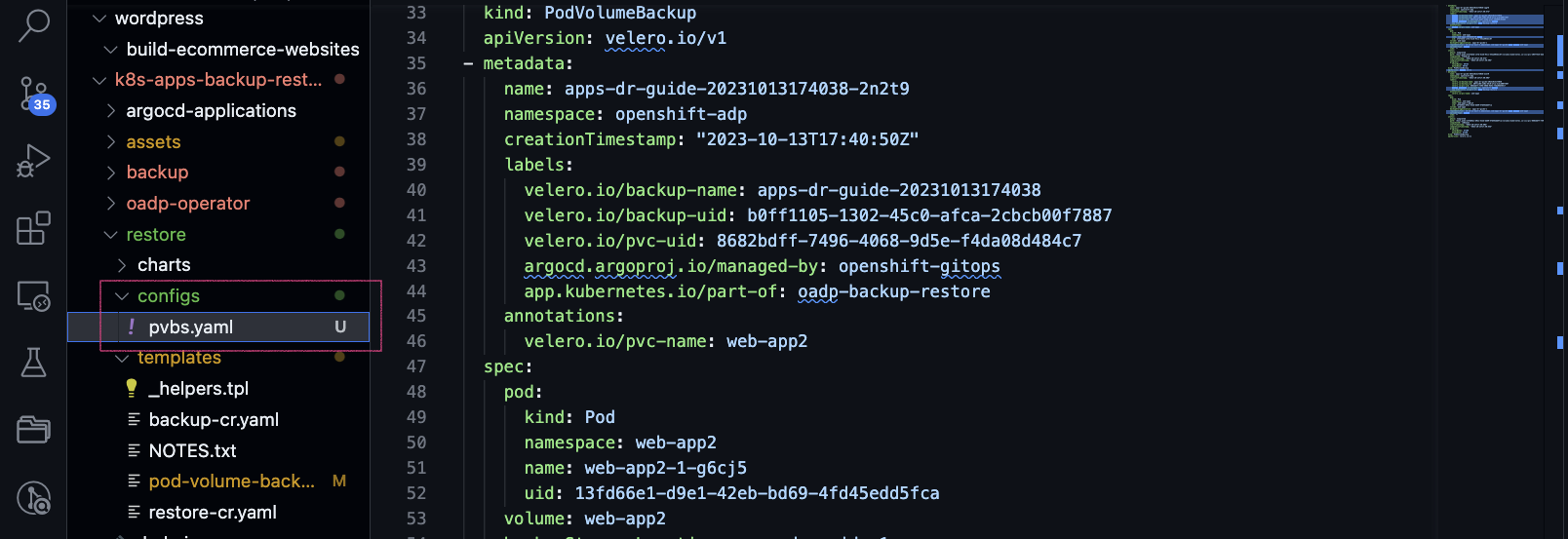

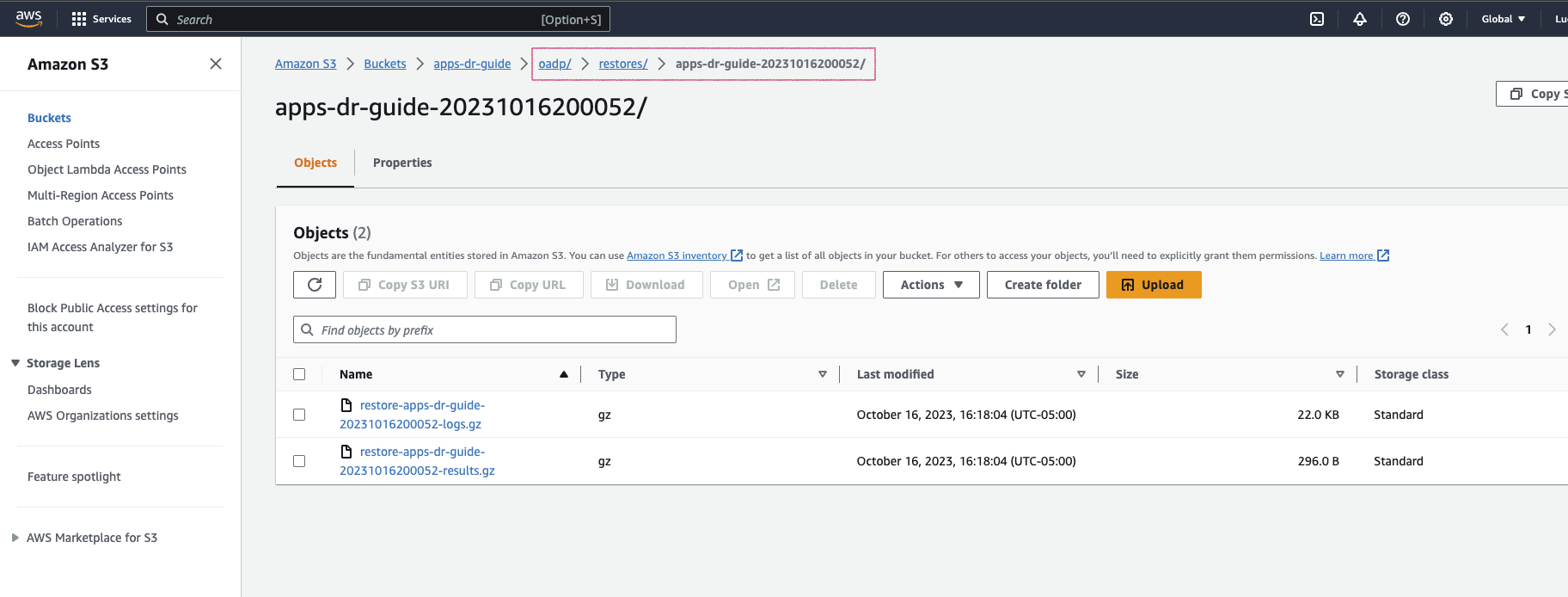

aws s3 ls s3://apps-dr-guide

In S3, select the backup (usually the latest) you want to restore from, find the BACKUP_NAME-podvolumebackups.json.gz file, and copy its S3 URI.

In the example below, the backup name is apps-dr-guide-20231016200052.
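If you would rather assemble the URI than browse for it, the path can be derived from the bucket, the dirPrefix, and the backup name. The sketch below assumes Velero's usual object layout, in which each backup's files sit under PREFIX/backups/BACKUP_NAME/; both function names are hypothetical, so confirm the result against what you see in the bucket.

```shell
#!/usr/bin/env bash
# Sketch: derive the podvolumebackups S3 URI from bucket, prefix and backup name.
# Assumes the layout <prefix>/backups/<name>/; function names are hypothetical.

# Pick the newest backup name from a list on stdin (one name per line).
# Names from one schedule end in a fixed-width timestamp, so a plain sort works.
latest_backup() {
  sort | tail -n 1
}

# Build the S3 URI for backup $3 in bucket $1 under prefix $2.
pvb_uri() {
  local bucket="$1" prefix="$2" name="$3"
  printf 's3://%s/%s/backups/%s/%s-podvolumebackups.json.gz\n' \
    "$bucket" "$prefix" "$name" "$name"
}

# Usage with the values from this guide:
#   pvb_uri apps-dr-guide oadp apps-dr-guide-20231016200052
```

The latest_backup helper only gives a meaningful answer when every name on its input comes from the same schedule, because it relies on the shared name prefix.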

Run the scripts/prepare-pvb.sh script; when prompted, provide the S3 URI you copied and then press enter.

cd scripts

./prepare-pvb.sh

Once the script completes, a new directory will be added in the restore helm chart.

Update the restore/values.yaml file with the backupName selected in S3.

Set isSameCluster: true if you are doing an in-place backup and restore.

isSameCluster: false # Set this flag to true if the RESTORE cluster is the same as the BACKUP cluster
global:
  operatorUpdateChannel: stable-1.2 # OADP Operator Sub Channel
  inRestoreMode: true
  resourceNamePrefix: apps-dr-guide # OADP CRs name prefix
backup:
  name: "apps-dr-guide-20231016200052" # Value comes from S3 bucket
  excludedNamespaces: [] # Leave empty unless you want to exclude certain namespaces from being restored.
  includedNamespaces: [] # Leave empty if you want all namespaces. You may provide namespaces if you want a subset of projects.

Once satisfied, commit your changes and push.

git add .

git commit -am "Initiating restore from apps-dr-guide-20231016200052"

git push

# Check that the repo is pristine

git status

Inspect the argocd-applications/apps-dr-restore.yaml manifest and ensure it is polling from the correct git branch and that the destination cluster is correct.

Log on to the OCP cluster where the GitOps instance is running; in our case, it is running on the recovery cluster.

OCP_CLUSTER_SA_TOKEN=CHANGE_ME

OCP_CLUSTER_API_SERVER=CHANGE_ME

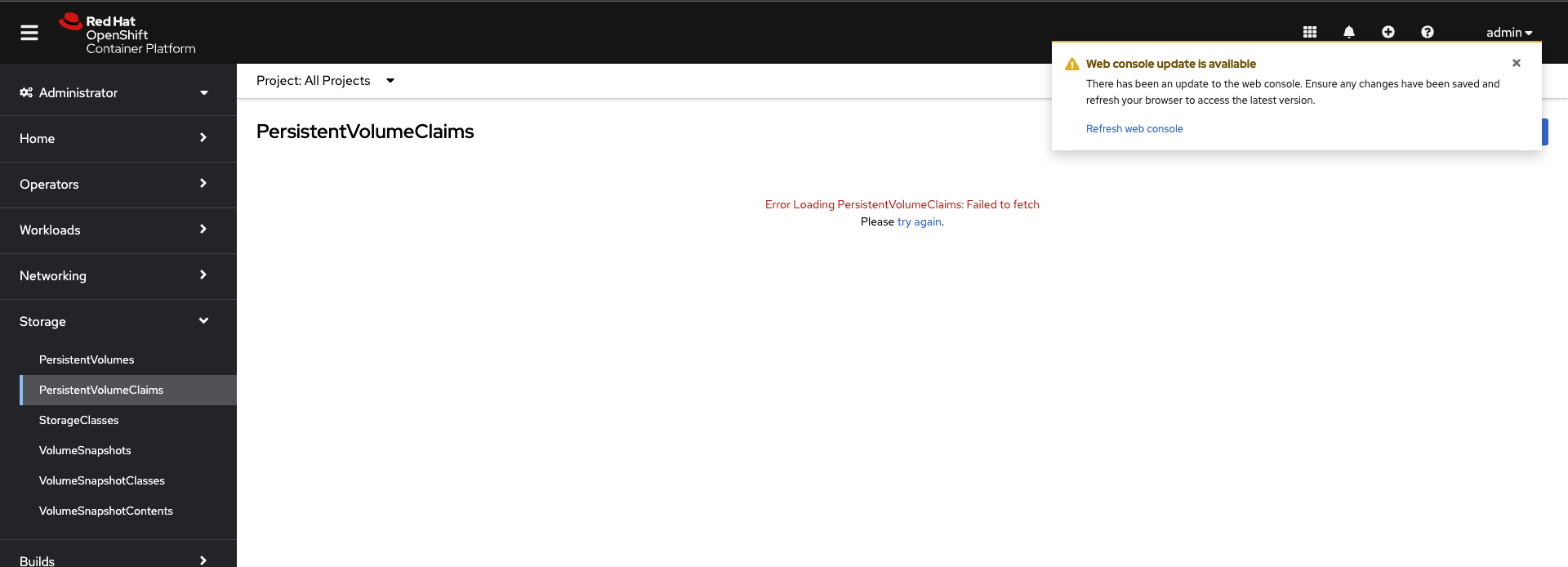

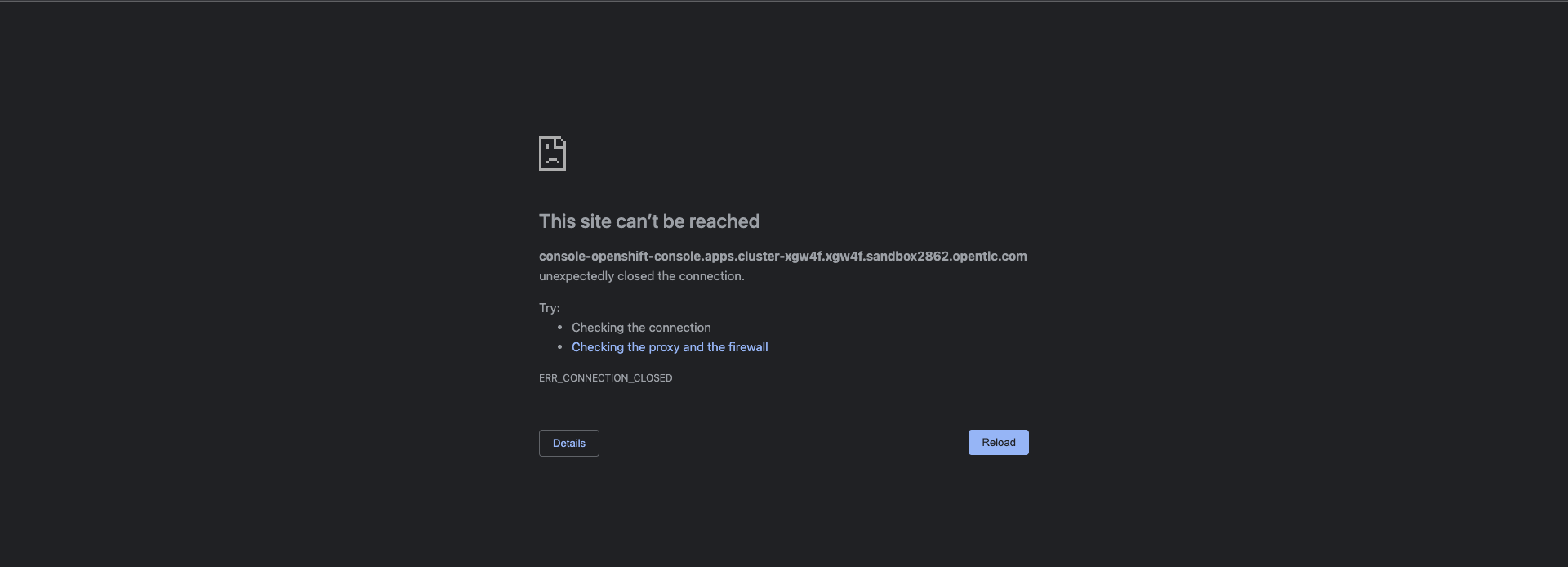

oc login --token=$OCP_CLUSTER_SA_TOKEN --server=$OCP_CLUSTER_API_SERVER

Before applying the ArgoCD Application to trigger the recovery, I will simulate a DR by shutting down the BACKUP cluster.

After reloading the page.

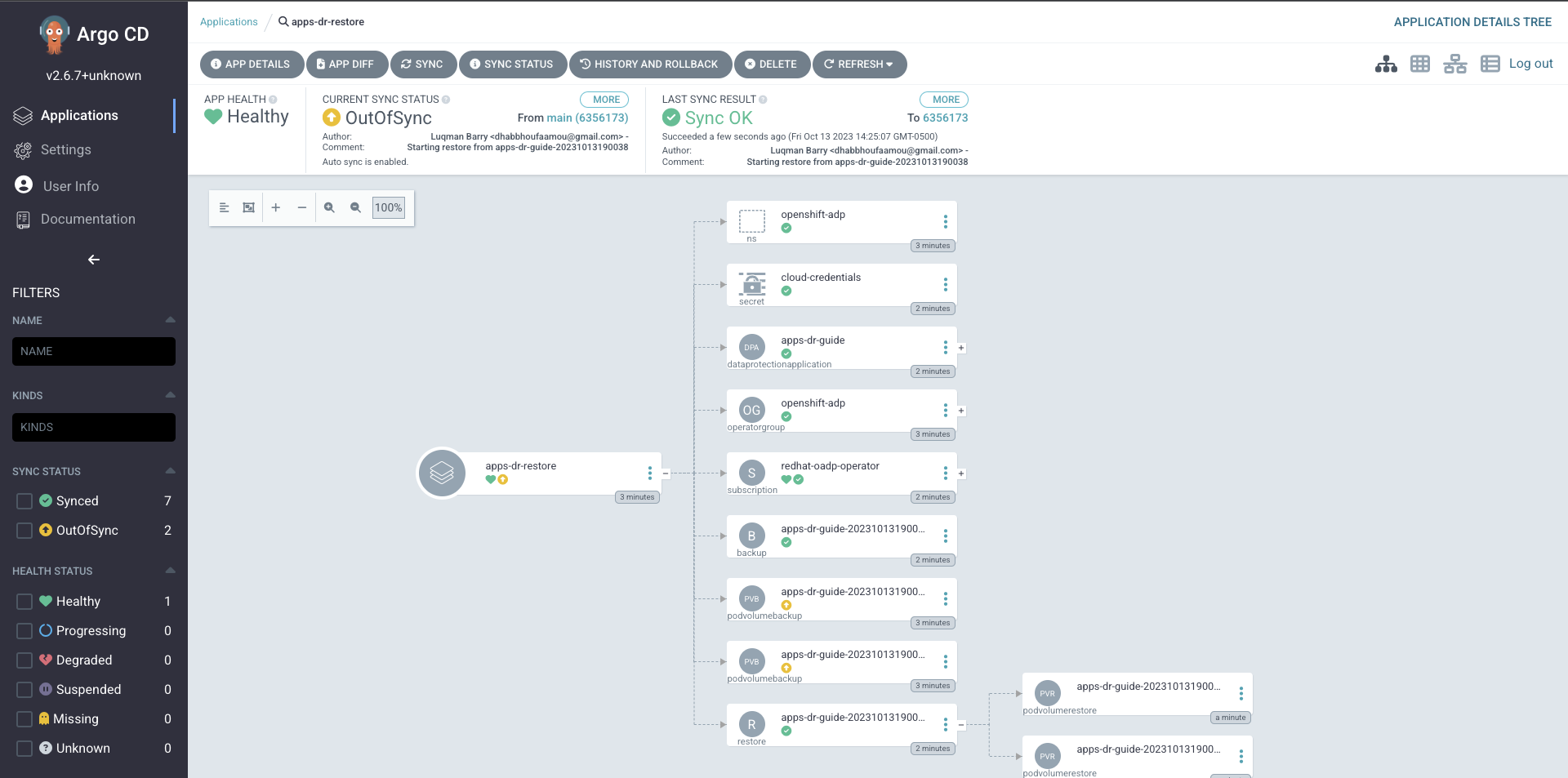

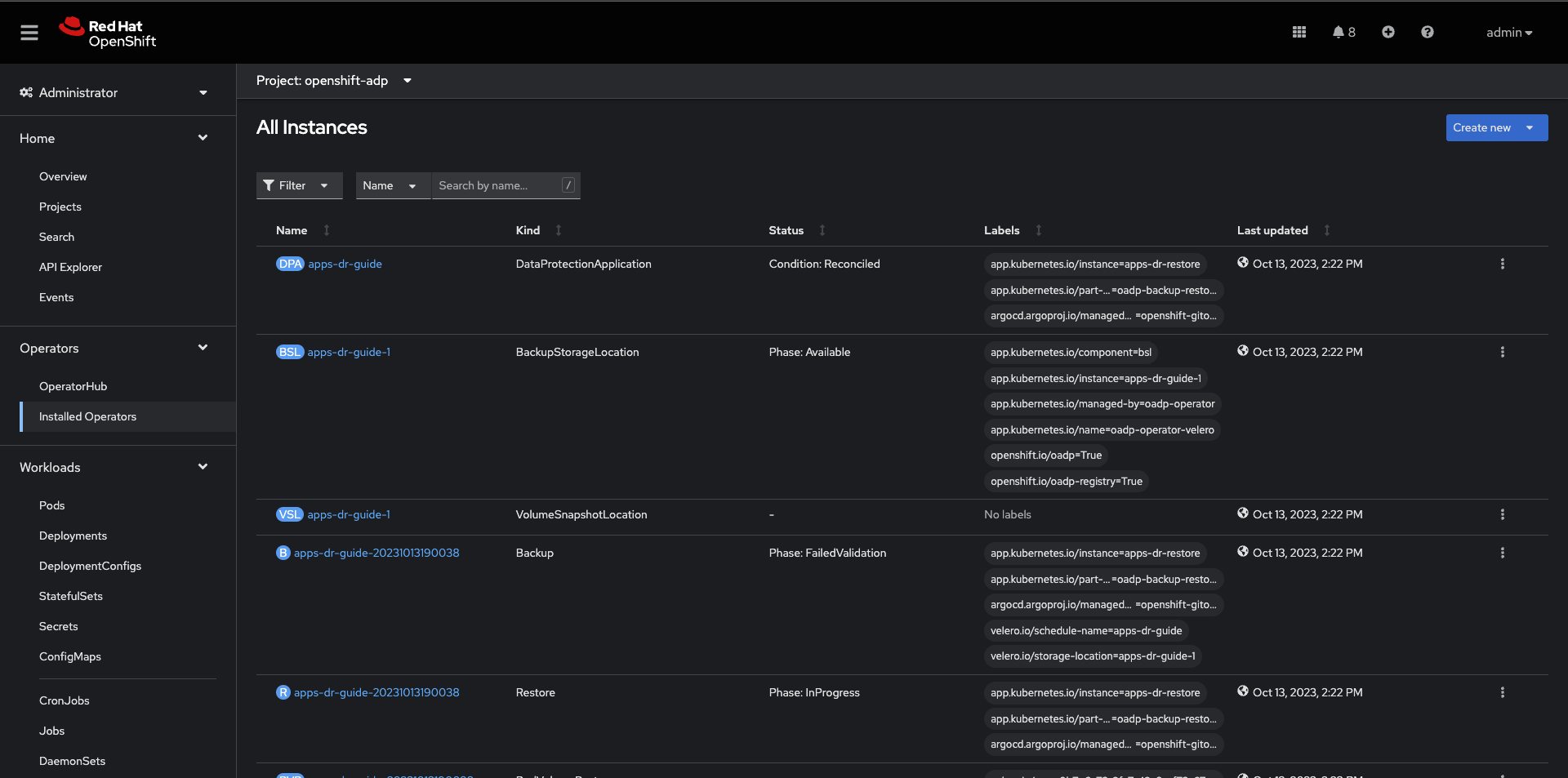

Apply the ArgoCD argocd-applications/apps-dr-restore.yaml manifest.

oc apply -f argocd-applications/apps-dr-restore.yaml

After a successful restore, resources will be saved to S3. For example, the restores directory content for backup apps-dr-guide-20231016200052 will look like this:

Follow the Troubleshooting guide below if the OADP Operator > Restore status changes to Failed or PartiallyFailed.
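Beyond the Restore status itself, a quick sanity check is that every PersistentVolumeClaim in the restored namespaces is Bound. The following sketch assumes you are logged in to the RESTORE cluster; the function names are hypothetical, and the namespaces are the sample ones from this guide.

```shell
#!/usr/bin/env bash
# Sketch: confirm every PVC in the restored namespaces is Bound.
# Function names are hypothetical.

# Print "<pvc-name><TAB><phase>" for each PVC in namespace $1.
pvc_phases() {
  oc get pvc -n "$1" \
    -o jsonpath='{range .items[*]}{.metadata.name}{"\t"}{.status.phase}{"\n"}{end}'
}

# Return non-zero if any PVC in the given namespaces is not Bound.
check_restored_pvcs() {
  local ns pending rc=0
  for ns in "$@"; do
    # Keep only non-empty lines whose second field is not "Bound".
    pending=$(pvc_phases "$ns" | awk 'NF && $2 != "Bound"')
    if [ -n "$pending" ]; then
      echo "$ns has PVCs that are not Bound:" >&2
      echo "$pending" >&2
      rc=1
    fi
  done
  return "$rc"
}

# Usage:
#   check_restored_pvcs web-app1 web-app2
```

A namespace without any PVCs passes trivially, so this complements, rather than replaces, inspecting the restored volume data.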

By default, OADP scales down DeploymentConfigs and does not clean up orphaned pods. Run the dc-restic-post-restore.sh script to do the cleanup.

Log in to the RESTORE OpenShift Cluster

oc login --token=SA_TOKEN_VALUE --server=CONTROL_PLANE_API_SERVER

Run the cleanup script.

# REPLACE THIS VALUE

BACKUP_NAME="apps-dr-guide-20231016200052"

./scripts/dc-restic-post-restore.sh "$BACKUP_NAME"

Set up the Velero CLI program before starting.

alias velero='oc -n openshift-adp exec deployment/velero -c velero -it -- ./velero'

Log in to the BACKUP OCP Cluster

oc login --token=SA_TOKEN_VALUE --server=BACKUP_CLUSTER_CONTROL_PLANE_API_SERVER

Describe the Backup

velero backup describe BACKUP_NAME

View Backup Logs (all)

velero backup logs BACKUP_NAME

View Backup Logs (warnings)

velero backup logs BACKUP_NAME | grep 'level=warn'

View Backup Logs (errors)

velero backup logs BACKUP_NAME | grep 'level=error'

Log in to the RESTORE OCP Cluster

oc login --token=SA_TOKEN_VALUE --server=RESTORE_CLUSTER_CONTROL_PLANE_API_SERVER

Describe the Restore CR

velero restore describe RESTORE_NAME

View Restore Logs (all)

velero restore logs RESTORE_NAME

View Restore Logs (warnings)

velero restore logs RESTORE_NAME | grep 'level=warn'

View Restore Logs (errors)

velero restore logs RESTORE_NAME | grep 'level=error'

To learn more about debugging OADP/Velero, use these links:

In this guide, we’ve demonstrated how to perform cross-cluster application backup and restore. We used OADP to back up applications running in an OpenShift v4.10 cluster deployed in us-east-2, simulated a disaster by shutting down that cluster, and restored the same apps and their state to an OpenShift v4.12 cluster running in eu-west-1.