This project is an unofficial implementation of AlphaGAN: Generative adversarial networks for natural image matting, published at BMVC 2018. So far, my experimental results are not as good as those reported in the paper.

Follow the instructions to contact the author for the dataset.

You may need to follow the method described in Deep Image Matting to generate the trimap from the alpha matte.

The trimaps are generated while the data are loaded.

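```python
import random

import cv2 as cv
import numpy as np


def generate_trimap(alpha):
    """Build a trimap (0 = background, 128 = unknown, 255 = foreground) from an alpha matte in [0, 255]."""
    # Randomize the kernel size and iteration count so the unknown band varies in width.
    k_size = random.choice(range(2, 5))
    iterations = np.random.randint(5, 15)
    kernel = cv.getStructuringElement(cv.MORPH_ELLIPSE, (k_size, k_size))
    dilated = cv.dilate(alpha, kernel, iterations=iterations)
    eroded = cv.erode(alpha, kernel, iterations=iterations)
    # Start with everything marked unknown, then fill in the certain regions.
    trimap = np.zeros(alpha.shape, dtype=np.uint8)
    trimap.fill(128)
    trimap[eroded >= 255] = 255  # still fully opaque after erosion: foreground
    trimap[dilated <= 0] = 0  # still fully transparent after dilation: background
    return trimap
```

The dataset structure in my project: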

```
Train
├── alpha   # the alpha ground-truth
├── fg      # the foreground image
├── input   # the real image composed by the fg & bg
MSCOCO
├── train2014   # the background image
```
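The `input` images are composites of a foreground over a background. A minimal sketch of that step, assuming the standard compositing equation `I = alpha * F + (1 - alpha) * B` and arrays that already share the same height and width. The function name `composite` and the toy arrays are illustrative and not taken from this project's code:

```python
import numpy as np


def composite(fg, bg, alpha):
    """Blend fg over bg with a uint8 alpha matte (0..255); all arrays share H x W."""
    a = alpha.astype(np.float32)[..., None] / 255.0  # H x W x 1, scaled to [0, 1]
    out = a * fg.astype(np.float32) + (1.0 - a) * bg.astype(np.float32)
    return np.clip(np.rint(out), 0, 255).astype(np.uint8)


# Toy 2x2 example: white foreground over a black background.
fg = np.full((2, 2, 3), 255, dtype=np.uint8)
bg = np.zeros((2, 2, 3), dtype=np.uint8)
alpha = np.array([[0, 255], [128, 64]], dtype=np.uint8)
image = composite(fg, bg, alpha)
```

With a white foreground and a black background, each output pixel simply equals its alpha value, which makes the blend easy to check by eye.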

- Use SyncBatchNorm instead of PyTorch's original BatchNorm when training on multiple GPUs.
- Use GroupNorm [2].
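GroupNorm computes its statistics per sample, so its output does not depend on the batch size, which is what makes it usable with a batch size of 1. A minimal NumPy sketch of the normalization itself, without the learnable scale and shift. The name `group_norm` and the tensor shapes are illustrative assumptions; in PyTorch the corresponding layer is `torch.nn.GroupNorm`:

```python
import numpy as np


def group_norm(x, num_groups, eps=1e-5):
    """Normalize x of shape (N, C, H, W) over each group of channels, per sample."""
    n, c, h, w = x.shape
    assert c % num_groups == 0, "channels must divide evenly into groups"
    g = x.reshape(n, num_groups, -1)  # each row holds one group of one sample
    mean = g.mean(axis=2, keepdims=True)
    var = g.var(axis=2, keepdims=True)
    return ((g - mean) / np.sqrt(var + eps)).reshape(n, c, h, w)


rng = np.random.default_rng(0)
batch = rng.normal(size=(4, 8, 5, 5)).astype(np.float32)

# Normalizing one sample alone matches normalizing it inside a larger batch.
alone = group_norm(batch[:1], num_groups=2)
in_batch = group_norm(batch, num_groups=2)[:1]
same = np.allclose(alone, in_batch, atol=1e-6)
```

For the multi-GPU case, PyTorch provides `torch.nn.SyncBatchNorm.convert_sync_batchnorm` to swap a model's BatchNorm layers for synchronized ones.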

4 GPUs, batch size 32, SyncBatchNorm

- Achieved SAD=78.22 after 21 epochs.

1 GPU, batch size 1, GroupNorm

- Achieved SAD=68.61, MSE=0.03189 after 33 epochs.
- Achieved SAD=61.9, MSE=0.02878 after xx epochs.
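For reference, a minimal sketch of how SAD and MSE are commonly computed in the matting literature, assuming the usual convention of evaluating only the unknown trimap region (value 128) and reporting SAD in thousands. Whether the numbers above follow exactly this convention is an assumption, and `sad_mse` is an illustrative name:

```python
import numpy as np


def sad_mse(pred, gt, trimap):
    """pred and gt are alpha mattes in [0, 1]; trimap uses 128 for the unknown region."""
    unknown = trimap == 128
    diff = (pred.astype(np.float64) - gt.astype(np.float64))[unknown]
    sad = np.abs(diff).sum() / 1000.0  # sum of absolute differences, in thousands
    mse = (diff ** 2).mean() if diff.size else 0.0
    return sad, mse


# Toy example: two unknown pixels, each off by 0.5.
gt = np.array([[0.0, 0.5], [0.5, 1.0]])
pred = np.array([[0.0, 1.0], [0.0, 1.0]])
trimap = np.array([[0, 128], [128, 255]], dtype=np.uint8)
sad, mse = sad_mse(pred, gt, trimap)
```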

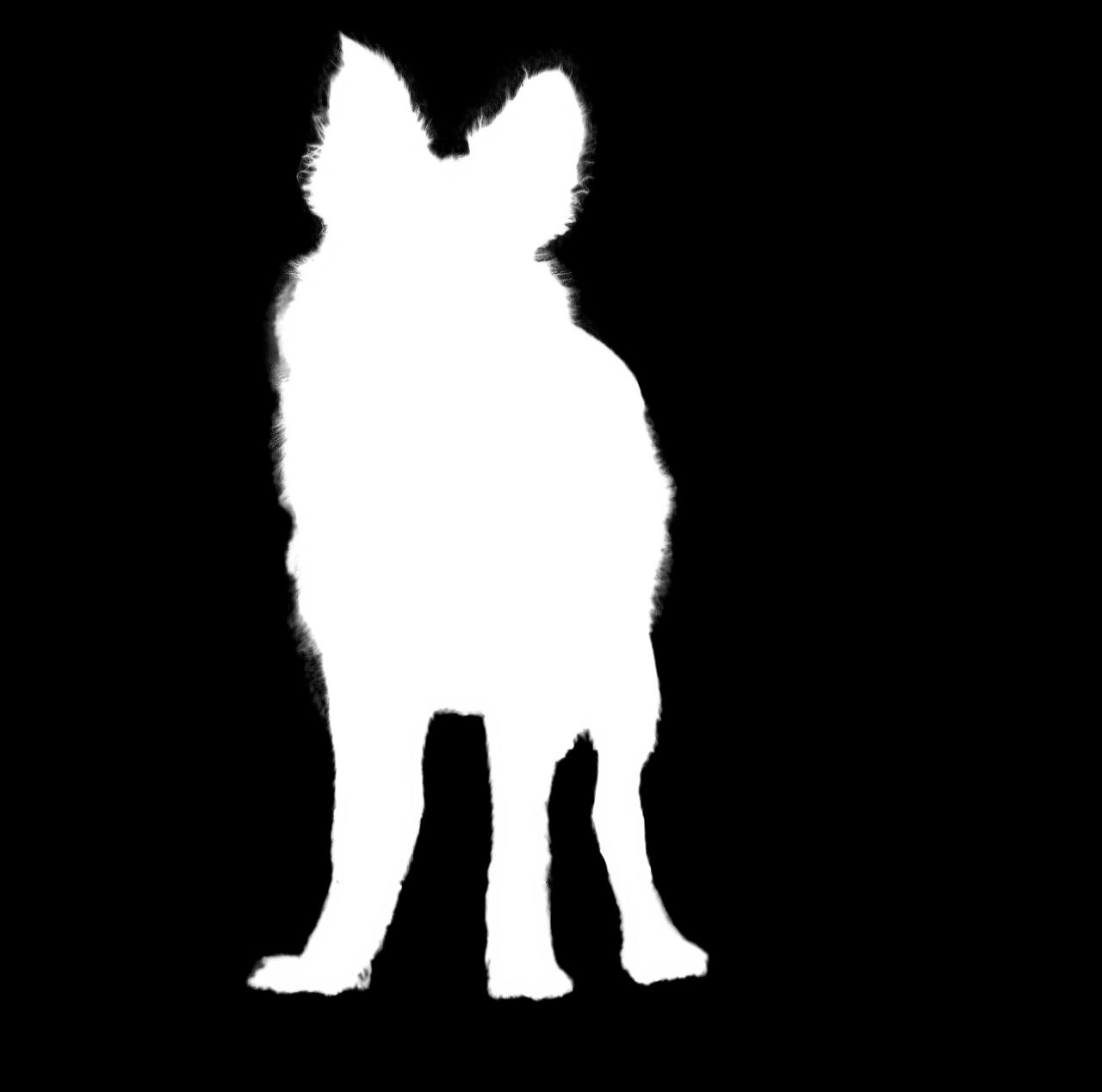

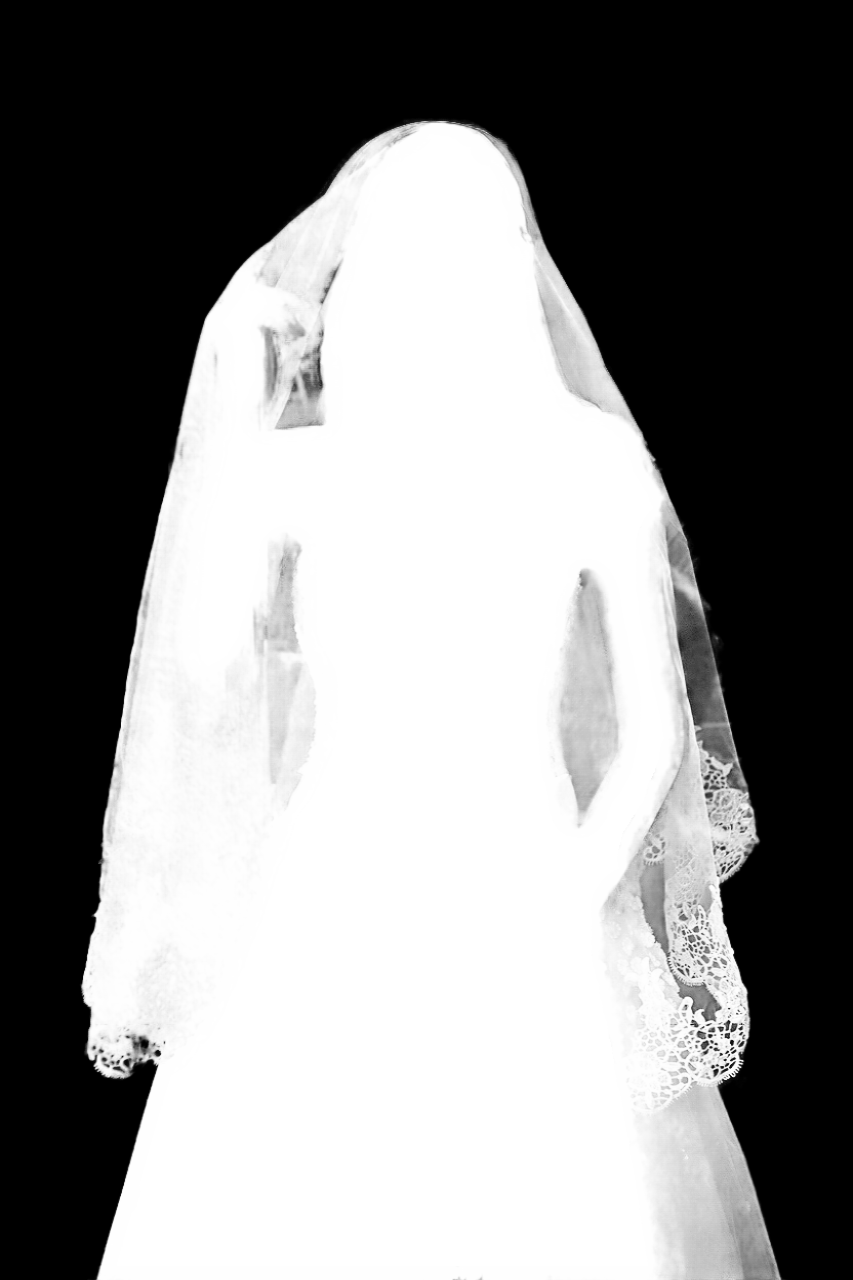

| image | trimap | alpha (predicted) |
|---|---|---|

My code is inspired by:

- [2] FBA-Matting
- [3] GCA-Matting
- pytorch-book, chapter 7: generating anime head portraits with a GAN