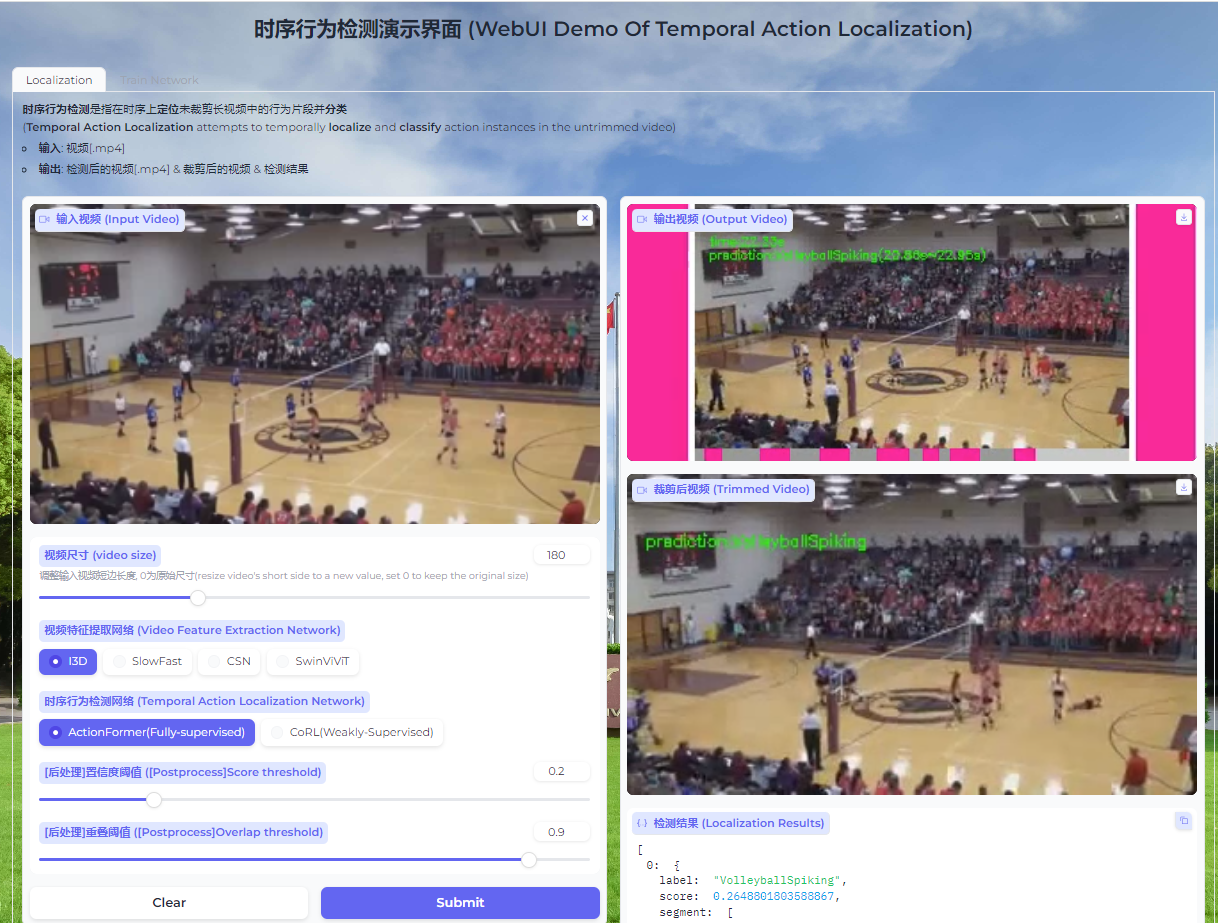

This is a Temporal Action Localization (TAL) demo built with Gradio. TAL aims to temporally localize and classify action instances in untrimmed videos. You can use this repo as a reference if you want to build an app for other video understanding tasks.

conda create --name tal_app python=3.8

conda activate tal_app

pip install torch==1.10.0+cu111 -f https://download.pytorch.org/whl/torch_stable.html

pip install torchvision==0.11.0+cu111 torchaudio==0.10.0 -f https://download.pytorch.org/whl/torch_stable.html

- Use MIM to install MMEngine and MMCV.

pip install -U openmim

mim install mmengine

<!-- mim install mmcv -->

pip install mmcv-full==1.3.18 -f https://download.openmmlab.com/mmcv/dist/cu111/torch1.10.0/index.html

- Install MMAction2 from the bundled `Video-Swin-Transformer` directory (which is based on MMAction2).

cd Video-Swin-Transformer

pip install -v -e .

cd ..

- Install denseflow (used to extract optical flow frames) by following https://github.com/open-mmlab/denseflow/blob/master/INSTALL.md

Part of the NMS (non-maximum suppression) is implemented in C++. Compile it with:

cd ./tal_alg/actionformer/libs/utils

python setup.py install --user

cd ../../../../
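For readers unfamiliar with the step above: temporal NMS discards predicted segments that overlap a higher-scoring segment too much. The pure-Python sketch below shows plain greedy, single-class NMS with an assumed IoU threshold of 0.5. It is an illustration only, not the compiled C++ code, which may implement a different variant (for example soft-NMS or per-class suppression); `temporal_iou` and `temporal_nms` are hypothetical names.

```python
from typing import List, Tuple

# Hypothetical segment format: (start_seconds, end_seconds, score)
Segment = Tuple[float, float, float]


def temporal_iou(a: Segment, b: Segment) -> float:
    """Intersection-over-union of two 1-D intervals [start, end]."""
    inter = max(0.0, min(a[1], b[1]) - max(a[0], b[0]))
    union = (a[1] - a[0]) + (b[1] - b[0]) - inter
    return inter / union if union > 0 else 0.0


def temporal_nms(segments: List[Segment], iou_threshold: float = 0.5) -> List[Segment]:
    """Greedy NMS: keep the best-scoring segment, drop segments overlapping it
    by more than iou_threshold, and repeat on what is left."""
    remaining = sorted(segments, key=lambda s: s[2], reverse=True)
    kept: List[Segment] = []
    while remaining:
        best = remaining.pop(0)
        kept.append(best)
        remaining = [s for s in remaining if temporal_iou(best, s) <= iou_threshold]
    return kept


if __name__ == "__main__":
    proposals = [(10.0, 20.0, 0.9), (11.0, 21.0, 0.8), (30.0, 40.0, 0.7)]
    # The second proposal overlaps the first with IoU 9/11 and is suppressed.
    print(temporal_nms(proposals, iou_threshold=0.5))
    # [(10.0, 20.0, 0.9), (30.0, 40.0, 0.7)]
```

This greedy loop is O(n²) in the worst case, which is one reason a compiled implementation pays off when a long video produces many candidate segments.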

pip install -r requirements.txt

Download examples and checkpoint

- Use `wget` to download the backbone checkpoint listed in `./backbone/download.py`.

- We provide an ActionFormer checkpoint trained on the THUMOS14 dataset, along with test examples. Download them and put them in `./tal_alg/actionformer/ckpt` and `./examples`, respectively.

- Download Link

To train a customised model, follow the steps below.

- Extract the RGB and optical flow frames of the videos. For RGB:

python extract_rawframes.py ./tmp/video/ ./tmp/rawframes/ --level 1 --ext mp4 --task rgb --use-opencv

- Extract features of the dataset. Some videos are too long to be loaded into memory when extraction runs in parallel. The `--max-frame` parameter identifies these overly long videos, which are then divided into pieces.

cd dataset

python extract_datasets_feat.py --gpu-id <gpu> --part <part> --total <total> --resume --max-frame 10000

- Train your temporal action localization algorithm.
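The `--part`/`--total` and `--max-frame` options of the feature-extraction step suggest that the dataset is sharded across workers and long videos are chunked. The sketch below shows one way this could work; the round-robin sharding, 0-based `part`, and `[start, end)` frame ranges are all assumptions, and `select_part` and `split_into_pieces` are hypothetical helpers, not functions from `extract_datasets_feat.py`.

```python
from typing import List, Sequence, Tuple


def select_part(videos: Sequence[str], part: int, total: int) -> List[str]:
    """Hypothetical sharding: worker `part` (0-based) of `total` takes every
    total-th video, so the shards are disjoint and cover the whole list."""
    if not 0 <= part < total:
        raise ValueError(f"part must be in [0, {total}), got {part}")
    return list(videos[part::total])


def split_into_pieces(num_frames: int, max_frame: int) -> List[Tuple[int, int]]:
    """Split frames [0, num_frames) into consecutive [start, end) ranges of at
    most max_frame frames each, so no piece has to be held in memory at once."""
    if max_frame <= 0:
        raise ValueError("max_frame must be positive")
    return [(start, min(start + max_frame, num_frames))
            for start in range(0, num_frames, max_frame)]


if __name__ == "__main__":
    videos = [f"video_{i}" for i in range(5)]
    print(select_part(videos, part=1, total=2))  # ['video_1', 'video_3']
    print(split_into_pieces(25000, max_frame=10000))
    # [(0, 10000), (10000, 20000), (20000, 25000)]
```

Round-robin assignment tends to balance workers better than contiguous blocks when video lengths are correlated with their position in the list, but the actual script may use either scheme.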

- Write an `inference.py` and import it in `processor.py`.

- Set `demo.launch(share=True)` if you want to share your app with others.

- The whole process runs on the host server, so clients (PC, Android, Apple devices, ...) do not need to install the environment.
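This README does not specify the interface between `inference.py` and `processor.py`. As one hypothetical sketch, `inference.py` could expose a helper that converts raw detections into rows for the Gradio output. It assumes the model predicts segment boundaries on the feature grid, where feature `t` covers frames `[t*stride, t*stride + window)` and is placed at the window centre; `feature_index_to_seconds`, `predictions_to_rows`, and the `min_score` cut-off are illustrative names and values, not part of this repo.

```python
from typing import Dict, List, Sequence


def feature_index_to_seconds(t: float, fps: float, stride: int, window: int) -> float:
    """Map a position on the feature grid to seconds (assumed window-centre convention)."""
    return (t * stride + 0.5 * window) / fps


def predictions_to_rows(segments: Sequence[Sequence[float]], labels: Sequence[int],
                        scores: Sequence[float], class_names: Sequence[str],
                        fps: float, stride: int, window: int,
                        min_score: float = 0.1) -> List[Dict[str, object]]:
    """Turn (start, end) feature-grid segments into display rows, dropping low scores."""
    rows: List[Dict[str, object]] = []
    for (start, end), label, score in zip(segments, labels, scores):
        if score < min_score:
            continue
        rows.append({
            "action": class_names[label],
            "start (s)": round(feature_index_to_seconds(start, fps, stride, window), 2),
            "end (s)": round(feature_index_to_seconds(end, fps, stride, window), 2),
            "score": round(score, 3),
        })
    rows.sort(key=lambda r: r["start (s)"])  # chronological order for display
    return rows


if __name__ == "__main__":
    rows = predictions_to_rows(
        segments=[(10, 40), (0, 5)], labels=[1, 0], scores=[0.92, 0.05],
        class_names=["BaseballPitch", "HighJump"], fps=30.0, stride=4, window=16)
    print(rows)
    # [{'action': 'HighJump', 'start (s)': 1.6, 'end (s)': 5.6, 'score': 0.92}]
```

Keeping this conversion in `inference.py` would let `processor.py` stay a thin layer that only calls the model and passes the rows to the UI.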

python main.py

- If no public (external access) URL is generated, place `frpc_linux_amd64_v0.2` in `anaconda3/envs/tal_app/lib/python3.8/site-packages/gradio`.

- If ffmpeg is not installed:

sudo apt-get install ffmpeg

We referenced the repos below for the code.