Training of Convolutional Networks on Multiple Heterogeneous Datasets for Street Scene Semantic Segmentation (IV 2018)

Code for reproducing the results of the IV 2018 paper "Training of Convolutional Networks on Multiple Heterogeneous Datasets for Street Scene Semantic Segmentation".

Panagiotis Meletis and Gijs Dubbelman (2018). Training of convolutional networks on multiple heterogeneous datasets for street scene semantic segmentation. The 29th IEEE Intelligent Vehicles Symposium (IV 2018), full paper on arXiv.

If you find our work useful for your research, please cite the following paper:

@inproceedings{heterogeneous2018,
  title={Training of Convolutional Networks on Multiple Heterogeneous Datasets for Street Scene Semantic Segmentation},
  author={Panagiotis Meletis and Gijs Dubbelman},
  booktitle={2018 IEEE Intelligent Vehicles Symposium (IV)},
  year={2018}
}


The discriminative power and generalization capabilities of convolutional networks are vital for deploying semantic segmentation systems in the wild. These properties can be obtained by training a single net on multiple datasets.

Combined training on multiple datasets is hampered by several factors, mainly:

- different level-of-detail of labels (e.g. person label in dataset A vs pedestrian and rider labels in dataset B)

- different annotation types (e.g. per-pixel annotations in dataset A vs bounding box annotations in dataset B)

- class imbalances between datasets (e.g. class person has 10^3 annotated pixels in dataset A and 10^6 pixels in dataset B)
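The first mismatch can be made concrete with a small label-mapping sketch. This is only an illustration under stated assumptions, not code from this repository: the class names, the `FINE_TO_COARSE` table, and the `to_coarse` helper are hypothetical. It shows how fine labels from one dataset (pedestrian, rider) could be collapsed to the coarser label of another (person), so that both datasets can supervise a classifier at the shared level of detail.

```python
# Hypothetical mapping from dataset B's fine labels to dataset A's coarser labels.
# The names are illustrative and not taken from any real dataset definition.
FINE_TO_COARSE = {
    "pedestrian": "person",
    "rider": "person",
    "road": "road",
}


def to_coarse(label_map):
    """Map a 2-D grid of fine label names to coarse label names.

    Labels without an entry in FINE_TO_COARSE become "unlabeled".
    """
    return [
        [FINE_TO_COARSE.get(label, "unlabeled") for label in row]
        for row in label_map
    ]


# A tiny 2x2 "annotation" from the fine-grained dataset.
fine = [
    ["pedestrian", "road"],
    ["rider", "sky"],
]
coarse = to_coarse(fine)
print(coarse)
```

After this mapping, pedestrian and rider pixels are both usable as person examples, while the fine labels remain available for training a more specific classifier.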

We propose constructing a hierarchy of classifiers to address the above challenges. Hierarchical Semantic Segmentation is based on ResNet50. Its main novelty compared to other semantic segmentation systems is that a single model can handle a variety of datasets with disjoint sets of semantic classes. Our system also runs in real time (18 fps at 512x1024 resolution). Figures 1-3 below provide sample results from three different datasets.
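As a rough illustration of how decisions from several levels of a classifier hierarchy could be combined for one pixel, consider the sketch below. It is an assumption-laden toy, not the authors' implementation: the scores are hand-written rather than produced by a network, and the class names, the `argmax` helper, and `predict_pixel` are hypothetical. The root classifier picks a class, and if that class has its own subclassifier, the decision is refined one level down.

```python
def argmax(scores):
    """Return the class name with the highest score."""
    return max(scores, key=scores.get)


def predict_pixel(root_scores, sub_scores):
    """Resolve one pixel's label by walking down a classifier hierarchy.

    root_scores: dict mapping root-level class name -> score.
    sub_scores:  dict mapping a superclass name -> dict of its subclass scores.
    Assumes subclass names differ from their superclass names, so the walk ends.
    """
    label = argmax(root_scores)
    # Refine while the current decision has a more specific subclassifier.
    while label in sub_scores:
        label = argmax(sub_scores[label])
    return label


# Hand-written scores for two pixels (illustrative values only).
sub = {
    "traffic sign": {"speed limit": 0.6, "stop": 0.4},
    "person": {"pedestrian": 0.9, "rider": 0.1},
}
sign_pixel = predict_pixel({"road": 0.1, "traffic sign": 0.7, "person": 0.2}, sub)
road_pixel = predict_pixel({"road": 0.8, "traffic sign": 0.1, "person": 0.1}, sub)
print(sign_pixel, road_pixel)
```

In this arrangement each subclassifier only needs supervision from the datasets that annotate its subclasses, which is one way a single model could cover label sets that differ between datasets.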


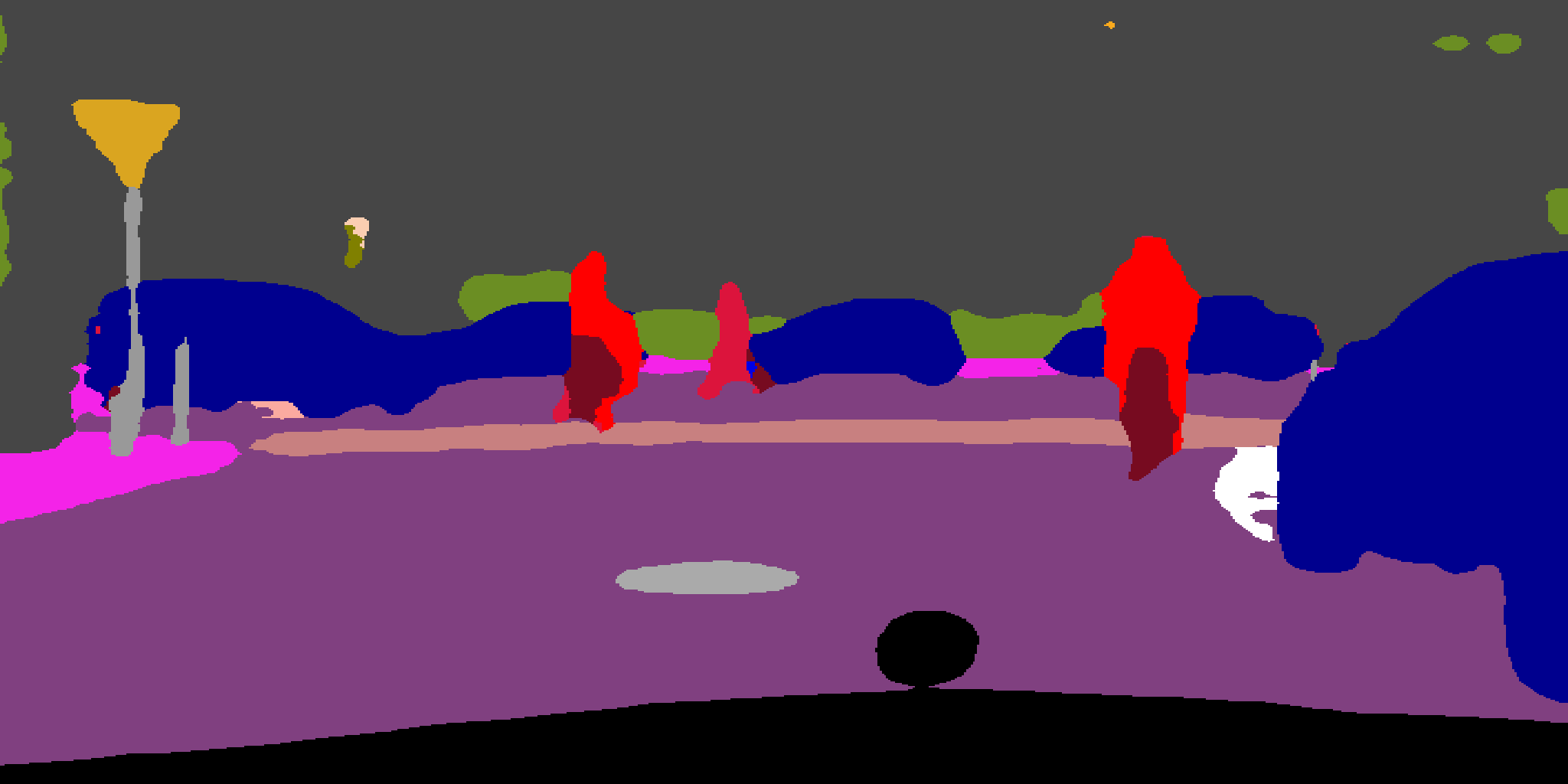

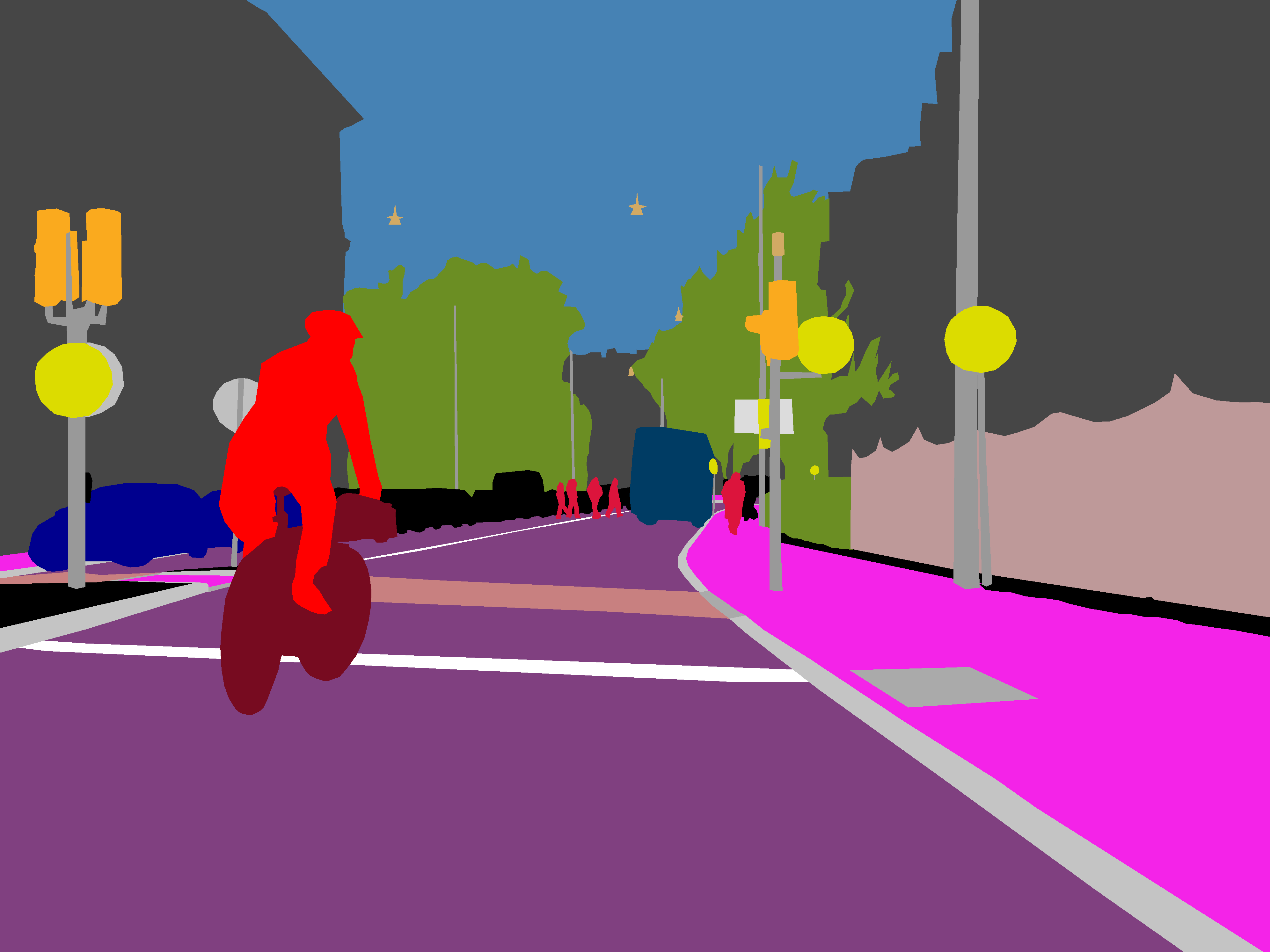

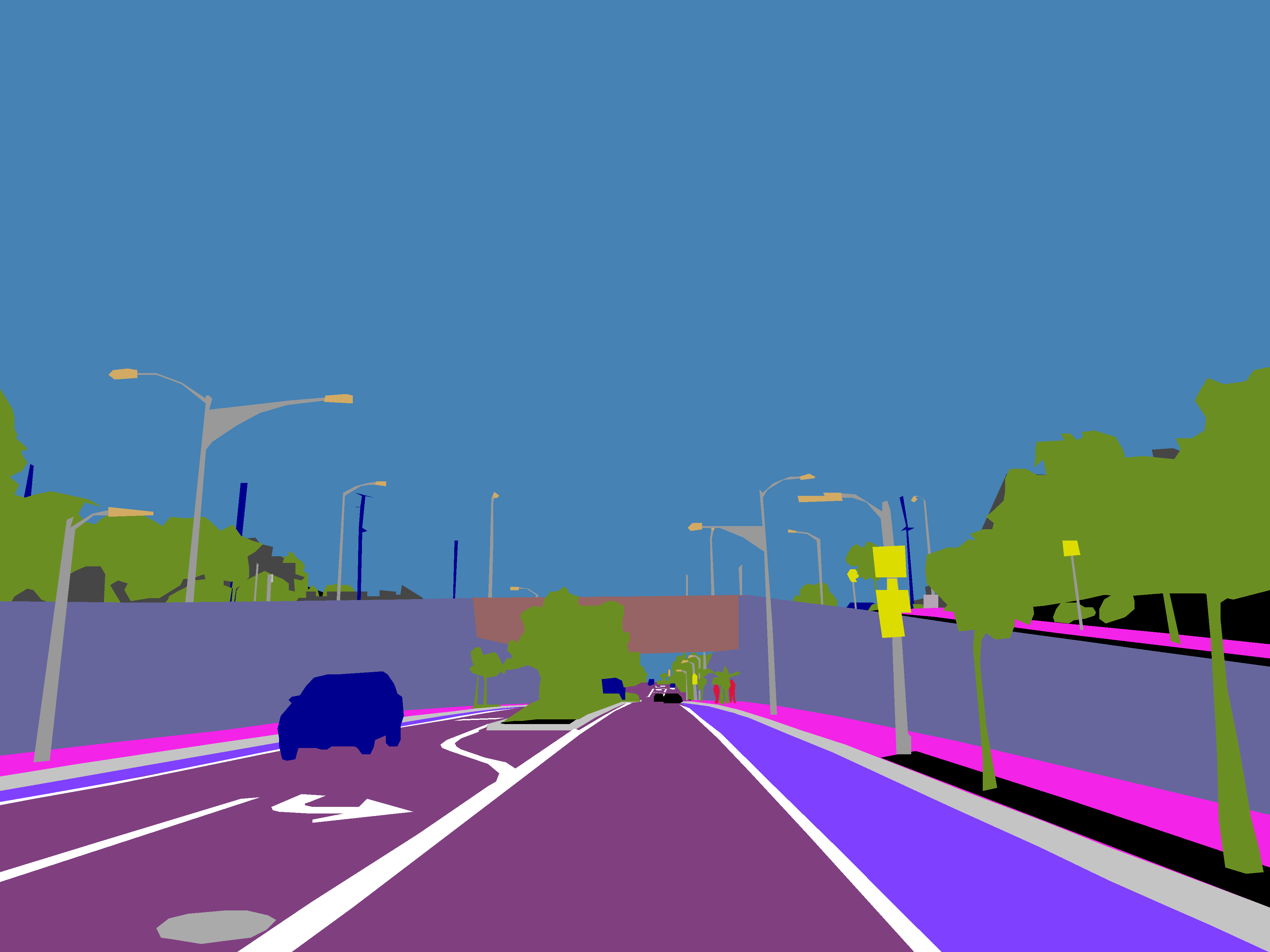

Figure 1. Cityscapes validation split image examples - top: input images, center: predictions, bottom: ground truth. The network predictions include decisions from L1-L3 levels of the hierarchy. Note that the ground truth includes only one traffic sign superclass (yellow) and no road attribute markings.


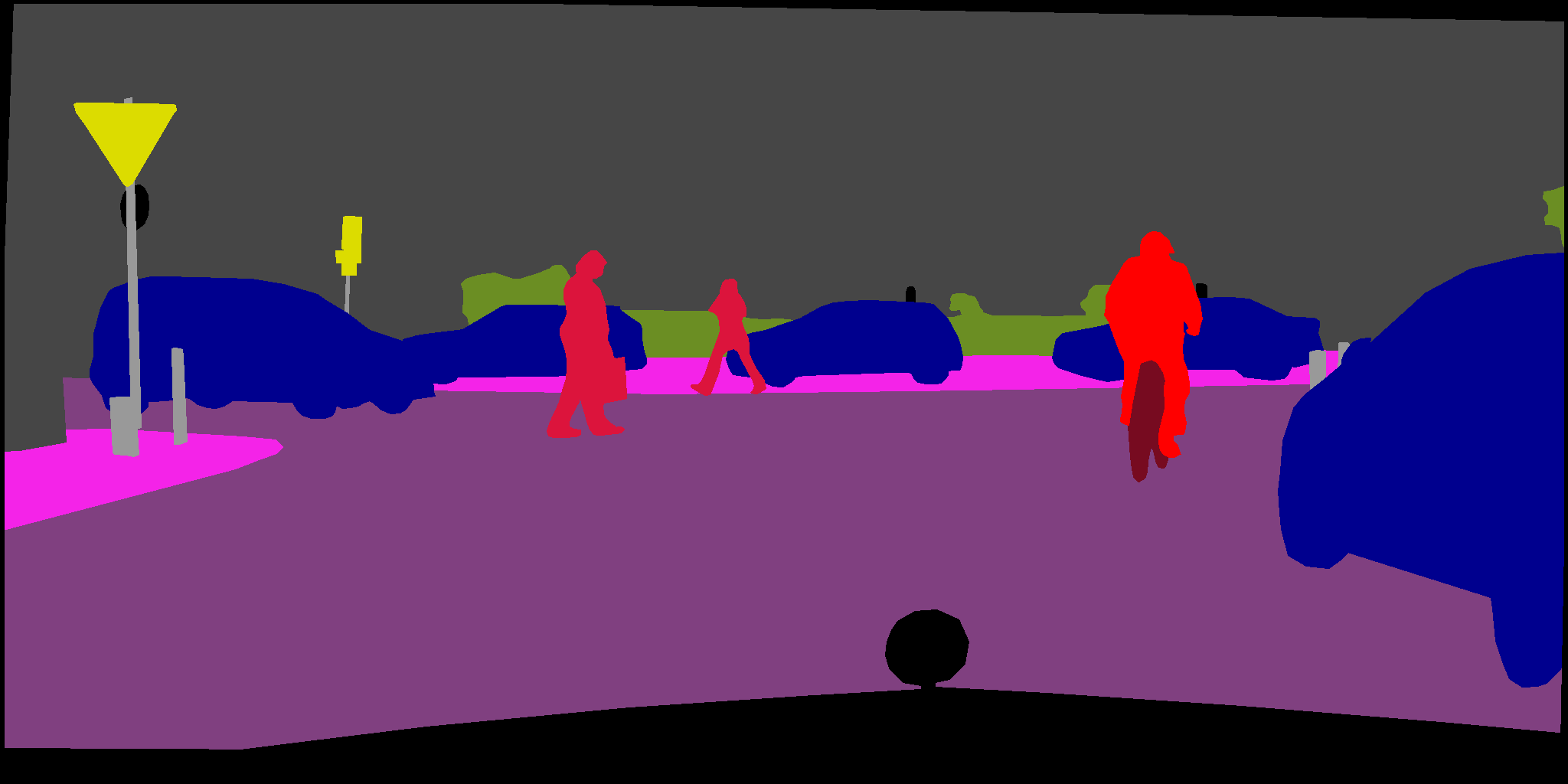

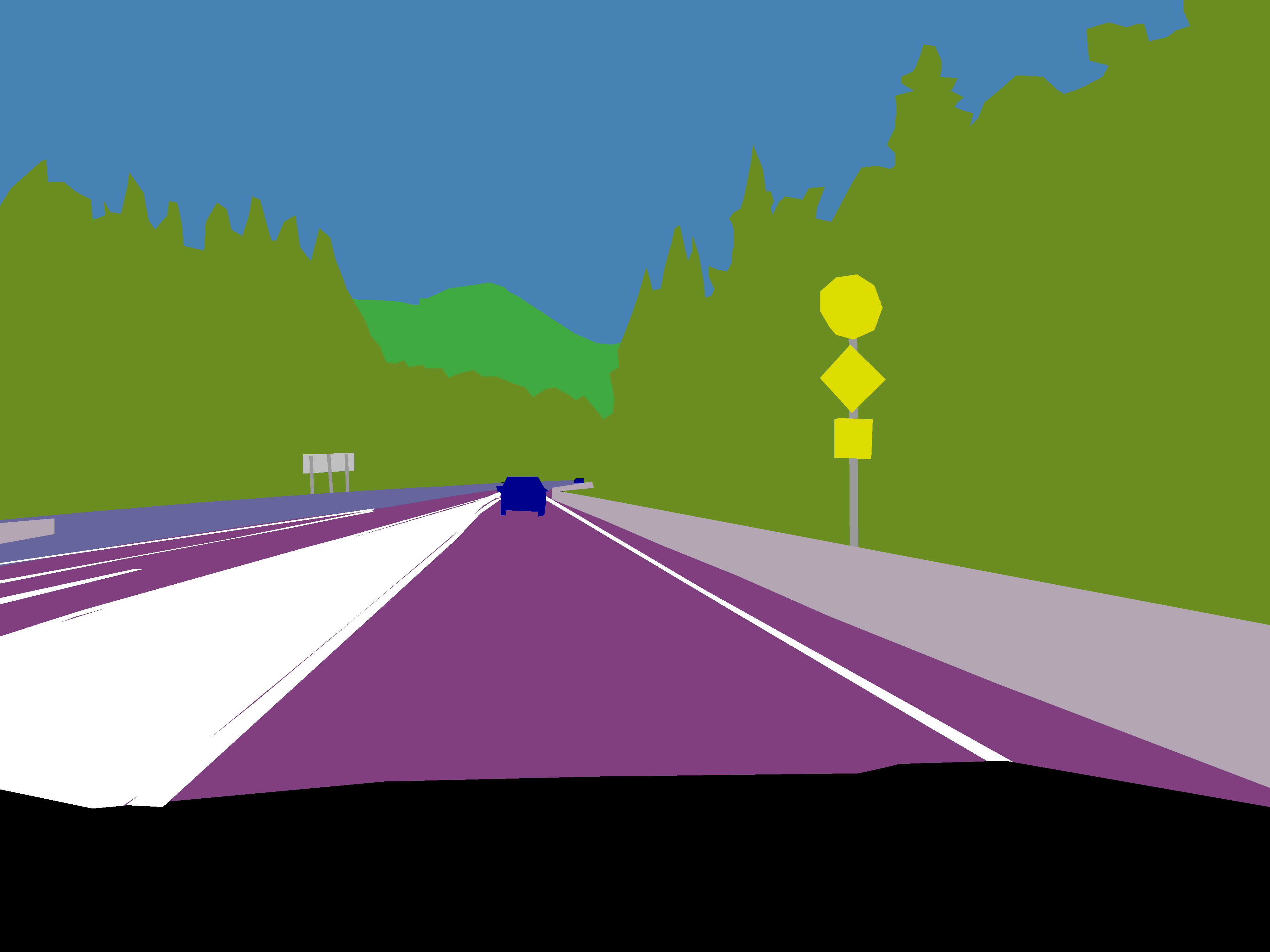

Figure 2. Mapillary Vistas validation split image examples - top: input images, center: predictions, bottom: ground truth. The network predictions include decisions from L1-L3 levels of the hierarchy. Note that the ground truth does not include traffic sign subclasses.


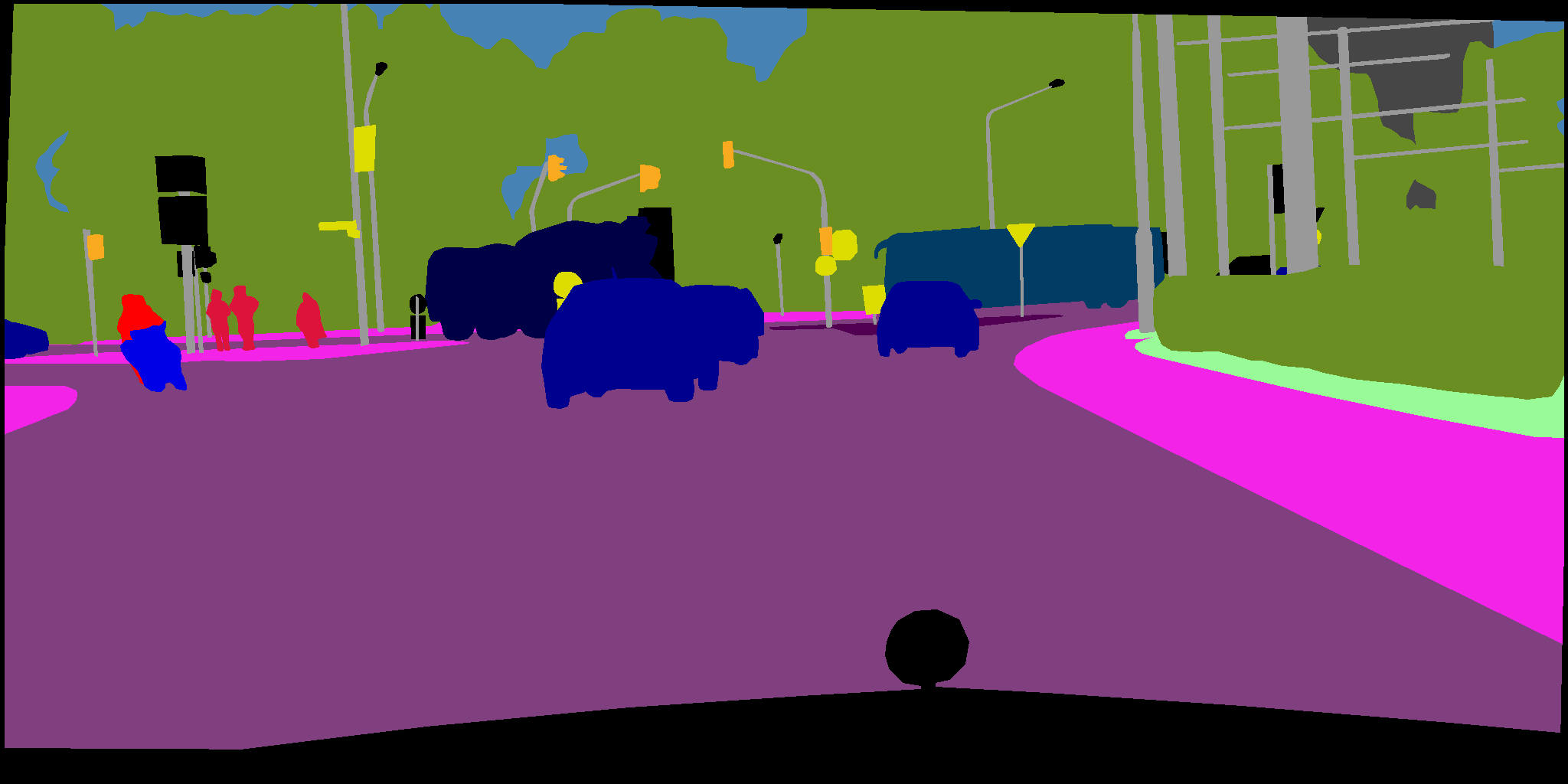

Figure 3. TSDB test split image examples - top: input images, center: predictions, bottom: ground truth. The network predictions include decisions from L1-L3 levels of the hierarchy. Note that the ground truth includes only traffic sign bounding boxes, since the remaining pixels are unlabeled.