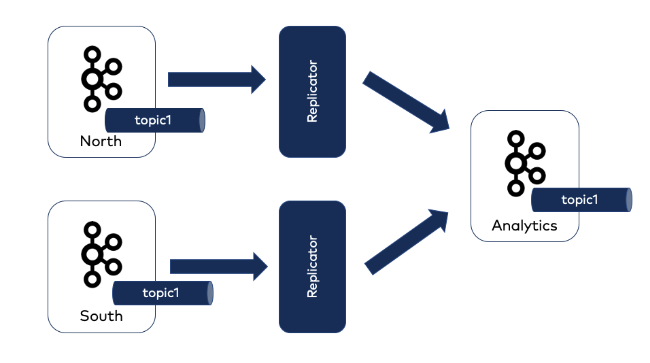

We want to run Replicator on several local Kafka clusters and replicate their data into a central cluster on Confluent Cloud (CC). This repository shows the aggregation for a single local cluster, but the same configuration was successfully tested with multiple local Kafka clusters.

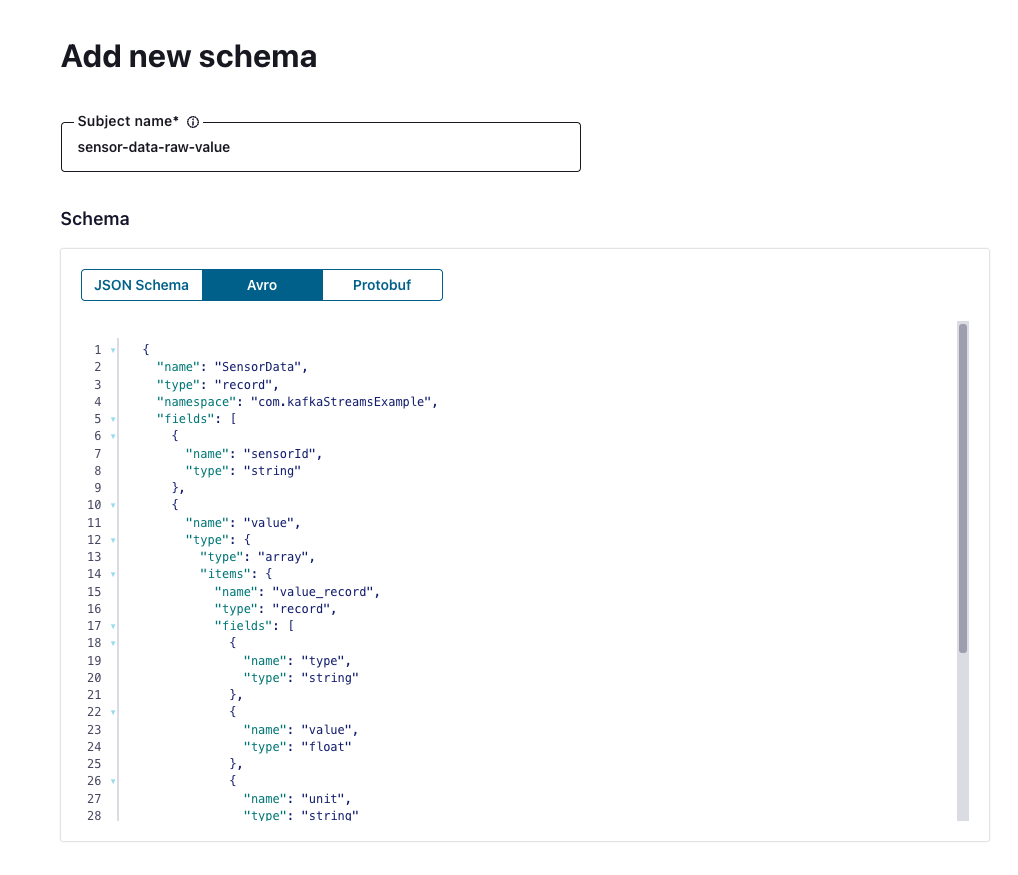

Replicator runs as an executable, and the data is produced in Avro format.

We first create the Kafka topic and register the schema in CC.
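The source does not show how the topic and schema are created in CC. As a minimal sketch, assuming the Schema Registry REST API is used for the schema, the script below builds the registration payload and posts it only when `SR_URL` is set. The file names, the `SensorData` record and its fields, the environment variables `SR_URL`, `SR_API_KEY` and `SR_API_SECRET`, and the subject `sensor-data-raw-value` (which assumes the default topic-name subject strategy) are all assumptions, not taken from this repository.

```shell
set -eu

# Hypothetical Avro schema; the real one is defined by the producer project.
cat > sensor-data.avsc <<'EOF'
{"type":"record","name":"SensorData","fields":[{"name":"sensorId","type":"string"},{"name":"value","type":"double"}]}
EOF

# Schema Registry expects the schema as an escaped JSON string
# inside a "schema" field, so escape backslashes and double quotes.
escaped=$(tr -d '\n' < sensor-data.avsc | sed 's/\\/\\\\/g; s/"/\\"/g')
printf '{"schema": "%s"}\n' "$escaped" > schema-payload.json

# POST the payload to the subject's versions endpoint.
register_schema() {
  curl -sS -u "$SR_API_KEY:$SR_API_SECRET" \
    -H "Content-Type: application/vnd.schemaregistry.v1+json" \
    -X POST --data @schema-payload.json \
    "$SR_URL/subjects/sensor-data-raw-value/versions"
}

# Only contact Schema Registry when an endpoint was provided.
if [ -n "${SR_URL:-}" ]; then
  register_schema
else
  echo "SR_URL not set; payload written to schema-payload.json"
fi
```

The topic itself could be created in the CC web console or, assuming the Confluent CLI is installed and logged in, with `confluent kafka topic create sensor-data-raw`.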

We start a local Kafka cluster, a Schema Registry (SR), and Replicator as an executable:

docker-compose up -d

We produce data to the local Kafka cluster with an Avro schema. The schema is registered automatically in the local SR.

./gradlew run

We verify that the data was produced with a schema on the local cluster:

kafka-avro-console-consumer --bootstrap-server localhost:9092 --topic sensor-data-raw --property schema.registry.url="http://localhost:8081"

We verify that the data was also replicated with a schema to CC:

kafka-avro-console-consumer --bootstrap-server <CC Bootstrap Server> --consumer.config client.properties --topic sensor-data-raw --property schema.registry.url="<CC SR>" --property schema.registry.basic.auth.user.info="<API KEY: API SECRET>" --property basic.auth.credentials.source="USER_INFO"

- Only configure the `--cluster.id` for each Replicator, no `client.id` or `group.id`, when running Replicator as an executable.
- The internal topics (config, offset, status) will be created on the destination cluster. If you want the internal topics created on the source cluster, you need to run Replicator as a connector.
- Do not sync the topic configuration.
- We connect the `src.value.converter` with the local SR, whereas the `value.converter` is connected to the CC SR.
- The service account (SA) used has full permissions. To restrict it following least-privilege guidance, see the corresponding ACLs documentation.

For more information, see `replicator.properties`.
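The repository's `replicator.properties` remains the authoritative configuration. As a rough illustration of the notes above, the following sketch writes a hypothetical file, `replicator-sketch.properties`, showing how the converter and topic-config settings might look. The file name, the `topic.whitelist` entry, and the converter class are assumptions; the placeholders `<CC SR>` and `<API KEY: API SECRET>` are kept as in the commands above and must be filled in.

```shell
set -eu

# Written to a hypothetical file name so the repository's real
# replicator.properties is not overwritten.
cat > replicator-sketch.properties <<'EOF'
# Topic to replicate (name taken from the consumer commands above).
topic.whitelist=sensor-data-raw

# Do not sync topic configuration from source to destination.
topic.config.sync=false

# Source side: read Avro using the local Schema Registry.
src.value.converter=io.confluent.connect.avro.AvroConverter
src.value.converter.schema.registry.url=http://localhost:8081

# Destination side: write Avro using the CC Schema Registry.
value.converter=io.confluent.connect.avro.AvroConverter
value.converter.schema.registry.url=<CC SR>
value.converter.basic.auth.credentials.source=USER_INFO
value.converter.schema.registry.basic.auth.user.info=<API KEY: API SECRET>
EOF

echo "wrote replicator-sketch.properties"
```

When Replicator runs as an executable, such a file would typically be passed with `--replication.config`, alongside `--cluster.id`, `--consumer.config`, and `--producer.config`; how the Docker setup in this repository wires these together is not shown here.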