Robust Principles: Architectural Design Principles for Adversarially Robust CNNs. ShengYun Peng, Weilin Xu, Cory Cornelius, Matthew Hull, Kevin Li, Rahul Duggal, Mansi Phute, Jason Martin, Duen Horng Chau. British Machine Vision Conference (BMVC), 2023.

📺 Video Presentation 📖 Research Paper 🚀 Project Page 🪧 Poster


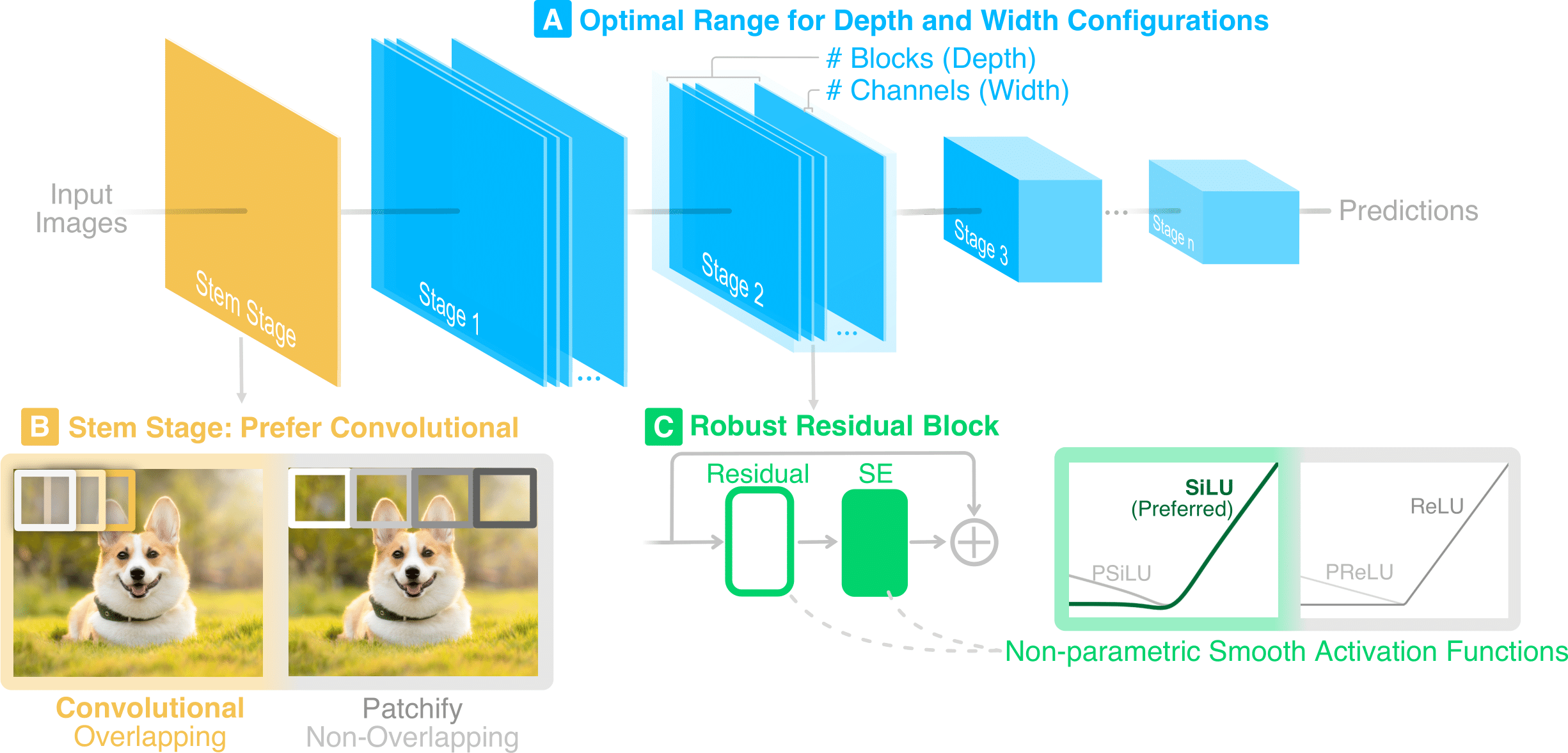

We aim to reconcile existing works' diverging conclusions on how architectural components affect the adversarial robustness of CNNs. To this end, we synthesize a suite of three generalizable robust architectural design principles: (a) an optimal range for depth and width configurations, (b) preferring a convolutional stem over a patchify stem, and (c) a robust residual block design that adopts squeeze-and-excitation (SE) blocks and non-parametric smooth activation functions. Through extensive experiments across a wide spectrum of dataset scales, adversarial training methods, model parameter counts, and network design spaces, our principles consistently and markedly improve AutoAttack accuracy: by 1-3 percentage points (pp) on CIFAR-10 and CIFAR-100, and by 4-9 pp on ImageNet.
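Principle (c) combines two standard building blocks. The stdlib-only sketch below illustrates the standard squeeze-and-excitation operation (global average pooling, a bottleneck MLP, a sigmoid gate, channel-wise rescaling) and SiLU as one example of a non-parametric smooth activation. It is a toy under our own assumptions: feature maps are nested Python lists, the function names and weights are hypothetical, and which activation RaResNet uses inside its SE blocks is not stated here. It is not the repository's implementation.

```python
import math


def sigmoid(x: float) -> float:
    """Numerically stable logistic function (avoids overflow for large |x|)."""
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    e = math.exp(x)
    return e / (1.0 + e)


def relu(x: float) -> float:
    """Piecewise-linear baseline; its derivative jumps at 0."""
    return max(0.0, x)


def silu(x: float) -> float:
    """SiLU (Swish): x * sigmoid(x). Smooth everywhere, no learnable parameters."""
    return x * sigmoid(x)


def linear(vec, weights, bias):
    """Fully connected layer: y[j] = sum_i weights[j][i] * vec[i] + bias[j]."""
    return [sum(w * v for w, v in zip(row, vec)) + b for row, b in zip(weights, bias)]


def squeeze_excite(fmap, w1, b1, w2, b2, act=relu):
    """Squeeze-and-excitation on C channels, each an H x W nested list.

    w1 has shape (C // r) x C and w2 has shape C x (C // r) for reduction ratio r.
    """
    # Squeeze: global average pooling turns each channel into one number.
    z = [sum(map(sum, ch)) / (len(ch) * len(ch[0])) for ch in fmap]
    # Excitation: bottleneck MLP, then a sigmoid gate in (0, 1) per channel.
    hidden = [act(v) for v in linear(z, w1, b1)]
    gates = [sigmoid(v) for v in linear(hidden, w2, b2)]
    # Scale: multiply every spatial position of channel c by its gate.
    return [[[g * v for v in row] for row in ch] for g, ch in zip(gates, fmap)]


# Toy example: C = 2 channels of size 2 x 2, reduction ratio r = 2 (hypothetical weights).
fmap = [[[1.0, 2.0], [3.0, 4.0]], [[0.0, 0.0], [0.0, 8.0]]]
w1, b1 = [[0.5, -0.5]], [0.0]
w2, b2 = [[1.0], [-1.0]], [0.0, 0.0]
out_relu = squeeze_excite(fmap, w1, b1, w2, b2, act=relu)
out_silu = squeeze_excite(fmap, w1, b1, w2, b2, act=silu)
```

Swapping `act` is the only change needed to compare ReLU with a smooth activation inside the block; the gate itself stays a sigmoid in both cases.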

Aug. 2023 - Paper accepted by BMVC'23

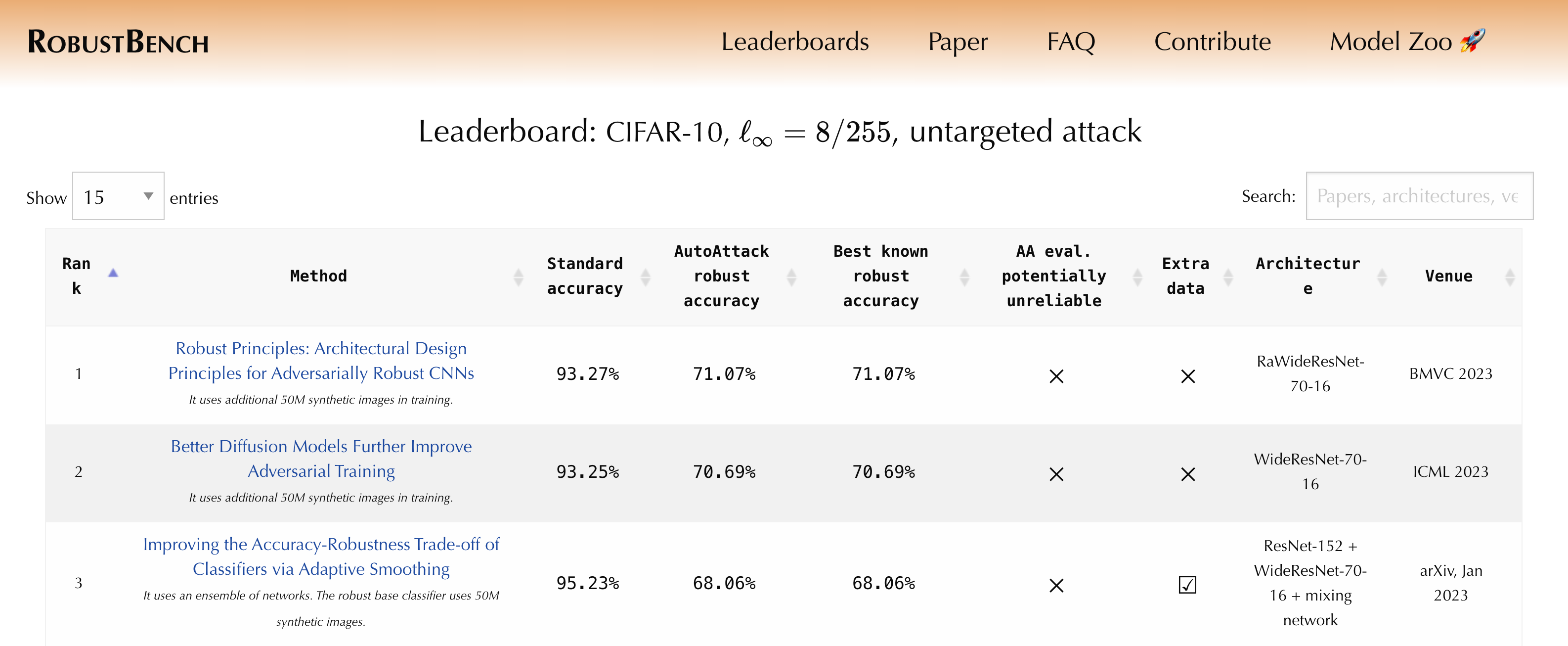

Sep. 2023 - 🎉 We reached first place on the RobustBench CIFAR-10 $\ell_\infty = 8/255$ leaderboard.

- Prepare ImageNet following installation steps 3&4. Skip step 4 if you don't plan to run Fast adversarial training (AT).
- Set up the Python environment:

  ```bash
  make .venv_done
  ```

- (Optional) Register a Weights & Biases account if you want to visualize training curves.
- Update `BASE` to the ImageNet root directory and `WANDB_ACCOUNT` to your account name, then validate the setup:

  ```bash
  make check_dir
  ```

To evaluate the off-the-shelf torchvision ResNet-50 under PGD:

```bash
make experiments/Torch_ResNet50/.done_test_pgd
```

To test other off-the-shelf models in torchvision, add the model name in `MODEL.mk` and create a new make target in `Makefile`.

```bash
make experiments/RaResNet50/.done_test_pgd
```

```bash
# Training
make experiments/RaResNet50/.done_train

# Evaluation on PGD
make experiments/RaResNet50/.done_test_pgd

# Evaluation on AutoAttack
make experiments/RaResNet50/.done_test_aa

# Pretrained models evaluated on AutoAttack
make experiments/RaResNet50/.done_test_pretrained
```

Results on ImageNet:

| Architecture | #Params | Clean (%) | AA (%) | PGD100-2 (%) | PGD100-4 (%) | PGD100-8 (%) |
|---|---|---|---|---|---|---|
| RaResNet-50 | 26M | 70.17 | 44.14 | 60.06 | 47.77 | 21.77 |
| RaResNet-101 | 46M | 71.88 | 46.26 | 61.89 | 49.30 | 23.01 |
| RaWRN-101-2 | 104M | 73.44 | 48.94 | 63.49 | 51.03 | 25.31 |
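Under a PGD evaluation, a test point counts as robust only if the model still classifies it correctly after a fixed number of projected gradient ascent steps inside the $\ell_\infty$ ball of radius ε. We read the column labels PGD100-2/4/8 as 100 steps at ε = 2/255, 4/255 and 8/255, and PGD20 as 20 steps; that reading is our assumption. The sketch below shows the technique on a toy logistic model with an analytic input gradient so that it runs with the standard library only. The model, the step size `alpha`, and all function names are hypothetical, and it omits the random start many PGD implementations use. It is not the repository's attack code.

```python
import math


def sigmoid(z: float) -> float:
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def sign(v: float) -> int:
    return (v > 0) - (v < 0)


def input_grad(x, y, w, b):
    """Gradient of binary cross-entropy for p = sigmoid(w.x + b) w.r.t. x: (p - y) * w."""
    p = sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)
    return [(p - y) * wi for wi in w]


def pgd_linf(x, y, w, b, eps, alpha, steps):
    """Untargeted L-infinity PGD (no random start) against the toy logistic model."""
    x_adv = list(x)
    for _ in range(steps):
        g = input_grad(x_adv, y, w, b)
        # Ascent step along the gradient sign (steepest ascent under the L-inf norm).
        x_adv = [xa + alpha * sign(gi) for xa, gi in zip(x_adv, g)]
        # Project onto the eps-ball around the clean input, then onto pixel range [0, 1].
        x_adv = [min(max(xa, xi - eps), xi + eps) for xa, xi in zip(x_adv, x)]
        x_adv = [min(max(xa, 0.0), 1.0) for xa in x_adv]
    return x_adv


def predict(x, w, b):
    return int(sum(wi * xi for wi, xi in zip(w, x)) + b > 0)


# Toy 2-pixel input with label 1, attacked with eps = 8/255 and 20 steps.
x, y = [0.52, 0.5], 1
w, b = [1.0, -1.0], 0.0
x_adv = pgd_linf(x, y, w, b, eps=8 / 255, alpha=2 / 255, steps=20)
print(predict(x, w, b), predict(x_adv, w, b))  # prints: 1 0
```

The clean input is classified correctly, but a perturbation of at most 8/255 per pixel flips the prediction, so this point would not count toward PGD accuracy.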

| Method | Model | CIFAR-10 Clean (%) | CIFAR-10 AA (%) | CIFAR-10 PGD20 (%) | CIFAR-100 Clean (%) | CIFAR-100 AA (%) | CIFAR-100 PGD20 (%) |
|---|---|---|---|---|---|---|---|
| Diff. 1M | RaWRN-70-16 | 92.16 | 66.33 | 70.37 | 70.25 | 38.73 | 42.61 |
| Diff. 50M | RaWRN-70-16 | 93.27 | 71.09 | 75.29 | - | - | - |
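The AA columns report AutoAttack accuracy. AutoAttack evaluates each example against an ensemble of attacks (in its standard version APGD-CE, APGD-T, FAB-T and Square) and counts an example as robust only if it is classified correctly on the clean input and survives every attack. The sketch below shows only that per-example worst-case bookkeeping on made-up boolean outcomes; the function name is hypothetical, it runs no attack, and the numbers are not results from the paper.

```python
def worst_case_accuracy(clean_correct, attack_correct):
    """Percentage of examples correct on the clean input AND under every attack.

    clean_correct: list[bool], one entry per test example.
    attack_correct: dict mapping attack name -> list[bool] (still correct after that attack).
    """
    n = len(clean_correct)
    robust = list(clean_correct)
    for name, correct in attack_correct.items():
        if len(correct) != n:
            raise ValueError(f"{name}: expected {n} entries, got {len(correct)}")
        # An example stays robust only if it has survived every attack so far.
        robust = [r and c for r, c in zip(robust, correct)]
    return 100.0 * sum(robust) / n


# Toy outcomes for five test images (illustrative only).
clean = [True, True, True, True, False]
attacks = {
    "APGD-CE": [True, True, False, True, False],
    "APGD-T": [True, False, True, True, False],
}
for name, correct in attacks.items():
    print(name, 100.0 * sum(correct) / len(correct))  # 60.0 for each attack alone
print("worst case", worst_case_accuracy(clean, attacks))  # 40.0
```

Because an example must survive all attacks, the ensemble accuracy can never exceed the accuracy under any single attack in it.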

```bibtex
@article{peng2023robust,
  title={Robust Principles: Architectural Design Principles for Adversarially Robust CNNs},
  author={Peng, ShengYun and Xu, Weilin and Cornelius, Cory and Hull, Matthew and Li, Kevin and Duggal, Rahul and Phute, Mansi and Martin, Jason and Chau, Duen Horng},
  journal={arXiv preprint arXiv:2308.16258},
  year={2023}
}

@misc{peng2023robarch,
  title={RobArch: Designing Robust Architectures against Adversarial Attacks},
  author={ShengYun Peng and Weilin Xu and Cory Cornelius and Kevin Li and Rahul Duggal and Duen Horng Chau and Jason Martin},
  year={2023},
  eprint={2301.03110},
  archivePrefix={arXiv},
  primaryClass={cs.CV}
}
```

If you have any questions, feel free to open an issue or contact Anthony Peng (CS PhD student at Georgia Tech).