Deep Deformable 3D Caricature with Learned Shape Control (DD3C)

[Paper] [Project page] [ArXiv] [Additional result gallery] [Video]

📝 This repository contains the official PyTorch implementation of the following paper:

Deep Deformable 3D Caricature with Learned Shape Control

Yucheol Jung, Wonjong Jang, Soongjin Kim, Jiaolong Yang, Xin Tong, Seungyong Lee, SIGGRAPH 2022

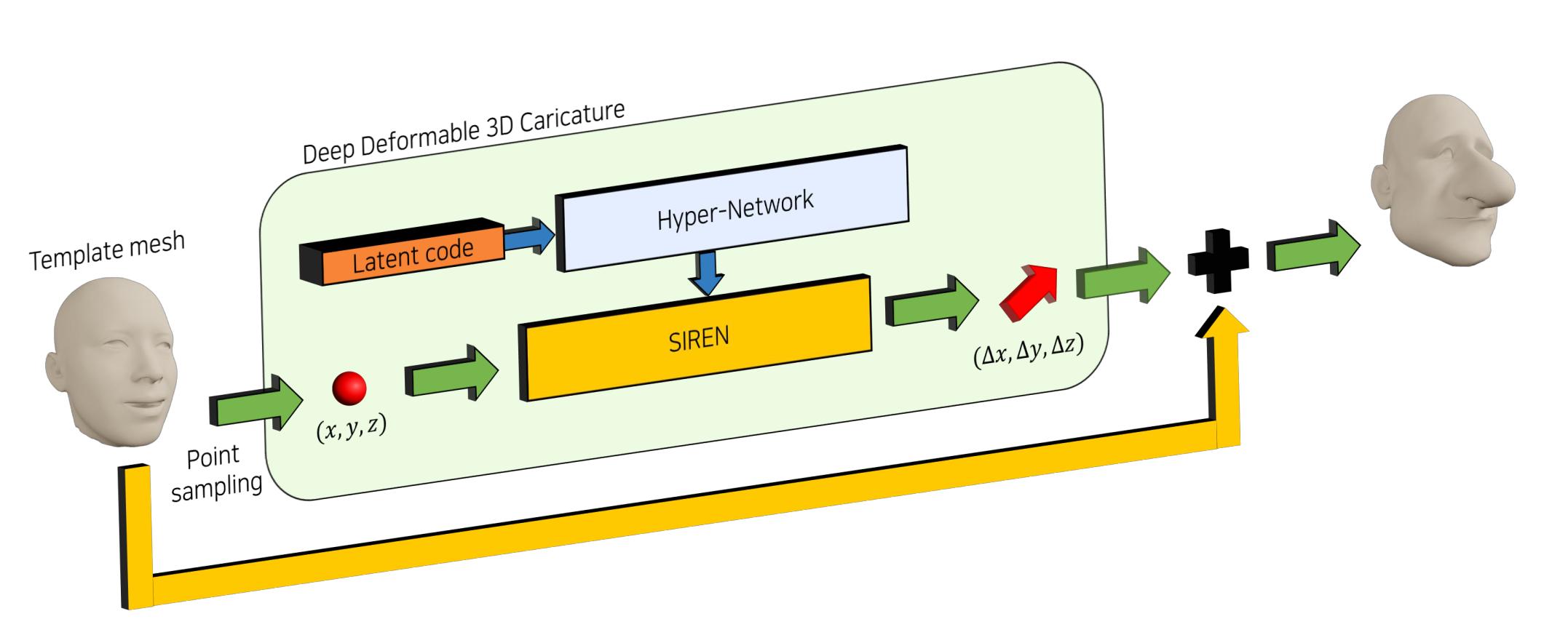

Overview

Explanation

Environment

The code was tested on an Arch Linux desktop with an NVIDIA TITAN Xp GPU.

Setup

Clone this repository with the --recursive option.

git clone --recursive <URL>

If you have already cloned it without --recursive, initialize the submodules:

git submodule update --init

Use the provided Docker image in docker/Dockerfile:

cd docker

docker build -t dd3c .

cd ..

docker run --gpus all -it --rm -v $PWD:/workspace dd3c /bin/bash

cd /workspace

# Run your code

Alternatively, build an equivalent environment yourself (see the Dockerfile and requirements.txt).

Executables

Download pre-trained models

You are required to download the models before running the executables. Select one of the mirrors to download the models. If one mirror does not work, try the other ones.

Extract the tar.zst archive and place the logs folder at the same level as README.md.
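A quick layout check can catch a misplaced logs folder before running any executable. The sketch below is not part of the repository; the helper name `missing_paths` is hypothetical, and it only tests that `logs` and `README.md` sit in the same directory.

```python
from pathlib import Path


def missing_paths(repo_root, required=("README.md", "logs")):
    """Return the required entries that are absent from repo_root."""
    root = Path(repo_root)
    return [name for name in required if not (root / name).exists()]


if __name__ == "__main__":
    # Run from the repository root after extracting the archive.
    missing = missing_paths(".")
    if missing:
        print("Missing next to README.md:", ", ".join(missing))
    else:
        print("Layout looks fine: logs sits beside README.md.")
```

An empty list means both entries were found; it says nothing about whether the downloaded models inside logs are complete.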

Fitting the 3D caricature model to 2D landmarks (68 landmark annotation)

Fit the model to 68 2D landmarks (the 68 landmarks used in DLIB face landmark detection).

By default, the code runs the fitting on the 50 test examples in the data provided by the authors of Wu et al., Alive Caricature from 2D to 3D, CVPR 2018 (included as a submodule in dataset/Caricature-Data).
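To make the idea of fitting to 2D landmarks concrete, here is a minimal sketch of a landmark reprojection error. It assumes a weak-perspective camera (drop z, then scale and translate) and a mean squared distance; both are illustrative assumptions, and the camera model and loss used in run_fitting_2d_68.py may differ. All function names and the toy data are hypothetical.

```python
def project_weak_perspective(points_3d, scale, tx, ty):
    """Drop z, then apply a uniform scale and a 2D translation (assumed camera)."""
    return [(scale * x + tx, scale * y + ty) for x, y, _z in points_3d]


def mean_landmark_error(projected, target):
    """Mean squared 2D distance between paired landmarks."""
    if len(projected) != len(target):
        raise ValueError("landmark counts differ")
    total = sum((px - qx) ** 2 + (py - qy) ** 2
                for (px, py), (qx, qy) in zip(projected, target))
    return total / len(target)


# Toy data: three 3D landmark positions and their 2D annotations.
landmarks_3d = [(0.0, 0.0, 1.0), (1.0, 0.0, 0.5), (0.0, 1.0, 0.2)]
landmarks_2d = [(10.0, 20.0), (12.0, 20.0), (10.0, 22.0)]

projected = project_weak_perspective(landmarks_3d, scale=2.0, tx=10.0, ty=20.0)
error = mean_landmark_error(projected, landmarks_2d)
print(error)  # 0.0 for this exact-fit toy case
```

A fitting procedure would adjust the shape parameters and the camera until this kind of error is small across all annotated landmarks.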

python run_fitting_2d_68.py --config ./configs/eval/deepdeformable_68.yml

You may test on different data by setting a different dir_caricature_data in configs/eval/deepdeformable.yml.

Fitting the 3D caricature model to 2D landmarks (81 landmark annotation)

This code performs the same fitting as the one above, but uses a different landmark annotation. The annotation adds 13 landmarks for the forehead, ears, and cheekbones. We recommend using this annotation for caricatures in frontal view to ensure a good fit around the forehead and ears.

A visual demonstration of the 81 landmarks is in imgs/landmarks_81.png. The annotation itself is contained in staticdata/rawlandmarks.py:CaricShop3D.LANDMARKS as vertex indices. The first 68 landmarks are the same as in the 68-landmark annotation.
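Because the annotation is stored as vertex indices, landmark positions can be read off a mesh by indexing its vertex array. The sketch below illustrates this with placeholder data; the mesh and the index values are made up and do not come from CaricShop3D.LANDMARKS.

```python
# Hypothetical stand-ins: a tiny "mesh" and made-up landmark vertex indices.
vertices = [(float(i), float(i) * 0.5, 0.0) for i in range(200)]
landmarks_81 = list(range(0, 162, 2))  # 81 placeholder vertex indices
landmarks_68 = landmarks_81[:68]       # the first 68 entries are shared


def gather(vertices, indices):
    """Look up the 3D position of each landmark by its vertex index."""
    return [vertices[i] for i in indices]


points_81 = gather(vertices, landmarks_81)
points_68 = gather(vertices, landmarks_68)
extra_13 = points_81[68:]  # forehead, ear, and cheekbone landmarks

print(len(points_81), len(points_68), len(extra_13))  # 81 68 13
```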

python run_fitting_2d_81.py --config ./configs/eval/deepdeformable_81.yml

Semantic-label-based 3D caricature editing

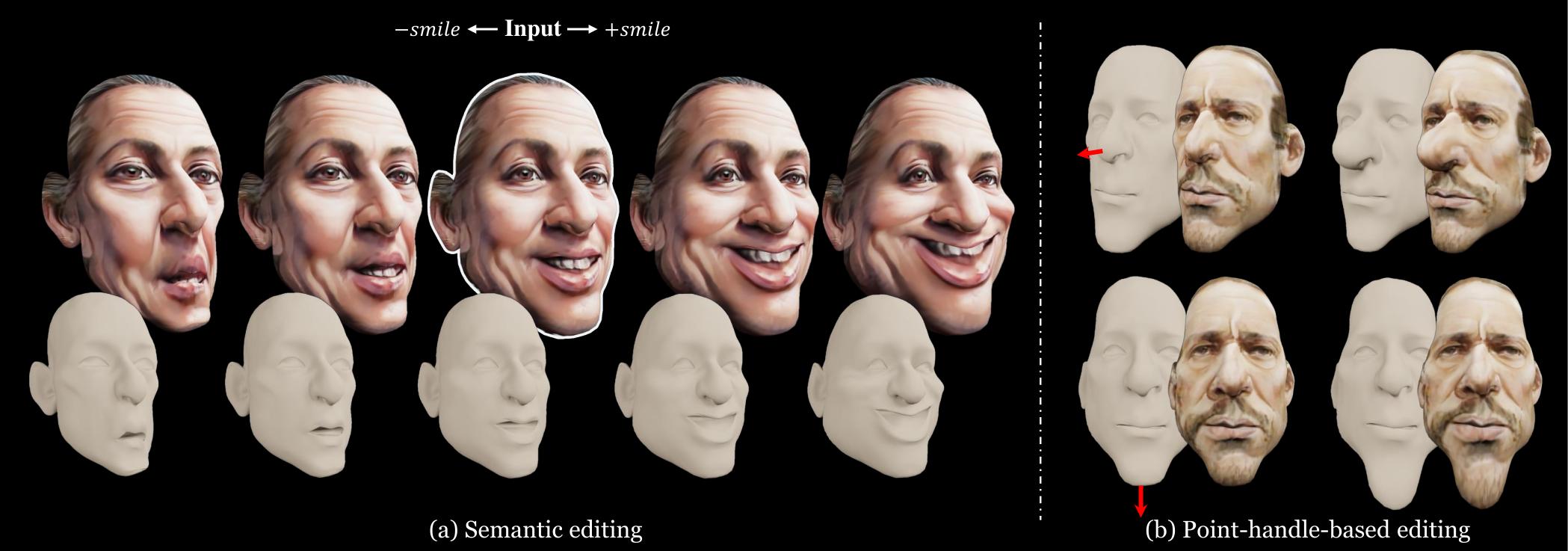

Given a 3D caricature (in our demo, 3D caricatures from trained latent codes), edit the shape using semantic labels.

python latent_manipulation_interfacegan.py --config ./configs/eval/edit_Smile.yml

The default behaviour is to adjust the "Smile" attribute of the fittings. To change the semantic label, change the attr_index option in the config yml file. The labels corresponding to each index are listed in attr_list.txt.
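InterFaceGAN-style editing moves a latent code along a direction associated with an attribute, with a signed strength controlling how far the attribute changes. The following is a minimal sketch of that step only. The function names, the example direction, and the strength value are hypothetical, and the script may obtain and apply its directions differently.

```python
import math


def normalize(vector):
    """Scale a vector to unit length."""
    norm = math.sqrt(sum(x * x for x in vector))
    if norm == 0.0:
        raise ValueError("direction must be non-zero")
    return [x / norm for x in vector]


def shift_latent(latent, direction, strength):
    """Move `latent` by `strength` along the unit-length `direction`."""
    unit = normalize(direction)
    return [z + strength * d for z, d in zip(latent, unit)]


# Placeholder values: a 3-dimensional latent code and an attribute direction.
latent = [0.0, 1.0, 2.0]
smile_direction = [3.0, 0.0, 4.0]

more_smile = shift_latent(latent, smile_direction, strength=5.0)
less_smile = shift_latent(latent, smile_direction, strength=-5.0)
print(more_smile, less_smile)
```

Positive and negative strengths move the code in opposite directions, so one attribute direction covers both strengthening and weakening the label.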

Point-handle-based 3D caricature editing

PREREQUISITE: Run this code after running run_fitting_2d_68.py.

Given a 3D caricature (in our demo, a 3D caricature generated with 2D landmark fitting), edit the shape using landmark point handles.

To edit the faces from 68-landmark fittings, run

python latent_manipulation_pointhandle.py --config ./configs/eval/point.yml

The current implementation runs a pre-defined set of edits. To change the editing behaviour, refer to latent_manipulation_pointhandle.py:L123:L149 and modify those lines to fit your application.
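One generic way to realize handle-based editing is to optimize the latent code so that selected landmarks reach target positions while the code stays close to its starting value. The sketch below shows that idea on a toy 2D problem. The linear decoder stands in for the network, and every name, the regularization weight, and the optimizer settings are assumptions rather than the script's actual implementation.

```python
def decode(latent, mean_shape, basis):
    """Toy linear decoder: mean shape plus a latent-weighted sum of offsets."""
    shape = []
    for i, (mx, my) in enumerate(mean_shape):
        x = mx + sum(z * basis[k][i][0] for k, z in enumerate(latent))
        y = my + sum(z * basis[k][i][1] for k, z in enumerate(latent))
        shape.append((x, y))
    return shape


def edit_with_handles(latent, mean_shape, basis, handles,
                      reg=0.01, lr=0.1, steps=500):
    """Gradient descent on squared handle error plus a stay-close penalty."""
    start = list(latent)
    z = list(latent)
    for _ in range(steps):
        shape = decode(z, mean_shape, basis)
        # Gradient of reg * ||z - start||^2.
        grad = [2.0 * reg * (zk - sk) for zk, sk in zip(z, start)]
        # Gradient of the squared distance between each handle and its target.
        for index, (tx, ty) in handles.items():
            rx, ry = shape[index][0] - tx, shape[index][1] - ty
            for k in range(len(z)):
                grad[k] += 2.0 * (rx * basis[k][index][0] + ry * basis[k][index][1])
        z = [zk - lr * gk for zk, gk in zip(z, grad)]
    return z


# Toy model: three points, two latent dimensions.
mean_shape = [(0.0, 0.0), (1.0, 0.0), (0.0, 1.0)]
basis = [
    [(0.0, 0.0), (1.0, 0.0), (0.0, 0.0)],  # latent 0 moves point 1 along x
    [(0.0, 0.0), (0.0, 0.0), (0.0, 1.0)],  # latent 1 moves point 2 along y
]
handles = {1: (2.0, 0.0)}  # drag point 1 to x = 2

edited = edit_with_handles([0.0, 0.0], mean_shape, basis, handles)
print(decode(edited, mean_shape, basis)[1])  # close to (2.0, 0.0)
```

The penalty term keeps the edited code near the original fitting, so the dragged point stops slightly short of its target and points without handles stay where they were in this toy setup.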

Automatic caricaturization

Automatically exaggerate a regular 3D face using a model trained on both 3DCaricShop and FaceWarehouse.

python latent_manipulation_caricature.py --config ./configs/eval/deepdeformable_fw_caricature.yml

Contact

📫 You can contact us via email: ycjung@postech.ac.kr or wonjong@postech.ac.kr

License

This software is being made available under the terms in the LICENSE file.

Any exemptions to these terms require a license from the Pohang University of Science and Technology.

Citation

If you find our material useful for your work, please consider citing our paper:

@inproceedings{jung2022deepdeformable,
  author = {Jung, Yucheol and Jang, Wonjong and Kim, Soongjin and Yang, Jiaolong and Tong, Xin and Lee, Seungyong},
  title = {Deep Deformable 3D Caricatures with Learned Shape Control},
  year = {2022},
  isbn = {9781450393379},
  publisher = {Association for Computing Machinery},
  address = {New York, NY, USA},
  url = {https://doi.org/10.1145/3528233.3530748},
  doi = {10.1145/3528233.3530748},
  booktitle = {ACM SIGGRAPH 2022 Conference Proceedings},
  articleno = {59},
  numpages = {9},
  keywords = {3D face deformation, Deformable model, Semantic 3D face control, Auto-decoder, Parametric model, 3D face model},
  location = {Vancouver, BC, Canada},
  series = {SIGGRAPH '22}
}

Credits

This implementation builds upon DIF-Net and InterFaceGAN. We thank the authors for publicly sharing their code.